International Journal of Science, Engineering and Technology Research (IJSETR)

Volume 1, Issue 1, July 2012

Selection of Appropriate Candidates for

Scholarship Application Form Using KNN

Algorithm

Khin Thuzar Tun1, Aung Myint Aye2

Department of Information Technology, Mandalay Technological University

khinthuzar71183@gmail.com

Abstract—The proposed system is purposed to make decision

for Universities’ scholarship programs. This system defines

required facts for specified application forms and rules for

these facts. KNN (K-Nearest Neighbor) provides nearest result

for scholarship program based on some suitable similarity

function or distance metric. Euclidean distance is used to

calculate the distance between training and test data. In this

system, the required data of administrator and participants are

stored in SQL database. C#.Net programming language is used

to implement this system.

Keywords— Decision-making, universities’ scholarship, KNN,

Euclidean distance, SQL

I. INTRODUCTION

Since technological developments has been increased, web

based applications have been popular in various fields such as

business, education, medical and so on. Today, different

systems are very popular in web applications. Similarly,

candidates who need to attend in scholar programs at foreign

universities can study and apply using online system. So,

decision-making system is used in various web application

as well as in other fields.

Decision-making can be regarded as the cognitive process

resulting in the selection of a belief and/or a course of action

among

several

alternative

possibilities.

Every

decision-making process produces a final choice [1] that may

or may not prompt action. Decision-making can also be

known as a problem-solving activity terminated by a solution

deemed to be satisfactory.

Three major types of pattern recognition terminology:

unsupervised, semi-supervised and supervised learning.

Supervised learning is based on training a data sample

from data source with correct classification already assigned.

Self-Organizing neural networks learn using unsupervised

learning algorithm to identify hidden patterns in unlabelled

input data. This unsupervised refers to the ability to learn and

organize information without providing an error signal to

evaluate the potential solution [2].

The supervised category is also called classification or

regression, each object of the data comes with a

pre-assigned class label. The task is to train a classifier to

perform the labeling, using the teacher. A procedure which

tries to leverage the teacher‘s answer to generalize the

problem and obtain his knowledge is learning algorithm. The

data and the teacher‘s labeling are supplied to the machine to

run the procedure of learning over the data. Although the

classification knowledge learned by the machine in this

process might be obscure, the recognition accuracy of the

classifier will be the judge of its quality of learning or its

performance [3].

There are many classification and clustering methods as

well as the combinational approaches [4-5]. While the

supervised learning tries to learn from the true labels or

answers of the teacher, in semi-supervised the learner

conversely uses teacher just to approve or not to approve the

data in total. It means that in semi-supervised learning there is

not really available teacher or supervisor. The procedure first

starts with fully random manner, and when it reaches the state

of final, it looks to the condition whether he win or lose. K

Nearest Neighbor (KNN) classification is one of the most

fundamental and simple classification methods.

A technique that classifies each record in a dataset based

on a combination of the classes of the k record(s) most similar

to it in a historical dataset (where k= 1). Sometimes it is

called the k-nearest neighbor technique. K-Nearest Neighbor

is a supervised learning algorithm where the result of new

instance query is classified based on majority of K-Nearest

Neighbor category. . Many researchers have found that the

K-NN algorithm accomplishes very good performance in

their experiments on different data sets.

In this system, KNN algorithm with Euclidean distance is

used to make suitable decision for online scholarship

programs in order to choose the suitable candidates.

III. CLASSIFICATION AND CLUSTERING

Data mining has recently emerged as a growing field of

multidisciplinary research. It combines disciplines such as

databases, machine learning, artificial intelligence, statistics,

automated scientific discovery, data visualization, decision

science, and high performance computing.

Data mining technique is used often with large database,

data warehouse etc. It is mainly applied in decision support

systems for modeling and prediction. There are several kinds

of data mining: classification, clustering, association,

sequencing etc.

Two common data mining techniques are clustering and

classification.

For classification, the classifier model is needed. Data are

divided into training set and test set. The training data is used

to create the model. Then the test set applied for checking the

model correctness. Until satisfied, the model is trained and

adjusted by training data. Common techniques used in

1

All Rights Reserved © 2012 IJSETR

International Journal of Science, Engineering and Technology Research (IJSETR)

Volume 1, Issue 1, July 2012

classification are decision tree, neural network, naïve bayes,

Euclidean distance, etc.

For clustering, a loose definition of clustering could be

“the process of organizing objects into groups whose

members are similar in some way”. A cluster is therefore a

collection of objects which are “similar to each other and are

“dissimilar” to the objects belonging to other clusters. Cluster

analysis is also used to form descriptive statistics to ascertain

whether or not the data consists of a set distinct subgroups,

each group representing objects with substantially different

properties.

Imaging a database of customer records, where each record

represents a customer's attributes. These can include

identifiers such as name and address, demographic

information such as gender and age, and financial attributes

such as income and revenue spent.

Clustering is an automated process to group related

records together. Related records are grouped together on the

basis of having similar values for attributes. In fact, the

objective of the analysis is often to discover segments or

clusters, and then examine the attributes and values that

define the clusters or segments. As such, interesting and

surprising ways of grouping customers together can become

apparent.

Classification is a different technique than clustering.

Classification is an important part of machine learning that

has attracted much of the research endeavors. Various

classification approaches, such as, k-means, neural networks,

decision trees, and nearest neighborhood have been

developed and applied in many areas.

A classification problem occurs when an object needs to be

assigned into a predefined group or class based on a number

of observed attributes related to that object. There are many

industrial problems identified as classification problems. For

examples, Stock market prediction, Weather forecasting,

Bankruptcy prediction, Medical diagnosis, Speech

recognition, Character recognitions to name a few [6-7].

Classification technique is capable of processing a wider

variety of data and is growing in popularity. The various

classification techniques are Bayesian network, tree

classifiers, rule based classifiers, lazy classifiers, Fuzzy set

approaches, rough set approach etc.

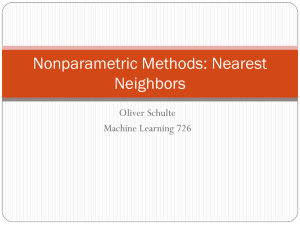

IV. K -NEAREST NEIGHBORS ( KNN )

In 1968, Cover and Hart proposed an algorithm the

K-Nearest Neighbor, which was finalized after some time.

K-Nearest Neighbor can be calculated by calculating

Euclidean distance, although other measures are also

available but through Euclidean distance we have splendid

intermingle of ease, efficiency and productivity [8].

Nearest Neighbor Classification is quite simple, examples

are classified based on the class of their nearest neighbors.

For example ,if it walks like a duck, quacks like a duck, and

looks like a duck, then it's probably a duck. The k - nearest

neighbor classifier is a conventional nonparametric classifier

that provides good performance for optimal values of k. In the

k – nearest neighbor rule, a test sample is assigned the class

most frequently represented among the k nearest training

samples. If two or more such classes exist, then the test

sample is assigned the class with minimum average distance

to it. It can be shown that the k – nearest neighbor rule

becomes the Bayes optimal decision rule as k goes to infinity

[9]. The K-NN classifier (also known as instance based

classifier) perform on the premises in such a way that

classification of unknown instances can be done by relating

the unknown to the known based on some distance/similarity

function. The main objective is that two instances far apart in

the instance space those are defined by the appropriate

distance function are less similar than two nearly situated

instances to belong to the same class [10].

The k-nearest neighbour (k-NN) technique, due to its

interpretable nature, is a simple and very intuitively

appealing method to address classification problems.

However, choosing an appropriate distance function for

k-NN can be challenging and an inferior choice can make the

classifier highly vulnerable to noise in the data. The best

choice of k depends upon the data; generally, larger values of

k reduce the effect of noise on the classification, but make

boundaries between classes less distinct. A good k can be

selected by various heuristic techniques.

In binary (two class) classification problems, it is helpful to

choose k to be an odd number as this avoids tied votes. The

K-Nearest Neighbor algorithm is amongst the simplest of all

machine learning algorithms: an object is classified by a

majority vote of its neighbors, with the object being assigned

to the class most common amongst its k nearest neighbors (k

is a positive integer, typically small). Usually Euclidean

distance is used as the distance metric; however this is only

applicable to continuous variables. In cases such as text

classification, another metric such as the overlap metric or

Hamming distance, for example, can be used.

K nearest neighbors is a simple algorithm that stores all

available cases and classifies new cases based on a similarity

measure (e.g., distance functions). KNN has been used in

statistical estimation and pattern recognition already in the

beginning of 1970’s as a non-parametric technique.

K nearest neighbor algorithm is very simple. It works based

on minimum distance from the query instance to the training

samples to determine the K-nearest neighbors. The data for

KNN algorithm consist of several attribute names that will be

used to classify. The data of KNN can be any measurement

scale from nominal, to quantitative scale.

The KNN algorithm is shown in the following form:

Input: D, the set of k training objects, and test object z= (x',

y').

Process: Compute d(x', x), the distance between z and every

object, (x, y) ∈ D. Select Dz ⊆ D, the set of k closet training

objects to z.

Output: y'= argmaxv ∑(xi, yi) ⊆ Dz I(v= yi)

v is a class label

yi is the class label for the ith nearest neighbors

I (.) is an indicator function that returns the value 1 if

its argument is true and 0 otherwise.

In this system, KNN algorithm is used the suitable result by

mixing the Euclidean distance among the various kinds of

distance metric. The Euclidean distance is as shown in below

:

d ij

x

i1

x j1 xi 2 x j 2 xip x jp

2

2

2

Where

d ij = the distance between the training objects and test

object

xi = input data for test object

xj = data for training objects stored in the database

In KNN algorithm, there are several advantages and

disadvantages:

2

All Rights Reserved © 2012 IJSETR

International Journal of Science, Engineering and Technology Research (IJSETR)

Volume 1, Issue 1, July 2012

Advantages

Robust to noisy training data

KNN is particularly well suited for

multi-modal classes as well as applications

in which an object can have many class

labels.

KNN is simple but effective method for

classification.

KNN is an easy to understand and easy to

implement classification technique.

Effective if the training data is large

Disadvantages

KNN is low efficiency for dynamic web

mining with a large repository.

Distance based learning system is not clear

which type of distance to use and which

attribute to use to produce the best results.

Need to determine value of parameter K

(number of nearest neighbors)

Computation cost is quite high because it

needs to compute the distance of each

query instance to all training samples.

V. PROPOSED SYSTEM DESIGN

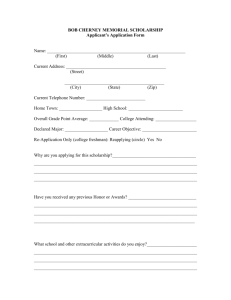

The proposed system design is illustrated in Figure 1.

In this system, the user firstly registered to enter and apply

for scholarship program in the system. If the user is new, he

must fill in the register completely for a new account and

then must login. If he doesn’t fill in fully, the system will

prompt the error message. After the user login, he can select

the educational location and university he need to apply. He

can enter consequently home page of the desired university

and download the scholarship application form by clicking

the download link. To submit scholar, firstly the user fill the

information required for scholarship program and click the

submit button. After submitting, the system calculates

distance using the KNN classifier and Euclidean distance

with training data in database. Finally the system decides the

appropriate result for scholarship according to distance.

Three scholarship universities is included to implement this

system.

Decision-making system for online scholarship application

form is implemented by C#.Net programming language on

Microsoft .NET framework 3.0 and above and Microsoft

Internet Information Services (IIS) is intended to use in this

system. SQL database is used to store the applicants’ data and

training data for scholarship universities. This is also used for

various kinds of other online applications.

VI. SYSTEM IMPLEMENTATION RESULTS

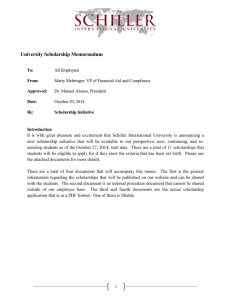

This section describes the implementation results. When

the system starts, “Home Page” appears as shown in Figure 2.

In this page, there are three menus: Register, User login,

Admin login.

Figure 2. Home page

When the user is a new one, the user creates a user account

at the registration page as shown in Figure 3. If the user

doesn’t input completely, the error message will be

prompted.

Figure. 1 Proposed system flow chart

Figure 3. User registration page

3

All Rights Reserved © 2012 IJSETR

International Journal of Science, Engineering and Technology Research (IJSETR)

Volume 1, Issue 1, July 2012

If user registration is complete successfully, the user login

with the correct login name and password to enter the system

as shown in Figure 4.

Figure 7. Scholarship submittion page

Figure 4. User Login page

Figure 5 shows the scholarship’s Home page including the

login user name who logged into this system.

For administrator page, the administrator must enter the

login by entering the valid administrator name and password

in the Figure 8. The administrator is one who authorizes to

manage the whole system.

Figure 8. Administrator Login page

Figure 5. Scholarship home page

Figure 6 and 7 illustrate the home page and the scholarship

submission page for Tokyo University. In the submission

page, user can view the application form for this university

from download link. The user must fill in the required data in

the submission form fully and will the user get the result

whether he is appropriate to attend at that university. If he is

incomplete, the error message will appear in the submission

form.

The administrator can add the rules for each university in

the system corresponding to the university’s requirements.

The rules adding page corresponding to the university is as

shown in Figure 9. In Figure 10, the rules table for Tokyo

university is illustrated as example .

Figure 6. Tokyo University’s home page

Figure 9. Rules adding page

4

All Rights Reserved © 2012 IJSETR

International Journal of Science, Engineering and Technology Research (IJSETR)

Volume 1, Issue 1, July 2012

[7] U. Khan, T. K. Bandopadhyaya, and S. Sharma,

“Classification of Stocks Using Self Organizing Map”,

International Journal of Soft Computing Applications, Issue

4, 2009, pp.19-24.

[8] Dasarathy, B. V., “Nearest Neighbor (NN) Norms,NN

Pattern Classification Techniques”. IEEE Computer Society

Press, 1990.

[9] ^ James Reason (1990). Human Error. Ashgate.

ISBN 1-84014-104-2.

[10] Man Lan, Chew Lim Tan, Jian Su, and Yue Lu,

“Supervised and Traditional Term Weighting Methods for

Automatic Text Categorization”, IEEE Transactions on

Pattern Analysis and Machine Intelligence, Vol. 31, No. 4,

April 2009.

Figure 10. Rules table

VII. CONCLUSION

This paper proposed an online decision making system for

scholarship. KNN classification algorithm is used to select

suitable candidate for scholarship program. This algorithm

classifies the instances based on the similarity function to the

instance in the training data (rules data). The system decides

the selection of appropriate candidates by using C#.NET

programming language. Internet Information Services (IIS)

as web server and SQL database to store the data are used.

This system is suitable for many online decision making

systems in various fields.

VIII. SYSTEM LIMITATIONS

In the proposed system, as KNN is a “lazy” learning

algorithm and results a high computational cost at the

classification time, the administrator of the proposed system

want to prepare and store the conforming rules of the each

corresponding universities’ standards clearly and correctly in

the database. The drawback of K-NN is its inefficiency for

large scale and high dimensional data sets. Thus, better

algorithms are more appropriate than KNN if the system’s

data sets is very large scale.

REFERENCES

[1] ^ James Reason (1990). Human Error. Ashgate. ISBN 184014-104-2.

[2]Annamma Abraham , Dept. of Mathematics

B.M.S.Institute of Technology, Bangalore, India ,

“ Comparison of Supervised and Unsupervised Learning

Algorithms for Pattern Classification”, (IJARAI) .

[3] Cover, T.M., Hart, P.E., “Nearest neighbor pattern

classification”,

IEEE

Trans.

Inform.

Theory,

IT-13(1):21–27, 1967

[4] K. ITQON, Shunichi and I. Satoru, “Improving

Performance of k-Nearest Neighbor Classifier by Test

Features”, Springer Transactions of the Institute of

Electronics, Information and Communication Engineers

2001.

[5] Michael Steinbanch and Pang-Nang Tan “kNN:

k-Nearest Neighbors”

[6] Moghadassi, F. Parvizian, and S. Hosseini, “A New

Approach Based on Artificial Neural Networks for Prediction

of High Pressure Vapor-liquid Equilibrium”, Australian

Journal of Basic and Applied Sciences, Vol. 3, No. 3, pp.

1851-1862, 2009.

5

All Rights Reserved © 2012 IJSETR