Final13-Sol

advertisement

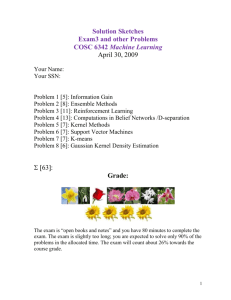

Final Exam

COSC 6342 Machine Learning

Solution Sketches

May 8, 2013

Your Name:

Your Student id:

Problem 1 [4]: Information Gain

Problem 2 [14]: Ensemble Methods + Other

Problem 3 [14]: Reinforcement Learning

Problem 4 [11]: Computations in Belief Networks /D-separation

Problem 5 [5]: Kernel Methods

Problem 6 [10]: Support Vector Machines

Problem 7 [10]: Write an Essay

Problem 8 [7]: K-means

Problem 9 [8]: Non-parametric Density Estimation

:

Grade:

The exam is “open books and notes” and you have 120 minutes to complete

the exam. The use of computers is not allowed! The exam is slightly too

long; you are expected to solve only 90% of the problems in the allocated

time. The exam will count about 33 % towards the course grade.

1

1) Information Gain [4]

Assume we have a classification problem involving 3 classes: professors, students, and

staff members. There are 800 students, 100 staff members and 100 professors. All

professors have blond hair, 50 staff members have blond hair, and 400 students have

blond hair. Compute the information gain of the test “hair_color=’blond’” that returns

true or false. Just giving the formula that computes the information gain is fine; you do

not need to compute the exact value of the formula! Use H as the entropy function in

your formula (e.g. H(1/3,1/6,1/2) is the entropy that 1/3 of the examples belong to class1

1/6 of the examples belong to class 2, and half of the examples belong to class 3). [4]

H(0.8,0.1,0.1)-0.55*H(400/550,50/550,100/550)-0.45*H(400/450,50/450,0)

One error: 0.5-1.5 points

Two errors: 0 points

2) Ensemble Methods [14]

a) Ensembles have been quite successful in generating supervised learning systems

which exhibit very high accuracies. What is the explanation for that? Why is it better

to use a team of diverse base classifiers rather than a single classification algorithm?

[4]

Building ensembles of different classifiers that make different kind of errors, leads to

higher accuracy (as long the base classifiers’ accuracy is above 50%), as classifiers which

make a wrong decision are usually outvoted by classifiers which make the correct

decision.

Other answers might get credit!

b) What is the key idea of AdaBoost and boosting in general to obtain an ensemble of

diverse base classifiers? [3]

The key idea is to use example weighting, assigning high weights to examples that were

misclassified by previously generated classifiers, encouraging the generation of

classifiers which classify those examples correctly obtaining a classifier that makes

different kind of errors, obtaining a set of diverse classifier in the end.

c) In ensemble learning it is important to obtain a set of base classifiers that are diverse in

the sense that the base classifiers are making different kind of errors. Propose a method

that measures the diversity of an ensemble; e.g. using your proposed method we can

quantify that the diversity of Ensemble1 is 20% higher than the diversity of Ensemble2.

Be specific! [7]

Let

c1,c2 be classifiers, which map feature vectors of examples into class labels

T be a training sets

|T| is the cardinality of the training set

We suggest to use the following distance d to assess the similarity between c1 and c2

d(c1,c2)= |{tT|c1(t)=c2(t)}|/|T|

2

3) Reinforcement Learning [14]

a) What are the main differences between supervised learning and reinforcement

learning? [4]

SL: static world[0.5], availability to learn from a teacher/correct answer[1]

RL: dynamic changing world[0.5]; needs to learn from indirect, sometimes delayed

feedback/rewards[1]; suitable for exploration of unknown worlds[1]; temporal

analysis/worried about the future/interested in an agent’s long term wellbeing[0.5], needs

to carry out actions to find out if they are good—which actions/states are good is

(usually) not know in advance1[0.5]

At most 4 points!

b) Answer the following questions for the ABC world (given at a separate sheet). Give

the Bellman equation for states 1 and 4 of the ABC world! [3]

U(1)= 5 + *U(4) [1]

U(4)= 3 + *max (U(2)*0.3+ U(3)*0.1+U(5)*0.6, U(1)*0.4+U(5)*0.6) [2]

No partial credit!

c) Assume you use temporal difference learning in conjunction with a random policy

which choses actions randomly assuming a uniform distribution. Do you believe that the

estimations obtained are a good measurement of the “goodness” of states, that tell an

intelligent agent (assume the agent is smart!!) what states he/she should/should not visit?

Give reasons for your answer! [3]

Not really; as we assume an intelligent agent will take actions that lead to good states and

avoids bad states, the agent that uses the random policy might not recognize that a state is

a good state if both good and bad states are successors of this state; for example,

S2: R=+100

S1:R=-1

S3: R=-100

Due to the agent’s policy the agent will fail to realize the S1 is a good state, as the agent’s

average reward for visiting the successor states of S1 is 0; an intelligent agent would

almost always go from S1 to S2, making S1 a high utility state with respect to TDlearning.

d) What role does the learning rate play in temporal difference learning; how does

running temporal difference learning with low values of differ from running it with

high values of ? [2]

It determines how quickly our current beliefs/estimations are updated based on new

evidence.

e) Assume you run temporal difference learning with high values of —what are the

implications of doing that? [2]

If is high the agent will more focus on its long term wellbeing, and will shy away from

taking actions—although they lead to immediate rewards—that will lead to the medium

and long term suffering of the agent.

3

4.) Computations in Belief Networks /D-separation [11]

Assume that the following Belief Network is given that consists of nodes A, B, C, D, and

E that can take values of true and false.

B

A

E

/

C

D

/

a) Using the given probabilities of the probability tables of the above belief network

(D|C,E; C|A,B; A; B; E) give a formula to compute P(D|A). Justify all nontrivial steps

you used to obtain the formula! [7]

P(D|A)=P(D,C|A)+P(D,~C|A)= P(C|A)*P(D|A,C)+ P(C|A)*P(D|A,~C)

As D and A are d-separable given evidence C—the path is blocked exhibiting pattern 2,

this formula can be simplified to:

= P(C|A)*P(D|C)+ P(~C|A)*P(D|A,~C)

As all these probabilities are in the probability tables, this formula represents one possible

answer to this question.

Incorrect solutions obtain at most 2.5 points.

b) Are C and E independent; is C| and E| d-separable? Give a reason for your answer!

denotes “no evidence given”[2]

Yes, the only path C-D-E is blocked (pattern 3, as D is not part of the evidence)

c) Is E|CD d-separable from A|CD? Give a reason for your answer! [2]

Yes, the only path A-C-D-E is blocked (pattern 2, as C belongs to the evidence).

4

5) Kernel Methods [5]

There has been a lot work in designing new kernels in machine learning. Why is this the

case? How can using kernels help in enhancing particular techniques such as PCA or Kmeans?

Mapping examples into a higher dimensional space frequently makes it easier to find us

more sophistated decision/cluster boundaries which are not available if the ML algorithm

is used in the original space [3] Leads to the creation of new features/new coordinate

systems that lead to a more successful results of the ML algorithms[2]. Other answers

and more detailed discussions of examples how a particular technique might benefit from

kernels might also deserve credit!

6) Support Vector Machine [10]

a) Why do most support vector machine approaches usually map examples to a higher

dimensional space? [2]

It is easier to successful separate the examples with a hyperplan in the mapped space.

b) The support vector regression approach minimizes the following objective function,

given below. Give a verbal description what this objective function minimizes! What

purpose does serve? What purpose does C serve? [5]

1

2

min w C t t subject to:

2

t

r t w T x w0 t

w

T

x w0 r t t

t , t 0

for t=1,..,n

It maximizes the width of the -envelope of the regression function while keeping the

error resulting from making predictions that fall outside -envelope the low[2.5]. C

determines the importance of each of the two objectives in the multi-objective

optimization procedure [1]. defines an upper bound on what kind of errors we are

willing to tolerate, as predictions that fall within the envelop are treated as ‘0 error’

predictions by the objective function! [1.5].

c) Assume you apply support vector regression to a particular problem and for the

obtained hyper plane t and t are all 0 for the n training examples (t=1,..,n); what does

this mean? [3]

All example fall within the -envelope of the regression function or all examples

predictions have an error that is lower than .

5

7) Write an Essay involving Machine Learning [10]

Choose one of the essay topics below (please use complete sentences) and write an essay

of 10-16 sentences length!

Topic1: Assume you are the owner of a company which sells music (e.g. songs, concert

recordings, CDs,…) online. How can machine learning help to make your business more

successful?

Topic2: Our society currently faces a lot of environmental challenges, such as air and

water pollution, oil and other spills, shortage of environmental resources such as oil and

water to name a few. How can machine learning help to better deal with environmental

challenges of our society?

6

8) k-Means [7]

a) K-means only finds clusters that are a local (but not a global) maximum of the

objective function J it minimizes. What does this mean? What are the implications of this

fact for using k-means to cluster real world datasets? [3]

K-means will not necessary find the best clustering with the tightest clusters and the

quality of its results strongly depend on initialization [1. 5].

Run K-means multiple times with different initializations/random seeds and choose the

clustering which has the lowest value for J. [1.5]

b) K-means has difficulties clustering datasets which contain a lot of outliers. Explain,

why this is the case! What could be done to alleviate the problem? [4+up to 3 extra

points]

As K-means requires that all objects need to be assigned; therefore, outliers have to be

assigned to a particular cluster, leading to non-descriptive centroids that no longer

capture the characteristics of most objects, belonging to a particular cluster[2].

Not answer given to the second question but some techniques that have some merit

include:

Remove outliers, prior to applying k-means

Use the cluster medoid, instead of the cluster centroid as the cluster summary

…

9) Non-parametric Density Estimation [8]

a) Assume we have a 2D dataset D containing 3objects: D={(0,0), (3,2) (3,4)}; moreover,

we use the Gaussian kernel density function to measure the density of D. Assume we

want to compute the density at point (3,3) and you can also assume h=1 (=1). It is

sufficient just to give the formula and not necessary to compute the actual density in

(3,3)! [5]

d ( x)

1

3 * 2

e

1 / 2

e 1 / 2 e 18 / 2

Zechun, please verify this solution, as it was produced in a hurry and might not be

correct!

If one error, at most 3 points; if two errors at most 0.5 points; exception if the fomula is

correct instead the constant in front you can give 4 points.

b) What role does the parameter h play in the above approach; how does it impact the

obtained density functions; how does the appearance of the obtained density function

change if we would use h=0.5 or h=2.0 instead? [3]

The obtained density functions will be more rugged(h=0.50/more smooth(h=2) and

contain more(h=0.5)/less local maxima/minima(h=2)!

7