Keyword Extraction Algorithm


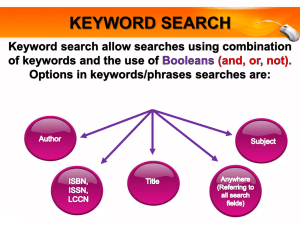

Contents

1. Introduction
   1.1 Keyword
   1.2 Keyword extraction system
2. Advantages and disadvantages
   2.1. Rapid Automatic Keyword Extraction
   2.2. Keyword extraction for text characterization
   2.3. Automatic keyword extraction from documents using conditional random fields
   2.4. Keyword extraction from a single document using word co-occurrence statistical information
3. Conclusion

1. Introduction

1.1 Keyword

Keywords, which we define as a sequence of one or more words, provide a compact representation of a document's content. Ideally, keywords represent in condensed form the essential content of a document. Keywords are widely used to define queries within information retrieval (IR) systems because they are easy to define, revise, remember, and share. In comparison to mathematical signatures, keywords are independent of any corpus and can be applied across multiple corpora and IR systems.
Keywords have also been applied to improve the functionality of IR systems. The following criteria help to identify a keyword:

- Position criteria: words are relevant according to their position in the text (heading, title).
- Cue phrase indicator criteria: specific text items signal that the following or previous words are relevant.
- Frequency criteria: words that are infrequent in the whole collection but relatively frequent in the given text are relevant for this text.
- Connectedness criteria: for example repetition, co-reference, synonymy, and semantic association.

1.2 Keyword extraction system

Keywords are the most practical way to compare and search document content: they improve search performance while preserving the quality of the results. To build the capstone project management system, we need a keyword extraction system that supports content search. This system runs when a project is uploaded or updated, extracts its keywords, and stores them in the database. Searching does not run the extraction again, so the system does not need to be fast, but it must be highly accurate and reliable when extracting from large documents. To find a suitable algorithm, we studied several keyword extraction algorithms that work on large individual documents, then compared the advantages and disadvantages of each to pick the most reliable one.

2. Advantages and disadvantages

2.1. Rapid Automatic Keyword Extraction

Rapid Automatic Keyword Extraction (RAKE) is based on the observation that keywords frequently contain multiple words but rarely contain standard punctuation or stop words, such as the function words "and", "the", and "of", or other words with minimal lexical meaning. Stop words are typically dropped from indexes within IR systems and excluded from various text analyses because they are considered uninformative or meaningless. This reasoning rests on the expectation that such words are used too frequently and too broadly to aid users in their analyses or search tasks.
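
The observation above suggests a simple way to generate candidates: cut the text at punctuation and at stop words, and keep the runs of words in between. The following is a minimal sketch of that idea only, not the full RAKE method; the function name `candidate_keywords`, the tiny `STOP_WORDS` set, and the punctuation pattern are assumptions chosen for illustration.

```python
import re

# Tiny illustrative stop list (an assumption); a real system would load a full stop list.
STOP_WORDS = {"a", "an", "and", "the", "of", "for", "in", "is", "are", "to", "over", "on"}


def candidate_keywords(text, stop_words=STOP_WORDS):
    """Split text into candidate keywords at punctuation and stop words."""
    candidates = []
    # Phrase delimiters: split on common punctuation first.
    for fragment in re.split(r'[.,;:!?()\[\]"]+', text.lower()):
        current = []
        # Word delimiters: anything that is not a letter, digit, apostrophe, or hyphen.
        for word in re.findall(r"[a-z0-9][a-z0-9'-]*", fragment):
            if word in stop_words:
                # A stop word closes the current candidate, if there is one.
                if current:
                    candidates.append(" ".join(current))
                current = []
            else:
                current.append(word)
        if current:
            candidates.append(" ".join(current))
    return candidates


sample = "Compatibility of systems of linear constraints over the set of natural numbers."
print(candidate_keywords(sample))
# → ['compatibility', 'systems', 'linear constraints', 'set', 'natural numbers']
```

Multi-word candidates such as "linear constraints" survive because no stop word or punctuation mark separates their words.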
Words that do carry meaning within a document are described as content bearing and are often referred to as content words. The input parameters for RAKE comprise a list of stop words (or stop list), a set of phrase delimiters, and a set of word delimiters. RAKE uses stop words and phrase delimiters to partition the document text into candidate keywords, which are sequences of content words as they occur in the text. Co-occurrences of words within these candidate keywords are meaningful and make it possible to identify word co-occurrence without applying an arbitrarily sized sliding window. Word associations are thus measured in a manner that automatically adapts to the style and content of the text, enabling adaptive and fine-grained measurement of the word co-occurrences that are used to score candidate keywords.

A. Advantages:
RAKE ranks keywords only by comparing frequency-based scores, using simple, basic formulas comparable to tf/idf, so its complexity is low. This makes it run faster than other methods on large documents, extract more keywords, and leave less room for bugs. For example, when RAKE and TextRank extracted keywords from the same 500 abstracts on the same hardware, RAKE took 160 milliseconds and TextRank took 1002 milliseconds, over 6 times as long.

B. Disadvantages:
A keyword is determined not only by its frequency but also by its meaning, which RAKE does not take into account. In addition, the algorithm gives compound words a higher weight, so its results are not accurate enough.

2.2. Keyword extraction for text characterization

This method extracts keywords based on quadgrams. The vector space model is used to represent textual documents and queries, and N-grams are used to calculate word weights. N-grams are tolerant of textual errors and are also well suited to inflectionally rich languages like German. Computation of N-grams is fast, robust, and completely independent of language or domain.
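
To make the tolerance to textual errors concrete, the sketch below assumes character-level N-grams and compares a correctly spelled word with a misspelled one. The helper names `char_ngrams` and `overlap`, the `_` boundary padding, and the use of the Dice coefficient as the similarity measure are all assumptions made for illustration; the method described here weights N-grams in a vector space model instead.

```python
from collections import Counter


def char_ngrams(text, n=4):
    """Count overlapping character n-grams, word by word."""
    counts = Counter()
    for word in text.lower().split():
        padded = f"_{word}_"  # mark word boundaries (an assumption of this sketch)
        for i in range(len(padded) - n + 1):
            counts[padded[i:i + n]] += 1
    return counts


def overlap(a, b, n=4):
    """Dice coefficient between the n-gram sets of two strings."""
    grams_a, grams_b = set(char_ngrams(a, n)), set(char_ngrams(b, n))
    if not grams_a or not grams_b:
        return 0.0
    return 2 * len(grams_a & grams_b) / (len(grams_a) + len(grams_b))


# A misspelling still shares most of its quadgrams with the correct word,
# while an unrelated word shares none.
print(overlap("extraction", "extracton"))
print(overlap("extraction", "keyword"))
```

A whole-word comparison would treat "extraction" and "extracton" as completely different terms, whereas five of their quadgrams coincide.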
In the following, we consider only quadgrams because, in various experiments on our text collections, they outperformed trigrams.

A. Advantages:
* It uses the vector space model; the weights of terms are usually computed by measures like tf/idf.
* N-grams are well suited to inflectionally rich languages like German. Computation of N-grams is fast, robust, and completely independent of language or domain.
* It uses a basic algorithm like tf/idf, so its complexity is O(n*log(n)). As an example of the time required (on a Pentium II, 400 MHz): for the analysis of 45000 intranet documents, the keyword extraction step needs 115 seconds using tf/idf keywords and 848 seconds using quadgram-based keywords.

B. Disadvantages:
* Not accurate enough. The result is similar to tf/idf, so many of the listed words are not actual keywords.
* N-grams are tolerant of textual errors.

2.3. Automatic keyword extraction from documents using conditional random fields

Conditional random fields (CRFs) are a probabilistic framework for labeling and segmenting structured data, such as sequences, trees, and lattices. The underlying idea is to define a conditional probability distribution over label sequences given a particular observation sequence, rather than a joint distribution over both label and observation sequences. The primary advantage of CRFs over hidden Markov models (HMMs) is their conditional nature, which relaxes the independence assumptions that HMMs require in order to ensure tractable inference. Additionally, CRFs avoid the label bias problem, a weakness exhibited by maximum entropy Markov models (MEMMs) and other conditional Markov models based on directed graphical models. CRFs outperform both MEMMs and HMMs on a number of real-world tasks in many fields, including bioinformatics, computational linguistics, and speech recognition.

A. Advantages:
* A key advantage of CRFs is their great flexibility to include a wide variety of arbitrary, non-independent features of the input.
* Automated feature induction enables not only improved accuracy and a dramatic reduction in parameter count, but also the use of larger cliques and more freedom to liberally hypothesize atomic input variables that may be relevant to a task.
* Many tasks are best performed by models that have the flexibility to use arbitrary, overlapping, multi-granularity, and non-independent features.
* Conditional random fields are undirected graphical models, trained to maximize the conditional probability of the outputs given the inputs.
* CRFs have achieved empirical success in POS tagging (Lafferty et al., 2001), noun phrase segmentation (Sha & Pereira, 2003), and table extraction from government reports (Pinto et al., 2003).

B. Disadvantages:
* Even with a large number of parameters, the feature set is still restricted. For example, in some cases capturing a word tri-gram is important, but there is not sufficient memory or computation to include all word tri-grams. As the number of overlapping atomic features increases, the difficulty and importance of constructing only select feature combinations grows.
* Even after a new conjunction is added to the model, it can still have its weight changed. This is significant because Boosting is often seen inefficiently "relearning" an identical conjunction solely for the purpose of "changing its weight". Furthermore, when many induced features have been added to a CRF model, all their weights can be adjusted efficiently in concert by a quasi-Newton method such as BFGS.
* Boosting has been applied to CRF-like models (Altun et al., 2003), but without learning new conjunctions and with the inefficiency of not changing the weights of features once they are added. Other work (Dietterich, 2003) estimates the parameters of a CRF by building trees (with many conjunctions), but again without adjusting weights once a tree is incorporated.
  Furthermore, it can be expensive to add many trees, and some tasks may be diverse and complex enough to inherently require several thousand features.

2.4. Keyword extraction from a single document using word co-occurrence statistical information

This algorithm applies to a single document without using a corpus. Frequent terms are extracted first, and then a set of co-occurrences between each term and the frequent terms, i.e., occurrences in the same sentences, is generated. The co-occurrence distribution shows the importance of a term in the document as follows: if the probability distribution of co-occurrence between term a and the frequent terms is biased toward a particular subset of frequent terms, then term a is likely to be a keyword. The degree of bias of the distribution is measured by the χ² measure. The algorithm shows performance comparable to tfidf without using a corpus.

A. Advantages:
* The keyword extraction performs well without the need for a corpus.
* Coverage using this method exceeds that of tf and KeyGraph and is comparable to that of tfidf. Both tf and tfidf select terms that appear frequently in the document (although tfidf also considers frequencies in other documents). This method, on the other hand, can extract keywords even if they do not appear frequently. The frequency index in the table shows the average frequency of the top 15 terms: terms extracted by tf appear about 28.6 times on average, while terms extracted by this method appear only 11.5 times. Therefore, this method can detect "hidden" keywords. The χ² value can be used as a priority criterion for keywords because the precision of the top 10 terms by this method is 0.52, that of the top 5 is 0.60, and that of the top 2 is as high as 0.72. Though this method detects keywords consisting of two or more words well, it is still nearly comparable to tfidf if such phrases are discarded.
* The system is implemented in C++ on a Linux OS, Celeron 333MHz CPU machine.
  Computational time increases approximately linearly with respect to the number of terms; the process completes in a few seconds if the given number of terms is less than 20,000.
* The main advantages of this method are its simplicity, since it does not require a corpus, and its high performance, comparable to tfidf. As more electronic documents become available, this method should be useful in many applications, especially for domain-independent keyword extraction.

B. Disadvantages:
The number of keywords extracted by this algorithm is smaller than with the tfidf method.

3. Conclusion

After reviewing the advantages and disadvantages of the methods above, we chose the method "Keyword extraction from a single document using word co-occurrence statistical information" for deployment. Our system needs to extract keywords as accurately as possible and does not need to be very fast, so this algorithm is suitable. In addition, the algorithm is not too complex, which makes it reasonable for us to implement. The algorithm works as follows.

Step 1. Preprocessing: Stem words with the Porter algorithm (Porter 1980) and extract phrases based on the APRIORI algorithm (Furnkranz 1998). Discard stop words included in the stop list used in the SMART system (Salton 1988).

Step 2. Selection of frequent terms: Select the top frequent terms up to 30% of the number of running terms, Ntotal.

Step 3. Clustering frequent terms: Cluster a pair of terms whose Jensen-Shannon divergence is above the threshold (0.95 × log 2). Cluster a pair of terms whose mutual information is above the threshold (log(2.0)). The obtained clusters are denoted as C.

Step 4. Calculation of expected probability: Count the number of terms co-occurring with c ∈ C, denoted as nc, to yield the expected probability pc = nc/Ntotal.

Step 5. Calculation of the χ'² value: For each term w, count the co-occurrence frequency with c ∈ C, denoted as freq(w, c). Count the total number of terms in the sentences including w, denoted as nw. Calculate the χ'² value following (2).
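
Equation (2) itself is not reproduced in this text. As a reading aid, the χ'² measure from the original paper on this method can be written with the symbols of Steps 4 and 5 (freq(w, c), nw, pc) as follows; the notation is adapted to those symbols, so it should be treated as a sketch rather than a quotation.

```latex
\chi^{2}(w) = \sum_{c \in C} \frac{\bigl(\mathrm{freq}(w,c) - n_w\,p_c\bigr)^{2}}{n_w\,p_c},
\qquad
\chi'^{2}(w) = \chi^{2}(w) - \max_{c \in C} \frac{\bigl(\mathrm{freq}(w,c) - n_w\,p_c\bigr)^{2}}{n_w\,p_c}
```

Here nw·pc is the co-occurrence count expected if w were unrelated to cluster c. Subtracting the largest single term keeps a word from scoring highly only because it co-occurs strongly with one cluster.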
  In this calculation, take:
  o pc as (the sum of the total number of terms in sentences where c appears) divided by (the total number of terms in the document).
  o nw as the total number of terms in sentences where w appears.

Step 6. Output keywords: Show a given number of terms having the largest χ'² value.
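
Steps 2 and 4 to 6 can be sketched in a few lines of Python. This is a simplified illustration under several assumptions, not the implementation we deploy: Step 1 is reduced to lowercasing and a tiny hypothetical stop list (no Porter stemming, no phrase extraction), Step 3 is skipped so that every frequent term forms its own cluster, "up to 30%" is read as cumulative coverage of the running terms, freq(w, c) is counted as occurrences of c in sentences containing w, and a term is not compared with itself. For each term, the score sums (freq(w, c) - nw·pc)² / (nw·pc) over the clusters and then subtracts the largest single term. The names `sentences_of` and `chi_square_keywords` are invented for this sketch.

```python
import re
from collections import Counter

# Tiny illustrative stop list (an assumption); the method uses the SMART stop list.
STOP_WORDS = {"a", "an", "and", "the", "of", "for", "in", "is", "are", "to",
              "on", "with", "it", "as", "by", "that", "this"}


def sentences_of(text):
    """Split text into sentences, each a list of lowercase non-stop-word terms."""
    result = []
    for raw in re.split(r"[.!?]+", text.lower()):
        terms = [t for t in re.findall(r"[a-z]+", raw) if t not in STOP_WORDS]
        if terms:
            result.append(terms)
    return result


def chi_square_keywords(text, top_k=5, frequent_ratio=0.3):
    """Rank terms by the chi-square-based score of Steps 4 to 6 (simplified)."""
    sents = sentences_of(text)
    counts = Counter(t for s in sents for t in s)
    n_total = sum(counts.values())
    if n_total == 0:
        return []

    # Step 2: most frequent terms until they cover 30% of the running terms.
    frequent, covered = [], 0
    for term, freq in counts.most_common():
        if covered >= frequent_ratio * n_total:
            break
        frequent.append(term)
        covered += freq

    # Step 3 is skipped: each frequent term is treated as its own cluster c.
    # Step 4: p_c = (terms in sentences where c appears) / N_total.
    p = {c: sum(len(s) for s in sents if c in s) / n_total for c in frequent}

    # Step 5: score every term w against every cluster c.
    scores = {}
    for w in counts:
        w_sents = [s for s in sents if w in s]
        n_w = sum(len(s) for s in w_sents)
        parts = []
        for c in frequent:
            if c == w:
                continue  # do not compare a term with itself (assumption)
            freq_wc = sum(s.count(c) for s in w_sents)
            expected = n_w * p[c]
            parts.append((freq_wc - expected) ** 2 / expected)
        # Sum over clusters minus the largest single contribution.
        scores[w] = sum(parts) - max(parts) if parts else 0.0

    # Step 6: terms with the largest scores first (ties broken alphabetically).
    ranked = sorted(scores.items(), key=lambda kv: (-kv[1], kv[0]))
    return ranked[:top_k]


sample_text = (
    "Keyword extraction finds important terms in a document. "
    "The document is split into sentences. "
    "Frequent terms are counted in the document. "
    "Terms that co-occur with frequent terms in a biased way are keywords."
)
for term, score in chi_square_keywords(sample_text, top_k=3):
    print(f"{term}: {score:.3f}")
```

On a document this short the ranking is not meaningful; the sketch is only meant to show how Ntotal, pc, nw, and freq(w, c) fit together.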