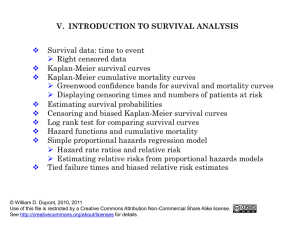

Survival analysis logrank details

Survival analysis: Kaplan-Meier estimates and the log-rank test

The following example is from the recommended course text, “Primer of Biostatistics” by Stanton Glantz, Chapter 12: How to analyze survival data.

Kaplan-Meier estimates: Survival after exposure to second-hand smoke

This study examines survival after exposure to second-hand smoke. Ten subjects are observed from the time of their first exposure to second-hand smoke until the subject’s death, the end of the study (EOS), or loss to follow-up (FU). Table 1 shows the outcomes for the 10 subjects.

Table 1. Survival outcomes for the 10 subjects

| Subject | Time in study (years) | Outcome | Censored |
|---|---|---|---|
| A | 7 | Death | No |
| B | 12 | Death | No |
| C | 7 | Death | No |
| D | 12 | Alive at EOS | Yes |
| E | 11 | Lost to FU | Yes |
| F | 8 | Death | No |
| G | 9 | Death | No |
| H | 6 | Death | No |
| I | 7 | Alive at EOS | Yes |
| J | 2 | Death | No |

The study ends after 12 years. Subjects enter the study at different times, and may be alive at the end of the study. Seven subjects (A, B, C, F, G, H, J) died during the study period, so these observations are uncensored. Two subjects (D and I) were alive at the end of the study, so these observations are censored. One subject (E) was lost to follow-up after 11 years in the study, so this observation is also censored.

Estimating the survival curve

We want to estimate the survival function based on this sample of subjects.

Survivor function S(t): the probability that a subject survives longer than time t.

$$S(t) = \frac{\text{Number of individuals surviving longer than time } t}{\text{Total number of individuals in the population}}$$

The time at which half the population is alive and half is dead is called the median survival time.

Because most studies run for at most a few years, we often do not know how long every individual in the sample survives. Some observations are censored. We need a method to estimate the survival function with censored data.
To do this, we compute the probability of surviving at each time we observe a death, based on the number at risk (known to be alive) immediately before that death.

We list all the observations in order of the time of death or censoring, as shown in Table 2. At tied times, uncensored observations (where the time of death is known) are listed before censored observations. Censored observations are indicated with a “+”.

Table 2. Survival time, deaths, and number at risk.

| Subject | Survival time, ti | Number alive at beginning of interval, ni | Number of deaths at end of interval, di |
|---|---|---|---|
| J | 2 | 10 | 1 |
| H | 6 | 9 | 1 |
| A and C | 7 | 8 | 2 |
| I | 7+ | - | - |
| F | 8 | 5 | 1 |
| G | 9 | 4 | 1 |
| E | 11+ | - | - |
| B | 12 | 2 | 1 |
| D | 12+ | - | - |

The first death (J) occurred at time 2. The second death (H) occurred at time 6. Two deaths (A and C) occurred at time 7. Subject I is censored at time 7, and so on.

We now estimate the probability of death within any time period, based on the number of subjects that survive to the beginning of the time period (the number at risk).

Just before the first death (J) at time 2, there are 10 subjects alive. There is one death at time 2, so there are 10 - 1 = 9 survivors. Our best estimate of the probability of surviving past time 2, if alive just before time 2, is

$$\text{Fraction alive just before time 2 surviving past time 2} = \frac{n_2 - d_2}{n_2} = \frac{10 - 1}{10} = \frac{9}{10} = 0.9$$

where n2 is the number of individuals alive just before time 2 and d2 is the number of deaths at time 2.

At the beginning of the time interval ending at time 2, 100 percent of the subjects were alive, so the estimated cumulative survival rate at time 2 is 1.00 * 0.9 = 0.9.

The same calculation applies at the next death, at t = 6.

$$\text{Fraction alive just before time 6 surviving past time 6} = \frac{n_6 - d_6}{n_6} = \frac{9 - 1}{9} = \frac{8}{9} = 0.889$$

At the beginning of the time interval ending at time 6, 90 percent of the subjects were alive, so the estimated cumulative survival rate at time 6, S(6), is 0.9 * 0.889 = 0.8.

Table 3 shows these calculations for further time points.
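
The step-by-step calculation described above can be carried through every death time with a short program. The following is a minimal Python sketch, not part of the course text: the function name `kaplan_meier` and the `subjects` list are illustrative, and the data are transcribed from Table 1. It counts the number at risk as every subject whose time in study is at least the death time, so a subject censored at the same time as a death is still counted as at risk for that death.

```python
from fractions import Fraction

# (subject, years in study, died?) transcribed from Table 1.
# died=False means the observation is censored.
subjects = [
    ("A", 7, True), ("B", 12, True), ("C", 7, True), ("D", 12, False),
    ("E", 11, False), ("F", 8, True), ("G", 9, True), ("H", 6, True),
    ("I", 7, False), ("J", 2, True),
]


def kaplan_meier(records):
    """Return rows (t, n_at_risk, deaths, fraction_surviving, cumulative_survival)
    for each distinct time at which a death is observed."""
    death_times = sorted({t for _, t, died in records if died})
    rows = []
    cumulative = Fraction(1)
    for t in death_times:
        # Number known to be alive just before time t.
        n = sum(1 for _, time, _ in records if time >= t)
        # Number of deaths observed at time t.
        d = sum(1 for _, time, died in records if died and time == t)
        fraction = Fraction(n - d, n)
        cumulative *= fraction
        rows.append((t, n, d, fraction, cumulative))
    return rows


km_rows = kaplan_meier(subjects)
for t, n, d, fraction, cumulative in km_rows:
    print(f"t={t:>2}  n={n:>2}  d={d}  "
          f"fraction={float(fraction):.3f}  S(t)={float(cumulative):.3f}")
```

Exact fractions are used here only so that the running product does not pick up rounding error; ordinary floating-point arithmetic would serve equally well for a table reported to two or three decimals.
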

Table 3. Survival time, number at risk, deaths, and cumulative survival rate.

| Subject | Survival time, ti | Number alive at beginning of interval, ni | Number of deaths at end of interval, di | Fraction surviving interval, (ni - di)/ni | Cumulative survival rate |
|---|---|---|---|---|---|
| J | 2 | 10 | 1 | .9 | .9 |
| H | 6 | 9 | 1 | .889 | .8 |
| A and C | 7 | 8 | 2 | .75 | .6 |
| I | 7+ | - | - | | |
| F | 8 | 5 | 1 | .8 | .48 |
| G | 9 | 4 | 1 | .75 | .36 |
| E | 11+ | - | - | | |
| B | 12 | 2 | 1 | .5 | .18 |
| D | 12+ | - | - | | |

We have to do something different for the death at time 8, because one subject (I) was censored at time 7 and is no longer in the at-risk group at time 8. We know only that this subject was still alive at time 7; any death occurred after time 7, but we do not know when.

The next death is subject F at time 8. Because subject I was last observed at time 7, we don’t know whether this subject was alive or dead at time 8. As a result, we drop subject I from the calculation of the survival function from this point on. Just before time 8, there are 5 subjects known to be alive (at risk), and 1 dies at time 8, so we use the formula as before.

$$\text{Fraction alive just before time 8 surviving past time 8} = \frac{n_8 - d_8}{n_8} = \frac{5 - 1}{5} = \frac{4}{5} = 0.8$$

At the beginning of the time interval ending at time 8, 60 percent of the subjects were alive, so the estimate of the cumulative survival rate at time 8 is 0.6 * 0.8 = 0.48.

This method of estimating the survival curve is known as the Kaplan-Meier product-limit estimate. The general formula for the Kaplan-Meier product-limit estimate of the survival curve is

$$\hat{S}(t_j) = \prod_{t_i \le t_j} \frac{n_i - d_i}{n_i}$$

where there are ni individuals alive just before time ti and di deaths occur at time ti.

The standard error of the Kaplan-Meier product-limit estimate at any time tj is estimated using Greenwood’s formula:

$$s_{\hat{S}(t_j)} = \hat{S}(t_j) \sqrt{\sum_{t_i \le t_j} \frac{d_i}{n_i (n_i - d_i)}}$$

The standard error may be used to calculate confidence intervals in the usual way.

Here’s an example from the text, Statistical Analysis of Medical Data Using SAS, by Geoff Der and Brian Everitt.

    /* censor = 1 when the subject is alive (censored), 0 when dead */
    data melanoma;
      input weeks status$;
      censor=status='alive';
    cards;
    12.8 dead
    15.6 dead
    24.0 alive
    26.4 dead
    29.2 dead
    30.8 alive
    39.2 dead
    42.0 dead
    58.4 alive
    72.0 alive
    77.2 dead
    82.4 dead
    87.2 alive
    94.4 alive
    97.2 alive
    106.0 alive
    114.8 alive
    117.2 alive
    140.0 alive
    168.0 alive
    ;

    /* censor(1) tells PROC LIFETEST that censor = 1 marks a censored time */
    proc lifetest data=melanoma plots=(s);
      time weeks*censor(1);
    run;

    proc greplay igout=work.gseg nofs;
      delete _all_;
    run;
    quit;

The LIFETEST Procedure

Product-Limit Survival Estimates

| weeks | Survival | Failure | Survival Standard Error | Number Failed | Number Left |
|---|---|---|---|---|---|
| 0.000 | 1.0000 | 0 | 0 | 0 | 20 |
| 12.800 | 0.9500 | 0.0500 | 0.0487 | 1 | 19 |
| 15.600 | 0.9000 | 0.1000 | 0.0671 | 2 | 18 |
| 24.000* | . | . | . | 2 | 17 |
| 26.400 | 0.8471 | 0.1529 | 0.0814 | 3 | 16 |
| 29.200 | 0.7941 | 0.2059 | 0.0919 | 4 | 15 |
| 30.800* | . | . | . | 4 | 14 |
| 39.200 | 0.7374 | 0.2626 | 0.1014 | 5 | 13 |
| 42.000 | 0.6807 | 0.3193 | 0.1083 | 6 | 12 |
| 58.400* | . | . | . | 6 | 11 |
| 72.000* | . | . | . | 6 | 10 |
| 77.200 | 0.6126 | 0.3874 | 0.1169 | 7 | 9 |
| 82.400 | 0.5445 | 0.4555 | 0.1221 | 8 | 8 |
| 87.200* | . | . | . | 8 | 7 |
| 94.400* | . | . | . | 8 | 6 |
| 97.200* | . | . | . | 8 | 5 |
| 106.000* | . | . | . | 8 | 4 |
| 114.800* | . | . | . | 8 | 3 |
| 117.200* | . | . | . | 8 | 2 |
| 140.000* | . | . | . | 8 | 1 |
| 168.000* | . | . | . | 8 | 0 |

NOTE: The marked survival times are censored observations.

[Figure: product-limit survival estimate curve plotted against weeks (x-axis 0 to 175; y-axis 0.00 to 1.00), with censored observations marked. Plot title: Example 21.1: HSV-2 Episodes with gD2 Vaccine.]

Comparing two survival curves: the logrank test

This description is from the course text, Glenn Walker, “Common Statistical Methods for Clinical Research with SAS Examples”.

Usually, event times are not well-modeled using the normal distribution. The log-rank test is a non-parametric test that does not require any assumptions about the distribution of the event times. If every patient were followed until the event occurrence, the event times could be compared between two groups using the Wilcoxon rank-sum test. However, some patients might drop out or complete the study before the event occurs. In such cases, the data are censored.
The log-rank test adjusts for this censoring.

The null hypothesis tested by the log-rank test is that of equal event time distributions among groups. Equality of the distributions of event times implies similar risk-adjusted event rates among groups not only for the clinical trial as a whole, but also for any arbitrary time point during the trial. Rejection of the null hypothesis indicates that the event rates differ among groups at one or more time points during the study.

In this example we will use two treatment groups, but the method extends readily to more than two groups. Consider the following data set, in which the Censored column is marked Yes for any patient whose event time is censored.

Group 1

| Patient ID | Event time | Censored |
|---|---|---|
| 101 | $Y_{11}$ | |
| 103 | $Y_{12}$ | |
| 104 | $Y_{13}$ | |
| … | … | |
| $N_1$ | $Y_{1N_1}$ | |

Group 2

| Patient ID | Event time | Censored |
|---|---|---|
| 102 | $Y_{21}$ | |
| 105 | $Y_{22}$ | |
| 106 | $Y_{23}$ | |
| … | … | |
| $N_2$ | $Y_{2N_2}$ | |

The essence of the log-rank test is that, at each time when there is an event, we create a contingency table (a 2x2 table) such as we use in a chi-square test. The entries in the 2x2 table are counts of the number of patients in each group who are event positive or event negative. At the time of an event, we have

1. The group that has the observed event(s)
2. The expected number of events in each treatment group.

We total the observed (O) and expected (E) numbers of events over all event times within each group, and calculate a chi-square statistic from the differences between them. In the form shown here, each squared difference is divided by the expected count:

$$\chi^2 = \sum_{i=1}^{g} \frac{(O_i - E_i)^2}{E_i}$$

The test statistic has an approximate chi-square distribution with g-1 degrees of freedom, where g is the number of groups (g=2 in the case of 2 treatment groups).

If one group has many more events than expected and the other has many fewer, we will get a large chi-square statistic. Under the null hypothesis of no difference in event rates between the groups, we can calculate the probability of getting a chi-square statistic at least as extreme as the one we see in the data. The calculations are shown in the texts by Glantz and Walker.
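
The procedure described above (observed and expected events accumulated at each event time, then a sum over groups of (O - E)²/E) can be sketched in a few lines of Python. This is an illustrative sketch only, not the worked calculation from Glantz or Walker: the function name `logrank` is made up for this example, and the two small groups of (time, event) pairs are hypothetical data, since the notes give no numeric two-group data set. The expected count in a group at each event time is taken as the total number of events at that time multiplied by that group's share of the subjects at risk. The p-value line assumes exactly two groups (one degree of freedom).

```python
import math


def logrank(groups):
    """groups: list of lists of (time, event) pairs, where event is True for
    an observed event and False for a censored time.
    Returns (observed, expected, chi2), with one observed and one expected
    total per group."""
    event_times = sorted({t for g in groups for t, e in g if e})
    observed = [0.0] * len(groups)
    expected = [0.0] * len(groups)
    for t in event_times:
        # Subjects still at risk just before time t, per group.
        at_risk = [sum(1 for time, _ in g if time >= t) for g in groups]
        # Events occurring exactly at time t, per group.
        events = [sum(1 for time, e in g if e and time == t) for g in groups]
        n_total = sum(at_risk)
        d_total = sum(events)
        for k in range(len(groups)):
            observed[k] += events[k]
            expected[k] += d_total * at_risk[k] / n_total
    # Sum over groups of (O - E)^2 / E; groups with E = 0 contribute nothing.
    chi2 = sum((o - e) ** 2 / e for o, e in zip(observed, expected) if e > 0)
    return observed, expected, chi2


# Hypothetical data, for illustration only.
group_1 = [(3, True), (5, True), (7, False), (9, True), (12, False)]
group_2 = [(6, True), (8, False), (10, True), (14, True), (15, False)]

obs, exp, chi2 = logrank([group_1, group_2])

# Upper-tail probability of a chi-square variable with 1 degree of freedom
# (valid for two groups only): P(X > x) = erfc(sqrt(x / 2)).
p_value = math.erfc(math.sqrt(chi2 / 2))

print("observed:", obs)
print("expected:", [round(e, 3) for e in exp])
print(f"chi-square = {chi2:.3f}, p = {p_value:.3f}")
```

A useful sanity check on any implementation is that the expected counts, summed over groups, equal the total number of observed events, and that two identical groups give a statistic of zero.
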