
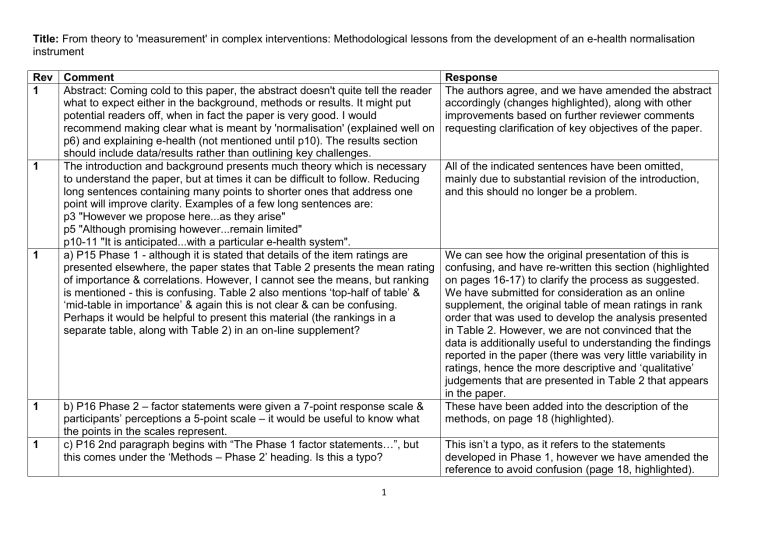

Title: From theory to 'measurement' in complex interventions: Methodological lessons from the development of an e-health normalisation instrument

Rev Comment Response

Comment (Reviewer 1): Abstract: Coming cold to this paper, the abstract doesn't quite tell the reader what to expect in the background, methods or results. It might put potential readers off, when in fact the paper is very good. I would recommend making clear what is meant by 'normalisation' (explained well on p6) and explaining e-health (not mentioned until p10). The results section should include data/results rather than outlining key challenges.

Response: We agree, and have amended the abstract accordingly (changes highlighted), along with other improvements based on further reviewer comments requesting clarification of the key objectives of the paper.

Comment (Reviewer 1): The introduction and background present much theory which is necessary to understand the paper, but at times it can be difficult to follow. Reducing long sentences containing many points to shorter ones that address one point will improve clarity. Examples of a few long sentences are: p3 "However we propose here...as they arise"; p5 "Although promising however...remain limited"; p10-11 "It is anticipated...with a particular e-health system".

Response: All of the indicated sentences have been omitted, mainly due to substantial revision of the introduction, and this should no longer be a problem.

Comment (Reviewer 1): a) P15 Phase 1 - although it is stated that details of the item ratings are presented elsewhere, the paper states that Table 2 presents the mean rating of importance & correlations. However, I cannot see the means, but ranking is mentioned - this is confusing. Table 2 also mentions ‘top-half of table’ & ‘mid-table in importance’ & again this is not clear & can be confusing. Perhaps it would be helpful to present this material (the rankings in a separate table, along with Table 2) in an on-line supplement?

Response: We can see how the original presentation of this is confusing, and have re-written this section (highlighted on pages 16-17) to clarify the process as suggested. We have submitted, for consideration as an online supplement, the original table of mean ratings in rank order that was used to develop the analysis presented in Table 2. However, we are not convinced that these data add to the understanding of the findings reported in the paper (there was very little variability in ratings, hence the more descriptive and ‘qualitative’ judgements presented in Table 2 of the paper).

Comment (Reviewer 1): b) P16 Phase 2 - factor statements were given a 7-point response scale & participants’ perceptions a 5-point scale - it would be useful to know what the points in the scales represent.

Response: These have been added into the description of the methods, on page 18 (highlighted).

Comment (Reviewer 1): c) P16 2nd paragraph begins with “The Phase 1 factor statements…”, but this comes under the ‘Methods – Phase 2’ heading. Is this a typo?

Response: This isn’t a typo, as it refers to the statements developed in Phase 1; however, we have amended the reference to avoid confusion (page 18, highlighted).

Comment (Reviewer 1): d) P16 data analysis in this phase states that “…perceptions relating to the TARS items…were explored…with chi square…using pooled frequency categories”. What is meant here by ‘pooled frequency categories’? Was the 7-point scale collapsed to categories 0-3, 4-6 etc as shown in Table 6? If so, why are the categories different in sites 1 & 2 (Table 6)? And can the authors explain why they used a 7-point scale & then grouped points for analysis?

Response: The section on data analysis has been re-written to explain how and why the scales were collapsed into new categorical variables for non-parametric analysis, and all of these queries are now answered (page 19, highlighted).

Comment (Reviewer 1): e) P17 Results (Phase 2) - refers to Table 6 & the pooled frequencies to indicate item responses, but this table needs a key to indicate what is presented. The title indicates ‘means, standard deviations & frequencies’ but I cannot see these. The text should provide an indication/summary of what the reader is seeing in Table 6.

Response: The title of Table 6 was an error, and has been corrected to convey adequately the data it contains. Labels for the categorical variables that the frequencies represent have also been included. The reference in the text of the paper now gives a clearer description of what Table 6 contains (page 19, highlighted).

Comment (Reviewer 1): f) Tables 7 & 8. I’m not sure what the N stands for - maybe number of responses? But how does this tally with the numbers reported in Table 6? A key for these tables would aid clarity.

Response: Yes, the N in Tables 7 and 8 represents the sample size for the ‘routinisation’ category within the analysis (with breakdown by agreement category provided in brackets). These numbers do not tally with those reported in Table 6, because the ‘don’t know’ responses were excluded from the chi-square analyses on a per-item basis. This has now been explained in the results section where the data are referred to (highlighted on page 19).

Comment (Reviewer 2): Needs considerable re-working and restructuring in the background section to really clarify what the 'selling points' of the paper are in comparison to already published papers. The reader needs walking through the background section so that the key selling points are clear and made very early on, and the paper is then focussed upon these. The use of theory for implementation is not a particularly recent advance (think of Grimshaw et al's review in 2004) and developing theory-based measures (i.e., questionnaires) is also not a recent advance. However, at the moment it reads as if the selling point for the paper is seen as being a recent push for theory-based implementation and the need for surveys to do this? If your selling point is the need for theories and, therefore, tools that pick up wider influences upon health professionals' practice, this is OK but currently doesn't jump out at the reader. In this case, the paper would need couching in a richer discussion of the current favouring of models focussed on the individual, and, therefore, what the strength of NPT is in comparison.

Response: We agree with the reviewer about this, and have completely revised and restructured the entire introduction/background section to make clear the selling points of this paper, correctly identified by the reviewer as the need for models that capture the wider influences on implementation beyond those considered in psychological theories, and for tools to pick up these influences. This restructuring has involved a much clearer building up of the rationale for the paper, to emphasise what this paper ‘adds’. This required moving into the main background (now labelled ‘introduction’) material from both (a) the subsection on NPT and (b) the introduction of the section for the TARS study, to make the progression of arguments much clearer (e.g., more explanation of NPT was needed earlier, to make the case for the objectives of the paper). The objectives are now more clearly specified, both in the revised abstract and in the introduction.

Comment (Reviewer 2): I think the complexity of choosing decent outcome measures is important and good to have picked up on in your paper; however, this important issue lacked clarity whilst reading the paper. Nail it as a key point in your background section, discuss issues around outcome measures, and then refer back to it in later sections. For example, in phase 2 methods section I think paragraph 2 refers to outcome measures but this isn't clear.

Response: This was an important suggestion, and a paragraph has been included (page 8, highlighted). This strengthens our arguments for the work presented in the paper (and helps distinguish NPT from other theoretical approaches that have been used). The reference to outcome measures in the Phase 2 methods (pg 18) is now explicit (and makes sense given the earlier discussion in the introduction), and it is also discussed (pg 23, highlighted).

Comment (Reviewer 2): It is good to have had the questionnaire reviewed by multiple stakeholders. However, what is very important with these sorts of questionnaires is face and content validity as judged by the health professionals and I see lack of consideration of this as quite a significant oversight. At the least this needs debate in the discussion section. What is also needed is reference to your response rates which appear to be omitted: low response rates can suggest issues with the design and saliency of the items in the questionnaire. Response rates are very important for any survey, but particularly one that might be used for implementation activities.

Response: We have both (a) included the response rates (pg 19), and (b) picked this up as a discussion point in the paper (pg 22, highlighted). Response rates had not been presented because we did not have adequate information to calculate them accurately; this is now footnoted for information, to assist interpretation of the results.

Comment (Reviewer 2): I am unclear why chi square analyses were run rather than, for example, t-tests (Results section, phase 2) when the items in the survey had seven or five point response scales? This is either incorrect, or, if I have misunderstood, requires greater clarification in methods and results section as to why categories were collapsed together to create nominal level data from interval level data.

Response: The pattern of responses to the items was such that non-parametric analysis was more appropriate. This has been explained more clearly in the methods section (pg 19, highlighted).

Comment (Reviewer 2): (Discretionary revisions) Greater clarity would be gained from defining the terms 'intervention', 'implementation' and 'dissemination' early on, as 'intervention' could mean behaviour-change intervention, or it could mean, for example, a 'health technology intervention'. I think the terms may have been used interchangeably a couple of times which can be confusing for the reader.

Response: We have added a definition for the term ‘implementation’ (from Linton, 2002) (pg 4, highlighted). Improved clarity of the key objectives of the paper has reduced possible confusion relating to these terms.

Comment (Reviewer 2): (Discretionary revisions) Some of the current references used to support assertions I feel could be better replaced with 'bigger' references, particularly, for example, reference 17.

Response: This has been addressed; the revision process has resulted in several changes to references within the paper.

Comment (Reviewer 2): (Discretionary revisions) Martin Eccles has several papers on use of theory in implementation studies which would be worth including in background section, paragraph 2.

Response: Two papers co-authored by Martin Eccles are included.

Comment (Reviewer 2): (Discretionary revisions) The statement made relating to reference 11 in the background section needs expanding as it's far too vague and, therefore, not illuminating, at the moment.

Response: The statement has been omitted.

Comment (Reviewer 2): (Discretionary revisions) Some concrete examples might help the reader understand constructs of NPT as they all read quite similarly to other terms.

Response: This has not been included, primarily because the paper is already quite long. We are confident that the revised introduction (and the clarity of the paper overall) will facilitate the reader’s understanding.

Comment (Reviewer 3): 1. What is the primary purpose of the instrument? Is it to be used as a predictor, diagnostic, or evaluative tool? Or is it to be used as an assessment tool of the potential for implementation and normalisation of a new intervention? Is it a measure of process or outcome? Is it a summative or formative measure?

2. Who is expected to use the instrument? Is it for research teams to measure the use of the NPT in implementation science or is it to be used by practitioners who are trying to implement new procedures or both?

Response: These are important questions, and a paragraph has been re-written to answer them much more explicitly (pg 12, highlighted). The purpose is to measure implementation processes, and the instrument is intended for use by both researchers (evaluation) and practitioners (diagnostic and evaluation).

Comment (Reviewer 3): 3. Why is there a focus on the collective action part of the NPT? If this is an instrument that has been developed from the NPT then why does it only cover one of the 4 core dimensions? 27 out of the 30 questions cover the collective action construct with only one question covering the constructs of coherence, cognitive participation and reflexive monitoring. This means that the instrument only covers one of the 4 key constructs. What implications does this have for the integrity of the NPT?

Response: This is partly explained by the shift in development of the theory, from NPM to NPT, across the course of the study. This is now clearly explained in the methods section (pg 14, highlighted), and picked up in the discussion section, in a new paragraph that was suggested (below) to discuss implications for the theory (pg 24-5, highlighted).

Comment (Reviewer 3): 4. How does the use of additional empirical and expert derived information affect the overall integrity of the NPT? The research team have discussed involving different stakeholders in developing and devising new dimensions for the questions around the theory. However, what implications does this have for the overall integrity of the theory and does the development of these questions influence the constructs?

Response: This is an important question, and we welcome the opportunity to develop the discussion in this respect. Two paragraphs have been added (pg 24-5, highlighted).

Comment (Reviewer 3): 5. What is the connection between generative processes (implementation, embedding, integration page 6) constructs (coherence, cognitive participation, collective action and reflexive monitoring) and the theoretical assumptions on page 12 into the methodological approach to measurement and how the data in Table 1 inform and shape the consequent instrument? It was not clear to me how these elements connected together to provide a refined process for developing the instrument.

Response: In NPT, the four constructs outlined represent different sets of generative processes that underlie the three different kinds of activities (implementing, embedding, integrating). Clarity about this should have improved with the restructuring of the introduction/background. Table 1 represents a preliminary analysis of (a) interpretation of key assumptions of the theory relevant to developing measures and (b) the implications of these and/or challenges that they raise. Thus, the data in this table were not intended to connect with specific methodological steps, but were rather used as a general frame of reference for the instrument development process. The phrase ‘translating into methodological approach’ was therefore inaccurate (and misleading), and this has been revised to convey more accurately its use in the process (highlighted, pg 13).

Comment (Reviewer 3): 6. Why was a Likert scale used in designing the instrument? Why did the team not consider more qualitative instruments, particularly in the light of their conclusions (importance of context and creating flexible, implementation sensitive type measures)? For example, one of the key findings is reported on page 18 - "ratings made on the instrument items are related to participants' perceptions of how routinely the e-health systems are being used in their practice contexts". What if the research team decided to ask the question - "Is this new practice now part of your everyday routine?" If YES ask "How did this happen?" If NO ask "What more needs to be done to help you make it routine?" The discourse is missing in this paper about the real alternatives from a methodological point of view, particularly in the light of the conclusions drawn (i.e. the need for context sensitive, flexible process measures).

Response: The objective of the study was to develop a tool that (a) measured processes rather than outcomes and (b) could be easily used by practitioners and researchers to ‘quantify’ those processes. This has now been made much clearer in the section on the development of TARS (pg 12, highlighted). We recognise that there is further work to do in relation to this instrument, and others to be developed from NPT, in determining appropriate ways of developing measures of outcomes. This was something raised by another reviewer, and the paper has benefited from a stronger discussion of the importance of (and issues relating to) outcome measures, running from the introduction through the methods and into the discussion sections (see pgs 8, 18 and 23, highlighted).

Comment (Reviewer 3): 7. The two convenience sample sites are really quite different - a group of community nurses trying to introduce e-health versus what seems to be a call centre purposely designed from the start to manage information electronically. The paper does not specifically describe these differences but it is important to explore them as they could explain the differences in results rather than the predictive ability of the NPT.

Response: Discussion of this is now included (pg 22-3).

Comment (Reviewer 3): 8. Also linked to the sites is the fact that the sample was skewed toward one professional group - nurses. Given the importance of NPT in describing role clarity and shared understanding it would be important for the team to acknowledge this as one key limitation.

Response: This is now noted as a limitation in the discussion (pg 22, highlighted).

Comment (Reviewer 3): 9. Overall, it is not clear whether this paper was demonstrating how NPT could be used to inform the development of a theory based measure or whether it was showing how difficult and complex the process is. It has raised a number of conceptual and methodological challenges but it tends to create a sense of certainty in the abstract and concluding comments. It might be better (and more consistent) to acknowledge that this is the beginning of a very complex journey.

Response: The paper aimed to do both of these things, but other reviewers’ comments also suggested a lack of clarity about the objectives of the paper. Revisions throughout (in the abstract and introduction, and returned to in the discussion) should now make this very clear. In addition, a final sentence has been added to the conclusion to reflect this comment, as this is indeed the message we wish to convey.