PR-2CS-Section(2)


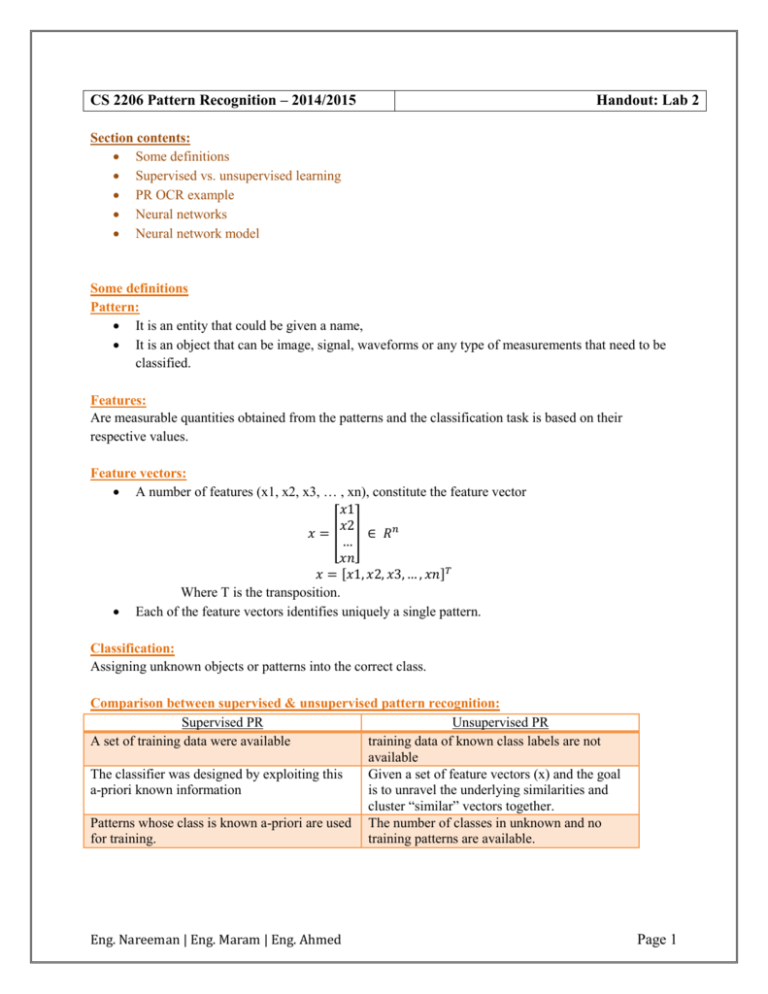

CS 2206 Pattern Recognition – 2014/2015
Handout: Lab 2
Eng. Nareeman | Eng. Maram | Eng. Ahmed

Section contents:
o Some definitions
o Supervised vs. unsupervised learning
o PR OCR example
o Neural networks
o Neural network model

Some definitions

Pattern: An entity that can be given a name. It is an object such as an image, a signal, a waveform, or any other type of measurement that needs to be classified.

Features: Measurable quantities obtained from the patterns. The classification task is based on their respective values.

Feature vectors: A number of features (x1, x2, x3, …, xn) constitute the feature vector

$$x = \begin{bmatrix} x_1 \\ x_2 \\ \vdots \\ x_n \end{bmatrix} \in R^n, \qquad x = [x_1, x_2, x_3, \ldots, x_n]^T$$

where T denotes transposition. Each feature vector uniquely identifies a single pattern.

Classification: Assigning unknown objects or patterns to the correct class.

Comparison between supervised & unsupervised pattern recognition:

| Supervised PR | Unsupervised PR |
| --- | --- |
| A set of training data is available. | Training data with known class labels are not available. |
| The classifier is designed by exploiting this a-priori known information. | Given a set of feature vectors (x), the goal is to unravel the underlying similarities and cluster “similar” vectors together. |
| Patterns whose class is known a-priori are used for training. | The number of classes is unknown and no training patterns are available. |

Pattern Recognition for OCR

Character (letter or number) recognition is another important area of pattern recognition, with major implications for automation (computerization) and information handling. Optical character recognition (OCR) systems are already commercially available and more or less familiar to all of us. An OCR system has a “front-end” device consisting of a light source, a scan lens, a document transport, and a detector. At the output of the light-sensitive detector, light-intensity variation is translated into “numbers” and an image array is formed.

A Simple Pattern Recognition Problem (Statistical)

Neural networks:

A neural network is a network represented by a set of nodes and arrows.
o A node corresponds to a neuron.
o An arrow corresponds to a connection, along with the direction of signal flow, between neurons.

Components of neural network model:

o A set of weights: These characterize the strength of the synapse linking one neuron to another. The synaptic weight linking neuron “j” to neuron “i” may be written as either “wij” or “wji”. A weight can take either a positive (+ve) or a negative (-ve) value, indicating an excitatory or an inhibitory synapse, respectively.
o An adder: Sums the neuron's inputs, each modulated by its respective connection weight. This operation constitutes a linear combiner.
o An activation function: Applied to the input summation to limit the amplitude of the neuron's output.

Neural network model:

[Figure: model of neuron k. Input patterns x1, x2, x3, …, xm are scaled by the synaptic weights Wk1, Wk2, Wk3, …, Wkm and fed, together with the bias bk, into the summing junction ∑ with output Uk. The activation function f(.) then produces the output Yk.]
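
The three components listed above (weights, adder, activation function) can be sketched in a few lines of Python. This is only an illustrative sketch, not part of the original handout. It assumes the common convention in which the bias bk is added to the weighted sum before the activation function is applied. The function names `neuron_output`, `step`, and `sigmoid`, and the numeric values, are hypothetical choices made for this example.

```python
import math


def neuron_output(x, w, b, f):
    """Compute the output y_k of a single neuron (illustrative sketch).

    x: list of inputs x1..xm
    w: list of synaptic weights wk1..wkm (same length as x)
    b: bias bk
    f: activation function applied to the biased sum
    """
    if len(x) != len(w):
        raise ValueError("inputs and weights must have the same length")
    # Adder (linear combiner): weighted sum of the inputs.
    u = sum(wi * xi for wi, xi in zip(w, x))
    # Assumed convention: the bias is added before the activation.
    return f(u + b)


def step(v):
    """Threshold activation: limits the output to 0 or 1."""
    return 1.0 if v >= 0 else 0.0


def sigmoid(v):
    """Logistic activation: limits the output to the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-v))


# Hypothetical example values, not taken from the handout.
x = [1.0, 0.5, -1.0]   # input pattern
w = [0.4, -0.6, 0.2]   # positive = excitatory, negative = inhibitory
b = 0.5                # bias

y_step = neuron_output(x, w, b, step)
y_sig = neuron_output(x, w, b, sigmoid)
print(y_step, y_sig)
```

Here the weighted sum is 0.4 - 0.3 - 0.2 = -0.1, so the biased sum is about 0.4. The step activation therefore outputs 1, while the sigmoid outputs a value slightly below 0.6. Swapping the activation function changes only how the amplitude of the output is limited, not the linear combiner.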