face recognition in unconstrained environments

advertisement

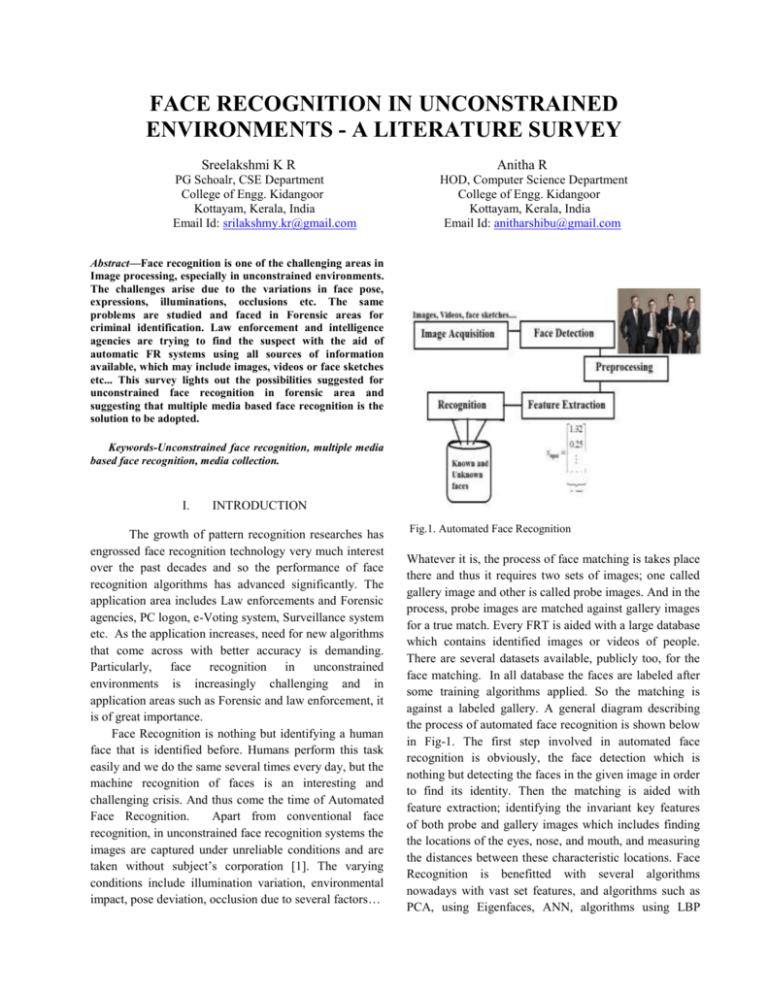

FACE RECOGNITION IN UNCONSTRAINED ENVIRONMENTS - A LITERATURE SURVEY Sreelakshmi K R PG Schoalr, CSE Department College of Engg. Kidangoor Kottayam, Kerala, India Email Id: srilakshmy.kr@gmail.com Anitha R HOD, Computer Science Department College of Engg. Kidangoor Kottayam, Kerala, India Email Id: anitharshibu@gmail.com Abstract—Face recognition is one of the challenging areas in Image processing, especially in unconstrained environments. The challenges arise due to the variations in face pose, expressions, illuminations, occlusions etc. The same problems are studied and faced in Forensic areas for criminal identification. Law enforcement and intelligence agencies are trying to find the suspect with the aid of automatic FR systems using all sources of information available, which may include images, videos or face sketches etc... This survey lights out the possibilities suggested for unconstrained face recognition in forensic area and suggesting that multiple media based face recognition is the solution to be adopted. Keywords-Unconstrained face recognition, multiple media based face recognition, media collection. I. INTRODUCTION The growth of pattern recognition researches has engrossed face recognition technology very much interest over the past decades and so the performance of face recognition algorithms has advanced significantly. The application area includes Law enforcements and Forensic agencies, PC logon, e-Voting system, Surveillance system etc. As the application increases, need for new algorithms that come across with better accuracy is demanding. Particularly, face recognition in unconstrained environments is increasingly challenging and in application areas such as Forensic and law enforcement, it is of great importance. Face Recognition is nothing but identifying a human face that is identified before. Humans perform this task easily and we do the same several times every day, but the machine recognition of faces is an interesting and challenging crisis. And thus come the time of Automated Face Recognition. Apart from conventional face recognition, in unconstrained face recognition systems the images are captured under unreliable conditions and are taken without subject’s corporation [1]. The varying conditions include illumination variation, environmental impact, pose deviation, occlusion due to several factors… Fig.1. Automated Face Recognition Whatever it is, the process of face matching is takes place there and thus it requires two sets of images; one called gallery image and other is called probe images. And in the process, probe images are matched against gallery images for a true match. Every FRT is aided with a large database which contains identified images or videos of people. There are several datasets available, publicly too, for the face matching. In all database the faces are labeled after some training algorithms applied. So the matching is against a labeled gallery. A general diagram describing the process of automated face recognition is shown below in Fig-1. The first step involved in automated face recognition is obviously, the face detection which is nothing but detecting the faces in the given image in order to find its identity. Then the matching is aided with feature extraction; identifying the invariant key features of both probe and gallery images which includes finding the locations of the eyes, nose, and mouth, and measuring the distances between these characteristic locations. Face Recognition is benefitted with several algorithms nowadays with vast set features, and algorithms such as PCA, using Eigenfaces, ANN, algorithms using LBP features etc. But still it is challenging because of unreliable conditions on images. In unconstrained face recognition problem, especially in forensic areas, some kind of face preprocessing is necessary other than face normalizations, including pose correction of faces in the image. Pose correction generally refers to the process of making the face frontal since matching is benefitted with frontal face image databases. With the help of pose correction algorithms a 3D face model is generated. And a 2D face image is rendered from the model which should be of frontal face and can be matched against the gallery. There is variety of algorithms for modeling and software solutions are also there. In this paper a survey of various unconstrained face recognition methods are conducted and concentrating mainly in forensic areas as face recognition has become an unavoidable forensic tool used by criminal investigators and law enforcement agencies. II. LITERATURE SURVEY Compared to automated face recognition, face recognition in forensic area is more difficult because it must be capable of handling facial images captured under uncontrolled conditions. In [2] improvements in forensic face recognition all the way through research with facial aging, facial marks, forensic sketch recognition, face recognition in video, etc are discussed. But still there are limitations in forensic face recognition due to pose variations, illumination, occlusion etc. Unconstrained face recognition is studied well in theory for several times. But most of them considered single media as input to the system. Among them two database oriented solutions are widely discussed and studied. Face recognition has benefited greatly from many databases that are used to study it. Most of them databases are created under restricted conditions to facilitate the study of particular parameters on the face matching problem. These parameters include variations in position, lighting, face expressions, image background, quality of camera used to capture the image, face occlusions, age, and gender and so on. Gary B. Huang et al [2] proposed such a database called LFW: Labelled Faces in the Wild. It is an initial attempt to supply a set of labeled face photographs with the range of conditions that is encountered by people day by day. In this database there are 13,233 images of 5749 different persons each of size 250 by 250 and are in JPEG format. Among them 1680 persons have 2 or more images and remaining 4069 persons have only single image in the database. Each person is given a unique name which is centered in the picture. Most of the images are color but some gray scale images are also present. The authors defined two “Views” for the database; one for algorithm development for others and other view is for performance reporting. Several discussions are conducted based on LFW dataset including [7] – [12]. But still results show deviations across different methods. A next step towards database creation in unconstrained environments is for recognition of faces from the videos. This is demanding much more nowadays in forensic field, since most of the evidence available for them is from ubiquitous surveillance cameras all around the world. When a crime is happened the proof available only are videos captured by surveillance cameras, may be of low quality. So recognition from videos is very important for law enforcement agencies. Lior Wolf [4] and et al proposed a complete database of labeled videos of faces in uncontrolled conditions called the ’YouTube Faces’. As we all know videos in nature provide more information about the person of interest than single images. Certainly, several existing methods have obtained remarkable recognition performances by exploiting the fact that a single face may become visible in a video in several successive frames. They defined a new similarity measure, called the Matched Background Similarity .This similarity is based on information from multiple frames while being resistant to pose, lighting conditions and other ambiguous factors. The YouTube Faces (YTF) database, which is out in 2011, is the video counterpart to LFW for unconstrained face recognition in videos. That means the database contains videos of persons which have labeled images in LFW database. The database contains 3,425 videos of 1, 595 persons. The unconstrained face recognition using YTF is conducted in [5], [7] and [10]. Also video to video matching is performed several authors in [15]-[18]. However, the video face recognition in unconstrained environments demands much more attention due to the abundant ubiquitous video sources of poor quality. Due to varying and confusing factors such as pose, lighting, expression, occlusion and low resolution, current face recognition technology deployed in forensic applications work in a semi-automatic manner; that is a human operator reviews the top matches from the system to manually determine the final match. So, it is essential to investigate the accuracies achieved by both the recognition by machines and by humans on unconstrained face recognition tasks. A typical framework designed to measure human precision on unconstrained faces in still images and videos is crowd sourcing on Amazon Mechanical Turk [4]. Amazon Mechanical Turk simply, MTurk, is a website used for "crowd sourcing" from a large number of human participants. By crowd sourcing we mean retrieving valuable information by the combined effort of more than one participant and they are called the “workers”. Mturk helps the individuals known as “Requesters” to find solutions to the tasks that currently impossible to do with computers, by posting some Human Intelligence Tasks (HITS) and collecting the results from workers. The process is handled in an economical way such that both workers and requesters are benefitted from it. This seems nice that will help to analyze the human versus machine performance and it is of very useful in forensic and security field. Face images in unconstrained environments usually are of considerable pose variation, which should reduce the performance of algorithms developed to recognize the frontal faces. So some sort of pose correction is necessary prior to matching of faces. There is lots of work which helps in pose normalizing the faces and authors of [13] propose a face recognition framework able to handle a range of pose variations within ±90° of yaw. Rotation about the vertical axis is called yaw angle. The first step in this framework is transformation of original poseinvariant face recognition problem into a partial frontal face recognition problem. And then render a 2D face image of frontal nature from the 3D model generated. This is used for matching which yields a better matching rate. So it can be say that in forensic identification one major task is to pose correct the image for better accuracy before applying any matching algorithm. Several pose correction algorithms are there which are studied on different datasets [19] - [21]. The accuracies vary across algorithms. So better face synthesis methods are expected which are able to generate facial shape and textures under varied poses, by using more features in the image or by combining multiple synthesis strategies. A detailed study on unconstrained face recognition is conducted by Jordan Cheney and Ben Klein et al in [5]. They considered nine different face detection frameworks which are acquired through government rules, open source, or commercial licensing. The data set utilized here for analysis is the IJB-A, a recently released unconstrained face recognition dataset which enclosed 67,183 labeled faces of 5,712 images and 20,408 video frames. The result of this study is that top performing detectors are still not able to detect the faces with severe pose, partial occlusion, or poor illumination. So, still the FRT need to be advanced. Now think on another direction of FRT which lets us to classify existing face recognition system into two broad categories; one is single media based UFR and multiple media based UFR. Single media based UFR takes only single input in the form of an image or a video etc. But, multiple media based recognition considers the images, videos, face sketches everything available for a person of interest as a media to identify the person as an input. Recently, NIST (National Institute of Science and Technology) conducted a study on single media based UFR and Multiple media based UFR [14]. The results shows, for various face datasets discussed far, that the recognition accuracy is increasing when combining multiple media sources to recognize a person. FRGC (Face Recognition Grand Challenge v.2) is an example for UFR based on multiple media, which tests across single image and single3D image vs. sing 3D image. And it yields 79% accuracy at 0.1% FAR (False Acceptance Ratio). These comments put forward that in unconstrained environments, a single face media probe, of poor quality, may not be reliable to provide an adequate picture of a face. This suggests the use of collection of face media which can include any sources of information that is accessible for a probe image. It is, thus, significant to establish how the face recognition accuracy can improve when input with a set of face media of different types, even if of diverse qualities, as probe. Thus the survey points towards the solutions associated with multiple media based algorithms and one best discussion that shows significant improvement in this area is [7] proposed by Best Rowden and et al. In this paper a media collection is proposed, that includes an image, one or more video frames of the subject, a forensic face sketch drawn by the expert artists, and some kind of demographic information (age, gender, race etc,.). The final aim is to recognize a person of attention, based on poor quality face images and videos; it is indeed to utilize anything available about the person. While traditional face recognition methods generally consider a single media (i.e., still face images, video tracks, 3D face models, 2D face image rendered from 3D model or face sketch) as input, this paper considers these all sources as the input to get a true mate for the person of interest. Fig2 from [7] suggests the different sources of subject that can be considered to identify the subject by combining the media. multiple media of a person it will give more accuracy. III. CONCLUSION A set of face media can be utilized to better identify a subject in forensic face recognition. Some sort of pose correction algorithms should improve the identification accuracy. So with media fusion and a good 3D face modeling technique a better FRT in unconstrained environment can results in a good TAR with minimum FAR. Again investigations in the direction of better face media quality measures can improve the recognition rate decrease the effort in the unconstrained environment. Our ongoing work investigates for the fully algorithmic approach of multiple media based face recognition in unconstrained environments. ACKNOWLEDGMENT Fig.2: A sample collection of face media for a subject[7] Also another fact is that Pose-corrected face images from the LFW database, pose corrected video frames from the YTF database used in this paper have been made available publicly. By referring these URLs anyone can easily utilize the datasets for the experiments: http://viswww.cs.umass.edu/lfw for LFW database and http://www.cs.tau.ac.il/∼wolf/ytfaces/ for YTF datasets. So this is of interesting and further study on this idea is sound good. A work in the direction of multiple media can be suggested on the light of a case study as depicted in [22]. On April 15, 2013, 2 bombs are exploded near Boston Marathon (US). And it was a missed opportunity for the existing single media based Automated Face recognition system. The existing technology couldn’t identify the 2 suspects; actually they were brothers, even if the images of both exist in the face database. It was the motivation behind the work in [7]. Two viable face recognition systems used in this survey were NEC NeoFace 3.1 and PittPatt 5.2.2. NeoFace [23] was the top matcher in the National Institute of Standards and Technology (NIST) Multiple Biometrics Evaluation (MBE) 2010 test. These two but failed to identify the suspects in Boston Marathon attack. So, concluding that, more advancement must be made in overcoming challenges such as pose, resolution, and occlusion in order to increase the recognition accuracy of existing system. And if it is by utilizing Sincerely thankful to Guide, other faculties and friends for supporting and helping to complete this work. REFERENCES [1] A. K. Jain, B. Klare, and U. Park, “Face matching and retrieval in forensics applications,” IEEE Multimedia. [2] Anil K. Jain, Brendan Klare and Unsang Park “Face Recognition: Some Challenges in Forensics,” 9th IEEE Int'l Conference on Automatic Face and Gesture Recognition, Santa Barbara, CA, March, 2011. [3] G. B. Huang, M. Ramesh, T. Berg, and E. Learned-Miller, “Labeled faces in the wild: A database for studying face recognition in unconstrained environments,” Univ. Massachusetts Amherst, Amherst, MA, USA, Tech. Rep. 07-49, Oct. 2007. [4] L. Wolf, T. Hassner, and I. Maoz, “Face recognition in unconstrained videos with matched background similarity,” in Proc. IEEE Conf. CVPR, June 2011 [5] L. Best-Rowden, B. Klare, J. Klontz, and A. K. Jain, “Video-tovideo face matching: Establishing a baseline for unconstrained face recognition,” in Proc. IEEE 6th Int. Conf. BTAS, September./October. 2013 [6] Jordan Cheney, Ben Klein, Anil K. Jainy and Brendan F. Klare “Unconstrained Face Detection: State of the Art Baseline and Challenges” [7] Lacey Best-Rowden, “Unconstrained Face Recognition: Identifying a Person of Interest From a Media Collection”. Information Forensics and Security, IEEE Transactions on (Volume:9,Issue:12)Biometrics Compendium [19] Y. Lin, G. Medioni, and J. Choi, “Accurate 3D face reconstruction from weakly calibrated wide baseline images with profile contours,” in Proc. IEEE Conf. CVPR, June 2010. [8] K. Simonyan, O. M. Parkhi, A. Vedaldi, and A. Zisserman, “Fisher vector faces in the wild,” in Proc. BMVC, 2013. [20] A. Asthana, T. K. Marks, M. J. Jones, K. H. Tieu, and M. Rohith, “Fully automatic pose-invariant face recognition via 3D pose normalization,” in Proc. IEEE ICCV, November 2011. [9] D. Chen, X. Cao, F. Wen, and J. Sun, “Blessing of dimensionality: High-dimensional feature and its efficient compression for face verification,” in Proc. IEEE Conf. CVPR, June 2013. [21] C. P. Huynh, A. Robles-Kelly, and E. R. Hancock, “Shape and refractive index from single-view spectro-polarimetric images,”Int..J.Comput. Vis., volume 101,no.1 [10] Y. Taigman, M. Yang, M. Ranzato, and L. Wolf, “DeepFace: Closing the gap to human-level performance in face verification,” in Proc. IEEE Conf. CVPR, June 2014. [22] J. C. Klontz and A. K. Jain, “A case study on unconstrained facial recognition using the Boston marathon bombings suspects,” Michigan State Univ., Lansing, MI, USA, Tech. Rep. MSU-CSE-13-4, May 2013 [11] Y. Sun, X. Wang, and X. Tang, “Deep learning face representation from predicting 10,000 classes,” in Proc. IEEE Conf. CVPR, June 2014. [12] S. Liao, Z. Lei, D. Yi, and S. Z. Li, “A benchmark study of large-scale unconstrained face recognition,” in Proc. IAPR/IEEE IJCB, September/October 2014. [13] Changxing Ding, Chang Xu, and Dacheng Tao, “Multi-task Pose-Invariant Face Recognition,” Ieee Transactions On Image Processing, Volume 24. [14] National Institute of Standards and Technology (NIST). (Jun. 2013). Face Homepage. [Online]. Available: http://face.nist.gov [15] L. Baoxin and R. Chellappa, “A Generic Approach to Simultaneous Tracking and Verification in Video," IEEE Transactions on Image Processing, volume 11. [16] K. Lee, J. Ho, M. Yang, and D. Kriegman, “Videobased Face Recognition Using Probabilistic Appearance Manifolds," volume 1 [17] N. Ye and T. Sim, “Towards General Motionbased Face Recognition," Proc. 2010 IEEE conference on Computer Vision and Pattern Recognition (CVPR). [18] X. Liu and T. Cheng, “Video-based Face Recognition Using Adaptive Hidden Markov Models.2," Proc. 2003 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, volume 1. [23] www.nec.com/en/global/solutions/security/products/f ace recognition.html.