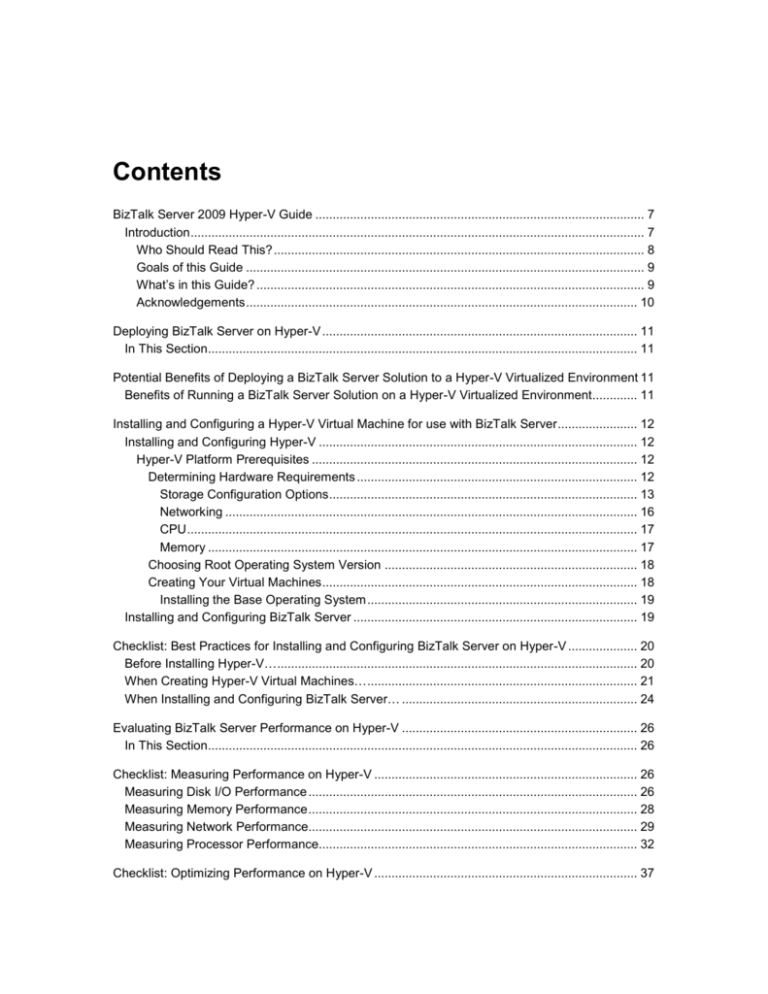

BizTalk Server 2009 Hyper-V Guide - Center
