

Quantitative Imaging Biomarkers:

A Review of Statistical Methods for Technical Performance Assessment

by

Technical Performance Working Group*

*Author list in alphabetical order:

Corresponding Author:

David L. Raunig, Ph.D.

ICON Medical Imaging

2800 Kelly Rd.

Warrington, PA 18976

Abstract

Keywords: quantitative imaging, imaging biomarkers, reliability, linearity, bias,

precision, repeatability, reproducibility, agreement

1. BACKGROUND

Medical imaging, originally developed as a clinical tool for the physician, has advanced to the point that images can now be used to reliably measure structural and functional features. Improved resolution and new modalities have made imaging useful for quantitative measurement of an anatomical region of interest; such a measurement is known as a quantitative imaging biomarker (QIB). QIBs, and comparisons of QIBs across acquisitions, make imaging useful for analyzing changes over time and for estimating the effects of therapeutic intervention. It is imperative, then, that a QIB represent the true feature measurement (e.g., volume), that the measurement can be repeated reliably time after time, and that the measurement system can be transferred to different measurement conditions. Reliability is therefore the ability to represent the true measurement without bias and to do so with minimum variability.

The explosive growth in the use of biomarkers within the last decade was not always preceded by knowledge of whether a QIB could reliably measure the imaging feature of interest. Additionally, the statistical methods used to assess QIB performance in patients are not typically standardized and may even be inappropriate. Examples include slopes reported for non-linear relationships, the choice between standard deviation and coefficient of variation, and the statistical significance of a correlation reported when the correlation itself is poor. This inconsistent use of statistical metrics can be confusing, and two literature references may even appear contradictory. Similarly, a study designed to measure QIB reliability may not consider the design aspects needed to acquire the data that adequately describe QIB performance. The range of QIB values, the sample sizes (including the number and types of image acquisitions) and the conditions for QIB use are all necessary, but they are only some of the things that need to be considered.
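One of the pitfalls named above, relying on correlation when agreement is actually poor, can be made concrete with a small sketch. The example below compares the Pearson correlation with Lin's concordance correlation coefficient (CCC) on hypothetical data in which the measurement is a perfect linear function of the truth but is far from equal to it. The data and the function names `pearson_r` and `lin_ccc` are illustrative assumptions, not part of any working-group method.

```python
from statistics import fmean


def pearson_r(x, y):
    """Pearson correlation: strength of linear association only."""
    mx, my = fmean(x), fmean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / (sxx * syy) ** 0.5


def lin_ccc(x, y):
    """Lin's concordance correlation coefficient, using 1/n moments."""
    n = len(x)
    mx, my = fmean(x), fmean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y)) / n
    sxx = sum((a - mx) ** 2 for a in x) / n
    syy = sum((b - my) ** 2 for b in y) / n
    # The (mx - my)^2 term penalizes a shift between the two means.
    return 2 * sxy / (sxx + syy + (mx - my) ** 2)


# Hypothetical data: the measurement doubles the truth and adds an offset.
truth = [1.0, 2.0, 3.0, 4.0, 5.0]
measured = [2.0 * t + 1.0 for t in truth]

r = pearson_r(truth, measured)
ccc = lin_ccc(truth, measured)
print(f"Pearson r = {r:.3f}, CCC = {ccc:.3f}")
```

In this constructed case the correlation is perfect while the concordance is well below 1, because the CCC also accounts for the scale and location differences that correlation ignores.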

Examples: All TechPerform group: Need at least the following to comment

Lisa McShane

Rich Wahl

Jim Voyvodic

Provide citations and short summary

2. MOTIVATION

<<Describe here why we are doing this (consistency) which may repeat what was said

before >>

RSNA QIBA reference

Goal of this paper: Provide framework for QIB assessment of biomarker

reliability

o Define reliability

o Review of study design considerations when evaluating a biomarker for

use in a clinical trial (endpoint, patient enrollment, etc) Estimability

(All Statisticians should provide input)

o Mention algorithms that determine the measurement

Claims. What can the biomarkers be used for? (Paul)

Once the QIB is measured through the application of a qualified and reliable algorithm, the QIB itself must be evaluated for its ability to perform reliably enough to support informed decisions. The ability to assess the reliability of a QIB is critical to ensuring consistent quality when that QIB is used to measure, for example, a disease feature such as size or biological activity, a pharmacodynamic parameter, or physiological function.

We will refer to these qualities as the technical performance of the QIB and will specifically address the ability of the QIB to provide reliable measurements of change between two different acquisitions. The problem can therefore be stated as a question: are the measurements reliable enough to determine whether a change in the QIB is due to some factor such as treatment or time? The three metrology areas that most directly address this overarching question of technical performance are

• Linearity: The strength of the linear relationship of the biomarker to a known or related standard reference or, more simply, the ability of the QIB to unambiguously measure what it is supposed to measure,

• Repeatability: The ability of the QIB to repeatedly and reliably measure the same feature; that is, whether the variability of the imaging system, which may include the patient, is small enough for the measurement to be used in decision making, and

• Reproducibility: The ability of the QIB to reliably obtain measurements under the different conditions that may be experienced in its use, as might be expected in a multiple-site clinical trial.

<< State that these concepts come from metrology and refer to the metrology

definitions >>

<< Refer to the algorithm performance for how these QIBs were obtained>>

3. OBJECTIVES

The objective of the Technical Performance Working Group is to arrive at a reasonable consensus among clinical, technology and statistical imaging experts that establishes the following:

• Performance metrics needed to measure and report technical performance of a QIB;

• Methodologies to arrive at those metrics;

• Study designs and considerations to arrive at meaningful and interpretable assessment of technical performance of a QIB.

The QIB, in its qualification for use in a clinical trial, will include a claim that specifies the details of that use, including the population, the imaging modality and possible limitations <<ADD CLAIM ITEMS>>. The evidence that the QIB is qualified for the stated use includes the specific evidence obtained for each of the technical performance areas: bias/linearity, repeatability and reproducibility.

4. QIB TYPES AND PERFORMANCE PARAMETERS

The QIBs fall into XX general types. The methods of measurement may be as simple as

electronic or physical calipers (e.g. length) or may be a complex measurement of a

functional parameter and require multiple images and a mathematical algorithm to derive.

These types of measurements are specified in Table 4.1.

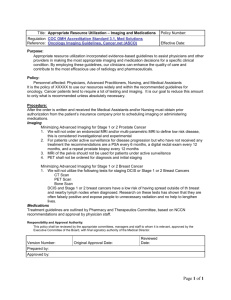

Table 4.1 Types of Measurements

| Measurement Type | Imaging Needs | Measurand |
|---|---|---|
| Structural Extent | Single Image | V, L, A, D |
| Morphological and Texture Features | Single or multiple images | CIR, IR, MS, AF, SGLDM, FD, FT, EM |
| Functional response (Region of Interest) | Multiple Images / Time series | f(t), Ktrans, ROI(t) |
| Physical Properties | Multiple Repeat Images under different acquisition parameters | ADC, BMD |

<< Contrasted to Algorithm Table 1 >>

<< What more needs to be said>>

5. TECHNICAL PERFORMANCE ANALYSIS SETUP

Steps to set up for a technical performance analysis

a. Define QIB and relationship to a truth measurement.

b. Define the study question. Can be defined as the study hypothesis but most

often should be in the form of what is the primary interest of the study and the

context of use of the results

i. Will be directly translatable to the claim and profile

ii. Define the statistical hypotheses if applicable

iii. Define strata that are identified within the claim that will be either

used or tested in the study

c. Define experimental unit,

i. May not be the patient/subject depending on study question

ii. State Inference and reason. Should be consistent with the profile claim

specifications

1. ROI (eg lesion)

2. Patient

d. Define parameters to be measured

e. Design the study as applicable (Provide quick examples that don’t

necessarily need citations)

i. Sample Size and justification which will probably include hypotheses

criteria

ii. Data requirements

1. data range,

2. Number of repeats

3. Etc.

iii. Random v Fixed Effects

iv. Strata/blocks

f. Estimate parameters

i. Test against a null hypothesis if applicable

ii. All parameters need estimates of confidence
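Two of the steps above, defining the experimental unit and attaching an estimate of confidence to every parameter, interact in practice. As a minimal sketch, assuming a percentile bootstrap is acceptable for the study at hand, the example below resamples whole patients (the assumed experimental unit) rather than individual lesions when building an interval for a mean bias. The data, the seed and the names `bootstrap_ci` and `pooled_mean` are hypothetical.

```python
import random
from statistics import fmean


def pooled_mean(patients):
    """Mean of all lesion-level values across the given patients."""
    return fmean([v for lesions in patients for v in lesions])


def bootstrap_ci(units, statistic, n_boot=2000, alpha=0.05, seed=0):
    """Percentile bootstrap interval, resampling whole experimental units."""
    rng = random.Random(seed)
    n = len(units)
    stats = sorted(
        statistic([units[rng.randrange(n)] for _ in range(n)])
        for _ in range(n_boot)
    )
    lower = stats[int((alpha / 2) * n_boot)]
    upper = stats[int((1 - alpha / 2) * n_boot) - 1]
    return lower, upper


# Hypothetical lesion-level biases (measured minus truth), grouped by patient.
patients = [
    [0.4, 0.6],
    [-0.2],
    [0.1, 0.3, 0.2],
    [0.9],
    [-0.1, 0.0],
    [0.5, 0.7],
]

estimate = pooled_mean(patients)
lower, upper = bootstrap_ci(patients, pooled_mean)
print(f"mean bias = {estimate:.3f}, 95% interval = ({lower:.3f}, {upper:.3f})")
```

Resampling patients keeps lesions from the same patient together, so the interval reflects between-patient variability. Resampling lesions directly would treat them as independent, which may not be consistent with the inference stated in the claim.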

6. BIAS AND LINEARITY

The ultimate goal of any QIB is to provide an unbiased estimate of the true physical or derived imaging measurement over the entire range of expected values defined in the claim. For example, when measuring the volume of a solid tumor, the measured volume should, to within random error, represent the actual volume of the lesion, and should do so for all lesion shapes and sizes within the spectrum of lesions covered by the claim.

A systematic bias is a difference from the true value that is caused by the imaging measurement system and is not a function of the actual value. Conversely, a non-systematic bias is a difference from the true value that depends on the actual value in ways that cannot always be determined.

A linear response, as defined here, is a constant proportional relationship between the true value (measurand) and the QIB over the entire range of the measurement (the measurement dynamic range).
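As a minimal sketch of how bias and linearity might be examined when a reference (truth) value is available, the example below fits a straight line of measured value on true value and reports the mean bias. Ordinary least squares is our assumption here and is not prescribed by the text; the data and the function name `ols_fit` are hypothetical.

```python
from statistics import fmean


def ols_fit(truth, measured):
    """Ordinary least-squares slope and intercept of measured on truth."""
    mx, my = fmean(truth), fmean(measured)
    sxx = sum((x - mx) ** 2 for x in truth)
    sxy = sum((x - mx) * (y - my) for x, y in zip(truth, measured))
    slope = sxy / sxx
    intercept = my - slope * mx
    return slope, intercept


# Hypothetical phantom data: known true values and the QIB measured on each.
truth = [10.0, 20.0, 30.0, 40.0, 50.0]
measured = [11.5, 21.0, 32.5, 41.0, 52.5]

slope, intercept = ols_fit(truth, measured)

# Mean bias: average of (measured - truth) over the tested range.
mean_bias = fmean([m - t for m, t in zip(measured, truth)])

# Residuals from the fitted line; a trend in these suggests curvature.
residuals = [m - (intercept + slope * t) for m, t in zip(measured, truth)]

print(f"slope = {slope:.3f}, intercept = {intercept:.3f}")
print(f"mean bias = {mean_bias:.3f}")
```

Under these assumptions, an offset that does not change with the true value appears in the intercept, while a slope different from 1 indicates a bias that grows or shrinks with the true value. Any conclusion applies only to the range of true values actually tested.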

Definitions

o Bias

Motivation: Measure systematic bias; identify non-systematic bias in the new biomarker

Definitions for measuring bias

Consistent with Terminology and Algorithm

Statistical and Descriptive definitions

Ground Truth available

Ground Truth not available

o Linearity

Motivation: Measure biomarker relationship to truth over the entire range of claimed measurements

Definitions for measuring linearity

Range, dynamic range (?), lower and upper limits, monotonicity, curvature

Correlation, curvature

Statistical Methodologies to Assess Technical Linearity Performance

o Standard reference

Methods

Plots: X-Y Scatter,

???

o Imperfect reference

Methods

Plots: BA, X-Y scatter, …

???

o No reference

Methods

Plots: Bar, histogram, …

???

7. REPEATABILITY

Repeated scans over different time intervals provide complementary information,

encompassing different sources of variability. For example, sequential repeats within the

same scanning session capture effects including scanner adjustments and finite SNR on

measurement variability. If the subject is taken out and repositioned, additional

variability due to slight differences in subject positioning will also be captured. Longer

intervals between repeat scans (e.g., days, weeks or months) will be subject to the

aforementioned effects as well as possible scanner performance drift/change and

physiological variation (or disease progression if long enough) over that time period.

The basic repeatability of the measurement per se, in the presumed absence of

physiological change (i.e., short time repeat) is critical to know but might not be

sufficient if the study protocol has treatment superimposed on longer term repeat scans.

In this case, the contribution of variance due both to the imaging measure per se and the

physiological variability will impact study design and interpretation.

Read-reread, or analysis-reanalysis, is especially critical in the case of any subjective

human input (cf. radiological reads) or stochastic elements to computational image

analysis algorithms. Analysis algorithms that are completely deterministic should by

definition not be subject to analysis-reanalysis variability: the same input data should lead

to the same numeric output.
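For a short-interval scan-rescan design with two acquisitions per subject, several of the repeatability metrics considered in this section (within-subject variance, CV, RC and Bland-Altman LOA) can be computed directly from the paired differences. The sketch below assumes no true change between scans, approximately normal differences and a variance that does not depend on the magnitude of the measurement; the data are hypothetical.

```python
import math
from statistics import fmean, stdev

# Hypothetical test-retest data: one QIB value per subject per scan.
scan1 = [10.0, 12.0, 15.0, 20.0]
scan2 = [11.0, 11.0, 17.0, 18.0]

diffs = [b - a for a, b in zip(scan1, scan2)]
n = len(diffs)

# Within-subject variance from paired replicates: sum(d^2) / (2n).
within_var = sum(d * d for d in diffs) / (2 * n)
within_sd = math.sqrt(within_var)

# Within-subject coefficient of variation relative to the overall mean.
overall_mean = fmean(scan1 + scan2)
within_cv = within_sd / overall_mean

# Repeatability coefficient: 1.96 * sqrt(2) * wSD (about 2.77 * wSD).
rc = 1.96 * math.sqrt(2.0) * within_sd

# Bland-Altman 95% limits of agreement on the paired differences.
mean_diff = fmean(diffs)
sd_diff = stdev(diffs)
loa = (mean_diff - 1.96 * sd_diff, mean_diff + 1.96 * sd_diff)

print(f"wSD = {within_sd:.3f}, wCV = {within_cv:.3%}, RC = {rc:.3f}")
print(f"LOA = ({loa[0]:.3f}, {loa[1]:.3f})")
```

If the variability grows with the size of the measurement, the CV (or an analysis on a log scale) may be a more suitable summary than the SD-based RC; that choice should follow from the data and the claim.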

o Definitions

VIM Definition (Nick, Mary, Lisa, Marina)

Practical definition(s) that address concerns by AJS

Within-Patient v within-(patient+reviewer+…)

o Statistical Methodologies to Assess Technical Repeatability Performance

Scan-Rescan

Test-Retest in all of its varieties.

Read-Reread (maybe)

Plots: BA with LOA;

Point this toward the desired claim

o Steps to set up for a technical performance analysis

Define QIB and repeatability conditions and source of variance to be

measured.

Within-(patient+reader+instrument)

Within-(patient+reader)+between-patient

Etc.

Define the study question much as before. Should directly relate to the

desired inference (eg. instrument, patient, patient+instrument, etc).

What is being repeated?

Will be directly translatable to the claim and profile

Define the statistical hypotheses if applicable

Strata are generally not included here but may be as part of the

design to evaluate different repeatability

Define experimental unit (related to Study question)

May not be the patient/subject depending on study question

(eg. individual lesion)

State Inference and reason. Should be consistent with the

profile claim specifications

o ROI (eg lesion)

o Patient

Define parameters to be measured

Variance and/or variance matrix

CV

ICC, CCC

RC

LOA

8. REPRODUCIBILITY

For the QIB to be reliable for use in different conditions, the performance of the QIB

under those conditions needs to be assessed. The conditions for a clinical trial may

necessitate different patient populations, scanners, instrument technicians, image

raters/reviewers, scanning conditions and many more that may be specific to a modality.
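One way to quantify the contribution of a condition such as site or scanner is to separate the between-condition and within-condition variance components. The sketch below assumes a balanced design in which the same object is measured the same number of times at each site, and uses method-of-moments estimates from a one-way random-effects analysis of variance. The site labels and values are hypothetical, and other models (for example, crossed reader-by-scanner designs) would need a different analysis.

```python
from statistics import fmean

# Hypothetical balanced design: same object measured 3 times at each site.
measurements = {
    "site_A": [10.0, 11.0, 9.0],
    "site_B": [12.0, 13.0, 11.0],
    "site_C": [8.0, 9.0, 7.0],
}

k = len(measurements)                          # number of sites
n = len(next(iter(measurements.values())))     # replicates per site

all_values = [v for vals in measurements.values() for v in vals]
grand_mean = fmean(all_values)
site_means = {s: fmean(vals) for s, vals in measurements.items()}

# One-way ANOVA sums of squares and mean squares.
ss_between = n * sum((m - grand_mean) ** 2 for m in site_means.values())
ss_within = sum(
    (v - site_means[s]) ** 2 for s, vals in measurements.items() for v in vals
)
ms_between = ss_between / (k - 1)
ms_within = ss_within / (k * (n - 1))

# Method-of-moments variance components; negative estimates are set to 0.
var_within = ms_within
var_between = max(0.0, (ms_between - ms_within) / n)

# Reproducibility SD combines between-site and within-site variability.
reproducibility_sd = (var_between + var_within) ** 0.5

print(f"within-site variance  = {var_within:.3f}")
print(f"between-site variance = {var_between:.3f}")
print(f"reproducibility SD    = {reproducibility_sd:.3f}")
```

With only a few sites, the between-site variance is estimated from very few degrees of freedom, so its confidence interval will be wide; this is one reason the number of conditions sampled matters as much as the number of subjects.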

Convert to text

{

Do reliability concepts and analysis methods differ for different uses of QIBs?

Screening & diagnosis

Risk stratification

Early response to therapy

Surrogate endpoint of clinical response to therapy

Do analysis methods differ for different aspects of RR? (this is the part we’d expected to

do, not fleshed out yet..)

Different readers, same image analysis approach

Different readers, different image analysis approach

Different scanner manufacturer

Different reconstruction method on same scanner manufacturer

Reproducibility of patient preparation during same disease state

Phantom studies may have a gold standard. Others will not.

analysis methods for big versus small perturbations?

analysis methods for comparing versions of the QIB, versus estimating LOA

}

o Definitions

VIM / ASTM / …

Practical definition

Considerations that determine what the reproducible factors are

Should tie back to profile specifications

o Steps to set up for a technical performance analysis

Define QIB

Define the study question. Can be defined as the study hypothesis but

most often should be in the form of what is the primary interest of the

study and the context of use of the results

Will be directly translatable to the claim and profile

Define the statistical hypotheses if applicable

Strata are generally the question of interest, unlike repeatability (e.g., scanner, country, populations, diseases, etc.)

How are strata compared? Means, variances or both

How do we separate differences in patient samples from differences in reproducible factors? This section needs some thought

Define experimental unit

Site, reader, scanner, country, etc.

Brief explanation.

Define parameters to be measured

Means

Variance

ICC

RC

LOA

Significance covariate

Examples

9. STUDY DESIGN

For each of the above performance metrics to represent the true performance of the QIB under the profile, the experiment that collects the images and measures the data must be carefully and systematically designed, with the study question in mind and specific endpoints defined. Too often, published reports of QIB performance fall victim to haphazard designs that limit the conclusions to far short of the desired goal, or even produce misleading results that have no real utility when that information is used to design the next trial.

Study designs that hold one factor constant, or limit it to a small set of conditions, while varying the others define the performance only under the conditions dictated by that one factor and cannot be generalized to a greater set of conditions. Conversely, study designs that spread the available study subjects or factors over too wide a range may not provide enough information to draw any conclusions.

<< 2-3 Examples in the literature >>

9.1. LINEARITY

9.2. REPEATABILITY

9.3. REPRODUCIBILITY

10. CLAIMS AND PROFILES

11. DISCUSSION AND FUTURE DIRECTIONS