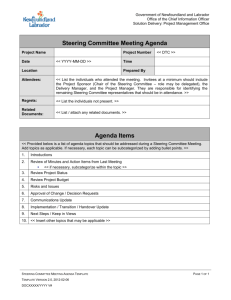

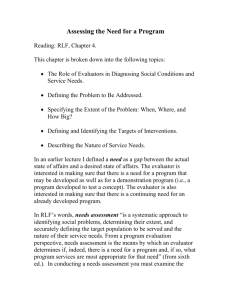

Definition of evaluation and its types
