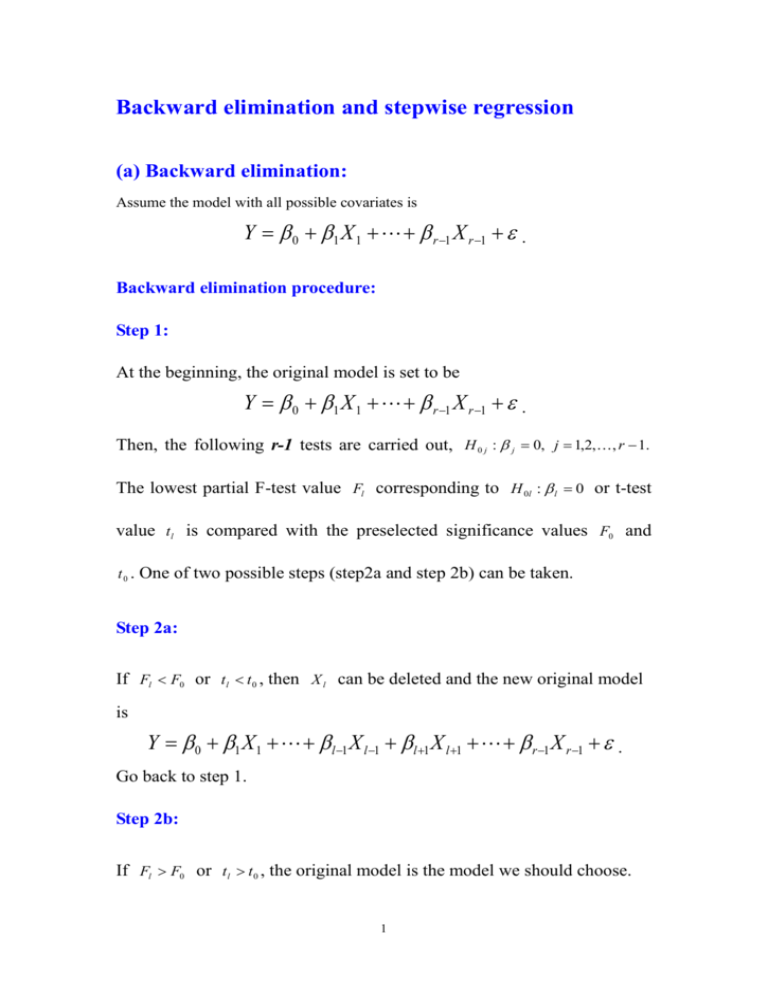

Backward elimination and stepwise regression


(a) Backward elimination: Assume the model with all possible covariates is

$$Y = \beta_0 + \beta_1 X_1 + \cdots + \beta_{r-1} X_{r-1}.$$

Backward elimination procedure:

Step 1: At the beginning, the original model is set to $Y = \beta_0 + \beta_1 X_1 + \cdots + \beta_{r-1} X_{r-1}$. Then the following $r-1$ tests are carried out:

$$H_{0j}: \beta_j = 0, \quad j = 1, 2, \ldots, r-1.$$

The lowest partial F-test value $F_l$, corresponding to $H_{0l}: \beta_l = 0$ (equivalently, the smallest absolute t-test value $|t_l|$, since $F_l = t_l^2$), is compared with the preselected critical value $F_0$ (or $t_0$). One of two possible steps (Step 2a or Step 2b) is then taken.

Step 2a: If $F_l < F_0$ (or $|t_l| < t_0$), then $X_l$ can be deleted and the new original model is

$$Y = \beta_0 + \beta_1 X_1 + \cdots + \beta_{l-1} X_{l-1} + \beta_{l+1} X_{l+1} + \cdots + \beta_{r-1} X_{r-1}.$$

Go back to Step 1.

Step 2b: If $F_l \ge F_0$ (or $|t_l| \ge t_0$), the original model is the model we should choose.

Example (continued): Suppose the preselected significance level is 0.1. Thus, $F_0 = F_{1,8,0.9} \approx 3.46$.

Step 1: The original model is $Y = \beta_0 + \beta_1 X_1 + \beta_2 X_2 + \beta_3 X_3 + \beta_4 X_4$. $F_3 = 0.018$, corresponding to $H_{03}: \beta_3 = 0$, is the smallest partial F value.

Step 2a: $F_3 = 0.018 < F_0 \approx 3.46$. Thus, $X_3$ can be deleted. Go back to Step 1.

Step 1: The new original model is $Y = \beta_0 + \beta_1 X_1 + \beta_2 X_2 + \beta_4 X_4$. $F_4 = 1.86$, corresponding to $H_{04}: \beta_4 = 0$, is the smallest partial F value.

Step 2a: $F_4 = 1.86 < F_0 \approx 3.46$. Thus, $X_4$ can be deleted. Go back to Step 1.

Step 1: The new original model is $Y = \beta_0 + \beta_1 X_1 + \beta_2 X_2$. $F_1 = 144$, corresponding to $H_{01}: \beta_1 = 0$, is the smallest partial F value.

Step 2b: $F_1 = 144 > F_0 \approx 3.46$. Thus, $Y = \beta_0 + \beta_1 X_1 + \beta_2 X_2$ is the selected model.

(b) Stepwise regression: The stepwise regression procedure uses a statistical quantity, the partial correlation, to decide which new covariate to add. We introduce partial correlation first.

Partial correlation: Assume the model is $Y = \beta_0 + \beta_1 X_1 + \cdots + \beta_{r-1} X_{r-1}$. The partial correlation of $X_j$ and $Y$ given the other covariates, denoted by $r_{YX_j \cdot (X_1, \ldots, X_{j-1}, X_{j+1}, \ldots, X_{r-1})}$, can be obtained as follows:

1. Fit the model $Y = \beta_0 + \beta_1 X_1 + \cdots + \beta_{j-1} X_{j-1} + \beta_{j+1} X_{j+1} + \cdots + \beta_{r-1} X_{r-1}$ and obtain the residuals $e_1^Y, e_2^Y, \ldots, e_n^Y$. Also, fit the model
$$X_j = \alpha_0 + \alpha_1 X_1 + \cdots + \alpha_{j-1} X_{j-1} + \alpha_{j+1} X_{j+1} + \cdots + \alpha_{r-1} X_{r-1}$$

and obtain the residuals $e_1^{X_j}, e_2^{X_j}, \ldots, e_n^{X_j}$.

2. Compute

$$r_{YX_j \cdot (X_1, \ldots, X_{j-1}, X_{j+1}, \ldots, X_{r-1})} = \frac{\sum_{i=1}^{n} (e_i^Y - \bar{e}^Y)(e_i^{X_j} - \bar{e}^{X_j})}{\sqrt{\sum_{i=1}^{n} (e_i^Y - \bar{e}^Y)^2 \, \sum_{i=1}^{n} (e_i^{X_j} - \bar{e}^{X_j})^2}},$$

where $\bar{e}^Y = \frac{1}{n}\sum_{i=1}^{n} e_i^Y$ and $\bar{e}^{X_j} = \frac{1}{n}\sum_{i=1}^{n} e_i^{X_j}$. That is, the partial correlation is the ordinary correlation coefficient between the two sets of residuals.

Stepwise regression procedure: The original model is $Y = \beta_0$. There are $r-1$ covariates, $X_1, X_2, \ldots, X_{r-1}$.

Step 1: Select the variable most correlated with $Y$ (largest absolute correlation coefficient), say $X_{i_1}$. Fit the model $Y = \beta_0 + \beta_{i_1} X_{i_1}$ and check whether $X_{i_1}$ is significant. If not, then $Y = \beta_0$ is the best model. Otherwise, the new original model is $Y = \beta_0 + \beta_{i_1} X_{i_1}$; go to Step 2.

Step 2: Examine the partial correlations $r_{YX_j \cdot X_{i_1}}$, $j \ne i_1$. Find the covariate $X_{i_2}$ with the largest absolute partial correlation $r_{YX_j \cdot X_{i_1}}$. Then fit $Y = \beta_0 + \beta_{i_1} X_{i_1} + \beta_{i_2} X_{i_2}$ and obtain the partial F-values $F_{i_1}$, corresponding to $H_0: \beta_{i_1} = 0$, and $F_{i_2}$, corresponding to $H_0: \beta_{i_2} = 0$. Go to Step 3.

Step 3: The smallest partial F-value $F_l$ (one of $F_{i_1}$ and $F_{i_2}$) is compared with the preselected critical value $F_0$. There are two possibilities:

(a) If $F_l < F_0$, then delete the covariate corresponding to $F_l$ and go back to Step 2. Note that if $F_l = F_{i_2}$, then examine the partial correlations $r_{YX_j \cdot X_{i_1}}$, $j \ne i_1, i_2$.

(b) If $F_l \ge F_0$, then $Y = \beta_0 + \beta_{i_1} X_{i_1} + \beta_{i_2} X_{i_2}$ is the new original model. Then go back to Step 2, but now examine the partial correlations $r_{YX_j \cdot (X_{i_1}, X_{i_2})}$, $j \ne i_1, i_2$.

The procedure stops automatically when no variable in the new original model can be removed and none of the next-best candidates can be retained in the new original model. Then the new original model is our selected model.
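Both procedures can be sketched in Python with NumPy. This is a minimal illustration under stated assumptions, not code from these notes. The function names (`fit_rss`, `partial_f`, `backward_elimination`, `partial_corr`, `stepwise`) are hypothetical. `X` is assumed to be a full-rank $n \times (r-1)$ array whose columns are the covariates. The critical value `F0` is passed in directly (for example, about 3.46 as in the example) instead of being recomputed from each model's residual degrees of freedom. In `stepwise`, a covariate that fails right after entering is skipped until the model changes, following the note in Step 3(a). `max_iter` is an added guard against cycling and is not part of the procedure.

```python
import numpy as np


def fit_rss(y, cols):
    """OLS of y on an intercept plus the given columns; returns (RSS, residuals)."""
    A = np.column_stack([np.ones(len(y))] + list(cols))
    coef, *_ = np.linalg.lstsq(A, y, rcond=None)
    resid = y - A @ coef
    return float(resid @ resid), resid


def partial_f(y, X, model, j):
    """Partial F statistic for H0: beta_j = 0 in the model using columns `model`."""
    rss_full, _ = fit_rss(y, [X[:, k] for k in model])
    rss_reduced, _ = fit_rss(y, [X[:, k] for k in model if k != j])
    df_resid = len(y) - len(model) - 1  # n minus number of betas (incl. intercept)
    return (rss_reduced - rss_full) / (rss_full / df_resid)


def backward_elimination(y, X, F0):
    """Procedure (a): start from all covariates and repeatedly drop the one with
    the smallest partial F while that F is below F0 (assumed fixed critical value)."""
    model = list(range(X.shape[1]))
    while model:
        fs = {j: partial_f(y, X, model, j) for j in model}  # Step 1
        weakest = min(fs, key=fs.get)
        if fs[weakest] >= F0:  # Step 2b: keep the current model
            break
        model.remove(weakest)  # Step 2a: delete X_l, go back to Step 1
    return model


def partial_corr(y, X, j, given):
    """Partial correlation of X_j and Y given the columns in `given`: the
    correlation between the two residual vectors (steps 1-2 of the definition)."""
    _, e_y = fit_rss(y, [X[:, k] for k in given])
    _, e_x = fit_rss(X[:, j], [X[:, k] for k in given])
    e_y = e_y - e_y.mean()
    e_x = e_x - e_x.mean()
    return float(e_y @ e_x / np.sqrt((e_y @ e_y) * (e_x @ e_x)))


def stepwise(y, X, F0, max_iter=100):
    """Procedure (b): add the candidate with the largest |partial correlation|,
    then use partial F-values to decide whether to drop one covariate."""
    model, excluded = [], set()
    for _ in range(max_iter):  # assumption: guard against cycling
        candidates = [j for j in range(X.shape[1])
                      if j not in model and j not in excluded]
        if not candidates:
            break  # no candidate left to try
        # With an empty model this is the ordinary correlation (Step 1).
        new = max(candidates, key=lambda j: abs(partial_corr(y, X, j, model)))
        trial = model + [new]
        fs = {j: partial_f(y, X, trial, j) for j in trial}
        weakest = min(fs, key=fs.get)
        if fs[weakest] >= F0:      # Step 3(b): keep the enlarged model
            model, excluded = trial, set()
        elif weakest == new:       # the newly added covariate is not retained
            if not model:          # Step 1: Y = beta_0 is the best model
                break
            excluded.add(new)      # note in Step 3(a): try the next-best one
        else:                      # Step 3(a): drop an earlier covariate
            trial.remove(weakest)
            model, excluded = trial, set()
    return model
```

Using a single `F0` mirrors the worked example above. A stricter variant would compare each partial F with $F_{1,\,n-k-1,\,0.9}$, where $k$ is the number of covariates in the model being tested.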