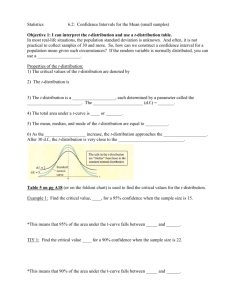

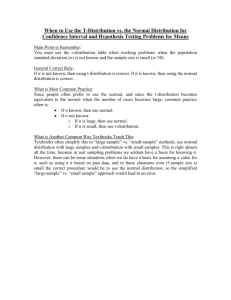

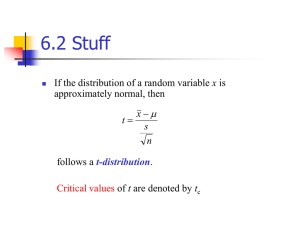

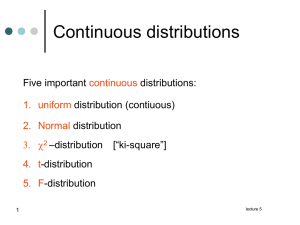

(Feb 7: Non-parametric statistics) It seems like ranking observations reveals relatively little about the spread of the data. Even so, in what types of circumstances would a non-parametric statistical test be better than a parametric one? In research, how common is it to find data that doesn’t seem to follow any sort of distribution?

Very common, to answer your last question first. Most distributions are summarized by a few parameters (for the normal it’s just 2), and it’s often unrealistic to think that an entire rich dataset can be “explained” by just a few summary statistics or parameters. If a dataset has many outliers, heavy skew, more than one mode, or just shows some unusual pattern, a parametric procedure may be unreliable and give poor results. In this setting non-parametric procedures are preferred. They are also often easier to compute, and their underlying theory is more accessible. Non-parametric procedures can also give conservative “bounds” on results. For instance, if both your parametric and non-parametric tests show that an effect is significant, then this gives added confidence in the result.

You are correct that ranking observations reveals little about the spread, but that is precisely the point. It means that the procedure is insensitive to the actual values of the data. It’s analogous to why the median is useful as a measure of center. The median reveals little about the extreme values of the data, and therefore is not sensitive to outliers. If the data isn’t symmetric, the median is often preferred over the mean as a measure of center. In the same way, if the data isn’t easily summarized by a few parameters, non-parametric procedures are preferred.

One other advantage of the rank-based methods presented in Chapter 11 is that they are sometimes the only procedures that can be used with ordinal-type data. Ordinal data refers to measurements where only the comparisons “greater”, “less”, or “equal” between measurements are relevant.
For instance, when a person is asked to assign a number between 1 and 10 to their political position, where 1=left-wing and 10=right-wing, one is using an ordinal scale of measurement. Instead of 1 to 10 we could have used 10 to 100 or any increasing list of 10 numbers. For such data, what’s important isn’t the value of the numbers themselves but the comparisons between numbers. Rank-based methods are often the most appropriate procedures to use with ordinal data. Note, however, that the applicability of non-parametric statistics is much wider than this.

(Feb 4: t-distributions) I’m confused about the use of the t-distribution. The t-distribution is like a normal distribution, but why do we use the t? What makes this distribution so useful?

If you have a sample from a normal population, the mean of that sample will also have a normal distribution. If you standardize the mean, that is, subtract off the population mean (mu) and divide by the population standard deviation over root n (sigma/sqrt(n)), then the resulting random quantity, (x-bar - mu) / (sigma / sqrt(n)), has a standard normal distribution, N(0,1).

However, if you replace the population standard deviation with the sample standard deviation s (which is itself random, being based on the sample), the quantity (x-bar - mu) / (s / sqrt(n)) does not have a normal distribution. Theory tells us it has a t-distribution. That t-distribution also depends on n through a parameter which we call the “degrees of freedom”. We say it has a t-distribution with n-1 degrees of freedom.

The lab a few classes ago was meant to look at the differences between the normal distribution and the t-distribution. When n is large they’re fairly close. But when n is small the t-distribution has a lot more spread to it. So for small sample sizes, when the population is fairly normal, we need to use t-related procedures, as opposed to z-related (normal) procedures, to get accurate confidence intervals and hypothesis test results based on the sample mean.
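
A quick simulation can make the difference between the two standardized quantities concrete. The sketch below is only an illustration and is not the class lab: the function name `tail_fractions`, the sample size n = 5, the 20,000 repetitions, and the cutoff 1.96 are all assumptions chosen for the example. It draws many small samples from a normal population and counts how often each standardized mean lands beyond ±1.96.

```python
import random
import statistics
from math import sqrt


def tail_fractions(n=5, reps=20000, mu=0.0, sigma=1.0, cutoff=1.96, seed=0):
    """Return the fraction of samples whose z- and t-statistics exceed the cutoff.

    All defaults are illustrative assumptions, not values from the course.
    """
    rng = random.Random(seed)  # fixed seed so the run is repeatable
    z_hits = 0
    t_hits = 0
    for _ in range(reps):
        sample = [rng.gauss(mu, sigma) for _ in range(n)]
        xbar = statistics.fmean(sample)
        s = statistics.stdev(sample)  # sample standard deviation (divides by n-1)
        z = (xbar - mu) / (sigma / sqrt(n))  # uses the known population sigma
        t = (xbar - mu) / (s / sqrt(n))      # uses the estimated s instead
        z_hits += abs(z) > cutoff
        t_hits += abs(t) > cutoff
    return z_hits / reps, t_hits / reps


z_frac, t_frac = tail_fractions()
print(f"fraction with |z| > 1.96: {z_frac:.3f}")
print(f"fraction with |t| > 1.96: {t_frac:.3f}")
```

The z-based fraction should come out near 0.05, as the normal distribution predicts, while the t-based fraction should be noticeably larger. That extra tail area is the additional spread of the t-distribution with n-1 = 4 degrees of freedom, and it is why z cutoffs give intervals that are too narrow for small samples.
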

Related to this question is . . .

(Feb 4: Degrees of freedom) When samples of two populations are used in the t test, they are assumed to have the same variance. When this isn’t the case, the degrees of freedom for a t distribution must be calculated from the estimated variances of the populations. Conceptually, what does variance have to do with degrees of freedom? Also, what exactly are degrees of freedom and why do we consider them in t-distributions?

There are two answers to this:

(a) One simple explanation is “it’s just a word!” That is, the t-distribution is a distribution that depends on n through some parameter (we could have called it theta). We just happen to give that parameter the ungodly name of “degrees of freedom.” The reason the t-distribution in statistical inference depends on the sample size n (unlike the normal distribution) is that we are using an estimator for the population variance (that is, using s for sigma), and that estimator depends on n. (The larger n is, the better the variance estimate becomes.)

(b) To give more explanation behind the terminology, the concept of degrees of freedom is widespread in statistics. Roughly, it is the number of “independent” or “free” measurements available to estimate the variability. With a sample of size n we start off with n independent measurements. However, the sample variance relies on x-bar, which, in a sense, uses up one piece of information. That is, if I have n measurements and the average of those measurements, I have a redundant piece of information: from n-1 measurements and x-bar I can calculate the nth measurement. In a two-sample t-test (with equal variances assumed) on two samples of sizes n and m, respectively, we have n+m total measurements. But we use x-bar and y-bar, the means of the two groups, giving up 2 “degrees of freedom.” That’s why the test statistic for that test has n+m-2 degrees of freedom.
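
The “redundant piece of information” argument can be checked with a few lines of arithmetic. The sketch below is a toy illustration only: the four data values and the helper names `recover_last` and `pooled_df` are made up for the example.

```python
def recover_last(known, xbar):
    """Recover the nth measurement from the other n-1 measurements and the mean."""
    n = len(known) + 1
    return n * xbar - sum(known)


def pooled_df(n, m):
    """Degrees of freedom for the equal-variance two-sample t-test."""
    return n + m - 2


data = [4.0, 7.0, 1.0, 8.0]      # hypothetical sample, n = 4
xbar = sum(data) / len(data)     # sample mean

# Hide the last value and rebuild it from the first n-1 values plus x-bar.
recovered = recover_last(data[:-1], xbar)

# Deviations from the mean always sum to zero, so only n-1 of them are free.
deviations = [x - xbar for x in data]

# The sample variance therefore divides by n-1, not n.
s2 = sum(d * d for d in deviations) / (len(data) - 1)

print(recovered)
print(sum(deviations))
print(s2)
print(pooled_df(10, 12))
```

Once x-bar is known, the last measurement carries no new information about the variability, which is the sense in which one degree of freedom is “used up.” With two groups, the same thing happens once per group mean, giving n+m-2.
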

(Feb 4: What if the experiment isn’t truly random) If after an experiment was done we realize that the controls were not picked at random, what can we do to correct for this error? Do we have to start over again?

A good statistical design must put its top priority on getting good data. This means getting a sample that is as representative of the population as possible, with as little bias as possible. The most basic assumption underlying all the procedures we do is that we have a genuine random sample. Without that, all of the inference fails. So, to answer your question, we might very well have to start over at square one.