JCPSupporting

advertisement

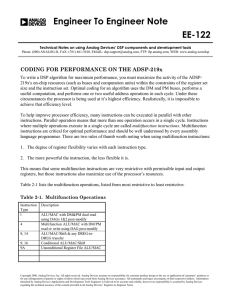

Supporting Information for

Stochastic mapping of the Michaelis-Menten mechanism

Éva Dóka, Gábor Lente*

Department of Inorganic and Analytical Chemistry, University of Debrecen, Debrecen, Hungary. Fax: 36-52-518-660; Tel: 36-52-512-900 Ext.: 22373; E-mail: lenteg@delfin.unideb.hu

Derivation of equations

Equations (1)-(3): These are literature equations.

Equation (4): The number of all possible states can be derived by counting all (e,s) pairs that are physically meaningful. A pair (e,s) is physically meaningful if e, s, es, and p are all nonnegative integers:

$$e_0 \le s_0$$

$$es = e_0 - e \ge 0 \;\Rightarrow\; e \le e_0$$

$$p = s_0 - s - e_0 + e \ge 0 \;\Rightarrow\; s - e \le s_0 - e_0$$

The number of possible states is counted in the following table:

| value of s | possible values of e | number of cases |
|---|---|---|
| $0$ | $0, 1, \dots, e_0$ | $e_0 + 1$ |
| $1$ | $0, 1, \dots, e_0$ | $e_0 + 1$ |
| ... $j$ ... | $0, 1, \dots, e_0$ | $e_0 + 1$ |
| $s_0 - e_0$ | $0, 1, \dots, e_0$ | $e_0 + 1$ |
| $s_0 - e_0 + 1$ | $1, 2, \dots, e_0$ | $e_0$ |
| $s_0 - e_0 + 2$ | $2, 3, \dots, e_0$ | $e_0 - 1$ |
| ... $s_0 - e_0 + j$ ... | $j, j+1, \dots, e_0$ | $e_0 - j + 1$ |
| $s_0$ | $e_0$ | $1$ |

Therefore, the overall number of possible cases is:

$$m = (s_0 - e_0 + 1)(e_0 + 1) + \sum_{i=1}^{e_0} i = (s_0 - e_0 + 1)(e_0 + 1) + \frac{e_0(e_0+1)}{2} = \left(s_0 - \frac{e_0}{2} + 1\right)(e_0 + 1)$$

This is identical to equation (4).

Equation (5): The master equation can simply be derived from the scheme of the reaction with the transition probabilities. The following scheme shows the possible transitions.

[Scheme: possible transitions between the states]

Equation (6): A simple substitution into the formula can be used to check the validity of this enumerating function:

$$f(e,s) = \begin{cases} (s_0 - s - e_0 + e + 1)(e_0 + 1) - e & \text{if } e \le s \\[4pt] (s_0 - s - e_0 + e + 1)(e_0 + 1) - e - \dfrac{(e - s - 1)(e - s)}{2} & \text{if } e > s \end{cases}$$

For example, for $s_0 = 6$ and $e_0 = 3$ ($m = 22$):

| e | 3 | 2 | 1 | 0 | 3 | 2 | 1 | 0 | 3 | 2 | 1 |
|---|---|---|---|---|---|---|---|---|---|---|---|
| s | 6 | 5 | 4 | 3 | 5 | 4 | 3 | 2 | 4 | 3 | 2 |
| f(e,s) | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 |

| e | 0 | 3 | 2 | 1 | 0 | 3 | 2 | 1 | 3 | 2 | 3 |
|---|---|---|---|---|---|---|---|---|---|---|---|
| s | 1 | 3 | 2 | 1 | 0 | 2 | 1 | 0 | 1 | 0 | 0 |
| f(e,s) | 12 | 13 | 14 | 15 | 16 | 17 | 18 | 19 | 20 | 21 | 22 |

The logic behind the enumerating function can be seen better if it is given in terms of es ($= e_0 - e$) and p ($= s_0 - s - e_0 + e$):

$$f(e,s) = \begin{cases} p(e_0 + 1) + es + 1 & \text{if } p \le s_0 - e_0 \\[4pt] p(e_0 + 1) + es + 1 - \dfrac{(s_0 - e_0 - p + 1)(s_0 - e_0 - p)}{2} & \text{if } p > s_0 - e_0 \end{cases}$$

In the following table, each cell gives the state (e,s) followed by its index f(e,s); a dash marks a combination of es and p that is not physically meaningful.

| es \ p | $0$ | $1$ | $2$ | .... | $s_0-e_0$ | $s_0-e_0+1$ | .... | $s_0-1$ | $s_0$ |
|---|---|---|---|---|---|---|---|---|---|
| $0$ | $(e_0,s_0)$: $1$ | $(e_0,s_0-1)$: $(e_0+1)+1$ | $(e_0,s_0-2)$: $2(e_0+1)+1$ | .... | $(e_0,e_0)$: $(s_0-e_0)(e_0+1)+1$ | $(e_0,e_0-1)$: $(s_0-e_0+1)(e_0+1)+1$ | .... | $(e_0,1)$: $(s_0-e_0/2+1)(e_0+1)-2$ | $(e_0,0)$: $(s_0-e_0/2+1)(e_0+1)$ |
| $1$ | $(e_0-1,s_0-1)$: $2$ | $(e_0-1,s_0-2)$: $(e_0+1)+2$ | $(e_0-1,s_0-3)$: $2(e_0+1)+2$ | .... | $(e_0-1,e_0-1)$: $(s_0-e_0)(e_0+1)+2$ | $(e_0-1,e_0-2)$: $(s_0-e_0+1)(e_0+1)+2$ | .... | $(e_0-1,0)$: $(s_0-e_0/2+1)(e_0+1)-1$ | - |
| $2$ | $(e_0-2,s_0-2)$: $3$ | $(e_0-2,s_0-3)$: $(e_0+1)+3$ | $(e_0-2,s_0-4)$: $2(e_0+1)+3$ | .... | $(e_0-2,e_0-2)$: $(s_0-e_0)(e_0+1)+3$ | $(e_0-2,e_0-3)$: $(s_0-e_0+1)(e_0+1)+3$ | .... | - | - |
| .... | .... | .... | .... | .... | .... | .... | .... | - | - |
| $e_0-1$ | $(1,s_0-e_0+1)$: $e_0$ | $(1,s_0-e_0)$: $(e_0+1)+e_0$ | $(1,s_0-e_0-1)$: $2(e_0+1)+e_0$ | .... | $(1,1)$: $(s_0-e_0)(e_0+1)+e_0$ | $(1,0)$: $(s_0-e_0+1)(e_0+1)+e_0$ | - | - | - |
| $e_0$ | $(0,s_0-e_0)$: $e_0+1$ | $(0,s_0-e_0-1)$: $(e_0+1)+e_0+1$ | $(0,s_0-e_0-2)$: $2(e_0+1)+e_0+1$ | .... | $(0,0)$: $(s_0-e_0)(e_0+1)+e_0+1$ | - | - | - | - |

Equations (7)-(10): These are written using the statistical definition of expectation and standard deviation.

Equation (11): This is the assumption made in the approximation. The analogy of this with the deterministic steady-state assumption is given by the fact that the S values satisfy the following equation:

$$\begin{aligned} 0 = {} & -\left[\frac{k_1}{N_A V}\,e\,s + (k_{-1} + k_2)(e_0 - e)\right] S_{e_0-e,\;s_0-s+e-e_0} + \frac{k_1}{N_A V}(e+1)(s+1)\,S_{e_0-e-1,\;s_0-s+e-e_0} \\ & + k_{-1}(e_0 - e + 1)\,S_{e_0-e+1,\;s_0-s+e-e_0} + k_2(e_0 - e + 1)\,S_{e_0-e+1,\;s_0-s+e-e_0} \end{aligned}$$

Equation (12): This equation is derived from equation (5), into which equation (11) is substituted:

$$\begin{aligned} \frac{dR_{s_0-s+e-e_0}(t)}{dt}\,S_{e_0-e,\;s_0-s+e-e_0} = {} & -\left[\frac{k_1}{N_A V}\,e\,s + (k_{-1} + k_2)(e_0 - e)\right] R_{s_0-s+e-e_0}(t)\,S_{e_0-e,\;s_0-s+e-e_0} \\ & + \frac{k_1}{N_A V}(e+1)(s+1)\,R_{s_0-s+e-e_0}(t)\,S_{e_0-e-1,\;s_0-s+e-e_0} \\ & + k_{-1}(e_0 - e + 1)\,R_{s_0-s+e-e_0}(t)\,S_{e_0-e+1,\;s_0-s+e-e_0} \\ & + k_2(e_0 - e + 1)\,R_{s_0-s+e-e_0-1}(t)\,S_{e_0-e+1,\;s_0-s+e-e_0-1} \end{aligned}$$

Summing these equations over all states with $p = s_0 - s + e - e_0$ held constant, i.e. over $i = e_0 - e$ from 0 to α (the definition of α is given in equation (13) in the main text), gives:

$$\begin{aligned} \frac{dR_p(t)}{dt}\sum_{i=0}^{\alpha} S_{i,p} = {} & -\frac{k_1}{N_A V} R_p(t) \sum_{i=0}^{\alpha} \big((e_0 - i)(s_0 - p - i)\,S_{i,p}\big) - (k_{-1} + k_2)\,R_p(t) \sum_{i=0}^{\alpha} (i\,S_{i,p}) \\ & + \frac{k_1}{N_A V} R_p(t) \sum_{i=1}^{\alpha} \big((e_0 - i + 1)(s_0 - p - i + 1)\,S_{i-1,p}\big) + k_{-1} R_p(t) \sum_{i=0}^{\alpha-1} \big((i+1)\,S_{i+1,p}\big) \\ & + k_2 R_{p-1}(t) \sum_{i=0}^{\alpha-1} \big((i+1)\,S_{i+1,p-1}\big) \end{aligned}$$

Most of the terms in this equation occur with both positive and negative signs and cancel after shifting the summation index. It must also be remembered that equation (13) ensures that $(e_0 - \alpha)(s_0 - p - \alpha) = 0$. What remains is:

$$\frac{dR_p(t)}{dt}\sum_{i=0}^{\alpha} S_{i,p} = -k_2 R_p(t) \sum_{i=0}^{\alpha} (i\,S_{i,p}) + k_2 R_{p-1}(t) \sum_{i=1}^{\alpha} (i\,S_{i,p-1})$$

Considering the definition given in equation (13) and the fact that $\sum_{i=0}^{\alpha} S_{i,p} = 1$, this is identical to equation (12).

Notes on solving equation (12): This equation is easily solved in an iterative fashion. The general solution is:

$$R_p(t) = \sum_{i=0}^{p} A_{p,i}\, e^{-k_2 \langle ES \rangle_i t}$$

The coefficients $A_{p,i}$ can be calculated iteratively:

$$A_{0,0} = 1$$

$$A_{p,i} = \begin{cases} \dfrac{A_{p-1,i}\,\langle ES \rangle_{p-1}}{\langle ES \rangle_p - \langle ES \rangle_i} & \text{for } i < p \\[8pt] -\displaystyle\sum_{i=0}^{p-1} A_{p,i} & \text{for } i = p \end{cases}$$

Equation (13): This equation displays the definition of the expectation for the number of ES molecules at constant p.

Equation (14): This equation can be directly obtained from statistical thermodynamics using the concept of partition functions. The following considerations are necessary for this:

The energy term is directly related to the Michaelis constant:

$$e^{-\varepsilon_i/kT} = e^{-i\Delta G/kT} = \left(e^{-\Delta G/kT}\right)^i = (V N_A K_M)^{-i}$$

The multiplicities are obtained with a combinatorial line of thought. There are $e_0$ enzyme and $s_0 - p$ substrate molecules that can build enzyme-substrate adducts. If i adduct molecules are formed, there are $\binom{e_0}{i}$ different possible ways to select the enzyme molecules, $\binom{s_0-p}{i}$ different possible ways to select the substrate molecules, and $i!$ different permutations of enzyme-substrate pair formation. The multiplicity is then:

$$M = \binom{e_0}{i}\binom{s_0-p}{i}\, i! = \binom{e_0}{i} \frac{(s_0-p)!}{(s_0-p-i)!}$$

The $S_{i,p}$ value is obtained as the energy term multiplied by the multiplicity of a given state and divided by the entire partition function.

Equation (15): The first part of this equation is simply the definition of a statistical expectation. For the second part of the equation, the definition of the confluent hypergeometric function (${}_1F_1$) is used:

$${}_1F_1(a,b,z) = 1 + \frac{a}{b}\,z + \frac{a(a+1)}{b(b+1)}\frac{z^2}{2!} + \frac{a(a+1)(a+2)}{b(b+1)(b+2)}\frac{z^3}{3!} + \dots$$
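The series definition of the confluent hypergeometric function can be evaluated term by term, because each term follows from the previous one after multiplication by $(a+n)/(b+n) \cdot z/(n+1)$. The Python sketch below is only an illustration; the function name `hyp1f1_series` and the truncation parameter `max_terms` are hypothetical choices, not part of the original derivation. It uses exact rational arithmetic and shows that the series stops on its own when the first argument is a non-positive integer.

```python
from fractions import Fraction

def hyp1f1_series(a, b, z, max_terms=200):
    """Partial sum of 1F1(a, b, z) = sum_n (a)_n / (b)_n * z**n / n!.

    a, b and z are expected to be integers or Fractions (b must not be a
    non-positive integer). max_terms is an illustrative truncation that only
    matters when the series does not terminate.
    """
    term = Fraction(1)   # the n = 0 term
    total = Fraction(0)
    for n in range(max_terms):
        total += term
        if term == 0:
            # all later terms vanish: a is a non-positive integer
            break
        # ratio of consecutive terms: (a + n) / (b + n) * z / (n + 1)
        term = term * Fraction(a + n, b + n) * Fraction(z) / (n + 1)
    return total

# Example: 1F1(-2, 3, -1) has only three non-zero terms, 1 + 2/3 + 1/12
print(hyp1f1_series(-2, 3, -1))
```

With a = -2 the factor (a + n) becomes zero at n = 2, so the fourth and all later terms vanish and the sum is exact.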
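If a is a positive integer and z is a positive real number, the infinite series is reduced to a finite sum for ${}_1F_1(-a,b,-z)$:

$$\begin{aligned} {}_1F_1(-a,b,-z) &= 1 + \frac{a}{b}\,z + \frac{a(a-1)}{b(b+1)}\frac{z^2}{2!} + \frac{a(a-1)(a-2)}{b(b+1)(b+2)}\frac{z^3}{3!} + \dots + \frac{a(a-1)(a-2)\cdots 2 \cdot 1}{b(b+1)(b+2)\cdots(b+a-2)(b+a-1)}\frac{z^a}{a!} \\ &= \sum_{i=0}^{a} \frac{a!}{(a-i)!}\,\frac{(b-1)!}{(b-1+i)!}\,\frac{z^i}{i!} \end{aligned}$$

Here, only the case $\alpha = e_0$ will be dealt with. The other possibility, i.e. $\alpha = s_0 - p$, is handled by an entirely similar sequence of thought. Transforming the original equation in a step-by-step manner:

$$\begin{aligned} \langle ES \rangle_p &= \frac{\displaystyle\sum_{i=1}^{e_0} i \binom{e_0}{i} \frac{(s_0-p)!}{(s_0-p-i)!} (V N_A K_M)^{-i}}{\displaystyle\sum_{i=0}^{e_0} \binom{e_0}{i} \frac{(s_0-p)!}{(s_0-p-i)!} (V N_A K_M)^{-i}} = \frac{(s_0-p)!\,e_0! \displaystyle\sum_{i=1}^{e_0} \frac{i\,(V N_A K_M)^{-i}}{(e_0-i)!\,i!\,(s_0-p-i)!}}{(s_0-p)!\,e_0! \displaystyle\sum_{i=0}^{e_0} \frac{(V N_A K_M)^{-i}}{(e_0-i)!\,i!\,(s_0-p-i)!}} \\[6pt] &= \frac{(K_M V N_A)^{e_0} \displaystyle\sum_{i=1}^{e_0} \frac{i\,(V N_A K_M)^{-i}}{(e_0-i)!\,i!\,(s_0-p-i)!}}{(K_M V N_A)^{e_0} \displaystyle\sum_{i=0}^{e_0} \frac{(V N_A K_M)^{-i}}{(e_0-i)!\,i!\,(s_0-p-i)!}} = \frac{\displaystyle\sum_{i=1}^{e_0} \frac{i\,(K_M V N_A)^{e_0-i}}{(e_0-i)!\,i!\,(s_0-p-i)!}}{\displaystyle\sum_{i=0}^{e_0} \frac{(K_M V N_A)^{e_0-i}}{(e_0-i)!\,i!\,(s_0-p-i)!}} \end{aligned}$$

At this point, a new auxiliary variable $j = e_0 - i$ is introduced.

$$\begin{aligned} \frac{\displaystyle\sum_{i=1}^{e_0} \frac{i\,(K_M V N_A)^{e_0-i}}{(e_0-i)!\,i!\,(s_0-p-i)!}}{\displaystyle\sum_{i=0}^{e_0} \frac{(K_M V N_A)^{e_0-i}}{(e_0-i)!\,i!\,(s_0-p-i)!}} &= \frac{e_0! \displaystyle\sum_{j=0}^{e_0-1} \frac{(e_0-j)\,(K_M V N_A)^{j}}{j!\,(e_0-j)!\,(s_0-p-e_0+j)!}}{e_0! \displaystyle\sum_{j=0}^{e_0} \frac{(K_M V N_A)^{j}}{j!\,(e_0-j)!\,(s_0-p-e_0+j)!}} \\[6pt] &= e_0\, \frac{\displaystyle\sum_{j=0}^{e_0-1} \frac{(e_0-1)!}{(e_0-j-1)!}\,\frac{(s_0-p-e_0)!}{(s_0-p-e_0+j)!}\,\frac{(K_M V N_A)^{j}}{j!}}{\displaystyle\sum_{j=0}^{e_0} \frac{e_0!}{(e_0-j)!}\,\frac{(s_0-p-e_0)!}{(s_0-p-e_0+j)!}\,\frac{(K_M V N_A)^{j}}{j!}} \\[6pt] &= e_0\, \frac{{}_1F_1(-e_0+1,\; s_0-p-e_0+1,\; -K_M N_A V)}{{}_1F_1(-e_0,\; s_0-p-e_0+1,\; -K_M N_A V)} \end{aligned}$$

The last line is the same as the last part of equation (15) if $\alpha = e_0$ is taken into account.

Equation (16): By definition, the standard deviation is given as

$$\sigma_p = \sqrt{ \frac{\displaystyle\sum_{i=1}^{\alpha} i^2 \binom{e_0}{i} \frac{(s_0-p)!}{(s_0-p-i)!} (V N_A K_M)^{-i}}{\displaystyle\sum_{i=0}^{\alpha} \binom{e_0}{i} \frac{(s_0-p)!}{(s_0-p-i)!} (V N_A K_M)^{-i}} - \left( \frac{\displaystyle\sum_{i=1}^{\alpha} i \binom{e_0}{i} \frac{(s_0-p)!}{(s_0-p-i)!} (V N_A K_M)^{-i}}{\displaystyle\sum_{i=0}^{\alpha} \binom{e_0}{i} \frac{(s_0-p)!}{(s_0-p-i)!} (V N_A K_M)^{-i}} \right)^{2} }$$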
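The result for equation (15) and the definition of the standard deviation can be checked numerically for small systems. The following Python sketch is an illustration only, not the authors' implementation: the function names are hypothetical, `x` stands for the dimensionless product $V N_A K_M$, and the hypergeometric form is used only for the case $\alpha = e_0$ (that is, $e_0 \le s_0 - p$) treated above. Exact rational arithmetic is used so that the two routes can be compared without rounding.

```python
from fractions import Fraction
from math import comb, factorial, perm

def weights(e0, s0, p, x):
    """Unnormalised weights of the states with i = 0..alpha ES molecules at
    fixed p: multiplicity times (V*N_A*K_M)**(-i), where x = V*N_A*K_M."""
    alpha = min(e0, s0 - p)
    return [Fraction(comb(e0, i) * perm(s0 - p, i)) / Fraction(x) ** i
            for i in range(alpha + 1)]

def mean_es_direct(e0, s0, p, x):
    """<ES>_p as the expectation of i over the normalised weights."""
    w = weights(e0, s0, p, x)
    return sum(i * wi for i, wi in enumerate(w)) / sum(w)

def variance_es_direct(e0, s0, p, x):
    """sigma_p**2 from the definition: <i**2> - <i>**2."""
    w = weights(e0, s0, p, x)
    second_moment = sum(i * i * wi for i, wi in enumerate(w)) / sum(w)
    return second_moment - mean_es_direct(e0, s0, p, x) ** 2

def finite_1f1(a, b, z):
    """1F1(-a, b, -z) for integer a >= 0 and integer b >= 1 (finite-sum form)."""
    return sum(Fraction(factorial(a) * factorial(b - 1),
                        factorial(a - i) * factorial(b - 1 + i))
               * Fraction(z) ** i / factorial(i)
               for i in range(a + 1))

def mean_es_hypergeometric(e0, s0, p, x):
    """Ratio of two finite 1F1 sums; valid here only if 1 <= e0 <= s0 - p."""
    b = s0 - p - e0 + 1
    return e0 * finite_1f1(e0 - 1, b, x) / finite_1f1(e0, b, x)

# Example with e0 = 3, s0 = 6, p = 1 and V*N_A*K_M = 5/2 (arbitrary values)
print(mean_es_direct(3, 6, 1, Fraction(5, 2)))
print(mean_es_hypergeometric(3, 6, 1, Fraction(5, 2)))
```

Both printed values should be the same fraction; the standard deviation follows as the square root of `variance_es_direct`.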
Successive transformation of equation (16) gives the chain below. For brevity, the statistical weight of the state with i ES molecules at a given p is abbreviated as

$$w_i = \binom{e_0}{i} \frac{(s_0-p)!}{(s_0-p-i)!} (V N_A K_M)^{-i}$$

so that $\langle ES \rangle_p = \sum_{i=0}^{\alpha} i\,w_i \big/ \sum_{i=0}^{\alpha} w_i$.

$$\begin{aligned} & V N_A K_M \langle ES \rangle_p - (e_0 - \langle ES \rangle_p)(s_0 - p - \langle ES \rangle_p) \\ &\quad = (V N_A K_M + e_0 + s_0 - p)\,\langle ES \rangle_p - e_0 (s_0 - p) - \langle ES \rangle_p^2 \\ &\quad = (V N_A K_M + e_0 + s_0 - p)\,\frac{\sum_{i=1}^{\alpha} i\,w_i}{\sum_{i=0}^{\alpha} w_i} - e_0 (s_0 - p) - \langle ES \rangle_p^2 \\ &\quad = \frac{(V N_A K_M + e_0 + s_0 - p) \sum_{i=1}^{\alpha} i\,w_i - e_0 (s_0 - p) \sum_{i=0}^{\alpha} w_i}{\sum_{i=0}^{\alpha} w_i} - \langle ES \rangle_p^2 \\ &\quad = \frac{\sum_{i=0}^{\alpha} \big( i K_M V N_A + i e_0 + i s_0 - i p - e_0 (s_0 - p) \big)\, w_i}{\sum_{i=0}^{\alpha} w_i} - \langle ES \rangle_p^2 \end{aligned}$$

The term containing $K_M$ is handled separately:

$$\begin{aligned} \sum_{i=0}^{\alpha} i K_M V N_A \binom{e_0}{i} \frac{(s_0-p)!}{(s_0-p-i)!} (V N_A K_M)^{-i} &= \sum_{i=1}^{\alpha} i \binom{e_0}{i} \frac{(s_0-p)!}{(s_0-p-i)!} (V N_A K_M)^{-(i-1)} \\ &= \sum_{j=0}^{\alpha-1} (j+1) \binom{e_0}{j+1} \frac{(s_0-p)!}{(s_0-p-j-1)!} (V N_A K_M)^{-j} \\ &= \sum_{j=0}^{\alpha-1} (j+1)\, \frac{e_0 - j}{j+1} \binom{e_0}{j}\, (s_0-p-j)\, \frac{(s_0-p)!}{(s_0-p-j)!} (V N_A K_M)^{-j} \\ &= \sum_{j=0}^{\alpha-1} (e_0 - j)(s_0 - p - j) \binom{e_0}{j} \frac{(s_0-p)!}{(s_0-p-j)!} (V N_A K_M)^{-j} \\ &= \sum_{j=0}^{\alpha} (e_0 - j)(s_0 - p - j) \binom{e_0}{j} \frac{(s_0-p)!}{(s_0-p-j)!} (V N_A K_M)^{-j} \end{aligned}$$

The last step is valid because the definition of α (equation (13)) ensures that $(e_0 - \alpha)(s_0 - p - \alpha) = 0$, so the added term with $j = \alpha$ is zero. Returning to the original expression and substituting this new formula:

$$\begin{aligned} & \frac{\sum_{i=0}^{\alpha} \big( i K_M V N_A + i e_0 + i s_0 - i p - e_0 (s_0 - p) \big)\, w_i}{\sum_{i=0}^{\alpha} w_i} - \langle ES \rangle_p^2 \\ &\quad = \frac{\sum_{i=0}^{\alpha} \big( (e_0 - i)(s_0 - p - i) + i e_0 + i s_0 - i p - e_0 (s_0 - p) \big)\, w_i}{\sum_{i=0}^{\alpha} w_i} - \langle ES \rangle_p^2 \\ &\quad = \frac{\sum_{i=0}^{\alpha} i^2\, w_i}{\sum_{i=0}^{\alpha} w_i} - \langle ES \rangle_p^2 = \frac{\sum_{i=0}^{\alpha} i^2\, w_i}{\sum_{i=0}^{\alpha} w_i} - \left( \frac{\sum_{i=0}^{\alpha} i\, w_i}{\sum_{i=0}^{\alpha} w_i} \right)^{2} = \sigma_p^2 \end{aligned}$$

This proves equation (16).
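Two things can be tried out numerically at this point: the identity just derived for the variance, and the iterative solution of equation (12) described in the notes above, which needs only the $\langle ES \rangle_p$ values. The Python sketch below is a minimal illustration under stated assumptions, not the authors' code. All function names are hypothetical, `x` stands for $V N_A K_M$, and the recursion assumes that the $\langle ES \rangle_p$ values are pairwise distinct. The coefficients are kept as exact fractions and converted to floating point only when the exponentials are evaluated; for large systems the alternating coefficients may still lose precision.

```python
from fractions import Fraction
from math import comb, exp, perm

def moments(e0, s0, p, x):
    """Return (<ES>_p, sigma_p**2) from the statistical weights; x = V*N_A*K_M."""
    alpha = min(e0, s0 - p)
    w = [Fraction(comb(e0, i) * perm(s0 - p, i)) / Fraction(x) ** i
         for i in range(alpha + 1)]
    norm = sum(w)
    mean = sum(i * wi for i, wi in enumerate(w)) / norm
    var = sum(i * i * wi for i, wi in enumerate(w)) / norm - mean ** 2
    return mean, var

def variance_closed_form(e0, s0, p, x, mean):
    """V*N_A*K_M*<ES>_p - (e0 - <ES>_p)*(s0 - p - <ES>_p), the expression
    that the derivation above transforms into the definition of sigma_p**2."""
    return Fraction(x) * mean - (e0 - mean) * (s0 - p - mean)

def coefficients(es_means):
    """A[p][i] from A[0][0] = 1 and the recursion given in the notes.
    Assumption: the entries of es_means (<ES>_0, <ES>_1, ...) are distinct."""
    A = [[Fraction(1)]]
    for p in range(1, len(es_means)):
        row = [A[p - 1][i] * es_means[p - 1] / (es_means[p] - es_means[i])
               for i in range(p)]
        row.append(-sum(row))  # enforces R_p(0) = 0 for p > 0
        A.append(row)
    return A

def steady_state_distribution(e0, s0, x, k2, t):
    """[R_0(t), ..., R_s0(t)] within the stochastic steady-state approximation."""
    es_means = [moments(e0, s0, p, x)[0] for p in range(s0 + 1)]
    A = coefficients(es_means)
    return [sum(float(a) * exp(-k2 * float(m) * t)
                for a, m in zip(A[p], es_means))
            for p in range(s0 + 1)]

# Example: e0 = 2, s0 = 4, V*N_A*K_M = 1, k2 = 1 (arbitrary illustrative values)
dist = steady_state_distribution(2, 4, 1, 1.0, 0.7)
print(dist, sum(dist))
```

Because $\langle ES \rangle_{s_0} = 0$, the probabilities $R_p(t)$ should add up to 1 at every time, which gives a simple consistency check on the coefficients.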
Equations (17)-(20): These equations follow from the definitions of expectation and standard deviation.

Equation (21): This equation gives an estimate of the initial time period after which the steady-state assumption can be used justifiably. This time is related to the time needed to establish the equilibrium between species E, S and ES. A good estimate of the time can be given by deterministic kinetics using a sequence of thought similar to relaxation kinetics:

$$\frac{d[\mathrm{ES}]}{dt} = k_1 [\mathrm{E}][\mathrm{S}] - k_{-1} [\mathrm{ES}]$$

The concentrations are given by introducing a new variable x, the distance from the equilibrium value:

$$[\mathrm{E}] = [\mathrm{E}]_\infty + x \qquad [\mathrm{S}] = [\mathrm{S}]_\infty + x \qquad [\mathrm{ES}] = [\mathrm{ES}]_\infty - x$$

The differential equation is then:

$$-\frac{dx}{dt} = k_1 ([\mathrm{E}]_\infty + x)([\mathrm{S}]_\infty + x) - k_{-1} ([\mathrm{ES}]_\infty - x) = k_1 x^2 + (k_1 [\mathrm{E}]_\infty + k_1 [\mathrm{S}]_\infty + k_{-1})\, x$$

Introducing the new constant k:

$$k = k_1 [\mathrm{E}]_\infty + k_1 [\mathrm{S}]_\infty + k_{-1}$$

The transformed differential equation is then:

$$-\frac{dx}{dt} = k_1 x^2 + k x$$

The standard solution method for separable ordinary differential equations gives:

$$x = \frac{[\mathrm{ES}]_\infty\, k\, e^{-kt}}{k_1 [\mathrm{ES}]_\infty (1 - e^{-kt}) + k}$$

Finding the value of t when x falls to a minute fraction δ of its initial value:

$$\delta [\mathrm{ES}]_\infty = \frac{[\mathrm{ES}]_\infty\, k\, e^{-k t_\delta}}{k_1 [\mathrm{ES}]_\infty (1 - e^{-k t_\delta}) + k}$$

Rearranging this equation gives:

$$e^{-k t_\delta} = \frac{k \delta + k_1 \delta [\mathrm{ES}]_\infty}{k + k_1 \delta [\mathrm{ES}]_\infty} = \frac{k + k_1 [\mathrm{ES}]_\infty}{k/\delta + k_1 [\mathrm{ES}]_\infty}$$

Expressing $t_\delta$ gives:

$$t_\delta = -\frac{1}{k} \ln \frac{k + k_1 [\mathrm{ES}]_\infty}{k/\delta + k_1 [\mathrm{ES}]_\infty}$$

This is the same as equation (21) after substituting δ = 0.05.

Equation (23): The equation can be derived directly from the definition of expectation for random variables with continuous distribution:

$$\langle \tau \rangle = \int_0^\infty t\, k_2 \langle ES \rangle_0\, e^{-k_2 \langle ES \rangle_0 t}\, dt = \frac{k_2 \langle ES \rangle_0}{(k_2 \langle ES \rangle_0)^2} = \frac{1}{k_2 \langle ES \rangle_0}$$

Figure S1. Comparison of expectations and standard deviations obtained for the enzyme-substrate adduct (ES) and the product (P) with the full solution and the stochastic steady-state approximation in the Michaelis-Menten mechanism. Solid lines: full solution; markers: steady-state approximation. $k_1/(N_A V) = 1\ \mathrm{s}^{-1}$, $k_{-1} = 1\ \mathrm{s}^{-1}$, $k_2 = 1\ \mathrm{s}^{-1}$, $e_0 = 10$, $s_0 = 50$.

Figure S2a. Comparison of the dead times ($t_{0.05}$) of the steady-state approximation in the Michaelis-Menten mechanism obtained from the full solution and the estimate given in equation 1. Varied variable: $k_1/(N_A V)$. Other parameters: $k_{-1} = 100\ \mathrm{s}^{-1}$, $k_2 = 1\ \mathrm{s}^{-1}$, $e_0 = 10$, $s_0 = 50$.

Figure S2b. Comparison of the dead times ($t_{0.05}$) of the steady-state approximation in the Michaelis-Menten mechanism obtained from the full solution and the estimate given in equation 1. Varied variable: $k_{-1}$. Other parameters: $k_1/(N_A V) = 100\ \mathrm{s}^{-1}$, $k_2 = 1\ \mathrm{s}^{-1}$, $e_0 = 10$, $s_0 = 50$.

Figure S2c. Comparison of the dead times ($t_{0.05}$) of the steady-state approximation in the Michaelis-Menten mechanism obtained from the full solution and the estimate given in equation 1. Varied variable: $k_2$. Other parameters: $k_1/(N_A V) = 100\ \mathrm{s}^{-1}$, $k_{-1} = 100\ \mathrm{s}^{-1}$, $k_2 = 1\ \mathrm{s}^{-1}$, $e_0 = 10$, $s_0 = 50$.
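The dead-time estimate $t_\delta$ derived above from relaxation kinetics can be evaluated directly once the equilibrium amounts of E, S and ES are known. The Python sketch below is a hedged illustration, not the procedure used to produce the figures. It assumes that amounts are expressed as molecule numbers, so that the argument `k1` stands for $k_1/(N_A V)$; it further assumes that the equilibrium of E + S ⇌ ES is obtained from the usual quadratic with product formation ignored, and that the reaction starts without any ES. The function names are hypothetical.

```python
from math import exp, log, sqrt

def equilibrium_es(k1, km1, e0, s0):
    """Equilibrium amount of ES for E + S <-> ES (k2 ignored): the smaller
    root y of k1 * (e0 - y) * (s0 - y) = km1 * y."""
    b = e0 + s0 + km1 / k1
    return (b - sqrt(b * b - 4.0 * e0 * s0)) / 2.0

def relaxation_constant(k1, km1, e0, s0):
    """k = k1*[E]_inf + k1*[S]_inf + k_-1 from the derivation above."""
    es_inf = equilibrium_es(k1, km1, e0, s0)
    return k1 * (e0 - es_inf) + k1 * (s0 - es_inf) + km1

def dead_time(k1, km1, e0, s0, delta=0.05):
    """t_delta = (1/k) * ln((k/delta + k1*[ES]_inf) / (k + k1*[ES]_inf))."""
    es_inf = equilibrium_es(k1, km1, e0, s0)
    k = relaxation_constant(k1, km1, e0, s0)
    return log((k / delta + k1 * es_inf) / (k + k1 * es_inf)) / k

def distance_from_equilibrium(k1, km1, e0, s0, t):
    """x(t) for the initial condition x(0) = [ES]_inf (no ES at t = 0)."""
    es_inf = equilibrium_es(k1, km1, e0, s0)
    k = relaxation_constant(k1, km1, e0, s0)
    decay = exp(-k * t)
    return es_inf * k * decay / (k1 * es_inf * (1.0 - decay) + k)

# Example with k1/(N_A V) = 100 s^-1, k_-1 = 100 s^-1, e0 = 10, s0 = 50
t05 = dead_time(100.0, 100.0, 10, 50)
print(t05, distance_from_equilibrium(100.0, 100.0, 10, 50, t05))
```

Substituting the returned time back into x(t) should reproduce δ times the initial distance, which checks the rearrangement that leads to $t_\delta$.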