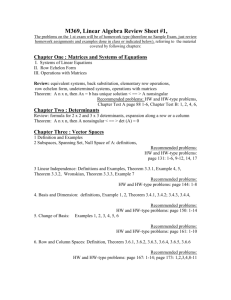

notes_6_matrices


Chapter 1

Matrices

Definition 1.1. An $n\times k$ matrix over a field $F$ is a function $A:\{1,2,\dots,n\}\times\{1,2,\dots,k\}\to F$.

A matrix is usually represented by (and identified with) an $n\times k$ (read as "n by k") array of elements of the field (usually numbers). The horizontal lines of a matrix are referred to as rows and the vertical ones as columns. The individual elements are called entries of the matrix. Thus an $n\times k$ matrix has $n$ rows, $k$ columns and $nk$ entries. Matrices will be denoted by capital letters and their entries by the corresponding small letters. Thus, in the case of a matrix $A$ we will write $A(i,j)=a_{i,j}$ and will refer to $a_{i,j}$ as the element in the $i$-th row and $j$-th column of $A$. Conversely, we will use the symbol $[a_{i,j}]$ to denote the matrix $A$ with entries $a_{i,j}$.

Rows and columns of a matrix can (and will) be considered vectors from $F^k$ and $F^n$, respectively, and will be denoted by $r_1,r_2,\dots,r_n$ and $c_1,c_2,\dots,c_k$. The expression $n\times k$ is called the size of a matrix.

Definition 1.2. Let $A$ be an $n\times k$ matrix. Then the transpose of $A$ is the $k\times n$ matrix $A^T$ such that for each $i$ and $j$, $A^T(i,j)=A(j,i)$.

In other words, $A^T$ is what we get when we replace the rows of $A$ with its columns and vice versa.
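As a small illustration of Definition 1.2, the sketch below stores a matrix as a list of rows and builds its transpose entry by entry. The list-of-rows representation and the function name `transpose` are choices made for this example only.

```python
def transpose(a):
    """Return the transpose of a matrix stored as a list of rows."""
    n, k = len(a), len(a[0])
    # Entry (i, j) of the transpose is entry (j, i) of the original.
    return [[a[j][i] for j in range(n)] for i in range(k)]

A = [[1, 2, 3],
     [4, 5, 6]]      # a 2x3 matrix
AT = transpose(A)    # a 3x2 matrix
print(AT)            # [[1, 4], [2, 5], [3, 6]]
```

Transposing twice gives back the original matrix, which follows directly from the definition.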

Proposition 1.1. For any $n,k\in\mathbb{N}$ the set MTR(n,k) of all $n\times k$ matrices over $F$, with ordinary function addition and multiplication of functions by constants, is a vector space over $F$, and dim(MTR(n,k)) $=nk$.

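Proof. MTR(n,k) is a vector space (see Example 4.4???). As a basis for MTR(n,k) we can use the set consisting of the matrices $A_{p,q}$, $1\le p\le n$, $1\le q\le k$, where
$$A_{p,q}(i,j)=\begin{cases}1 & \text{if } (i,j)=(p,q)\\ 0 & \text{otherwise.}\end{cases}$$
There are $nk$ such matrices, and every matrix $A=[a_{i,j}]$ can be written in exactly one way as a linear combination of them, namely $A=\sum_{p,q}a_{p,q}A_{p,q}$.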
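The basis used in the proof can be sketched in code. The helper names `basis_matrix` and `combine` are hypothetical, and the matrix `A` is an arbitrary illustration; the point is only that a matrix is recovered as the combination of the $A_{p,q}$ with its own entries as coefficients.

```python
def basis_matrix(n, k, p, q):
    """The matrix A_{p,q}: 1 in position (p, q), 0 elsewhere (1-based indices)."""
    return [[1 if (i, j) == (p, q) else 0 for j in range(1, k + 1)]
            for i in range(1, n + 1)]

def combine(a):
    """Rebuild a as the sum of a[p][q] * A_{p,q} over all positions (p, q)."""
    n, k = len(a), len(a[0])
    total = [[0] * k for _ in range(n)]
    for p in range(1, n + 1):
        for q in range(1, k + 1):
            e = basis_matrix(n, k, p, q)
            for i in range(n):
                for j in range(k):
                    total[i][j] += a[p - 1][q - 1] * e[i][j]
    return total

A = [[3, -1, 4],
     [0, 2, 5]]
rebuilt = combine(A)                 # equals A
basis_size = len(A) * len(A[0])      # n * k = 6 basis matrices
```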

The operation of matrix multiplication is quite different from the above-mentioned operations of matrix scaling and addition. It is not inherited from function theory; it is an operation specific to matrices. The reason for this will soon become clear.

Definition 1.3. Suppose $A$ is an $n\times k$ matrix and $B$ is a $k\times s$ matrix. Then the product of $A$ by $B$ is the matrix $C$, where
$$c_{i,j}=a_{i,1}b_{1,j}+a_{i,2}b_{2,j}+\dots+a_{i,k}b_{k,j}=\sum_{t=1}^{k}a_{i,t}b_{t,j}.$$

In other words, $c_{i,j}$ is the sum of the products of consecutive elements of the $i$-th row of $A$ by the corresponding elements of the $j$-th column of $B$. Strictly speaking, multiplication of matrices is in general not an operation on matrices, as it maps MTR(n,k) $\times$ MTR(k,s) into MTR(n,s). It is an operation, though, when $n=k=s$. Matrices with the same number of rows and columns are called square matrices.

Notice that $C=AB$ is an $n\times s$ matrix. Notice also that for the definition to work, the number of columns in $A$ (the first factor) must equal the number of rows in $B$ (the second factor). In other cases the product is not defined. This suggests that matrix multiplication need not be commutative.
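Definition 1.3 translates almost word for word into code. The following is a minimal sketch, assuming matrices are stored as lists of rows; the function name `matmul` and the sample matrices are illustrative and not taken from the notes.

```python
def matmul(a, b):
    """Product of an n x k matrix a and a k x s matrix b (lists of rows)."""
    n, k = len(a), len(a[0])
    if len(b) != k:
        # The number of columns of a must equal the number of rows of b.
        raise ValueError("product is not defined for these sizes")
    s = len(b[0])
    # c[i][j] is the sum over t of a[i][t] * b[t][j].
    return [[sum(a[i][t] * b[t][j] for t in range(k)) for j in range(s)]
            for i in range(n)]

A = [[1, 2, 0],
     [0, 1, 3]]      # 2x3
B = [[1, 0],
     [2, 1],
     [0, 4]]         # 3x2
C = matmul(A, B)     # 2x2
print(C)             # [[5, 2], [2, 13]]
```

Calling `matmul(A, A)` raises `ValueError`, because a 2x3 matrix cannot be multiplied by a 2x3 matrix.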

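Example 1.1. Let
$$A=\begin{bmatrix}1&1&2\\-2&0&3\end{bmatrix}\quad\text{and}\quad B=\begin{bmatrix}2&0&2\\1&3&-1\\1&2&2\end{bmatrix}.$$
Then
$$AB=\begin{bmatrix}2+1+2&0+3+4&2-1+4\\-4+0+3&0+0+6&-4+0+6\end{bmatrix}=\begin{bmatrix}5&7&5\\-1&6&2\end{bmatrix}$$
and $BA$ is not defined, since the number of columns of $B$ is not equal to the number of rows of $A$.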

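Example 1.2. Let
$$A=\begin{bmatrix}1&0\\1&0\end{bmatrix}\quad\text{and}\quad B=\begin{bmatrix}0&0\\1&1\end{bmatrix}.$$
Then
$$AB=\begin{bmatrix}0&0\\0&0\end{bmatrix}\quad\text{while}\quad BA=\begin{bmatrix}0&0\\2&0\end{bmatrix}.$$
This example proves that $AB$ may differ from $BA$ even when both products exist and have the same size.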
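The computation in Example 1.2 can be checked mechanically. The sketch below assumes that $A$ has rows (1,0), (1,0) and $B$ has rows (0,0), (1,1); the helper `matmul` is a hypothetical name for a direct implementation of Definition 1.3.

```python
def matmul(a, b):
    """Product of two matrices stored as lists of rows (Definition 1.3)."""
    k = len(b)
    return [[sum(row[t] * b[t][j] for t in range(k)) for j in range(len(b[0]))]
            for row in a]

A = [[1, 0],
     [1, 0]]
B = [[0, 0],
     [1, 1]]

AB = matmul(A, B)    # the zero matrix
BA = matmul(B, A)    # has the entry 2 in its lower-left corner
print(AB, BA)        # [[0, 0], [0, 0]] [[0, 0], [2, 0]]
```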

Example 1.3. The $n\times n$ matrix $I$ defined as
$$I(s,t)=\begin{cases}1 & \text{if } s=t\\ 0 & \text{if } s\neq t\end{cases}$$
is the identity element for matrix multiplication on MTR(n,n). Indeed, for every matrix $A$ in MTR(n,n),
$$AI(i,j)=\sum_{t=1}^{n}A(i,t)I(t,j)=A(i,j),$$
because $A(i,t)I(t,j)$ is equal to $A(i,j)$ when $t=j$ and is equal to 0 otherwise. Hence $AI=A$. A similar argument proves that $IA=A$. All entries of the identity matrix $I$ are equal to 0 except for the diagonal entries, which are all equal to 1. The term diagonal entries refers to the diagonal of a square matrix, i.e. the line connecting the upper-left with the lower-right position in the matrix. For a square matrix $C$, this line consists of all elements of the form $C(i,i)$.
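A quick numerical check of Example 1.3, written as a sketch: `identity` and `matmul` are hypothetical helper names, indices are 0-based as usual in Python, and the matrix `A` is an arbitrary 3x3 illustration.

```python
def identity(n):
    """The n x n matrix with 1 on the diagonal and 0 elsewhere."""
    return [[1 if s == t else 0 for t in range(n)] for s in range(n)]

def matmul(a, b):
    """Product of two matrices stored as lists of rows (Definition 1.3)."""
    k = len(b)
    return [[sum(row[t] * b[t][j] for t in range(k)) for j in range(len(b[0]))]
            for row in a]

A = [[2, -1, 0],
     [4, 3, 7],
     [1, 5, -2]]
I = identity(3)

right = matmul(A, I)   # A * I
left = matmul(I, A)    # I * A
```

Both `right` and `left` are equal to `A`.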

Example 1.4. Suppose $A$ is an $n\times k$ matrix over $F$ and $X=[x_1,x_2,\dots,x_k]$ is a single-row matrix representing a vector from $F^k$. By the definition of matrix multiplication, $AX^T$ is a single-column matrix representing a vector from $F^n$. Hence if we fix $A$ and let $X$ vary over $F^k$, we have a function $\varphi_A:F^k\to F^n$ defined as $\varphi_A(X)=AX^T$.

Theorem 1.1. For every $n\times k$ matrix $A$ the function $\varphi_A(X)=AX^T$ is a linear mapping of $F^k$ into $F^n$.

Proof. The $i$-th component of the vector $\varphi_A(pX)$ is $a_{i,1}px_1+a_{i,2}px_2+\dots+a_{i,k}px_k = p(a_{i,1}x_1+a_{i,2}x_2+\dots+a_{i,k}x_k)$. Since $a_{i,1}x_1+a_{i,2}x_2+\dots+a_{i,k}x_k$ is the $i$-th component of $\varphi_A(X)$, we have proved that $\varphi_A$ preserves scaling. Suppose $Y=[y_1,y_2,\dots,y_k]$ and consider $\varphi_A(X+Y)=A(X+Y)^T=A[x_1+y_1,x_2+y_2,\dots,x_k+y_k]^T$. By the definition of the matrix product, the $i$-th component of $\varphi_A(X+Y)$ is equal to $a_{i,1}(x_1+y_1)+a_{i,2}(x_2+y_2)+\dots+a_{i,k}(x_k+y_k) = (a_{i,1}x_1+a_{i,2}x_2+\dots+a_{i,k}x_k)+(a_{i,1}y_1+a_{i,2}y_2+\dots+a_{i,k}y_k)$, and this is the $i$-th component of $\varphi_A(X)+\varphi_A(Y)$. This shows that $\varphi_A$ preserves addition.
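The two properties established in the proof can be spot-checked on concrete data. This is only a sketch on one example, not a proof; the function name `apply` and the sample values of `A`, `X`, `Y` and `p` are assumptions made for illustration.

```python
def apply(a, x):
    """The mapping X -> A X^T, with X given as a flat list of length k."""
    return [sum(a_it * x_t for a_it, x_t in zip(row, x)) for row in a]

A = [[1, 2, 0],
     [-1, 3, 4]]     # a 2x3 matrix, so the mapping goes from F^3 to F^2
X = [1, 0, 2]
Y = [3, -1, 1]
p = 5

# Scaling: applying A to pX gives p times the image of X.
scaling_ok = apply(A, [p * x for x in X]) == [p * c for c in apply(A, X)]

# Addition: applying A to X+Y gives the sum of the images of X and Y.
addition_ok = (apply(A, [x + y for x, y in zip(X, Y)])
               == [u + v for u, v in zip(apply(A, X), apply(A, Y))])
```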

In the case of coordinate vectors we will often leave out the transposition symbol $T$, allowing the context to decide whether the sequence should be written horizontally or vertically.

Corollary. For every $n\times k$ matrix $A$ and for every two $k\times s$ matrices $B$ and $C$, $A(B+C)=AB+AC$, i.e. matrix multiplication is distributive with respect to matrix addition.

Proof. From Definition 1.3 (of matrix multiplication) the $i$-th column of $A(B+C)$ is the product of $A$ and the $i$-th column of $B+C$. Since the $i$-th column of $B+C$ is the sum of the $i$-th columns of $B$ and $C$, by Theorem 1.1 we have that the $i$-th column of $A(B+C)$ is equal to the $i$-th column of $AB+AC$.
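The Corollary can be illustrated on a concrete triple of matrices. The helpers `matmul` and `matadd` are hypothetical names, and the matrices are arbitrary integer examples chosen for this sketch.

```python
def matmul(a, b):
    """Product of two matrices stored as lists of rows (Definition 1.3)."""
    k = len(b)
    return [[sum(row[t] * b[t][j] for t in range(k)) for j in range(len(b[0]))]
            for row in a]

def matadd(a, b):
    """Entrywise sum of two matrices of the same size."""
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]

A = [[1, 2],
     [3, 4],
     [0, -1]]        # 3x2
B = [[0, 1, 2],
     [1, 0, 5]]      # 2x3
C = [[2, 0, -1],
     [0, 2, 3]]      # 2x3

lhs = matmul(A, matadd(B, C))               # A(B+C)
rhs = matadd(matmul(A, B), matmul(A, C))    # AB+AC
```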

Theorem 1.1 says that with every $n\times k$ matrix we can associate a linear mapping from $F^k$ into $F^n$. The following definition assigns a matrix to a linear mapping.

Definition 1.4. Let us consider a linear mapping $\varphi:F^k\to F^n$ and let us choose a basis $S=\{v_1,v_2,\dots,v_k\}$ for $F^k$ and another, $R=\{w_1,w_2,\dots,w_n\}$, for $F^n$. For each vector $v_i\in S$ there exist unique scalars $a_{1,i},a_{2,i},\dots,a_{n,i}$ such that $\varphi(v_i)=a_{1,i}w_1+a_{2,i}w_2+\dots+a_{n,i}w_n$. The matrix $[a_{i,j}]$ is called the matrix of $\varphi$ with respect to bases $R$ and $S$, and is denoted by $M^R_S(\varphi)$.

In other words, for each $i$, the $i$-th column of the matrix $M^R_S(\varphi)$ is equal to $[\varphi(v_i)]_R$.

Notice that the matrix assigned to a linear mapping depends on the choice of the two bases $R$ and $S$. Choosing another pair of bases we will, in general, obtain another matrix for the same linear mapping. Also, two different linear mappings may have the same matrix, but with respect to two different pairs of bases. Two matrices of the same linear mapping are called similar. We will study similarity of matrices in the next chapter???

Example 1.5. Let $\varphi:\mathbb{R}^3\to\mathbb{R}^2$, $\varphi(x,y,z)=(x+2y-2z,\,3x-y+2z)$. We will construct the matrix for $\varphi$ in bases $S=\{(0,1,1),(1,0,1),(1,1,0)\}$ and $R=\{(1,1),(1,0)\}$. We calculate the values of $\varphi$ on the vectors from $S$ and represent them as linear combinations of the vectors from $R$: $\varphi(0,1,1)=(0,1)=1(1,1)+(-1)(1,0)$, $\varphi(1,0,1)=(-1,5)=5(1,1)+(-6)(1,0)$ and $\varphi(1,1,0)=(3,2)=2(1,1)+1(1,0)$. Finally we form the matrix, placing the coefficients of the linear combinations in consecutive columns:
$$M^R_S(\varphi)=\begin{bmatrix}1&5&2\\-1&-6&1\end{bmatrix}.$$
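The construction in Example 1.5 can be sketched as a general procedure: apply the mapping to each vector of $S$, find the coordinates of the result in $R$, and place them in consecutive columns. The code assumes the mapping is $(x,y,z)\mapsto(x+2y-2z,\,3x-y+2z)$; the helper names `coords` and `matrix_of` are hypothetical, and the coordinates are found by Gauss-Jordan elimination with exact fractions.

```python
from fractions import Fraction

def coords(basis, v):
    """Coordinates of v in the given basis (basis is assumed to be valid)."""
    n = len(basis)
    # Augmented matrix: basis vectors as columns, v as the last column.
    m = [[Fraction(basis[j][i]) for j in range(n)] + [Fraction(v[i])]
         for i in range(n)]
    for c in range(n):
        # Bring a row with a nonzero entry in column c into pivot position.
        p = next(r for r in range(c, n) if m[r][c] != 0)
        m[c], m[p] = m[p], m[c]
        pivot = m[c][c]
        m[c] = [x / pivot for x in m[c]]
        for r in range(n):
            if r != c:
                f = m[r][c]
                m[r] = [x - f * y for x, y in zip(m[r], m[c])]
    return [m[i][n] for i in range(n)]

def matrix_of(phi, S, R):
    """Matrix of phi: column i holds the R-coordinates of phi(S[i])."""
    cols = [coords(R, phi(v)) for v in S]
    return [[col[i] for col in cols] for i in range(len(R))]

def phi(v):
    x, y, z = v
    return (x + 2 * y - 2 * z, 3 * x - y + 2 * z)

S = [(0, 1, 1), (1, 0, 1), (1, 1, 0)]
R = [(1, 1), (1, 0)]
M = matrix_of(phi, S, R)   # rows (1, 5, 2) and (-1, -6, 1)
```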

Recall that $[v]_R$ denotes the coordinate vector of $v$ with respect to a basis $R$. The following theorem states that matrices and linear mappings are essentially the same thing, or rather, that there is a 1-1 correspondence between matrices and linear mappings.

Theorem 1.2. Given a linear mapping $\varphi:F^k\to F^n$ and bases $S=\{v_1,v_2,\dots,v_k\}$ for $F^k$ and $R=\{w_1,w_2,\dots,w_n\}$ for $F^n$, an $n\times k$ matrix $A$ satisfies $A[x]_S=[\varphi(x)]_R$ for every vector $x$ from $F^k$ if and only if $A=M^R_S(\varphi)$.

Proof. ($\Leftarrow$) Suppose that $A=M^R_S(\varphi)=[a_{i,j}]$ and $[x]_S=[x_1,x_2,\dots,x_k]$. We have
$$\varphi(x)=\varphi\Big(\sum_{s=1}^{k}x_s v_s\Big)=\sum_{s=1}^{k}x_s\varphi(v_s)=\sum_{s=1}^{k}x_s\Big(\sum_{t=1}^{n}a_{t,s}w_t\Big)=\sum_{s=1}^{k}\sum_{t=1}^{n}x_s a_{t,s}w_t=\sum_{t=1}^{n}\Big(\sum_{s=1}^{k}x_s a_{t,s}\Big)w_t$$
(the proof of the last equality is left to the reader), which means that the $t$-th coordinate of $[\varphi(x)]_R$ is $\sum_{s=1}^{k}x_s a_{t,s}$. On the other hand, the $t$-th coordinate of $M^R_S(\varphi)[x]_S$ is also equal to $\sum_{r=1}^{k}a_{t,r}x_r$.

($\Rightarrow$) Now suppose that for every $x$ from $F^k$, $A[x]_S=[\varphi(x)]_R$. Let us put $x=v_i$. Then $[v_i]_S=[0,\dots,1,\dots,0]$, where the only 1 in the sequence is in the $i$-th place. Hence $A[v_i]_S$ is equal to the $i$-th column of $A$, so the $i$-th column of $A$ is $[\varphi(v_i)]_R$. By the remark following Definition 1.4 we are done.
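Theorem 1.2 can be spot-checked on the data of Example 1.5. The sketch below assumes the mapping $(x,y,z)\mapsto(x+2y-2z,\,3x-y+2z)$, the bases $S=\{(0,1,1),(1,0,1),(1,1,0)\}$ and $R=\{(1,1),(1,0)\}$, and the matrix with rows (1, 5, 2) and (-1, -6, 1). The coordinate formulas in `coords_S` and `coords_R` were derived by hand for these two particular bases, and all function names are hypothetical.

```python
from fractions import Fraction

M = [[1, 5, 2],
     [-1, -6, 1]]

def phi(x, y, z):
    return (x + 2 * y - 2 * z, 3 * x - y + 2 * z)

def coords_S(x, y, z):
    """Coordinates of (x, y, z) in S = {(0,1,1), (1,0,1), (1,1,0)}."""
    half = Fraction(1, 2)
    return [half * (y + z - x), half * (x + z - y), half * (x + y - z)]

def coords_R(a, b):
    """Coordinates of (a, b) in R = {(1,1), (1,0)}: (a,b) = b(1,1) + (a-b)(1,0)."""
    return [b, a - b]

def apply(m, v):
    """Matrix times column vector."""
    return [sum(e * c for e, c in zip(row, v)) for row in m]

x = (1, 2, 3)
lhs = apply(M, coords_S(*x))    # M [x]_S
rhs = coords_R(*phi(*x))        # [phi(x)]_R
print(lhs == rhs)               # True
```

For this particular `x`, both sides are the coordinate vector (7, -8).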

Theorem 1.3. If $\varphi:F^k\to F^n$ and $\psi:F^n\to F^p$ are linear mappings, and $S$, $R$, $T$ are bases for $F^k$, $F^n$, $F^p$, respectively, then $M^T_S(\psi\circ\varphi)=M^T_R(\psi)\,M^R_S(\varphi)$.

Proof. This is an immediate consequence of Theorem 1.2.
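In the special case of standard bases, where the matrix of the mapping $X\mapsto AX^T$ is $A$ itself, Theorem 1.3 says that applying $A$ and then $B$ is the same as applying the single matrix $BA$. The sketch below checks this on one vector; the names `apply` and `matmul` and the sample matrices are assumptions made for illustration.

```python
def matmul(a, b):
    """Product of two matrices stored as lists of rows (Definition 1.3)."""
    k = len(b)
    return [[sum(row[t] * b[t][j] for t in range(k)) for j in range(len(b[0]))]
            for row in a]

def apply(m, v):
    """Matrix times column vector."""
    return [sum(e * c for e, c in zip(row, v)) for row in m]

A = [[1, 2, 0],
     [0, 1, -1]]     # matrix of a mapping from F^3 to F^2
B = [[2, 1],
     [0, 3],
     [1, 1]]         # matrix of a mapping from F^2 to F^3
x = [1, 4, 2]

step_by_step = apply(B, apply(A, x))    # first A, then B
in_one_go = apply(matmul(B, A), x)      # the product BA applied once
print(step_by_step, in_one_go)          # [20, 6, 11] [20, 6, 11]
```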

Theorem 1.4. Matrix multiplication is associative, i.e. for any three matrices A,B and C

A(BC) = (AB)C – provided the products exist.

Proof. This follows immediately from Theorem 1.3 and the fact that function composition is

associative.
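Associativity can also be confirmed numerically on an example. This is a sanity check rather than a proof; `matmul` is a hypothetical helper implementing Definition 1.3, and the three matrices are arbitrary choices whose sizes make both products defined.

```python
def matmul(a, b):
    """Product of two matrices stored as lists of rows (Definition 1.3)."""
    k = len(b)
    return [[sum(row[t] * b[t][j] for t in range(k)) for j in range(len(b[0]))]
            for row in a]

A = [[1, 2, 0],
     [0, 1, 3]]      # 2x3
B = [[1, 0],
     [2, 1],
     [0, 4]]         # 3x2
C = [[1, -1],
     [2, 0]]         # 2x2

left = matmul(A, matmul(B, C))     # A(BC)
right = matmul(matmul(A, B), C)    # (AB)C
```

Both groupings give the 2x2 matrix with rows (9, -5) and (28, -2).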

Definition 1.5. Let $A=M^R_S(\varphi)$. Then the rank of $A$ is the rank of $\varphi$.