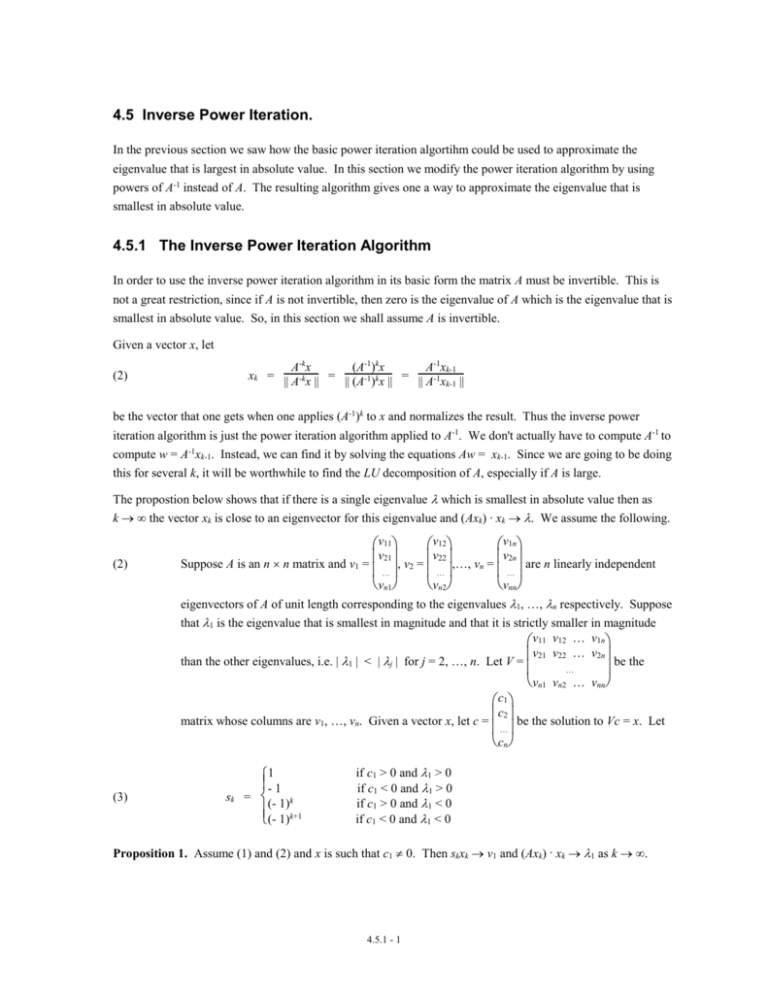

4.5.1 The Inverse Power Iteration Algorithm


4.5 Inverse Power Iteration

In the previous section we saw how the basic power iteration algorithm can be used to approximate the eigenvalue that is largest in absolute value. In this section we modify the power iteration algorithm by using powers of $A^{-1}$ instead of $A$. The resulting algorithm gives a way to approximate the eigenvalue that is smallest in absolute value.

4.5.1 The Inverse Power Iteration Algorithm

In order to use the inverse power iteration algorithm in its basic form, the matrix $A$ must be invertible. This is not a great restriction: if $A$ is not invertible, then zero is an eigenvalue of $A$, and it is the eigenvalue that is smallest in absolute value. So, in this section we shall assume $A$ is invertible. Given a vector $x$, let

$$x_k = \frac{A^{-k}x}{\|A^{-k}x\|} = \frac{(A^{-1})^k x}{\|(A^{-1})^k x\|} = \frac{A^{-1}x_{k-1}}{\|A^{-1}x_{k-1}\|} \tag{1}$$

be the vector that one gets when one applies $(A^{-1})^k$ to $x$ and normalizes the result. Thus the inverse power iteration algorithm is just the power iteration algorithm applied to $A^{-1}$.

We don't actually have to compute $A^{-1}$ to compute $w = A^{-1}x_{k-1}$. Instead, we can find $w$ by solving the equations $Aw = x_{k-1}$. Since we are going to be doing this for several $k$, it is worthwhile to find the LU decomposition of $A$ once and reuse it, especially if $A$ is large.

The proposition below shows that if there is a single eigenvalue which is smallest in absolute value, then as $k \to \infty$ the vector $x_k$ gets close to an eigenvector for this eigenvalue and $(Ax_k) \cdot x_k \to \lambda_1$. We assume the following.

(2) Suppose $A$ is an $n \times n$ matrix and

$$v_1 = \begin{pmatrix} v_{11} \\ v_{21} \\ \vdots \\ v_{n1} \end{pmatrix}, \quad v_2 = \begin{pmatrix} v_{12} \\ v_{22} \\ \vdots \\ v_{n2} \end{pmatrix}, \quad \ldots, \quad v_n = \begin{pmatrix} v_{1n} \\ v_{2n} \\ \vdots \\ v_{nn} \end{pmatrix}$$

are $n$ linearly independent eigenvectors of $A$ of unit length corresponding to the eigenvalues $\lambda_1, \ldots, \lambda_n$ respectively. Suppose that $\lambda_1$ is the eigenvalue that is smallest in magnitude and that it is strictly smaller in magnitude than the other eigenvalues, i.e. $|\lambda_1| < |\lambda_j|$ for $j = 2, \ldots, n$. Let

$$V = \begin{pmatrix} v_{11} & v_{12} & \cdots & v_{1n} \\ v_{21} & v_{22} & \cdots & v_{2n} \\ \vdots & \vdots & & \vdots \\ v_{n1} & v_{n2} & \cdots & v_{nn} \end{pmatrix}$$

be the matrix whose columns are $v_1, \ldots, v_n$.
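Given a vector $x$, let

$$c = \begin{pmatrix} c_1 \\ c_2 \\ \vdots \\ c_n \end{pmatrix}$$

be the solution to $Vc = x$. Let

$$s_k = \begin{cases} 1 & \text{if } c_1 > 0 \text{ and } \lambda_1 > 0 \\ -1 & \text{if } c_1 < 0 \text{ and } \lambda_1 > 0 \\ (-1)^k & \text{if } c_1 > 0 \text{ and } \lambda_1 < 0 \\ (-1)^{k+1} & \text{if } c_1 < 0 \text{ and } \lambda_1 < 0 \end{cases} \tag{3}$$

Proposition 1. Assume (1) and (2) and $x$ is such that $c_1 \neq 0$. Then $s_k x_k \to v_1$ and $(Ax_k) \cdot x_k \to \lambda_1$ as $k \to \infty$.

Proof. The key is that if $v$ is an eigenvector of $A$ corresponding to the eigenvalue $\lambda$, then $v$ is also an eigenvector of $A^{-1}$, but now corresponding to the eigenvalue $1/\lambda$. To see this, note that $Av = \lambda v$. If we multiply by $\lambda^{-1}A^{-1}$ this becomes $\lambda^{-1}v = A^{-1}v$. Because of this connection between the eigenvectors and eigenvalues of $A$ and $A^{-1}$, the assumption (2) implies $v_1, v_2, \ldots, v_n$ are eigenvectors of $A^{-1}$ corresponding to the eigenvalues $\lambda_1^{-1}, \ldots, \lambda_n^{-1}$ respectively. Furthermore, $\lambda_1^{-1}$ is the eigenvalue of $A^{-1}$ that is largest in magnitude, and it is strictly larger in magnitude than the other eigenvalues. It follows from Proposition 1 of section 4.4.1 that $s_k x_k \to v_1$ as $k \to \infty$. This in turn implies $(Ax_k) \cdot x_k \to \lambda_1$ as $k \to \infty$. //

Example 1 (continued). Let $A = \begin{pmatrix} 1 & 3 \\ 4 & 2 \end{pmatrix}$ and $x = \begin{pmatrix} 1 \\ 0 \end{pmatrix}$. Find $x_k$ and $(Ax_k) \cdot x_k$ as $k$ grows and check for convergence.

In this case

$$A^{-1} = \frac{1}{10}\begin{pmatrix} -2 & 3 \\ 4 & -1 \end{pmatrix}.$$

Then

$$A^{-1}x = \frac{1}{5}\begin{pmatrix} -1 \\ 2 \end{pmatrix}, \qquad x_1 = \frac{1}{\sqrt{5}}\begin{pmatrix} -1 \\ 2 \end{pmatrix} \approx \begin{pmatrix} -0.447 \\ 0.894 \end{pmatrix}, \qquad Ax_1 = \frac{1}{\sqrt{5}}\begin{pmatrix} 5 \\ 0 \end{pmatrix},$$

and $(Ax_1) \cdot x_1 = -1$. To find $x_k$ for larger $k$ we use mathematical software; see the next section. As one can see in the next section, $x_{10} \approx \begin{pmatrix} 0.707 \\ -0.707 \end{pmatrix}$ and $(Ax_{10}) \cdot x_{10} \approx -2.00009$.

Recall from section 4.1 that the eigenvalues of $A$ are $5$ and $-2$ with eigenvectors $\begin{pmatrix} 3 \\ 4 \end{pmatrix}$ and $\begin{pmatrix} 1 \\ -1 \end{pmatrix}$. The normalized eigenvector corresponding to $-2$ is $\frac{1}{\sqrt{2}}\begin{pmatrix} 1 \\ -1 \end{pmatrix}$. In this case $x_k$ converges quite fast, up to the sign factor $s_k$, to $\frac{1}{\sqrt{2}}\begin{pmatrix} 1 \\ -1 \end{pmatrix}$.

However, for larger matrices the convergence is usually quite slow. If $\lambda_2$ is the second smallest eigenvalue in absolute value, then the estimate from section 4.4.1 implies

$$\|s_k x_k - v_1\| \le 4C \left| \frac{1/\lambda_2}{1/\lambda_1} \right|^k = 4C \left| \frac{\lambda_1}{\lambda_2} \right|^k.$$

If $|\lambda_2|$ is close to $|\lambda_1|$, then $|\lambda_1/\lambda_2|$ will be close to one and $|\lambda_1/\lambda_2|^k$ converges to zero slowly, so the convergence of $s_k x_k$ to $v_1$ will be slow. The methods considered in the next section will speed up the convergence.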
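As a rough illustration of the iteration described in this section, the following Python sketch repeatedly solves Aw = x_{k-1} and normalizes w. It uses an LU factorization computed once rather than forming A^{-1}. The function names `lu_factor`, `lu_solve` and `inverse_power_iteration` are made up for this sketch and do not come from the text. The LU routine is a plain textbook elimination with partial pivoting, which is an assumption about how one might do it and is only meant for small dense matrices.

```python
import math


def lu_factor(a):
    """LU-factor a square matrix (list of rows) with partial pivoting.

    Returns (lu, perm): lu stores L below the diagonal (unit diagonal
    implied) and U on and above it; perm[i] is the original index of the
    row that ended up in position i.
    """
    n = len(a)
    lu = [[float(t) for t in row] for row in a]
    perm = list(range(n))
    for j in range(n):
        # Use the largest entry in column j as the pivot.
        p = max(range(j, n), key=lambda i: abs(lu[i][j]))
        if lu[p][j] == 0.0:
            raise ValueError("A is singular, so 0 is its smallest eigenvalue")
        lu[j], lu[p] = lu[p], lu[j]
        perm[j], perm[p] = perm[p], perm[j]
        for i in range(j + 1, n):
            lu[i][j] /= lu[j][j]
            for c in range(j + 1, n):
                lu[i][c] -= lu[i][j] * lu[j][c]
    return lu, perm


def lu_solve(lu, perm, b):
    """Solve A w = b using the factors returned by lu_factor."""
    n = len(b)
    w = [float(b[perm[i]]) for i in range(n)]
    for i in range(n):                  # forward substitution with L
        for j in range(i):
            w[i] -= lu[i][j] * w[j]
    for i in reversed(range(n)):        # back substitution with U
        for j in range(i + 1, n):
            w[i] -= lu[i][j] * w[j]
        w[i] /= lu[i][i]
    return w


def normalize(v):
    """Scale v to unit Euclidean length."""
    length = math.sqrt(sum(t * t for t in v))
    return [t / length for t in v]


def inverse_power_iteration(a, x, steps):
    """Return (x_k, (A x_k) . x_k) for k = steps."""
    lu, perm = lu_factor(a)             # factor once, reuse for every k
    xk = normalize(x)
    for _ in range(steps):
        # w = A^{-1} x_{k-1}, obtained by solving A w = x_{k-1}
        xk = normalize(lu_solve(lu, perm, xk))
    n = len(xk)
    axk = [sum(a[i][j] * xk[j] for j in range(n)) for i in range(n)]
    return xk, sum(p * q for p, q in zip(axk, xk))


# Example 1 from the text: A = [[1, 3], [4, 2]], x = (1, 0).
A = [[1, 3], [4, 2]]
x1, r1 = inverse_power_iteration(A, [1, 0], 1)     # about (-0.447, 0.894), -1
x10, r10 = inverse_power_iteration(A, [1, 0], 10)  # about (0.707, -0.707), -2.00009
print(x1, r1)
print(x10, r10)
```

The factorization costs on the order of n^3 operations, while each later solve costs on the order of n^2. This is the reason for factoring A once when the same system is solved for many k.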