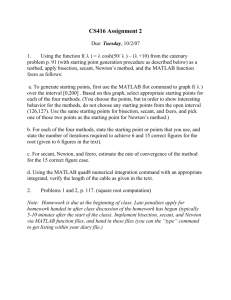

1. Solving Nonlinear Equations


Chapter 1 Solving Nonlinear Equations

Introduction:

Solving Nonlinear Equations

Interval Halving (Bisection) Method

Linear Interpolation Method

Newton’s Method

Muller’s Method

Fixed-Point Iteration: x = g(x) Method

Newton’s Method for polynomials

Bairstow’s Method for Quadratic Factors

Other Methods for Polynomials

Multiple Roots

Solve f(x) = 0: find the roots (zeros) of the equation.

Algebraic functions

$3x + 5 = 0$ (linear equation)

$19x^2 + 5x - 3 = 0$ (quadratic equation)

$45x^3 + x^2 - 5x + 3 = 0$ (cubic equation)

⋮

$a_n x^n + a_{n-1} x^{n-1} + \cdots + a_1 x + a_0 = 0$ (nth-degree polynomial)

Transcendental functions

$4e^x \ln x + \sin x + x^2 - 5 = 0$

To find the root(s) of the equation graphically, find where the curve y = f(x) crosses the x-axis.

1. Bisection Method:

Intermediate Value Theorem

If $f \in C[a, b]$ and $k$ is any number between $f(a)$ and $f(b)$, then there exists a number $c$ in $(a, b)$ for which $f(c) = k$.

A. Algorithm (solve f(x) = 0)

Assume that f(x) is continuous on [x1, x2].

Find two points x1 and x2 such that f(x1) * f(x2) < 0

(x1 and x2 lie on opposite sides of the x-axis).

Repeat

Set x3 = (x1 + x2) / 2

If f(x1) * f(x3) < 0

Set x2 = x3

Else

Set x1 = x3

Endif

Until |x1 - x2| / 2 < (tolerance value) or f(x3) ≈ 0
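The pseudocode above translates almost line for line into Python. This is a minimal sketch: the function name `bisection`, the default `tol`, and the `max_iter` safety cap are assumptions added for illustration, not part of the original notes. For simplicity it also rejects a starting interval whose endpoint is already an exact root.

```python
from typing import Callable


def bisection(f: Callable[[float], float], x1: float, x2: float,
              tol: float = 1e-6, max_iter: int = 200) -> float:
    """Interval halving; assumes f is continuous on [x1, x2] and f(x1) * f(x2) < 0."""
    f1 = f(x1)
    if f1 * f(x2) >= 0:
        raise ValueError("f(x1) and f(x2) must have opposite signs")
    x3 = (x1 + x2) / 2
    for _ in range(max_iter):  # max_iter is a hypothetical safety cap
        x3 = (x1 + x2) / 2     # midpoint of the current interval
        f3 = f(x3)
        if f1 * f3 < 0:        # sign change in [x1, x3]: keep the left half
            x2 = x3
        else:                  # otherwise keep the right half [x3, x2]
            x1, f1 = x3, f3
        if abs(x1 - x2) / 2 < tol or f3 == 0:  # the "Until" test
            break
    return x3


print(bisection(lambda x: x**3 + x**2 - 3*x - 3, 1.0, 2.0))
```

The test function factors as (x + 1)(x² − 3), so the printed value approaches √3 ≈ 1.7320508.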


Examples (p. 42):

$f(x) = x^3 + x^2 - 3x - 3 = 0$

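The maximum error is at most half the width of the last interval. After n iterations,

$\text{error} \le \dfrac{|x_2 - x_1|}{2^n} \le \varepsilon$

How many iterations are required to obtain a maximum error of $\varepsilon$?

Pick $\varepsilon = 0.01$ with $x_1 = 1$ and $x_2 = 2$; then $2^n \ge 100$, so $n \ge \log_2 100 \approx 6.64$ and n = 7,

which makes the result accurate up to one decimal place.

Note: n depends only on the width of the starting interval and on $\varepsilon$; it is completely independent of the characteristics of f(x).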
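Solving $|x_2 - x_1| / 2^n \le \varepsilon$ for n gives $n \ge \log_2(|x_2 - x_1| / \varepsilon)$, which can be evaluated directly. A small sketch; the helper name `bisection_iterations` is hypothetical:

```python
import math


def bisection_iterations(x1: float, x2: float, eps: float) -> int:
    """Smallest integer n with |x2 - x1| / 2**n <= eps (hypothetical helper)."""
    ratio = abs(x2 - x1) / eps
    # If the interval is already narrower than eps, no iterations are needed.
    return max(0, math.ceil(math.log2(ratio)))


print(bisection_iterations(1.0, 2.0, 0.01))  # log2(100) ≈ 6.64, so this prints 7
```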

B. Convergence

When do we stop the iteration? When

-- the problem is solved, i.e., $f(x_k) = 0$ for some $x_k$;

-- the iteration has converged: $x_k \approx x_{k-1}$, i.e., $|x_k - x_{k-1}| < \varepsilon$;

-- the iteration has reached the tolerance value: $f(x_k) \approx 0$.

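C. Conclusion:

Advantages and disadvantages:

-- Cannot handle multiple roots where the curve is tangent to the x-axis (f(x) does not change sign there).

-- Slow, but completely reliable, and applicable to both algebraic and transcendental functions.

-- Guaranteed to work once a sign-changing interval is found: the number of iterations needed for a specified accuracy can be calculated in advance.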


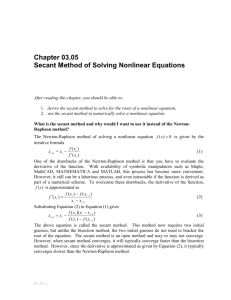

2. Linear Interpolation Method

● The Secant Method

● Linear Interpolation (False Position)

** The Secant Method **

A. Algorithm:

To determine a root of f(x) = 0,

pick two points x0 and x1 that are near the root

(they need not lie on opposite sides of the x-axis).

If |f(x0)| < |f(x1)|

swap x0 with x1

Repeat

Set x2 = x1 - f(x1) * (x0 - x1) / [f(x0) - f(x1)]   (the factor (x0 - x1) / [f(x0) - f(x1)] is 1/m)

Set x0 = x1

Set x1 = x2

Until |f(x2)| < (tolerance value), i.e., f(x2) ≈ 0

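Derivation of the formula. Notation: y = f(x), y0 = f(x0), y1 = f(x1), …

The line through (x0, y0) and (x1, y1) is

$y - y_1 = m(x - x_1)$, where $m = \dfrac{y_1 - y_0}{x_1 - x_0}$

If $(x_2, 0)$ lies on this line, then $0 - y_1 = m(x_2 - x_1)$.

Solving for $x_2$:

$x_2 - x_1 = -y_1 \cdot \dfrac{1}{m}$

$x_3 = x_2 - y_2 \cdot \dfrac{1}{m}$

$x_4 = x_3 - y_3 \cdot \dfrac{1}{m}$

⋯

$x_i = x_{i-1} - y_{i-1} \cdot \dfrac{1}{m}$

where m is recomputed at each step as the slope through the two most recent points.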

B. Examples (p. 46), each using $x_2 = x_1 - y_1 \cdot \dfrac{1}{m}$:

$f(x) = x^3 + x^2 - 3x - 3$

$f(x) = 3x + \sin x - e^x$

$f(x) = x^2 - 2$
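A minimal Python sketch of the secant algorithm above, run on the three example functions. The function name, the default tolerance, and the iteration cap are assumptions; the check for f(x0) = f(x1) is an added guard against the horizontal-secant case, where the next step is undefined.

```python
import math
from typing import Callable


def secant(f: Callable[[float], float], x0: float, x1: float,
           tol: float = 1e-10, max_iter: int = 100) -> float:
    """Secant method; x0 and x1 need not bracket the root."""
    f0, f1 = f(x0), f(x1)
    if abs(f0) < abs(f1):  # make x1 the point with the smaller |f|
        x0, x1, f0, f1 = x1, x0, f1, f0
    for _ in range(max_iter):  # max_iter is an illustrative cap
        if f0 == f1:  # horizontal secant line: the next step is undefined
            raise ZeroDivisionError("f(x0) == f(x1); secant step undefined")
        x2 = x1 - f1 * (x0 - x1) / (f0 - f1)  # (x0 - x1) / (f0 - f1) is 1/m
        f2 = f(x2)
        x0, f0 = x1, f1  # always keep the two most recent points
        x1, f1 = x2, f2
        if abs(f2) < tol:
            return x2
    raise RuntimeError("secant method did not converge within max_iter steps")


print(secant(lambda x: x**3 + x**2 - 3*x - 3, 1.0, 2.0))
print(secant(lambda x: 3*x + math.sin(x) - math.exp(x), 0.0, 1.0))
print(secant(lambda x: x**2 - 2, 1.0, 2.0))
```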


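C. Convergence

In general, it converges faster than the bisection method. With $\varepsilon_i$ the error at step i, the errors approximately satisfy

$|\varepsilon_{i+1}| \approx k\,|\varepsilon_i|^p$

where p is the order of convergence:

Secant Method ----- $p = (1 + \sqrt{5})/2 \approx 1.62$

Newton Method ----- p = 2

Muller's Method ----- p ≈ 1.84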

D. Conclusion

Advantages and disadvantages:

--- Any two starting points can be picked.

--- Converges fairly fast.

--- Always uses the most recent information and thus cannot freeze at one point.

--- The secant method does not always converge, but when it does, it converges faster than the false position method.

If the slope of the secant line is close to 0, the method can diverge. One way around this is to apply the bisection method until the iterate is within a safe region, then apply the secant method.
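One way to realize the bisection-then-secant workaround is sketched below. The switching rule (stop bisecting once the bracket is narrower than a hypothetical `switch_width`) is an assumption made for illustration; the notes do not specify how the safe region is chosen.

```python
from typing import Callable


def bisect_then_secant(f: Callable[[float], float], a: float, b: float,
                       switch_width: float = 1e-2, tol: float = 1e-10,
                       max_iter: int = 100) -> float:
    """Bisection until the bracket is narrow, then secant steps from its ends."""
    fa, fb = f(a), f(b)
    if fa * fb >= 0:
        raise ValueError("f(a) and f(b) must have opposite signs")
    # Phase 1: bisection shrinks [a, b] to a narrow, "safe" bracket.
    # switch_width is a hypothetical tuning parameter.
    while abs(b - a) > switch_width:
        m = (a + b) / 2
        fm = f(m)
        if fa * fm <= 0:  # root in [a, m] (or m is the root itself)
            b, fb = m, fm
        else:             # root in [m, b]
            a, fa = m, fm
    # Phase 2: secant iterations seeded with the two bracket endpoints.
    x0, f0, x1, f1 = a, fa, b, fb
    for _ in range(max_iter):
        if abs(f1) < tol:
            return x1
        if f0 == f1:
            raise ZeroDivisionError("horizontal secant line")
        x2 = x1 - f1 * (x0 - x1) / (f0 - f1)
        x0, f0 = x1, f1
        x1, f1 = x2, f(x2)
    raise RuntimeError("did not converge within max_iter secant steps")


print(bisect_then_secant(lambda x: x**3 + x**2 - 3*x - 3, 1.0, 2.0))
```

The bisection phase only guarantees a narrow starting pair; the secant phase itself is unguarded, so a production version would typically fall back to bisection whenever a secant step leaves the bracket.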

** Linear Interpolation (False Position) **

A. Algorithm:

Find two points x0 and x1 such that f(x0) * f(x1) < 0

(x0 and x1 lie on opposite sides of the x-axis).

Repeat

Set x2 = x1 – f(x1) * {(x0 - x1) / [f(x0) - f(x1)]} ----------- (1/m)

If f(x2) * f(x0) < 0

Set x1 = x2

Else

Set x0 = x2

EndIf

Until |f(x2)| < (tolerance value), i.e., f(x2) ≈ 0


B. Examples

$f(x) = 3x + \sin x - e^x = 0$, x0 = 0, x1 = 1

$f(x) = x^3 + x^2 - 3x - 3 = 0$, x0 = 1, x1 = 2

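Iterations for $f(x) = x^3 + x^2 - 3x - 3$ with x0 = 1, x1 = 2:

| Iteration | Interval [x0, x1] | x2 | f(x0) | f(x1) | f(x2) |
|---|---|---|---|---|---|
| 1 | [1, 2] | 1.57142 | -4.0 | 3.0 | -1.36449 |
| 2 | [1.57142, 2] | 1.70540 | -1.364 | 3.0 | -0.24784 |
| 3 | [1.70542, 2] | 1.72788 | -0.24784 | 3.0 | -0.03936 |
| 4 | [1.72788, 2] | 1.73140 | -0.03936 | 3.0 | -0.00615 |
| 5 | [1.73140, 2] | … | … | … | … |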
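The false position loop can be sketched in Python as follows; it also records each x2 so the iterates can be compared with the hand-computed values above. The function name, tolerance, and iteration cap are illustrative assumptions.

```python
from typing import Callable, List, Tuple


def false_position(f: Callable[[float], float], x0: float, x1: float,
                   tol: float = 1e-10, max_iter: int = 200
                   ) -> Tuple[float, List[float]]:
    """Regula falsi: keeps a bracket with f(x0) * f(x1) < 0 at every step."""
    f0, f1 = f(x0), f(x1)
    if f0 * f1 >= 0:
        raise ValueError("f(x0) and f(x1) must have opposite signs")
    history: List[float] = []  # every x2, for comparison with hand calculations
    for _ in range(max_iter):  # max_iter is an illustrative cap
        x2 = x1 - f1 * (x0 - x1) / (f0 - f1)  # x-intercept of the chord
        f2 = f(x2)
        history.append(x2)
        if abs(f2) < tol:
            return x2, history
        if f2 * f0 < 0:  # root between x0 and x2: replace x1
            x1, f1 = x2, f2
        else:            # root between x2 and x1: replace x0
            x0, f0 = x2, f2
    raise RuntimeError("false position did not converge within max_iter steps")


root, iterates = false_position(lambda x: x**3 + x**2 - 3*x - 3, 1.0, 2.0)
print(root, [round(x, 5) for x in iterates[:4]])
```

For this function the first two recorded values are about 1.57143 and 1.70541, and the right endpoint stays at x1 = 2 throughout. This one-sided behavior is why false position slows down when f(x) is strongly curved on the interval.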

C. Convergence

In general, it converges faster than the bisection method.

Check whether:

-- the iteration has reached the tolerance value: $|f(x_r)| <$ tolerance value, i.e., $f(x_r) \approx 0$;

-- the iteration has converged: $|f(x_k) - f(x_{k-1})| < \varepsilon$.

D. Conclusion:

** Converges faster than bisection and is more accurate.

(The false position method uses a straight line to predict the new point x2, so each step requires more computation than a bisection step.)

** If f(x) has significant curvature between x0 and x1, the speed of convergence suffers.

*** Newton’s Method ***

A. Algorithm: find x_r such that f(x_r) = 0

Find a point x0 that is reasonably close to the root.

Compute f(x0) and f'(x0).

If f(x0) ≠ 0 and f'(x0) ≠ 0

Repeat

Set x1 = x0

Set x0 = x0 - f(x0) / f'(x0)

Until (|x0 - x1| < tolerance value 1) OR (|f(x0)| < tolerance value 2)

EndIf
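A Python sketch of Newton's algorithm, keeping its Repeat/Until structure and its two tolerances. The function name, tolerance defaults, and iteration cap are assumptions; the derivative is supplied by hand (for Example 1, f'(x) = 3 + cos x − e^x).

```python
import math
from typing import Callable


def newton(f: Callable[[float], float], fprime: Callable[[float], float],
           x0: float, tol1: float = 1e-10, tol2: float = 1e-12,
           max_iter: int = 50) -> float:
    """Newton's method with two stopping tests: step size (tol1) and residual (tol2)."""
    if f(x0) == 0:
        return x0
    if fprime(x0) == 0:
        raise ZeroDivisionError("f'(x0) = 0; pick a different starting point")
    for _ in range(max_iter):  # max_iter is an illustrative cap
        x1 = x0                # remember the previous iterate
        d = fprime(x1)
        if d == 0:
            raise ZeroDivisionError("f'(x) became 0 during the iteration")
        x0 = x1 - f(x1) / d    # Newton step: x - f(x) / f'(x)
        if abs(x0 - x1) < tol1 or abs(f(x0)) < tol2:
            return x0
    raise RuntimeError("Newton's method did not converge within max_iter steps")


# Example 1: f(x) = 3x + sin(x) - e^x, f'(x) = 3 + cos(x) - e^x, x0 = 0
root = newton(lambda x: 3*x + math.sin(x) - math.exp(x),
              lambda x: 3 + math.cos(x) - math.exp(x), 0.0)
print(root)
```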


B. Examples

1. $f(x) = 3x + \sin x - e^x = 0$, x0 = 0.0 (p. 49)

| Iteration | x0 | x1 |
|---|---|---|
| 1 | 0.0 | 0.333333 |
| 2 | 0.33333 | 0.36017 |
| 3 | 0.36017 | 0.3604217 |

After three iterations the root is correct to seven significant digits.

2. Find the nth root of a number N: $\sqrt{N}$, $\sqrt[3]{N}$, …, $\sqrt[n]{N}$

For example, $x^2 = k$ where k = 1, 2, 3, …
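Since this example sits under Newton's method, a natural approach is to apply Newton's method to f(x) = x^n − N, whose derivative is f'(x) = n x^(n−1). The sketch below assumes N > 0 and n ≥ 1; the function name `nth_root` and the starting guess max(N, 1) are illustrative choices, not taken from the notes.

```python
def nth_root(N: float, n: int, tol: float = 1e-12, max_iter: int = 200) -> float:
    """Approximate N**(1/n) by Newton's method on f(x) = x**n - N (assumes N > 0, n >= 1)."""
    if N <= 0 or n < 1:
        raise ValueError("this sketch only handles N > 0 and n >= 1")
    x = max(N, 1.0)  # start at or above the root, so iterates decrease toward it
    for _ in range(max_iter):
        # x - f(x) / f'(x) with f'(x) = n * x**(n - 1)
        x_new = x - (x**n - N) / (n * x**(n - 1))
        if abs(x_new - x) <= tol * x_new:  # relative step-size test
            return x_new
        x = x_new
    raise RuntimeError("did not converge within max_iter steps")


print(nth_root(2, 2), nth_root(27, 3), nth_root(540, 5))
```

Starting above the root matters here: because x^n − N is convex for x > 0, Newton iterates started to the right of the root decrease monotonically toward it instead of overshooting.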

3. Complex Roots

$f(x) = x^2 + x + 1 = 0$, with $f'(x) = 2x + 1$

Let x0 = 1.0 + 1.0i

…….
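Newton's method works unchanged in complex arithmetic, so a complex starting value can reach a complex root. The sketch assumes Python's built-in `complex` type; the function name and tolerances are illustrative. The exact roots of x² + x + 1 are (−1 ± i√3)/2, and from x0 = 1.0 + 1.0i the iterates are expected to approach (−1 + i√3)/2.

```python
from typing import Callable


def newton_complex(f: Callable[[complex], complex],
                   fprime: Callable[[complex], complex],
                   z0: complex, tol: float = 1e-12, max_iter: int = 100) -> complex:
    """Newton's method carried out in complex arithmetic (hypothetical helper)."""
    z = z0
    for _ in range(max_iter):
        d = fprime(z)
        if d == 0:
            raise ZeroDivisionError("f'(z) = 0")
        step = f(z) / d
        z -= step
        if abs(step) < tol:  # abs() of a complex number is its modulus
            return z
    raise RuntimeError("did not converge within max_iter steps")


# f(x) = x^2 + x + 1, f'(x) = 2x + 1, starting from x0 = 1.0 + 1.0i
root = newton_complex(lambda z: z * z + z + 1, lambda z: 2 * z + 1, 1.0 + 1.0j)
print(root)  # should be close to -0.5 + 0.8660254i
```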
