Lecture 11

advertisement

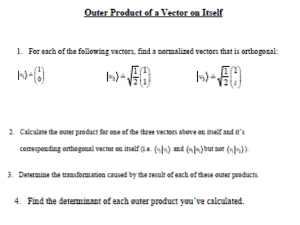

Lecture 11

Lecture annotation

The eigenvalue problem and its connection with characteristic (eigen-)oscillation problems and structural stability problems.

Introduction

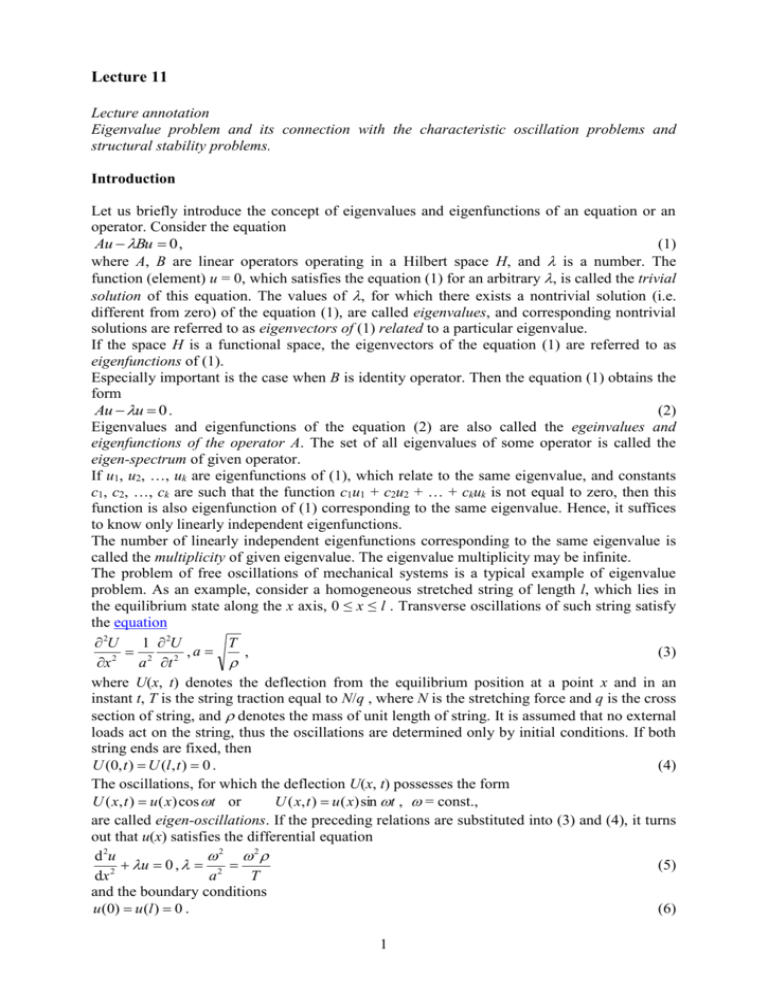

Let us briefly introduce the concept of eigenvalues and eigenfunctions of an equation or an operator. Consider the equation

$$Au - \lambda Bu = 0, \tag{1}$$

where $A$, $B$ are linear operators acting in a Hilbert space $H$, and $\lambda$ is a number. The function (element) $u = 0$, which satisfies equation (1) for every $\lambda$, is called the trivial solution of this equation. The values of $\lambda$ for which equation (1) has a nontrivial solution (i.e. a solution different from zero) are called eigenvalues. The corresponding nontrivial solutions are called eigenvectors of (1) belonging to that eigenvalue.

If $H$ is a function space, the eigenvectors of equation (1) are called eigenfunctions of (1).

Especially important is the case when $B$ is the identity operator. Then equation (1) takes the form

$$Au - \lambda u = 0. \tag{2}$$

The eigenvalues and eigenfunctions of equation (2) are also called the eigenvalues and eigenfunctions of the operator $A$. The set of all eigenvalues of an operator is called the eigen-spectrum of that operator.

Let $u_1, u_2, \dots, u_k$ be eigenfunctions of (1) belonging to the same eigenvalue, and let the constants $c_1, c_2, \dots, c_k$ be such that $c_1u_1 + c_2u_2 + \dots + c_ku_k \neq 0$. Then this function is also an eigenfunction of (1) corresponding to the same eigenvalue. Hence it suffices to know only the linearly independent eigenfunctions.

The number of linearly independent eigenfunctions corresponding to the same eigenvalue is called the multiplicity of that eigenvalue. The multiplicity of an eigenvalue may be infinite.

The problem of free oscillations of mechanical systems is a typical example of an eigenvalue problem. As an example, consider a homogeneous stretched string of length $l$ which, in the equilibrium state, lies along the $x$ axis, $0 \le x \le l$. Transverse oscillations of such a string satisfy the equation

$$\frac{\partial^2 U}{\partial x^2} = \frac{1}{a^2}\,\frac{\partial^2 U}{\partial t^2}, \qquad a^2 = \frac{T}{\rho}, \tag{3}$$

In (3), $U(x, t)$ denotes the deflection from the equilibrium position at the point $x$ and the instant $t$. $T$ is the string tension, equal to $N/q$, where $N$ is the stretching force and $q$ is the cross-section of the string. $\rho$ denotes the mass of a unit length of the string. It is assumed that no external loads act on the string, so the oscillations are determined only by the initial conditions. If both ends of the string are fixed, then

$$U(0, t) = U(l, t) = 0. \tag{4}$$

The oscillations for which the deflection $U(x, t)$ has the form

$$U(x, t) = u(x)\cos\omega t \quad \text{or} \quad U(x, t) = u(x)\sin\omega t, \qquad \omega = \text{const},$$

are called eigen-oscillations. If these relations are substituted into (3) and (4), it turns out that $u(x)$ satisfies the differential equation

$$\frac{d^2u}{dx^2} + \lambda u = 0, \qquad \lambda = \frac{\omega^2}{a^2} = \frac{\rho\,\omega^2}{T}, \tag{5}$$

and the boundary conditions

$$u(0) = u(l) = 0. \tag{6}$$

It follows from physical considerations that $u(x) \not\equiv 0$; otherwise the string would not oscillate at all. Thus the free oscillation problem for a string reduces to the eigenvalue problem for the operator $-d^2/dx^2$ with the boundary conditions (6). If the string is inhomogeneous, then $\rho$ is a function of $x$, $\rho = \rho(x)$. In this case the eigen-oscillation problem leads to the same boundary conditions (6) and to the equation

$$\frac{d^2u}{dx^2} + \lambda\rho(x)\,u = 0. \tag{7}$$

In this case, however, $\lambda = \omega^2/T$. Equation (7) is a special case of (1) in which $A = -d^2/dx^2$ and $B$ is the operator of multiplication by the function $\rho(x)$. Both operators are defined for functions that vanish at the ends of the string.
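For the homogeneous string, problem (5), (6) has the eigenvalues $\lambda_k = (k\pi/l)^2$ with eigenfunctions $u_k = \sin(k\pi x/l)$, $k = 1, 2, \dots$. By (5), the eigen-frequencies are then $\omega_k = a k\pi/l$; this is a standard result, not derived in this lecture.

The sketch below assumes a uniform grid with $N$ subintervals and does three things:

- it replaces $-d^2/dx^2$ by the usual second-difference matrix, with the end values fixed at zero;
- it checks that the sampled sine modes are exact eigenvectors of that matrix, with eigenvalues $\mu_k = (4/h^2)\sin^2(k\pi h/(2l))$;
- it compares $\mu_k$ with $(k\pi/l)^2$.

The function names are illustrative only.

```python
import math

def second_difference(v, h):
    """Apply the finite-difference approximation of -d^2/dx^2 to the interior
    values v, with the end values u(0) = u(l) = 0 from conditions (6)."""
    n = len(v)
    out = []
    for j in range(n):
        left = v[j - 1] if j > 0 else 0.0
        right = v[j + 1] if j < n - 1 else 0.0
        out.append((-left + 2.0 * v[j] - right) / h ** 2)
    return out

def string_mode(k, l=1.0, N=100):
    """Return (discrete eigenvalue mu_k, max residual |A v - mu_k v|, exact (k*pi/l)^2)."""
    h = l / N
    x = [j * h for j in range(1, N)]                  # interior grid points
    v = [math.sin(k * math.pi * xj / l) for xj in x]  # sampled eigenfunction u_k
    mu = 4.0 / h ** 2 * math.sin(k * math.pi * h / (2.0 * l)) ** 2
    Av = second_difference(v, h)
    residual = max(abs(a - mu * vj) for a, vj in zip(Av, v))
    return mu, residual, (k * math.pi / l) ** 2

for k in (1, 2, 3):
    mu, res, exact = string_mode(k)
    print(f"k = {k}: mu_k = {mu:.5f}, (k*pi/l)^2 = {exact:.5f}, residual = {res:.1e}")
```

Because $\sin s < s$ for $s > 0$, the discrete values lie slightly below $(k\pi/l)^2$. The gap shrinks like $h^2$ as the grid is refined.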

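Next, consider the eigen-oscillations of a membrane. If the membrane is not subjected to external loads, the equation of transverse oscillations has the form

$$\Delta U \equiv \frac{\partial^2 U}{\partial x^2} + \frac{\partial^2 U}{\partial y^2} = \frac{1}{a^2}\,\frac{\partial^2 U}{\partial t^2}. \tag{8}$$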

In equilibrium, let the membrane occupy a domain $\Omega$ of the $xy$ plane with the boundary $S$. If the membrane is fixed at the boundary, then

$$U\big|_S = 0. \tag{9}$$

The eigen-oscillations of the membrane have the form

$$U(x, y, t) = u(x, y)\cos\omega t \quad \text{or} \quad U(x, y, t) = u(x, y)\sin\omega t.$$

This leads to the differential equation

$$\Delta u + \lambda u = 0, \qquad \lambda = \frac{\omega^2}{a^2}, \tag{10}$$

in the domain $\Omega$ with the boundary condition

$$u\big|_S = 0. \tag{11}$$

Since $u(x, y) \not\equiv 0$, the problem reduces to finding the eigenvalues and eigenfunctions of the operator $-\Delta$ with the boundary condition (11).
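As an illustration of multiplicity, take a rectangular membrane, $\Omega = (0, p) \times (0, q)$. Separation of variables gives the eigenfunctions $u_{mn} = \sin(m\pi x/p)\sin(n\pi y/q)$ of problem (10), (11), with $\lambda_{mn} = \pi^2(m^2/p^2 + n^2/q^2)$, $m, n = 1, 2, \dots$. This is a standard result, assumed here rather than taken from the lecture.

For the unit square, $\lambda_{mn} = \pi^2(m^2 + n^2)$, so different pairs $(m, n)$ can share one eigenvalue. The sketch below (the helper name is hypothetical) lists the lowest eigenvalues in units of $\pi^2$ together with their multiplicities.

```python
from collections import Counter

def square_membrane_spectrum(count, max_index=50):
    """Lowest `count` eigenvalues of -Laplace on the unit square (u = 0 on S),
    in units of pi^2, paired with their multiplicities.

    Each pair (m, n), m, n >= 1, contributes sin(m pi x) sin(n pi y) with
    lambda = pi^2 (m^2 + n^2). Values up to max_index**2 + 1 are complete,
    because every pair producing them has m, n <= max_index.
    """
    levels = Counter(m * m + n * n
                     for m in range(1, max_index + 1)
                     for n in range(1, max_index + 1))
    return sorted(levels.items())[:count]

for value, multiplicity in square_membrane_spectrum(6):
    print(f"lambda = {value} pi^2, multiplicity {multiplicity}")
```

For instance, $\lambda = 5\pi^2$ has multiplicity 2, with the linearly independent eigenfunctions $\sin\pi x\,\sin 2\pi y$ and $\sin 2\pi x\,\sin\pi y$.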

Another class of problems leading to an eigenvalue problem concerns the stability of mechanical systems. We explain it using the following example.

Consider the deflection of a plate subjected to in-plane loading proportional to a parameter $\lambda$. Let this loading generate the stresses $\lambda T_x$, $\lambda T_{xy}$, $\lambda T_y$. There is no transverse loading, and the plate boundary $L$ is clamped. The plate deflection satisfies the homogeneous differential equation (homogeneous because the transverse loading $q(x, y) \equiv 0$)

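$$\Delta^2 w - \frac{\lambda h}{D}\left(T_x\frac{\partial^2 w}{\partial x^2} + 2T_{xy}\frac{\partial^2 w}{\partial x\,\partial y} + T_y\frac{\partial^2 w}{\partial y^2}\right) = 0 \tag{12}$$

and the homogeneous boundary conditions

$$w\big|_L = 0, \qquad \frac{\partial w}{\partial n}\bigg|_L = 0. \tag{13}$$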

The system (12), (13) has the trivial solution $w \equiv 0$. This indicates that the undeflected plate is an equilibrium state. Suppose $\lambda$ is sufficiently small, i.e.

$$|\lambda| < \frac{CD}{2Nh}, \tag{14}$$

where $N$ denotes $\max(T_x, T_{xy}, T_y)$. Then the trivial solution is the only solution, and thus the undeflected shape of the plate is unique. At the same time, it is obvious that sufficiently small changes of $\lambda$ do not violate inequality (14). They therefore do not disturb the undeflected equilibrium shape of the plate, which is thus stable with respect to small changes of the parameter $\lambda$.

If $\lambda$ does not satisfy inequality (14), it may happen that for some values of $\lambda$, called critical values, equation (12) has a nontrivial solution satisfying the boundary conditions (13). In this case, a deflected (buckled) shape of the plate may occur in addition to the undeflected one. When $\lambda$ approaches a critical value, the undeflected shape of the plate becomes unstable.

Clearly, it is important to find the critical values of the parameter $\lambda$, especially the lowest critical value. In principle, this is the eigenvalue problem for equation (12) with the boundary conditions (13).

In general, the stability problem reduces to an eigenvalue problem for a linear equation, even though the nonlinear equations describing the problem form the starting point of the analysis.

Eigenvalues and eigenfunctions of a symmetric operator

Theorem 1. A symmetric operator has real eigenvalues.

Let $\lambda_0$ be an eigenvalue and $\varphi_0$ a corresponding eigenfunction of the symmetric operator $A$. Then

$$A\varphi_0 = \lambda_0\varphi_0.$$

Taking the scalar product of this equality with $\varphi_0$ gives

$$\lambda_0 = \frac{(A\varphi_0, \varphi_0)}{\|\varphi_0\|^2}. \tag{15}$$

Since $A$ is symmetric, $(A\varphi_0, \varphi_0) = (\varphi_0, A\varphi_0)$. At the same time, $(\varphi_0, A\varphi_0) = \overline{(A\varphi_0, \varphi_0)}$ (a property of the scalar product). Hence the number $(A\varphi_0, \varphi_0)$ equals its complex conjugate, so it is real. By (15), $\lambda_0$ is a real number.

Remark 1. The eigenvalues of a positive operator, and in particular of a positive definite operator, are positive numbers. This follows immediately from relation (15). (From now on, we consider only real spaces in eigenvalue problems.)

Theorem 2. Eigenfunctions of a symmetric operator corresponding to different eigenvalues are mutually orthogonal.

Let $\lambda_1$, $\lambda_2$ be distinct eigenvalues of the symmetric operator $A$, and let $\varphi_1$, $\varphi_2$ be corresponding eigenfunctions. Then

$$A\varphi_1 = \lambda_1\varphi_1, \qquad A\varphi_2 = \lambda_2\varphi_2. \tag{16}$$

Take the scalar product of the first equation with $\varphi_2$ and of the second equation with $\varphi_1$, and subtract. This gives

$$(A\varphi_1, \varphi_2) - (\varphi_1, A\varphi_2) = (\lambda_1 - \lambda_2)(\varphi_1, \varphi_2).$$

The left-hand side is zero because $A$ is symmetric. Hence, since $\lambda_1 - \lambda_2 \neq 0$, we must have $(\varphi_1, \varphi_2) = 0$.

If several linearly independent eigenfunctions belong to a given eigenvalue, they can be orthogonalized by the Schmidt orthogonalization process. Thus, in what follows, we assume that the eigenfunctions of a symmetric operator form an orthonormal system.
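The Schmidt process can be sketched on a small symmetric matrix standing in for $A$; the matrix is an assumption made only to keep the example finite-dimensional. It has two eigenvalues:

- the eigenvalue 4, with eigenvector $(1, 1, 1)$;
- the eigenvalue 1, of multiplicity 2, whose eigenspace is the plane $x + y + z = 0$.

Two non-orthogonal eigenvectors of the eigenvalue 1 are orthonormalized. The results are still eigenvectors for the same eigenvalue, consistent with the earlier remark that nonzero linear combinations of such eigenfunctions remain eigenfunctions.

```python
import math

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def matvec(A, v):
    return [dot(row, v) for row in A]

def gram_schmidt(vectors):
    """Schmidt orthogonalization: turn linearly independent vectors into an
    orthonormal system spanning the same subspace."""
    basis = []
    for v in vectors:
        w = [float(x) for x in v]
        for q in basis:
            c = dot(w, q)                      # component of w along q
            w = [wi - c * qi for wi, qi in zip(w, q)]
        norm = math.sqrt(dot(w, w))
        basis.append([wi / norm for wi in w])
    return basis

# Symmetric matrix: eigenvalue 4 with eigenvector (1, 1, 1),
# eigenvalue 1 of multiplicity 2 with eigenspace x + y + z = 0.
A = [[2.0, 1.0, 1.0],
     [1.0, 2.0, 1.0],
     [1.0, 1.0, 2.0]]

# Two eigenvectors for the eigenvalue 1 that are not orthogonal to each other.
q1, q2 = gram_schmidt([[1, -1, 0], [1, 0, -1]])

for q in (q1, q2):
    residual = max(abs(a - b) for a, b in zip(matvec(A, q), q))   # |Aq - 1*q|
    print(f"q = {[round(x, 4) for x in q]}, residual {residual:.1e}")
print(f"(q1, q2) = {dot(q1, q2):.1e}")
```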

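Theorem 3. Eigenfunctions of a positive operator are orthogonal in energy, i.e. with respect to the energy scalar product $[u, v] = (Au, v)$.

Let $\lambda_1$ be an eigenvalue and $\varphi_1$ an eigenfunction of the positive operator $A$, so that

$$A\varphi_1 = \lambda_1\varphi_1, \tag{17}$$

and let $\varphi_2 \neq \varphi_1$ be another eigenfunction of the same operator. By the previous assumptions, $\varphi_1$ and $\varphi_2$ are orthogonal in the ordinary sense, i.e. $(\varphi_1, \varphi_2) = 0$. Taking the scalar product of (17) with $\varphi_2$ gives $(A\varphi_1, \varphi_2) = \lambda_1(\varphi_1, \varphi_2) = 0$, i.e. $[\varphi_1, \varphi_2] = 0$, which completes the proof.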
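Theorems 2 and 3 can be checked numerically on a discrete stand-in for a positive operator. For illustration only, assume the second-difference approximation of $-d^2/dx^2$ on $(0, 1)$ with zero end values. This is a symmetric, positive definite matrix whose eigenvectors are the sampled modes $\sin(k\pi x_j)$.

The sketch computes the largest off-diagonal ordinary products $(u_k, u_m)$ and energy products $(Au_k, u_m)$. Both should vanish up to rounding error.

```python
import math

N = 8          # number of subintervals of (0, 1), so h = 1/N
h = 1.0 / N

def apply_A(v):
    """Second-difference approximation of -d^2/dx^2 with zero end values."""
    n = len(v)
    return [(-(v[j - 1] if j > 0 else 0.0) + 2.0 * v[j]
             - (v[j + 1] if j < n - 1 else 0.0)) / h ** 2 for j in range(n)]

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

# Eigenvectors of the matrix: sampled sine modes for k = 1, ..., N - 1,
# belonging to the distinct eigenvalues (4/h^2) sin^2(k pi h / 2).
modes = [[math.sin(k * math.pi * j * h) for j in range(1, N)] for k in range(1, N)]
pairs = [(k, m) for k in range(N - 1) for m in range(N - 1) if k != m]

ordinary = max(abs(dot(modes[k], modes[m])) for k, m in pairs)          # Theorem 2
energy = max(abs(dot(apply_A(modes[k]), modes[m])) for k, m in pairs)   # Theorem 3
print(f"max |(u_k, u_m)| = {ordinary:.1e}, max |(A u_k, u_m)| = {energy:.1e}")
```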


Remark 2. It quite often happens that the system of eigenfunctions is not only orthogonal but also complete in energy. Such a system can then be used to construct the solution of the equation

$$Au = f \tag{18}$$

in the form of an orthogonal series expansion.

Let the system of eigenfunctions of a positive definite operator $A$ be complete in energy. This system is also orthonormal in the ordinary sense. Denote by $\varphi_n$, $n = 1, 2, \dots$, the eigenfunctions of $A$ and by $\lambda_n$ the corresponding eigenvalues. (If several eigenfunctions belong to the same eigenvalue, some of the numbers $\lambda_n$ are identical.) Then

$$A\varphi_n = \lambda_n\varphi_n, \qquad (\varphi_n, \varphi_m) = \begin{cases} 0, & n \neq m,\\ 1, & n = m,\end{cases} \qquad [\varphi_m, \varphi_n] = 0, \quad n \neq m.$$

Taking the scalar product of the first equality with $\varphi_n$, we get

$$(A\varphi_n, \varphi_n) = \lambda_n\|\varphi_n\|^2 = \lambda_n. \tag{19}$$

Equation (19) shows that the functions $\varphi_n$ are not orthonormal in energy (unless $\lambda_n = 1$). If we set

$$\psi_n = \frac{\varphi_n}{\sqrt{\lambda_n}}, \tag{20}$$

we obtain a system of functions that is orthonormal in energy and, by assumption, complete in energy.
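To make Remark 2 concrete, expand the solution of (18) in the energy-orthonormal system (20). The coefficients are $[u, \psi_n] = (Au, \psi_n) = (f, \psi_n)$, hence

$$u = \sum_n (f, \psi_n)\,\psi_n = \sum_n \frac{(f, \varphi_n)}{\lambda_n}\,\varphi_n.$$

As an assumed example, take $A = -d^2/dx^2$ on $(0, \pi)$ with $u(0) = u(\pi) = 0$ and $f \equiv 1$:

- the eigenfunctions are $\varphi_n = \sqrt{2/\pi}\,\sin nx$, with $\lambda_n = n^2$;
- the exact solution is $u = x(\pi - x)/2$.

The sketch sums the series directly, using $(f, \varphi_n) = \sqrt{2/\pi}\,(1 - \cos n\pi)/n$. The function names are illustrative.

```python
import math

def series_solution(x, terms=2000):
    """Partial sum of u = sum_n (f, phi_n) / lambda_n * phi_n for -u'' = 1 on (0, pi),
    u(0) = u(pi) = 0, with phi_n = sqrt(2/pi) sin(n x) and lambda_n = n^2."""
    total = 0.0
    for n in range(1, terms + 1):
        f_n = math.sqrt(2.0 / math.pi) * (1.0 - math.cos(n * math.pi)) / n   # (f, phi_n)
        phi_n = math.sqrt(2.0 / math.pi) * math.sin(n * x)
        total += f_n / n ** 2 * phi_n
    return total

def exact_solution(x):
    return x * (math.pi - x) / 2.0

for x in (0.3, math.pi / 2, 2.5):
    print(f"x = {x:.4f}: series {series_solution(x):.6f}, exact {exact_solution(x):.6f}")
```

Only odd $n$ contribute here, and the coefficients decay like $1/n^3$. A modest number of terms is therefore enough.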

Energetic theorems for eigenvalue problems

Under certain conditions, the eigenvalue problem can be reduced to a variational problem. Let the symmetric operator $A$ satisfy the inequality

$$(Au, u) \ge k\|u\|^2, \tag{21}$$

where $k$ is a real number, not necessarily positive. Such an operator is called bounded below. In particular, every positive operator is bounded below, because it satisfies inequality (21) with $k = 0$.

If $A$ is bounded below, then $(Au, u)/(u, u) \ge k$ for every $u \neq 0$ in $D_A$. Since this quotient is bounded below, it has an infimum $d$; obviously $d \ge k$. We now prove the following theorems.

Theorem 5. Let $A$ be a symmetric operator bounded below, and let $d$ be the infimum of the values of the functional

$$\frac{(Au, u)}{(u, u)}. \tag{22}$$

If there exists a function $u_0 \in D_A$ such that

$$\frac{(Au_0, u_0)}{(u_0, u_0)} = d, \tag{23}$$

then $d$ is the lowest eigenvalue of the operator $A$ and $u_0$ is a corresponding eigenfunction.

Proof. Let $\eta$ be an arbitrary function from the domain of definition $D_A$, and let $t$ be an arbitrary real number. Consider the function of the real variable $t$

$$\Phi(t) = \frac{(A(u_0 + t\eta), u_0 + t\eta)}{(u_0 + t\eta, u_0 + t\eta)} = \frac{t^2(A\eta, \eta) + 2t(Au_0, \eta) + (Au_0, u_0)}{t^2(\eta, \eta) + 2t(u_0, \eta) + (u_0, u_0)},$$

where the middle terms combine because $A$ is symmetric. This function attains its minimum at $t = 0$, because $\Phi(0) = d$ is the infimum. Hence $\Phi'(0) = 0$. Computing $\Phi'(0)$, we easily obtain

$$(u_0, u_0)(Au_0, \eta) - (Au_0, u_0)(u_0, \eta) = 0,$$

or, using (23),

$$(Au_0 - du_0, \eta) = 0. \tag{24}$$

The set $D_A$ is dense in the underlying Hilbert space, so it follows from (24) that $Au_0 - du_0 = 0$. Thus $d$ is an eigenvalue and $u_0$ is an eigenfunction of the operator $A$.

The fact that $d$ is the lowest eigenvalue of $A$ follows from relation (15). Indeed, if $\lambda_1$ is an eigenvalue and $u_1$ a corresponding eigenfunction of $A$, then

$$\lambda_1 = \frac{(Au_1, u_1)}{(u_1, u_1)} \ge \min_{u \in D_A}\frac{(Au, u)}{(u, u)} = d. \tag{25}$$

By Theorem 5, the eigenvalue problem for a symmetric operator bounded below reduces to finding a function that minimizes the functional (22).

This problem can be reformulated in a more convenient form. Let $v = u/\|u\|$. Then $\|v\| = 1$ and

$$\frac{(Au, u)}{(u, u)} = (Av, v). \tag{26}$$

Denote $v$ again by $u$. Our variational problem can now be stated as follows: find the minimum of the functional

$$(Au, u) \tag{27}$$

subject to the constraint

$$(u, u) = 1. \tag{28}$$
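The functional (22) can be evaluated for trial functions to see how the minimum $d$ is approached from above. As a minimal sketch, assume $A = -d^2/dx^2$ on $(0, 1)$ with $u(0) = u(1) = 0$. Its lowest eigenvalue is $\pi^2 \approx 9.8696$, a standard fact not derived here. Integration by parts gives $(Au, u) = \int_0^1 u'^2\,dx$, so only $u'$ is needed.

The three trial functions used below give:

| Trial function | Value of (22) |
|---|---|
| $x(1 - x)$ | $10$ |
| $x(1 - x^2)$ | $10.5$ |
| $\sin \pi x$ (exact eigenfunction) | $\pi^2$ |

The quadrature rule and the names are illustrative choices.

```python
import math

def simpson(g, a, b, n=2000):
    """Composite Simpson rule on [a, b] with an even number n of subintervals."""
    h = (b - a) / n
    s = g(a) + g(b)
    for i in range(1, n):
        s += (4 if i % 2 else 2) * g(a + i * h)
    return s * h / 3

def rayleigh_quotient(u, du):
    """(Au, u) / (u, u) for A = -d^2/dx^2 on (0, 1) with u(0) = u(1) = 0.
    After integration by parts, (Au, u) is the integral of u'(x)^2."""
    num = simpson(lambda x: du(x) ** 2, 0.0, 1.0)
    den = simpson(lambda x: u(x) ** 2, 0.0, 1.0)
    return num / den

# Trial functions (u, u'), all vanishing at x = 0 and x = 1.
trials = {
    "x(1-x)": (lambda x: x * (1 - x), lambda x: 1 - 2 * x),
    "x(1-x^2)": (lambda x: x * (1 - x * x), lambda x: 1 - 3 * x * x),
    "sin(pi x)": (lambda x: math.sin(math.pi * x),
                  lambda x: math.pi * math.cos(math.pi * x)),
}
quotients = {name: rayleigh_quotient(u, du) for name, (u, du) in trials.items()}
for name, q in quotients.items():
    print(f"{name:10s} quotient = {q:.6f}  (lowest eigenvalue pi^2 = {math.pi ** 2:.6f})")
```

Every admissible trial function gives a value at least $d$, in line with (25). The closer the trial function is to the first eigenfunction, the smaller the excess.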

Now we show how to find the other eigenvalues.

Theorem 6. Let $\lambda_1 \le \lambda_2 \le \dots \le \lambda_n$ be the first $n$ successive eigenvalues of the symmetric operator $A$ bounded below, and let $u_1, u_2, \dots, u_n$ be corresponding orthonormal eigenfunctions. Let there exist a function $u = u_{n+1} \neq 0$ minimizing the functional (22) subject to the subsidiary conditions

$$(u, u_1) = 0, \quad (u, u_2) = 0, \quad \dots, \quad (u, u_n) = 0. \tag{29}$$

Then $u_{n+1}$ is an eigenfunction of the operator $A$ corresponding to the eigenvalue

$$\lambda_{n+1} = \frac{(Au_{n+1}, u_{n+1})}{(u_{n+1}, u_{n+1})}. \tag{30}$$

This eigenvalue is the next eigenvalue after $\lambda_n$.

Proof. Let $\eta$ be an arbitrary function from the domain of definition $D_A$ of the operator $A$, and let

$$\zeta = \eta - \sum_{k=1}^{n}(\eta, u_k)\,u_k.$$

Then $\zeta$ satisfies conditions (29). Indeed,

$$(\zeta, u_m) = (\eta, u_m) - \sum_{k=1}^{n}(\eta, u_k)(u_k, u_m), \qquad m = 1, 2, \dots, n. \tag{31}$$

By assumption, the functions $u_k$ are orthonormal. Therefore

$$(u_k, u_m) = \begin{cases} 0, & k \neq m,\\ 1, & k = m,\end{cases}$$

and thus

$$(\zeta, u_m) = (\eta, u_m) - (\eta, u_m) = 0.$$

Together with $\zeta$, the product $t\zeta$ also satisfies conditions (29), where $t$ is an arbitrary real number. Hence the sum $u_{n+1} + t\zeta$ satisfies (29) as well. The function of the variable $t$

$$\frac{(A(u_{n+1} + t\zeta), u_{n+1} + t\zeta)}{(u_{n+1} + t\zeta, u_{n+1} + t\zeta)}$$

therefore attains its minimum at $t = 0$. Repeating the considerations used in the proof of Theorem 5, we find that

$$(Au_{n+1} - \lambda_{n+1}u_{n+1}, \zeta) = 0. \tag{32}$$

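Consider now the expression $(Au_{n+1} - \lambda_{n+1}u_{n+1}, \eta)$. Since $\eta = \zeta + \sum_{k=1}^{n}(u_k, \eta)\,u_k$, relation (32) gives

$$\begin{aligned}
(Au_{n+1} - \lambda_{n+1}u_{n+1}, \eta) &= (Au_{n+1} - \lambda_{n+1}u_{n+1}, \zeta) + \sum_{k=1}^{n}(u_k, \eta)(Au_{n+1} - \lambda_{n+1}u_{n+1}, u_k)\\
&= \sum_{k=1}^{n}(u_k, \eta)(Au_{n+1} - \lambda_{n+1}u_{n+1}, u_k).
\end{aligned}$$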

Further,

$$(Au_{n+1} - \lambda_{n+1}u_{n+1}, u_k) = (Au_{n+1}, u_k) - \lambda_{n+1}(u_{n+1}, u_k).$$

It is easy to show that this expression equals zero. The second term on the right-hand side vanishes by conditions (29). Since $A$ is symmetric, the first term equals

$$(Au_{n+1}, u_k) = (u_{n+1}, Au_k).$$

However, $u_k$, as an eigenfunction of $A$, satisfies $Au_k = \lambda_k u_k$. Thus

$$(Au_{n+1}, u_k) = \lambda_k(u_{n+1}, u_k) = 0.$$

It follows that

$$(Au_{n+1} - \lambda_{n+1}u_{n+1}, \eta) = 0,$$

where $\eta$ is an arbitrary function of the dense set $D_A$. Hence $Au_{n+1} - \lambda_{n+1}u_{n+1} = 0$, i.e. $\lambda_{n+1}$ is an eigenvalue and $u_{n+1}$ is a corresponding eigenfunction.

It remains to prove that $\lambda_{n+1}$ is the lowest eigenvalue following $\lambda_n$. Let $\lambda'$ be an eigenvalue of $A$ larger than $\lambda_n$, and let $u'$ be a corresponding eigenfunction. By Theorem 2, $u'$ satisfies conditions (29). Further, by relation (15),

$$\lambda' = \frac{(Au', u')}{(u', u')} \ge \lambda_{n+1},$$

since $\lambda_{n+1}$ is the minimum of the functional (22) subject to conditions (29).

As in the case of the lowest eigenvalue, finding $\lambda_{n+1}$ can be reduced to a variational problem: find the minimum of the functional (27) subject to the subsidiary conditions (28) and (29).
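The constrained minimization of Theorem 6 can be sketched numerically. For illustration, assume the second-difference matrix for $-d^2/dx^2$ on $(0, 1)$ with $N = 10$ subintervals. Its first eigenvector $u_1$ (sampled $\sin\pi x$) is known in closed form.

Instead of minimizing (22) directly, the sketch uses a swapped-in technique:

1. Every eigenvalue of $A$ lies in $[0, c]$, so it iterates with the shifted matrix $cI - A$ (power iteration).
2. At each step it projects out $u_1$, so that condition (29) holds.
3. In the subspace orthogonal to $u_1$, the dominant eigenvector of $cI - A$ is the eigenvector of $A$ with the smallest eigenvalue there, i.e. the minimizer in Theorem 6.

The Rayleigh quotient therefore converges to the second discrete eigenvalue $(4/h^2)\sin^2(\pi h)$. All names are illustrative.

```python
import math

N = 10          # subintervals of (0, 1)
h = 1.0 / N
n = N - 1       # number of interior unknowns

def apply_A(v):
    """Second-difference approximation of -d^2/dx^2 with u(0) = u(1) = 0."""
    return [(-(v[j - 1] if j > 0 else 0.0) + 2.0 * v[j]
             - (v[j + 1] if j < n - 1 else 0.0)) / h ** 2 for j in range(n)]

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def normalize(v):
    s = math.sqrt(dot(v, v))
    return [x / s for x in v]

def project_out(v, basis):
    """Enforce the subsidiary conditions (v, u_k) = 0 for orthonormal u_k in basis."""
    for u in basis:
        coeff = dot(v, u)
        v = [a - coeff * b for a, b in zip(v, u)]
    return v

# First eigenvector u_1 of the matrix (sampled sin(pi x)), normalized.
u1 = normalize([math.sin(math.pi * j * h) for j in range(1, N)])

c = 4.0 / h ** 2   # Gershgorin bound: all eigenvalues of the matrix lie in [0, c]
v = normalize(project_out([float(j) for j in range(1, N)], [u1]))
for _ in range(500):
    # One power step with c*I - A, followed by re-imposing (v, u_1) = 0.
    Av = apply_A(v)
    v = normalize(project_out([c * a - b for a, b in zip(v, Av)], [u1]))

lam2 = dot(apply_A(v), v)                          # Rayleigh quotient, since (v, v) = 1
exact = 4.0 / h ** 2 * math.sin(math.pi * h) ** 2  # second discrete eigenvalue
print(f"lambda_2 approx {lam2:.10f}, exact discrete value {exact:.10f}")
```

For $N = 10$, the discrete value is about $38.2$, which approximates the second eigenvalue $4\pi^2 \approx 39.48$ of the continuous problem.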

The previous theorems are of a relative character: they describe how to construct eigenvalues if their existence has been proved in some other way. We now state a theorem giving conditions for the existence of eigenvalues of a positive definite operator.

Theorem 7. Let $A$ be a positive definite operator acting in the Hilbert space $H$, and let every set of functions bounded in the energy norm be compact in $H$. Then:

a) the operator $A$ has an infinite number of eigenvalues
$$0 < \lambda_1 \le \lambda_2 \le \dots \le \lambda_n \le \dots, \qquad \lambda_n \to \infty \ \text{ as } n \to \infty;$$

b) the corresponding eigenfunctions form a complete system both in $H$ and in $H_A$.

Ritz method in the eigenvalue problem

Let $A$ be an operator bounded below, i.e.

$$(Au, u) \ge k\|u\|^2.$$

The eigenvalue problem for $A$ can easily be reduced to the eigenvalue problem for a positive definite operator. Indeed, let $c$ be an arbitrary number larger than $|k|$. The equation

$$Au - \lambda u = 0,$$

which defines the eigenvalues of $A$, can be rewritten in the form

$$\tilde{A}u - \tilde{\lambda}u = 0,$$

where $\tilde{A}u = Au + cu$ and $\tilde{\lambda} = \lambda + c$. The operator $\tilde{A}$ is positive definite because

$$(\tilde{A}u, u) = (Au, u) + c(u, u) \ge (c + k)\|u\|^2$$

and $c + k > 0$. If $\tilde{\lambda}$ is an eigenvalue of $\tilde{A}$, then $\lambda = \tilde{\lambda} - c$ is an eigenvalue of $A$, and vice versa. Hence, in what follows, we always assume that $A$ is a positive definite operator. Let

$$d = \inf_{u \in D_A}\frac{(Au, u)}{(u, u)}. \tag{33}$$

By Theorem 5, $d$ is the lowest eigenvalue of $A$ if there exists a function $u_0$ such that

$$\frac{(Au_0, u_0)}{(u_0, u_0)} = d.$$

If we assume that such a function exists, then finding the lowest eigenvalue of $A$ reduces to finding the minimum of the functional (33). Equivalently, we seek the minimum of the functional

$$(Au, u) \tag{34}$$

subject to the constraint

$$(u, u) = 1. \tag{35}$$

We show that this problem can be solved by the Ritz method. Consider a sequence of base functions $\varphi_n$, $n = 1, 2, \dots$, with the following three properties:

1. $\varphi_n \in D_A$, $n = 1, 2, \dots$;

2. for arbitrary $n$, the functions $\varphi_1, \varphi_2, \dots, \varphi_n$ are linearly independent;

3. the system $\{\varphi_n\}$ is complete in energy.

Let

$$u_n = \sum_{k=1}^{n} a_k\varphi_k,$$

where $a_k$ are arbitrary coefficients. We choose these coefficients so that $u_n$ satisfies relation (35) and the product $(Au_n, u_n)$ attains a minimum. Hence we need to find the minimum of the function of $n$ variables

$$(Au_n, u_n) = \sum_{k,m=1}^{n}(A\varphi_k, \varphi_m)\,a_k a_m \tag{36}$$

subject to the subsidiary condition

$$(u_n, u_n) = \sum_{k,m=1}^{n}(\varphi_k, \varphi_m)\,a_k a_m = 1. \tag{37}$$

The problem will be solved by the method of Lagrange multipliers. Set up the function $\Phi = (Au_n, u_n) - \lambda(u_n, u_n)$, where $\lambda$ is an as yet unknown coefficient, and set the partial derivatives of $\Phi$ with respect to $a_m$ equal to zero. Using the symmetry of $A$, we obtain the system of equations

$$\sum_{k=1}^{n} a_k\left[(A\varphi_k, \varphi_m) - \lambda(\varphi_k, \varphi_m)\right] = 0, \qquad m = 1, 2, \dots, n. \tag{38}$$

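The system (38) is linear and homogeneous with respect to the $a_k$, which cannot all be zero, since otherwise equation (37) would not hold. It follows that the determinant of system (38) must vanish. This gives the equation determining $\lambda$:

$$\begin{vmatrix}
(A\varphi_1, \varphi_1) - \lambda(\varphi_1, \varphi_1) & (A\varphi_2, \varphi_1) - \lambda(\varphi_2, \varphi_1) & \cdots & (A\varphi_n, \varphi_1) - \lambda(\varphi_n, \varphi_1)\\
(A\varphi_1, \varphi_2) - \lambda(\varphi_1, \varphi_2) & (A\varphi_2, \varphi_2) - \lambda(\varphi_2, \varphi_2) & \cdots & (A\varphi_n, \varphi_2) - \lambda(\varphi_n, \varphi_2)\\
\vdots & \vdots & \ddots & \vdots\\
(A\varphi_1, \varphi_n) - \lambda(\varphi_1, \varphi_n) & (A\varphi_2, \varphi_n) - \lambda(\varphi_2, \varphi_n) & \cdots & (A\varphi_n, \varphi_n) - \lambda(\varphi_n, \varphi_n)
\end{vmatrix} = 0. \tag{39}$$

When the sequence $\{\varphi_n\}$ is orthonormal, equation (39) simplifies to

$$\begin{vmatrix}
(A\varphi_1, \varphi_1) - \lambda & (A\varphi_2, \varphi_1) & \cdots & (A\varphi_n, \varphi_1)\\
(A\varphi_1, \varphi_2) & (A\varphi_2, \varphi_2) - \lambda & \cdots & (A\varphi_n, \varphi_2)\\
\vdots & \vdots & \ddots & \vdots\\
(A\varphi_1, \varphi_n) & (A\varphi_2, \varphi_n) & \cdots & (A\varphi_n, \varphi_n) - \lambda
\end{vmatrix} = 0. \tag{40}$$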

As stated above, the functions $\varphi_1, \varphi_2, \dots, \varphi_n$ are linearly independent for arbitrary $n$. Hence equation (39) is exactly of degree $n$ in $\lambda$: the coefficient of $(-1)^n\lambda^n$ is the Gram determinant of the functions $\varphi_1, \varphi_2, \dots, \varphi_n$, which is nonzero. It follows that equation (39) has exactly $n$ roots, counting multiplicities.

Let $\lambda_0$ be one of them, and let $a_k^{(0)}$, $k = 1, 2, \dots, n$, be a corresponding nontrivial solution of system (38). Then $\alpha a_k^{(0)}$, where $\alpha$ is an arbitrary coefficient, also satisfies system (38). Substituting $\alpha a_k^{(0)}$ into (37) determines $\alpha$. If we now denote $\alpha a_k^{(0)}$ again by $a_k^{(0)}$, we may regard $a_k^{(0)}$ as a solution of system (38) that also satisfies equation (37). Substituting $\lambda = \lambda_0$ and $a_k = a_k^{(0)}$ into (38), we get

$$\sum_{k=1}^{n} a_k^{(0)}(A\varphi_k, \varphi_m) = \lambda_0\sum_{k=1}^{n} a_k^{(0)}(\varphi_k, \varphi_m), \qquad m = 1, 2, \dots, n. \tag{41}$$

Multiply (41) by $a_m^{(0)}$ and sum over $m$. We get

$$\sum_{k,m=1}^{n}(A\varphi_k, \varphi_m)\,a_k^{(0)}a_m^{(0)} = \lambda_0\sum_{k,m=1}^{n}(\varphi_k, \varphi_m)\,a_k^{(0)}a_m^{(0)}. \tag{42}$$

By equation (37), the sum on the right-hand side of (42) equals 1, so the right-hand side equals $\lambda_0$. The left-hand side of (42) equals $(Au_n^{(0)}, u_n^{(0)})$, where

$$u_n^{(0)} = \sum_{k=1}^{n} a_k^{(0)}\varphi_k.$$

Hence

$$\lambda_0 = (Au_n^{(0)}, u_n^{(0)}). \tag{43}$$

Formula (43) shows that equation (39) has only real roots if the operator $A$ is symmetric. Further, one of the functions $u_n^{(0)}$ minimizes expression (36). Hence relation (43) shows that this minimum equals the lowest root of equation (39).

We now turn to the determination of the subsequent eigenvalues. To obtain an approximate value of the second eigenvalue, we seek the minimum of the product (36) subject to the subsidiary conditions $(u_n, u_n) = 1$ and

$$(u_n^{(0)}, u_n) = \sum_{k,m=1}^{n}(\varphi_k, \varphi_m)\,a_k^{(0)}a_m = 0, \tag{44}$$

where $u_n^{(0)} = \sum_{k=1}^{n} a_k^{(0)}\varphi_k$ stands for the approximation of the first normalized eigenfunction of the operator $A$. Following the method of Lagrange multipliers, we set up the expression

$$(Au_n, u_n) - \lambda(u_n, u_n) - 2\mu(u_n, u_n^{(0)})$$

and set its partial derivatives with respect to $a_m$ equal to zero. We obtain the system

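$$\sum_{k=1}^{n}\Big\{a_k\big[(A\varphi_k, \varphi_m) - \lambda(\varphi_k, \varphi_m)\big] - \mu(\varphi_k, \varphi_m)\,a_k^{(0)}\Big\} = 0, \qquad m = 1, 2, \dots, n. \tag{45}$$

Multiply both sides of (45) by $a_m^{(0)}$ and sum over $m$:

$$\sum_{k,m=1}^{n} a_k a_m^{(0)}\big[(A\varphi_k, \varphi_m) - \lambda(\varphi_k, \varphi_m)\big] - \mu\sum_{k,m=1}^{n}(\varphi_k, \varphi_m)\,a_k^{(0)}a_m^{(0)} = 0. \tag{46}$$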

By (37), the second sum equals $(u_n^{(0)}, u_n^{(0)}) = 1$. In the first sum, interchange the subscripts $m$ and $k$, and sum first over $k$ and then over $m$. The first sum then takes the form

$$\sum_{m=1}^{n} a_m\sum_{k=1}^{n} a_k^{(0)}\big[(A\varphi_m, \varphi_k) - \lambda(\varphi_m, \varphi_k)\big]. \tag{47}$$

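The inner sum equals

$$\sum_{k=1}^{n} a_k^{(0)}\big[(\varphi_k, A\varphi_m) - \lambda(\varphi_k, \varphi_m)\big],$$

or, since $A$ is a symmetric operator,

$$\sum_{k=1}^{n} a_k^{(0)}\big[(A\varphi_k, \varphi_m) - \lambda(\varphi_k, \varphi_m)\big].$$

The numbers $a_k^{(0)}$ satisfy equations (38) for $\lambda = \lambda_n^{(0)}$, the lowest root of (39). Using this, the last expression becomes

$$(\lambda_n^{(0)} - \lambda)\sum_{k=1}^{n} a_k^{(0)}(\varphi_k, \varphi_m) = (\lambda_n^{(0)} - \lambda)\Big(\sum_{k=1}^{n} a_k^{(0)}\varphi_k, \varphi_m\Big) = (\lambda_n^{(0)} - \lambda)(u_n^{(0)}, \varphi_m) = (\lambda_n^{(0)} - \lambda)(\varphi_m, u_n^{(0)}).$$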

Expression (47) then takes the form

$$(\lambda_n^{(0)} - \lambda)\sum_{m=1}^{n} a_m(\varphi_m, u_n^{(0)}) = (\lambda_n^{(0)} - \lambda)(u_n, u_n^{(0)}),$$

which equals zero by (44). It now follows from (46) that $\mu = 0$, so system (45) is equivalent to system (38). Hence, as before, the minimum sought equals $\lambda$, a root of equation (39). In this case we must obviously choose the second root of this equation, with the roots arranged in increasing order.

Analogously, we can construct subsequent approximate eigenvalues. All are roots of the

equation (39).
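The Ritz procedure of this section can be sketched for an assumed model problem: $A = -d^2/dx^2$ on $(0, 1)$ with $u(0) = u(1) = 0$. Its exact eigenvalues are $(k\pi)^2$, i.e. $\pi^2 \approx 9.8696$, $4\pi^2 \approx 39.478$, and so on.

The sketch works as follows:

- The base functions are $\varphi_k = x^k(1 - x)$, $k = 1, \dots, n$. This is a hypothetical choice; the functions vanish at both ends and are linearly independent.
- The entries $(A\varphi_k, \varphi_m) = \int_0^1 \varphi_k'\varphi_m'\,dx$ and $(\varphi_k, \varphi_m)$ are computed exactly with rational arithmetic.
- The roots of the determinant equation (39) are located by scanning $\lambda$ on a grid and bisecting each sign change.

The scan would miss two roots lying within one grid step. This is a sketch, not a robust eigensolver.

```python
import math
from fractions import Fraction

def poly_mul(p, q):
    """Product of polynomials stored as coefficient lists (index = power of x)."""
    r = [Fraction(0)] * (len(p) + len(q) - 1)
    for i, a in enumerate(p):
        for j, b in enumerate(q):
            r[i + j] += a * b
    return r

def derivative(p):
    return [i * c for i, c in enumerate(p)][1:]

def integrate01(p):
    """Exact integral of a polynomial over [0, 1]."""
    return sum((c / (i + 1) for i, c in enumerate(p)), Fraction(0))

def basis(k):
    """Hypothetical base function phi_k(x) = x^k (1 - x), zero at x = 0 and x = 1."""
    p = [Fraction(0)] * (k + 2)
    p[k], p[k + 1] = Fraction(1), Fraction(-1)
    return p

def ritz_matrices(n):
    """K[m][k] = (A phi_k, phi_m) and M[m][k] = (phi_k, phi_m) for A = -d^2/dx^2."""
    phis = [basis(k) for k in range(1, n + 1)]
    dphis = [derivative(p) for p in phis]
    # Integration by parts with phi_k(0) = phi_k(1) = 0: (A phi_k, phi_m) = int phi_k' phi_m'.
    K = [[integrate01(poly_mul(dk, dm)) for dk in dphis] for dm in dphis]
    M = [[integrate01(poly_mul(pk, pm)) for pk in phis] for pm in phis]
    return K, M

def det(mat):
    """Exact determinant by Gaussian elimination over Fractions."""
    a = [row[:] for row in mat]
    size = len(a)
    result = Fraction(1)
    for col in range(size):
        pivot = next((r for r in range(col, size) if a[r][col] != 0), None)
        if pivot is None:
            return Fraction(0)
        if pivot != col:
            a[col], a[pivot] = a[pivot], a[col]
            result = -result
        result *= a[col][col]
        for r in range(col + 1, size):
            factor = a[r][col] / a[col][col]
            for c in range(col, size):
                a[r][c] -= factor * a[col][c]
    return result

def ritz_eigenvalues(n, lam_max=200, step=Fraction(1, 4), bisections=50):
    """Roots of the determinant equation (39) in [0, lam_max], in increasing order."""
    K, M = ritz_matrices(n)

    def f(lam):
        return det([[K[i][j] - lam * M[i][j] for j in range(n)] for i in range(n)])

    roots = []
    a, fa = Fraction(0), f(Fraction(0))
    while a < lam_max:
        b = a + step
        fb = f(b)
        if fb == 0:                      # grid point hits a root exactly
            roots.append(b)
        elif fa * fb < 0:                # sign change: bisect the bracket
            lo, hi, flo = a, b, fa
            for _ in range(bisections):
                mid = (lo + hi) / 2
                fm = f(mid)
                if fm == 0:
                    lo = hi = mid
                    break
                if flo * fm < 0:
                    hi = mid
                else:
                    lo, flo = mid, fm
            roots.append((lo + hi) / 2)
        a, fa = b, fb
    return [float(r) for r in roots]

for n in (1, 2, 3):
    print(f"n = {n}: Ritz roots {[round(r, 5) for r in ritz_eigenvalues(n)]}; "
          f"exact pi^2 = {math.pi ** 2:.5f}, 4 pi^2 = {4 * math.pi ** 2:.5f}")
```

The results behave as the theory predicts:

- With $n = 1$ the only root is $10$, the value of (22) for $x(1 - x)$.
- With $n = 2$ the roots are exactly $10$ and $42$, approximating $\pi^2$ and $4\pi^2$ from above.
- With $n = 3$ the lowest root drops below $10$ but stays above $\pi^2$, as the minimum property (43) requires.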

Remark 3. Any of the weighted residual procedures can also be employed to compute eigenvalues.

Problems

1. Compute the buckling loads of the fixed-hinged column. Use the Ritz method and compare the approximate result with the exact value. The governing differential equation for a column axially loaded by a compressive force $N_0$ is $EI\,w'''' + N_0\,w'' = 0$.