

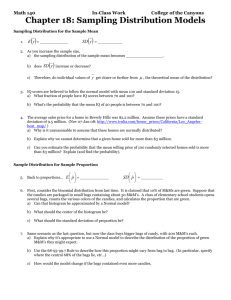

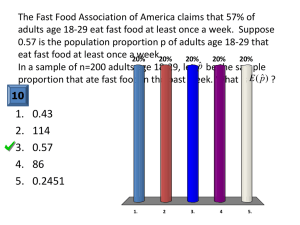

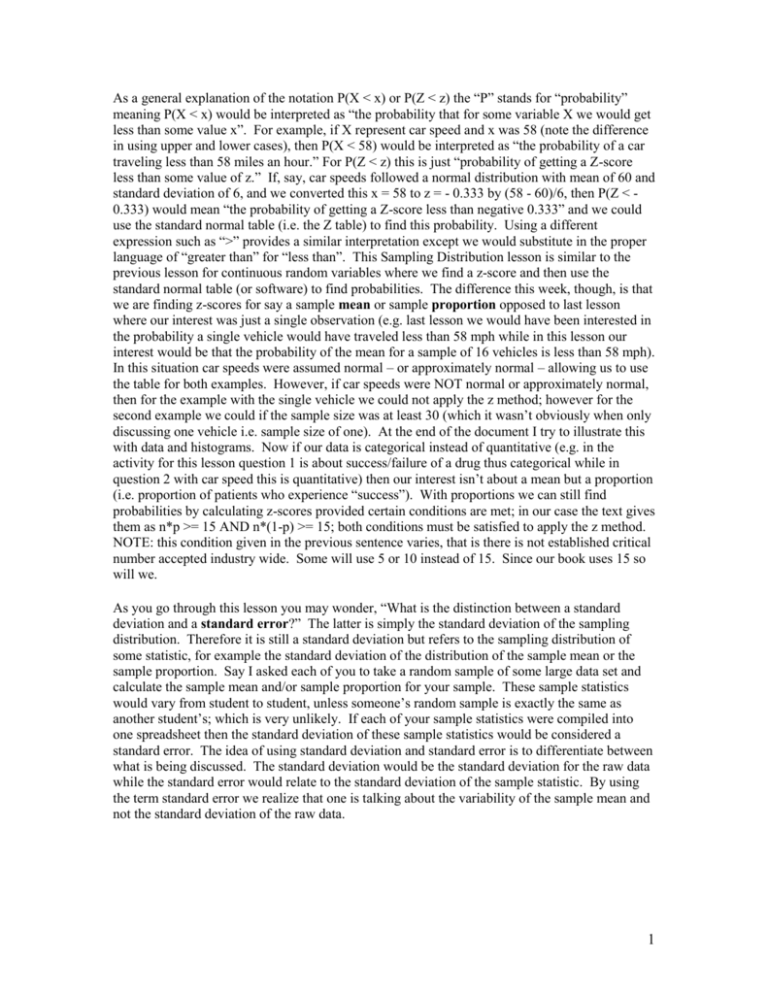

As a general explanation of the notation P(X < x) or P(Z < z), the “P” stands for “probability,” so P(X < x) is read as “the probability that some variable X takes a value less than some value x.” For example, if X represents car speed and x is 58 (note the difference between upper and lower case), then P(X < 58) would be interpreted as “the probability of a car traveling less than 58 miles an hour.” Likewise, P(Z < z) means “the probability of getting a Z-score less than some value z.” Suppose car speeds followed a normal distribution with a mean of 60 and a standard deviation of 6. We would convert x = 58 to z = (58 - 60)/6 = -0.333. Then P(Z < -0.333) would mean “the probability of getting a Z-score less than negative 0.333,” and we could use the standard normal table (i.e., the Z table) to find this probability. A different symbol such as “>” is interpreted the same way, except that we substitute “greater than” for “less than.”

This Sampling Distribution lesson is similar to the previous lesson on continuous random variables, where we found a z-score and then used the standard normal table (or software) to find probabilities. The difference this week is that we find z-scores for a statistic such as a sample mean or sample proportion. In the last lesson, by contrast, our interest was a single observation. For example, last lesson we would have been interested in the probability that a single vehicle traveled less than 58 mph. In this lesson we would be interested in the probability that the mean speed for a sample of 16 vehicles is less than 58 mph. Here car speeds were assumed to be normal (or approximately normal), which lets us use the table for both examples.
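The two car-speed probabilities above can be checked in software instead of the Z table. The following is a minimal Python sketch, assuming car speeds are exactly normal with mean 60 and standard deviation 6 as in the example. `normal_cdf` is a helper written here (an assumption, standing in for the Z table) using the standard identity P(Z < z) = 0.5 * (1 + erf(z / sqrt(2))). For the sample mean, the sketch uses the standard error sigma/sqrt(n).

```python
import math

def normal_cdf(z):
    """P(Z < z) for the standard normal distribution, computed via the error function."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))

mu, sigma, x = 60.0, 6.0, 58.0   # car speeds in mph, as in the example

# Single vehicle (last lesson): z = (x - mu) / sigma
z_single = (x - mu) / sigma
p_single = normal_cdf(z_single)          # P(X < 58)

# Mean of a sample of n = 16 vehicles (this lesson): standard error = sigma / sqrt(n)
n = 16
se = sigma / math.sqrt(n)
z_mean = (x - mu) / se
p_mean = normal_cdf(z_mean)              # P(X-bar < 58)

print(f"single vehicle: z = {z_single:.3f}, P(X < 58) = {p_single:.4f}")
print(f"mean of {n}:     z = {z_mean:.3f}, P(X-bar < 58) = {p_mean:.4f}")
print(f"greater than:   P(X > 58) = {1 - p_single:.4f}")  # complement of "less than"
```

The single-vehicle probability comes out to roughly 0.37 and the sample-mean probability to roughly 0.09. A mean of 16 speeds varies much less than one speed, so landing 2 mph below 60 is less likely.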
However, if car speeds were NOT normal or approximately normal, we could not apply the z method to the single-vehicle example. For the sample-mean example we could, but only if the sample size were at least 30 rather than 16. A single vehicle is a sample of size one, which is why the first example fails this requirement. At the end of the document I try to illustrate this with data and histograms.

Now, if our data are categorical instead of quantitative, our interest is not in a mean but in a proportion. For example, in this lesson’s activity, question 1 is about the success or failure of a drug and is therefore categorical, while question 2, about car speed, is quantitative. For question 1 we care about the proportion of patients who experience “success.” With proportions we can still find probabilities by calculating z-scores, provided certain conditions are met. Our text gives them as n*p >= 15 AND n*(1-p) >= 15, and both conditions must be satisfied to apply the z method. NOTE: this cutoff varies, and there is no established critical number accepted industry-wide. Some use 5 or 10 instead of 15. Since our book uses 15, so will we.

As you go through this lesson you may wonder, “What is the distinction between a standard deviation and a standard error?” A standard error is simply the standard deviation of a sampling distribution. It is still a standard deviation, but it refers to the sampling distribution of some statistic, for example the distribution of the sample mean or of the sample proportion. Say I asked each of you to take a random sample from some large data set and calculate the sample mean and/or sample proportion for your sample. These sample statistics would vary from student to student, unless someone’s random sample happened to be exactly the same as another student’s, which is very unlikely.
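The z method for a proportion first checks the two conditions and then standardizes with the standard error of a sample proportion, sqrt(p*(1-p)/n). The following is a minimal Python sketch under these assumptions. The helper names `z_method_ok` and `prob_phat_below` are hypothetical. The drug-trial numbers (true success rate 0.60, 100 patients) are invented for illustration and are not from the activity. The cutoff defaults to our book’s 15, and the parameter can be changed to 5 or 10.

```python
import math

def normal_cdf(z):
    """P(Z < z) for the standard normal distribution."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))

def z_method_ok(n, p, cutoff=15):
    """Both n*p and n*(1-p) must reach the cutoff (15 in our text; some use 5 or 10)."""
    return n * p >= cutoff and n * (1 - p) >= cutoff

def prob_phat_below(threshold, n, p):
    """P(p-hat < threshold) using the normal approximation for a sample proportion."""
    if not z_method_ok(n, p):
        raise ValueError("n*p or n*(1-p) is below 15; the z method does not apply")
    se = math.sqrt(p * (1 - p) / n)   # standard error of the sample proportion
    z = (threshold - p) / se
    return normal_cdf(z)

# Hypothetical drug trial: true success rate 0.60, 100 patients
print(z_method_ok(100, 0.60))   # True: 60 and 40 are both at least 15
print(z_method_ok(50, 0.20))    # False: 50 * 0.20 = 10 is below 15
print(round(prob_phat_below(0.50, 100, 0.60), 4))
```

The helper raises an error instead of returning a number when a condition fails. This mirrors the rule that both conditions must hold before the table applies.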
If all of your sample statistics were compiled into one spreadsheet, the standard deviation of those sample statistics would be a standard error. The two terms distinguish what is being discussed. The standard deviation describes the variability of the raw data, while the standard error describes the variability of a sample statistic. So when someone says “standard error,” we know they are talking about the variability of a statistic such as the sample mean, not the standard deviation of the raw data.

An attempt at illustrating the central limit theorem as it applies to the sample mean

Below is a series of histograms, each with a normal bell curve overlaid, to illustrate this sample mean concept. The first histogram shows 2010 baseball player salaries in millions of dollars; for example, a player making $8,500,000 would be listed as 8.5. As you can see, these salaries are heavily skewed right (obscene amounts paid, no doubt!). From this data I randomly selected samples of three sizes (15, 30, and 50), calculated the mean of each sample, and repeated this sampling process 1000 times. For example, the software randomly selected 15 salaries, calculated their mean, and put them back. It then randomly selected another 15 salaries, calculated their mean, and put them back, and so on, 1000 times. The same was done for sample sizes of 30 and 50. At the end of this process there were 1000 sample means for each of the sample sizes 15, 30, and 50, and a histogram was drawn for each. Notice how the histograms of the sample means differ vastly from the histogram of the individual salaries.
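The resampling procedure just described can be sketched in Python. The 2010 salary data are not included here, so this sketch substitutes a hypothetical right-skewed population of 10,000 values drawn from a lognormal distribution. That choice is an assumption, made only because the values are skewed right like the salaries. For each sample size the sketch draws 1000 samples (no repeats within a sample, and the population is left intact between samples), keeps each sample mean, and reports two things. The first is the standard deviation of those 1000 means, which is the standard error. The second is their skewness, which should shrink toward 0 (symmetric) as n grows. The helper names `skewness` and `sample_means` are hypothetical.

```python
import math
import random
import statistics

def skewness(values):
    """Average cubed z-score: about 0 for symmetric data, positive for right skew."""
    m = statistics.fmean(values)
    s = statistics.pstdev(values)
    return statistics.fmean([((v - m) / s) ** 3 for v in values])

def sample_means(population, n, reps, rng):
    """Draw `reps` random samples of size n (no repeats within a sample,
    population left intact between samples) and return each sample's mean."""
    return [statistics.fmean(rng.sample(population, n)) for _ in range(reps)]

rng = random.Random(2010)
# Hypothetical right-skewed population; NOT the real 2010 salary data
population = [rng.lognormvariate(0.5, 1.0) for _ in range(10_000)]
sigma = statistics.pstdev(population)

results = {}
for n in (15, 30, 50):
    means = sample_means(population, n, 1000, rng)
    results[n] = means
    print(f"n={n:2d}  SD of 1000 means (standard error) = {statistics.stdev(means):.3f}  "
          f"sigma/sqrt(n) = {sigma / math.sqrt(n):.3f}  skewness = {skewness(means):.2f}")
print(f"raw population skewness = {skewness(population):.2f}")
```

The standard deviation of each column of means should land close to sigma/sqrt(n). This ties the “spreadsheet of sample statistics” idea to the formula used with the table.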
As a rule of thumb, the sample size at which the histogram of sample means becomes approximately normal is 30. This is the driving point behind the central limit theorem: as the sample size increases, the distribution of the sample mean approaches a normal distribution regardless of the shape of the raw data. Now consider how this data applies to the last two lessons. In the last lesson on Probability Distributions we could not have used this data, since salaries are obviously not even approximately normal. Suppose you were asked to find, e.g., the probability that a randomly selected player earned less than 2 million dollars. You could not answer using a z-score and the table, because the data are not normal or approximately normal. In this lesson on Sampling Distributions, however, suppose you were asked to find the probability that the mean salary of 35 randomly selected players was less than 2 million dollars. Then you could apply the z-score methods, because a sample size of 35 is at least 30 and so satisfies the central limit theorem’s rule of thumb.

[Figure: Histogram of Salaries, Means N = 15, Means N = 30, Means N = 50, each with a Normal curve overlaid; vertical axis: Frequency.]

An attempt at explaining the central limit theorem as it applies to the sample proportion

Let us say that Election Day has come and gone. Leading up to that day, various polling groups tried to estimate how people were going to vote. They do this using a random sample of registered voters, because asking everyone is too time-consuming and costly, and not all registered voters actually vote. Each sample produces a sample proportion. Remember that this is categorical data: the polling company asks those sampled whom they are going to vote for and tallies the percentage of responses for each candidate. These random samples vary; that is, they
do not include the same people in each sample, and thus they can lead to different sample proportions of people who say they will vote for Candidate X. You may recall that during the last presidential election the various polling agencies had different estimates for the proportion of people who were going to vote for then-Senator Obama. The standard error is a “theoretical” estimate of the standard deviation of these various sample proportions. Think of, say, 1000 polling agencies each conducting a poll with the same sample size (say 3000 people), each randomly selecting its 3000 people from the same group of registered voters. Unless one poll had exactly the same sample of people as another, you would expect several different sample proportions. Not every sample proportion would necessarily differ, but you certainly would not expect all 1000 polls to produce exactly the same one. If you put all 1000 sample proportions into a column in some software and calculated the standard deviation of this column, that would represent the standard error of the sample proportion. In reality, however, nobody has time to conduct 1000 polls, so each organization conducts just one. Gallup, for example, does not conduct 1000 polls, just the one, and the same goes for the New York Times, etc. Even so, the theory for calculating the standard error of the proportion, sqrt(p*(1-p)/n), applies as long as the necessary assumptions are met (i.e., np and n(1-p) are both at least 15). Since we can only estimate p by p-hat, the sample proportion, we substitute p-hat for p in this formula. In that case n*p-hat is the number in the sample who said Yes to voting for Candidate X, and n*(1-p-hat) is the number who said they would not. The “p” represents the true proportion, i.e., what actually happens on Election Day (the final proportion who voted for Candidate X). P-hat is the sample statistic, an estimate of the proportion of the population that will vote for Candidate X on Election Day.
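The “1000 polls of 3000 people” thought experiment can be simulated directly. The following is a minimal Python sketch, assuming a hypothetical true proportion p = 0.52 for Candidate X. It also assumes each respondent independently says Yes with probability p, which models a very large electorate; neither assumption comes from real election data. The simulation compares the standard deviation of the 1000 simulated p-hats with the formula sqrt(p*(1-p)/n). It then does what a real pollster must do with a single poll: check the conditions using n*p-hat and n*(1-p-hat), and plug p-hat into the standard error formula. `run_poll` is a hypothetical helper.

```python
import math
import random
import statistics

rng = random.Random(2008)
p_true = 0.52   # hypothetical true proportion voting for Candidate X
n = 3000        # respondents per poll
polls = 1000    # number of hypothetical polling agencies

def run_poll(rng, n, p):
    """One poll: each respondent independently says Yes with probability p.
    Returns the sample proportion p-hat."""
    yes = sum(1 for _ in range(n) if rng.random() < p)
    return yes / n

phats = [run_poll(rng, n, p_true) for _ in range(polls)]

# "Put all 1000 sample proportions into a column and take the standard deviation"
sd_of_phats = statistics.stdev(phats)
theory_se = math.sqrt(p_true * (1 - p_true) / n)

# A real pollster has only one poll, so p-hat replaces the unknown p
phat = phats[0]
yes_count = n * phat         # number in the sample who said Yes
no_count = n * (1 - phat)    # number who said they would not
conditions_met = yes_count >= 15 and no_count >= 15
se_from_one_poll = math.sqrt(phat * (1 - phat) / n)

print(f"SD of {polls} p-hats: {sd_of_phats:.5f}   formula with true p: {theory_se:.5f}")
print(f"one poll: p-hat = {phat:.4f}, conditions met = {conditions_met}, "
      f"estimated SE = {se_from_one_poll:.5f}")
```

With 3000 respondents and p near one half, the standard error computed from one poll’s p-hat is nearly identical to the one computed from the true p. Substituting p-hat therefore costs very little here.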