Lecture 18 – Chapter 7

Chapter 7

Extra Sum of Squares

Example: Body Fat Page 257

Variables: Body Fat(Y), Triceps(X1), Thigh(X2) and Midarm(X3)

Fit models: Y on X1; Y on X2; Y on X1 and X2; Y on X1, X2, and X3 (i.e. full model)

SSRx1 = reg SS w/ only x1 = 352.27

SSEx1 = 143.12

SSRx2 = 381.97

SSEx2 = 113.42

SSRx1,x2 = 385.44

SSEx1,x2 = 109.95

SSRx1,x2,x3 = 396.98

SSEx1,x2,x3 = 98.41

Note that SST for all models is 495.39. Why?

Evaluating Extra SS

SSRx2|x1 = SSEx1 – SSEx1,x2 = 143.12 – 109.95 = 33.17, or alternately we could use

SSRx2|x1 = SSRx1,x2 – SSRx1 = 385.44 – 352.27 = 33.17

SSRx2|x1 is referred to as the “extra sum of squares for Thigh Circumference given that Triceps Skinfold Thickness is in the model”. It measures the marginal effect of adding X2 to a model that already contains X1: the reduction in SSE (equivalently, the increase in SSR) obtained by adding X2. If this is “large” relative to the MSE of the larger model, then adding X2 to the model with X1 is statistically significant.

We can also consider the marginal effect of adding more than one variable to a model:

SSRx2,x3|x1 = SSEx1 – SSEx1,x2,x3 = 143.12 – 98.41 = 44.71 or

SSRx2,x3|x1 = SSRx1,x2,x3 – SSRx1 = 396.98 – 352.27 = 44.71

Note: SSRx1,x2 = SSRx1 + SSRx2|x1 = SSRx2 + SSRx1|x2
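The extra sums of squares above can be checked with a few lines of arithmetic. The sketch below is only an illustration: it hard-codes the SSE and SSR values quoted in these notes, and the dictionary keys and the helper name `extra_ss` are made up for this example.

```python
# Error and regression sums of squares quoted above for the Body Fat fits.
sse = {"x1": 143.12, "x2": 113.42, "x1,x2": 109.95, "x1,x2,x3": 98.41}
ssr = {"x1": 352.27, "x2": 381.97, "x1,x2": 385.44, "x1,x2,x3": 396.98}

def extra_ss(reduced, full):
    """Extra SS for the variables in `full` that are not in `reduced`.

    Returns the value computed two ways: as the drop in SSE and as the
    gain in SSR. Both should agree because SST is the same for every model.
    """
    from_sse = sse[reduced] - sse[full]
    from_ssr = ssr[full] - ssr[reduced]
    return round(from_sse, 2), round(from_ssr, 2)

ssr_x2_given_x1 = extra_ss("x1", "x1,x2")        # (33.17, 33.17)
ssr_x2x3_given_x1 = extra_ss("x1", "x1,x2,x3")   # (44.71, 44.71)
ssr_x1_given_x2 = extra_ss("x2", "x1,x2")        # (3.47, 3.47)

# SSR + SSE equals SST = 495.39 for every model.
sst_by_model = {m: round(sse[m] + ssr[m], 2) for m in sse}
print(ssr_x2_given_x1, ssr_x2x3_given_x1, ssr_x1_given_x2, sst_by_model)
```

Both routes give the same number because every model is fit to the same Y values, so SSR + SSE always adds up to the same SST.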

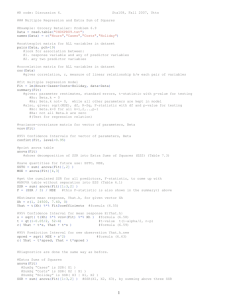

ANOVA Table – Table 7.4 Page 262

| Source | SS | DF | MS |
|---|---|---|---|
| Regression | 396.98 | 3 | 132.33 |
| X1 | 352.27 | 1 | 352.27 |
| X2\|X1 | 33.17 | 1 | 33.17 |
| X3\|X1,X2 | 11.55 | 1 | 11.55 |
| Error | 98.40 | 16 | 6.15 |
| Total | 495.39 | 19 | |

Test whether a single Bk = 0: Two Approaches

Two methods are:

1. t-test approach: t* = bk/s{bk} using DF = n – p, where p is the number of estimated coefficients in the model.

2. General linear approach (see CH 2), using

$$F^* = \frac{(SSE_R - SSE_F)/(p - q)}{SSE_F/(n - p)} = \frac{(SSR_F - SSR_R)/(p - q)}{SSE_F/(n - p)}$$

where p is the number of estimated coefficients in the full model and q is the number of estimated coefficients in the reduced model. Note that the denominator is simply the MSE of the full model. Alternatively, and probably easier, is to use the following equivalent expression:

$$F^* = \frac{(\text{Sequential SS for additional variable(s)})/m}{SSE_F/(n - p)}$$

where m is equal to the number of variables being tested.

Note that the book uses dfR – dfF in the numerator, which is identical to that given here since dfR – dfF = (n – q) – (n – p) = p – q.

The hypotheses statements are as follows:

Ho: Bk = 0 versus Ha: Bk ≠ 0

Example 1: Body Fat – Test if X3 can be dropped from model containing X1, X2

Ho: B3 = 0 versus Ha: B3 ≠ 0

$$t^* = \frac{b_3}{s\{b_3\}} = \frac{-2.186}{1.595} = -1.37$$

From Table B.2 using 16 degrees of freedom we find that the two-sided p-value satisfies 0.1 < p < 0.2, or from Minitab the p-value is 0.190. So we would not reject Ho for any usual alpha value, since the p-value is greater than alpha. We would conclude that Midarm Circumference (X3) can be dropped from the model containing X1 and X2.

$$F^* = \frac{(SSE_R - SSE_F)/(p - q)}{SSE_F/(n - p)} = \frac{(\text{Sequential SS for additional variable(s)})/m}{SSE_F/(n - p)} = \frac{SSR(x_3 \mid x_1, x_2)/m}{SSE_F/(n - p)} = \frac{11.55/1}{6.15} = 1.88$$

A partial F-test is done to test the significance of adding Midarm (X3) to a model containing Triceps (X1) and Thigh (X2) by F* = MS(x3|x1,x2)/MSE = 11.55/6.15 = 1.88. The p-value associated with F*, with numerator and denominator DF of 1 and 16, is found from Table B.4 and compared to alpha. Alternately, in Minitab you can use Calc > Probability Distributions > F, select Cumulative Probability and enter 1 for Numerator DF, 16 for Denominator DF, select Input Constant and enter 1.88. From the output we get 0.810739, which we then subtract from 1 to get p-value = 0.189261. The test is Ho: B3 = 0 versus Ha: B3 ≠ 0. From this approach we do not reject Ho and conclude that adding Midarm Circumference (X3) does not provide a statistically significant improvement to the model containing Triceps (X1) and Thigh (X2). This is called the General Linear Approach, which we discussed in Chapter 2.

Note that in this case, where the full model adds only one covariate to the reduced model, F* = (t*)² (here 1.88 ≈ (–1.37)²). So this General Linear Approach is equivalent to testing whether a single Bk = 0 by using t* = bk/s{bk} ~ t(n – p).
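As a rough check of the F* = (t*)² relationship and of the p-value reported by Minitab, the sketch below recomputes both using only the Python standard library. It assumes the values quoted in these notes (b3 = –2.186, s{b3} = 1.595, SSR(x3|x1,x2) = 11.55, MSE = 6.15); the helper names are made up for this example, and the tail area is obtained by numerically integrating the t density rather than by calling a statistics package.

```python
import math

def t_pdf(x, df):
    """Density of Student's t distribution with `df` degrees of freedom."""
    c = math.gamma((df + 1) / 2) / (math.sqrt(df * math.pi) * math.gamma(df / 2))
    return c * (1 + x * x / df) ** (-(df + 1) / 2)

def two_sided_t_pvalue(t, df, steps=2000):
    """P(|T| > |t|) using Simpson's rule on [0, |t|]; `steps` must be even."""
    a = abs(t)
    h = a / steps
    total = t_pdf(0.0, df) + t_pdf(a, df)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * t_pdf(i * h, df)
    central = 2 * total * h / 3          # P(-|t| < T < |t|)
    return 1 - central

t_star = -2.186 / 1.595                  # t* for b3
f_star = 11.55 / 6.15                    # MSR(x3 | x1, x2) / MSE, with 1 and 16 DF

# With 1 numerator DF, the upper tail of F(1, 16) at f equals the
# two-sided tail of t(16) at sqrt(f).
p_value = two_sided_t_pvalue(math.sqrt(1.88), 16)

print(round(t_star ** 2, 2), round(f_star, 2), round(p_value, 4))
```

The squared t statistic and the partial F statistic agree to two decimals, and the p-value should land close to the 0.189261 that Minitab reports for F* = 1.88.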

However, sometimes you may want to test whether several Bk’s = 0 at one time. So…

General Rule

1. Fit full model: Yi = Bo + B1Xi1 + … + Bp-1Xi,p-1 + ei

2. Fit reduced model: Yi = Bo + B1Xi1 + … + Bq-1Xi,q-1 + ei where q < p

Test: Ho: Bq = Bq+1 = … = Bp-1 = 0 versus Ha: at least one of these Bk’s in Ho ≠ 0

(This Ho is just the Bk’s in the Full model that are not in the Reduced model)

$$F^* = \frac{(SSE_R - SSE_F)/(p - q)}{SSE_F/(n - p)} = \frac{MSR(X_q, \ldots, X_{p-1} \mid X_1, \ldots, X_{q-1})}{MSE} \overset{H_0}{\sim} F_{p-q,\,n-p}$$

Example: Suppose Full Model: Yi = Bo + B1Xi1 + B2Xi2 + B3Xi3 + ei

We want to test Ho: B2=B3=0 versus Ha: at least one of B2, B3 ≠ 0

Fit Reduced Model: Yi = Bo + B1Xi1 + ei

$$\text{Use } F^* = \frac{[SSE(x_1) - SSE(x_1, x_2, x_3)]/(4 - 2)}{SSE_F/(n - 4)} = \frac{SSR(x_2, x_3 \mid x_1)/2}{MSE(x_1, x_2, x_3)}$$

Example for Body Fat Data pg 265: Test whether both X2 and X3 can be dropped from

the model containing X1 at α = 0.05.

Ho: B2 = B3 = 0 versus Ha: at least one of B2 or B3 ≠ 0

$$F^* = \frac{(SSE_R - SSE_F)/(p - q)}{SSE_F/(n - p)} = \frac{SSR(x_2, x_3 \mid x_1)/(4 - 2)}{MSE(x_1, x_2, x_3)} = \frac{[SSR(x_2 \mid x_1) + SSR(x_3 \mid x_1, x_2)]/2}{MSE(x_1, x_2, x_3)}$$

$$F^* = \frac{[SeqSS(x_2) + SeqSS(x_3)]/2}{MSE(x_1, x_2, x_3)} = \frac{(33.17 + 11.55)/2}{6.15} = \frac{22.36}{6.15} = 3.64$$

The p-value for F* = 3.64 with DF of 2 and 16 is 0.025 < p < 0.05 from Table B.4 using denominator of 15 DF, or 0.049785 from Minitab. So technically we reject Ho at α = 0.05, but the result is borderline: the critical value F(0.95; 2, 16) is about 3.63, so the decision is sensitive to rounding in F*. Rejecting Ho means that at least one of B2 or B3 is not equal to zero.
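To see how close this test sits to the 5% boundary, here is a minimal sketch of the general linear test for dropping X2 and X3. It assumes the SSE values quoted earlier (143.12 for the X1 model, 98.41 for the full model) and n = 20. The function names are made up for this example, and the closed-form tail probability used here holds only when the numerator DF is exactly 2.

```python
def general_linear_f(sse_reduced, sse_full, p, q, n):
    """F* for testing the p - q coefficients that are absent from the reduced model."""
    numerator = (sse_reduced - sse_full) / (p - q)
    denominator = sse_full / (n - p)              # MSE of the full model
    return numerator / denominator

def f_tail_two_numerator_df(f, den_df):
    """P(F > f) for an F distribution with exactly 2 numerator DF."""
    return (1 + 2 * f / den_df) ** (-den_df / 2)

f_unrounded = general_linear_f(143.12, 98.41, p=4, q=2, n=20)
p_unrounded = f_tail_two_numerator_df(f_unrounded, 16)

p_rounded = f_tail_two_numerator_df(3.64, 16)     # F* as rounded in the text

# Critical value: solve (1 + f/8)^(-8) = 0.05 for f.
f_crit = 8 * (0.05 ** (-1 / 8) - 1)

print(f_unrounded, p_unrounded, p_rounded, f_crit)
```

Under these assumptions the unrounded F* is only slightly above the critical value, so small rounding differences in the sums of squares move the p-value back and forth around 0.05.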

Special Cases

1. If q = 1 we have reduced model Yi = Bo + ei so we test Ho: B1=B2=….=Bp-1 = 0 by

using the overall F-test from ANOVA. F = MSR/MSE

2. If q = p-1 we are only testing a single Bk = 0 and we can then use the t-statistic.

Alternately:

SSEF = (1 – R²F)SST and SSER = (1 – R²R)SST, so we can substitute:

$$F^* = \frac{(R_F^2 - R_R^2)/(p - q)}{(1 - R_F^2)/(n - p)} \overset{H_0}{\sim} F_{p-q,\,n-p}$$

Body Fat Example for adding X3:

$$F^* = \frac{(0.801 - 0.778)/(4 - 3)}{(1 - 0.801)/(20 - 4)} = \frac{0.023/1}{0.199/16} = 1.85$$

which is similar to what we found earlier (1.88), up to rounding error.
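The rounding effect in the R² version of the test can be illustrated directly. The sketch below assumes the sums of squares quoted earlier in these notes (SSR = 385.44 for X1, X2; SSR = 396.98 for the full model; SST = 495.39). The function name `f_from_r2` is made up for this example.

```python
def f_from_r2(r2_full, r2_reduced, p, q, n):
    """General linear test statistic written in terms of R-squared."""
    return ((r2_full - r2_reduced) / (p - q)) / ((1 - r2_full) / (n - p))

# Using R-squared values rounded to three decimals, as in the text.
f_rounded = f_from_r2(0.801, 0.778, p=4, q=3, n=20)

# Using R-squared values computed from the sums of squares without rounding.
sst = 495.39
f_unrounded = f_from_r2(396.98 / sst, 385.44 / sst, p=4, q=3, n=20)

print(round(f_rounded, 2), round(f_unrounded, 2))  # 1.85 1.88
```

Carrying more digits in R² brings the statistic back to the 1.88 obtained from the sequential sums of squares, which suggests the gap is only rounding.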

Coefficients of Partial Determination

Example Body Fat page 270: Ŷ = 117 + 4.33X1 – 2.86X2 – 2.19X3, SSE(X1) = 143.12

NOTE: you can find SSE(X1) by taking the Seq SS for X2 and X3 and adding these to the SSE for the full model. Thus, SSE(X1) = 11.55 + 33.17 + 98.40 = 143.12. This works because each Seq SS reports the reduction in SSE as a variable is added to the model. So to get back to SSE(X1) we simply “add back” the SSR we “took out” when we added X2 to the model containing X1, and then X3 to the model containing X1 and X2.

Question: Should X2 be added to the model? SSE(X1,X2) = 109.95

$$R^2_{Y2|1} = \frac{SSE(x_1) - SSE(x_1, x_2)}{SSE(x_1)} = \frac{SSR(x_2 \mid x_1)}{SSE(x_1)} = \frac{33.17}{143.12} = 0.232$$

This indicates that adding x2 to the model that already contains x1 reduces the remaining (error) variability in Y by 23.2%.

$$R^2_{Y3|12} = \frac{SSE(x_1, x_2) - SSE(x_1, x_2, x_3)}{SSE(x_1, x_2)} = \frac{SSR(x_3 \mid x_1, x_2)}{SSE(x_1, x_2)} = \frac{11.55}{109.95} = 0.105$$

(Add SeqSS(x3) to SSE(x1,x2,x3) to get SSE(x1,x2).)

This indicates that adding x3 to the model that already contains x1 and x2 reduces the remaining (error) variability in Y by 10.5%.

For R²Y1|2 we need to regress Y on x2, x1 in that order.

$$R^2_{Y1|2} = \frac{SSR(x_1 \mid x_2)}{SSE(x_2)} = \frac{3.47}{109.95 + 3.47} = \frac{3.47}{113.42} = 0.031$$

Note that previously, adding x2 to x1 reduced the error SS of x1 by 23.2%, but adding x1 to x2 reduces the error SS of x2 by only 3.1%.

Coefficient of Partial Correlation

$$r_{Y2|1} = \sqrt{R^2_{Y2|1}} = \sqrt{0.232} = 0.482$$

and is + since B2 is + in the model containing only x1 and x2.

rY2|1 measures the linear association between Y (Body Fat) and x2 (Thigh) when holding x1 (Triceps) constant.

$$r_{Y3|12} = -\sqrt{R^2_{Y3|12}} = -\sqrt{0.105} = -0.324$$

and is negative because B3 is negative in the model containing x1, x2 and x3.
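The coefficients of partial determination and partial correlation above are simple ratios of the error sums of squares, which the following sketch recomputes. It assumes the SSE values quoted in these notes and takes the signs from the fitted coefficients as stated in the text. The function name `partial_r2` is made up for this example.

```python
import math

def partial_r2(sse_without, sse_with):
    """Share of the remaining error SS removed by the added variable."""
    return (sse_without - sse_with) / sse_without

r2_y2_1 = partial_r2(143.12, 109.95)     # X2 given X1
r2_y3_12 = partial_r2(109.95, 98.40)     # X3 given X1, X2
r2_y1_2 = partial_r2(113.42, 109.95)     # X1 given X2

# The square root carries the sign of the corresponding coefficient:
# positive for X2 in the (X1, X2) model, negative for X3 in the full model.
r_y2_1 = math.sqrt(r2_y2_1)
r_y3_12 = -math.sqrt(r2_y3_12)

print(round(r2_y2_1, 3), round(r2_y3_12, 3), round(r2_y1_2, 3))
print(round(r_y2_1, 3), round(r_y3_12, 3))
```

Without intermediate rounding the first partial correlation comes out near 0.481 rather than 0.482; the difference comes from taking the square root of the already rounded 0.232.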

In Minitab: To get partial correlation:

1. Regress Y on the conditioned variables – store residuals

2. Regress the new X on the conditioned variables – store residuals

3. Find correlation between these two sets of residuals for partial correlation
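The three residual steps above can be sketched outside Minitab as well. The example below uses synthetic data generated on the spot (it is NOT the Body Fat data) and conditions on a single variable so that plain simple-regression formulas are enough. All names are made up for this example. As a check, the result is compared with the standard first-order partial correlation identity, which is not part of these notes.

```python
import math
import random

def residuals(y, x):
    """Residuals from the simple linear regression of y on x (with intercept)."""
    n = len(y)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    slope = sxy / sxx
    return [b - (my + slope * (a - mx)) for a, b in zip(x, y)]

def corr(u, v):
    """Pearson correlation between two equal-length lists."""
    n = len(u)
    mu, mv = sum(u) / n, sum(v) / n
    suv = sum((a - mu) * (b - mv) for a, b in zip(u, v))
    suu = sum((a - mu) ** 2 for a in u)
    svv = sum((b - mv) ** 2 for b in v)
    return suv / math.sqrt(suu * svv)

# Synthetic illustration data, seeded for reproducibility.
rng = random.Random(0)
x1 = [rng.gauss(25, 5) for _ in range(20)]
x2 = [30 + 0.8 * a + rng.gauss(0, 3) for a in x1]
y = [0.2 * a + 0.6 * b + rng.gauss(0, 2) for a, b in zip(x1, x2)]

# Steps 1-3: residuals of Y on X1, residuals of X2 on X1, then correlate them.
r_resid = corr(residuals(y, x1), residuals(x2, x1))

# Check against the first-order partial correlation identity.
r_y1, r_y2, r_12 = corr(y, x1), corr(y, x2), corr(x1, x2)
r_formula = (r_y2 - r_y1 * r_12) / math.sqrt((1 - r_y1 ** 2) * (1 - r_12 ** 2))

print(r_resid, r_formula)
```

With more than one conditioning variable the same recipe applies, but each residual step becomes a multiple regression on all of the conditioned variables.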

Tests and Confidence Intervals for ρ12

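Test of Ho: ρ12 = 0 is equivalent to Ho: B1 = 0 since B1 = ρ12(σ1/σ2). To test, we use:

$$t^* = \frac{r\sqrt{n - 2}}{\sqrt{1 - r^2}} \overset{H_0}{\sim} t_{n-2}$$

For the test of Ho: ρ12 = ρo, which is NOT equivalent to Ho: B1 = 0, we use Fisher’s Z transformation, z’:

$$z' = \frac{1}{2}\ln\left(\frac{1 + r_{12}}{1 - r_{12}}\right) \sim N\left(\frac{1}{2}\ln\left(\frac{1 + \rho_{12}}{1 - \rho_{12}}\right),\ \frac{1}{n - 3}\right) \quad \text{approximately, for } n \ge 25$$

and we can use Table B.8 instead of the formula. Therefore, for a large enough n, we can test Ho: ρ12 = ρo by:

$$Z^* = \frac{\frac{1}{2}\ln\left(\frac{1 + r}{1 - r}\right) - \frac{1}{2}\ln\left(\frac{1 + \rho_o}{1 - \rho_o}\right)}{\sqrt{\frac{1}{n - 3}}} \overset{H_0}{\sim} N(0, 1)$$

and this test statistic can be compared to the critical value from the standard normal table for (1 – α/2) for a 2-sided test or (1 – α) for a 1-sided test. Or, you can find the p-value from the standard normal distribution, e.g. P(Z < Z*) for a lower-tailed alternative.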

The (1 – α)100% confidence interval for ρ12 is found by:

1. Compute

$$z' \pm z(1 - \alpha/2)\,\sigma\{z'\} = z' \pm \frac{z(1 - \alpha/2)}{\sqrt{n - 3}}$$

which gives the CI for z’; then, to convert this to a CI for ρ12:

2. We go to Table B.8 and locate the values under z’ that are closest to the bounds for z’ found in step 1 to select the bounds for ρ12, keeping the signs for z’ the same.

The interpretation of this interval follows the usual interval interpretations.

Example: Data on 30 individuals was taken, including Age and Systolic Blood Pressure (SBP). The regression line is AGE = 98.75 + 0.97 SBP with r = 0.66. Using the correlation model to test B1 = 0 we get

$$t^* = \frac{0.66\sqrt{28}}{\sqrt{1 - 0.66^2}} = 4.62$$

(with r rounded to 0.66 the formula gives about 4.65; the conclusion is unchanged). From MTB, 2P(t28 > 4.62) = 0.00008, so reject Ho that B1 = 0. To test Ho: ρ12 = 0.85 vs Ha: ρ12 < 0.85 we compute the Fisher Z by:

$$Z^* = \frac{\frac{1}{2}\ln\left(\frac{1 + 0.66}{1 - 0.66}\right) - \frac{1}{2}\ln\left(\frac{1 + 0.85}{1 - 0.85}\right)}{\sqrt{\frac{1}{30 - 3}}} = -2.41$$

and P(Z < –2.41) = 0.008, so reject Ho.

A 99% confidence interval for ρ12 uses z’ ± z(1 – α/2)/√(n – 3), where z’ = ½ ln[(1 + r12)/(1 – r12)], and begins with

$$z' = \frac{1}{2}\ln\left(\frac{1 + 0.66}{1 - 0.66}\right) = 0.793, \qquad 0.793 \pm \frac{2.575}{\sqrt{27}} \;\Rightarrow\; 0.297 \le z' \le 1.289$$

To convert this to a confidence interval for ρ12, use Table B.8 to find the limits for ρ12. From the table, the values under z’ closest to these limits are 0.2986 and 1.2933, which gives the 99% CI for ρ12: 0.29 ≤ ρ12 ≤ 0.86
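The Fisher Z test and the confidence interval in this example can be reproduced without Table B.8 by using the hyperbolic tangent, which is the inverse of the z’ transformation. The sketch below assumes the example's values (r = 0.66, n = 30, ρo = 0.85) and uses Python's standard-library `statistics.NormalDist` for normal probabilities. The function name `fisher_z` is made up for this example.

```python
import math
from statistics import NormalDist

def fisher_z(r):
    """Fisher's z' transformation of a correlation coefficient."""
    return 0.5 * math.log((1 + r) / (1 - r))

r, n, rho_0 = 0.66, 30, 0.85
se = 1 / math.sqrt(n - 3)                      # sigma{z'}

# Lower-tailed test of Ho: rho = 0.85 versus Ha: rho < 0.85.
z_star = (fisher_z(r) - fisher_z(rho_0)) / se
p_lower = NormalDist().cdf(z_star)

# 99% confidence interval: build it for z', then back-transform with tanh.
z_crit = NormalDist().inv_cdf(1 - 0.01 / 2)    # about 2.576
lo_z = fisher_z(r) - z_crit * se
hi_z = fisher_z(r) + z_crit * se
lo_rho, hi_rho = math.tanh(lo_z), math.tanh(hi_z)

print(round(z_star, 2), round(p_lower, 3))
print(round(lo_z, 3), round(hi_z, 3), round(lo_rho, 2), round(hi_rho, 2))
```

Back-transforming with `tanh` gives limits that agree with the table lookup to two decimals, so the table is a convenience rather than a requirement.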

Example: Body Fat page 271:

rY2|1: Regress Y on X1; regress X2 on X1; find the correlation of the residuals (0.481).

rY3|12: Regress Y on X1,X2; regress X3 on X1,X2; find the correlation of the residuals

(-0.324).

You find rY2|1
