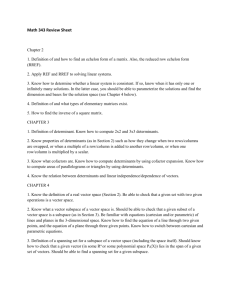

Orthogonality and Least Squares

advertisement

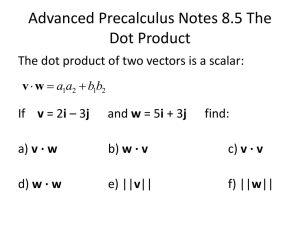

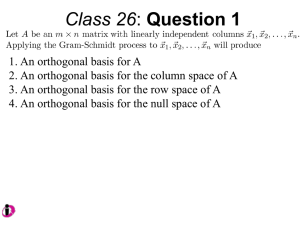

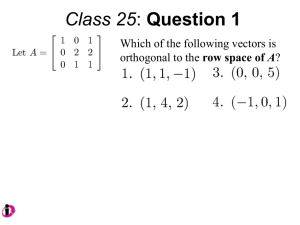

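An inner product on a vector space $V$ is a function that associates with each pair of vectors $\mathbf{x}$ and $\mathbf{y}$ a scalar, denoted by $\langle \mathbf{x}, \mathbf{y} \rangle$, such that, if $\mathbf{x}$, $\mathbf{y}$, and $\mathbf{z}$ are vectors and $c$ is a scalar, then

- (a) $\langle \mathbf{x}, \mathbf{y} \rangle = \langle \mathbf{y}, \mathbf{x} \rangle$;
- (b) $\langle \mathbf{x}, \mathbf{y} + \mathbf{z} \rangle = \langle \mathbf{x}, \mathbf{y} \rangle + \langle \mathbf{x}, \mathbf{z} \rangle$;
- (c) $\langle c\mathbf{x}, \mathbf{y} \rangle = c\,\langle \mathbf{x}, \mathbf{y} \rangle$;
- (d) $\langle \mathbf{x}, \mathbf{x} \rangle \ge 0$, and $\langle \mathbf{x}, \mathbf{x} \rangle = 0$ if and only if $\mathbf{x} = \mathbf{0}$.

The dot product of two vectors $\mathbf{x} = (x_1, x_2, \ldots, x_n)$ and $\mathbf{y} = (y_1, y_2, \ldots, y_n)$ in $\mathbb{R}^n$ is defined to be

$$\mathbf{x} \cdot \mathbf{y} = x_1 y_1 + x_2 y_2 + \cdots + x_n y_n .$$

Note: The dot product in $\mathbb{R}^n$ is an inner product (sometimes called the Euclidean inner product). Thus $\mathbf{x} \cdot \mathbf{y} = \langle \mathbf{x}, \mathbf{y} \rangle = \mathbf{x}^T \mathbf{y}$.

The length of the vector $\mathbf{x} = (x_1, x_2, \ldots, x_n)$, denoted by $|\mathbf{x}|$, is defined as

$$|\mathbf{x}| = \sqrt{\mathbf{x} \cdot \mathbf{x}} = \left(x_1^2 + x_2^2 + \cdots + x_n^2\right)^{1/2}.$$

Cauchy-Schwarz Inequality: $|\mathbf{x} \cdot \mathbf{y}| \le |\mathbf{x}|\,|\mathbf{y}|$.

The angle $\theta$ between two nonzero vectors $\mathbf{x}$ and $\mathbf{y}$, where $0 \le \theta \le \pi$, is given by

$$\cos\theta = \frac{\mathbf{x} \cdot \mathbf{y}}{|\mathbf{x}|\,|\mathbf{y}|}.$$

(The Cauchy-Schwarz inequality guarantees that this quotient lies in $[-1, 1]$, so $\theta$ is well defined.) The vectors $\mathbf{x}$ and $\mathbf{y}$ are called orthogonal provided that $\mathbf{x} \cdot \mathbf{y} = 0$.

The distance between two vectors $\mathbf{x} = (x_1, x_2, \ldots, x_n)$ and $\mathbf{y} = (y_1, y_2, \ldots, y_n)$ in $\mathbb{R}^n$, denoted by $d(\mathbf{x}, \mathbf{y})$, is defined as

$$d(\mathbf{x}, \mathbf{y}) = |\mathbf{x} - \mathbf{y}| = \left[(x_1 - y_1)^2 + (x_2 - y_2)^2 + \cdots + (x_n - y_n)^2\right]^{1/2}.$$

The Triangle Inequality: $|\mathbf{x} + \mathbf{y}| \le |\mathbf{x}| + |\mathbf{y}|$.

If the vectors $\mathbf{x}$ and $\mathbf{y}$ are orthogonal, then the identity $|\mathbf{x} + \mathbf{y}|^2 = |\mathbf{x}|^2 + 2\,\mathbf{x} \cdot \mathbf{y} + |\mathbf{y}|^2$ used to prove the triangle inequality yields the Pythagorean formula:

$$|\mathbf{x} + \mathbf{y}|^2 = |\mathbf{x}|^2 + |\mathbf{y}|^2.$$

Relation between orthogonality and linear independence: If the nonzero vectors $\mathbf{v}_1, \mathbf{v}_2, \ldots, \mathbf{v}_k$ are mutually orthogonal, then they are linearly independent.

Exercise: Is the converse of the above statement true? Justify your answer.

Note: Any set of $n$ mutually orthogonal nonzero vectors in $\mathbb{R}^n$ constitutes a basis for $\mathbb{R}^n$, called an orthogonal basis.

Exercise: Give an example of an orthogonal basis for $\mathbb{R}^n$.

Note: A vector $\mathbf{x}$ in $\mathbb{R}^n$ is a solution vector of $A\mathbf{x} = \mathbf{0}$ if and only if $\mathbf{x}$ is orthogonal to each vector in the row space $\text{Row}(A)$.

Definition: The vector $\mathbf{u}$ is orthogonal to the subspace $W$ of $\mathbb{R}^n$ provided that $\mathbf{u}$ is orthogonal to every vector in $W$.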
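The formulas above translate almost line for line into code. The following is a minimal Python sketch, assuming vectors are plain lists of floats; the helper names `dot`, `length`, `angle`, `distance`, and `is_orthogonal` are hypothetical, chosen only for this illustration. It checks the definitions on the orthogonal pair $\mathbf{x} = (1, 2, 2)$, $\mathbf{y} = (2, 1, -2)$.

```python
import math


def dot(x, y):
    """Euclidean inner product x . y = x1*y1 + x2*y2 + ... + xn*yn."""
    if len(x) != len(y):
        raise ValueError("vectors must have the same number of components")
    return sum(a * b for a, b in zip(x, y))


def length(x):
    """|x| = sqrt(x . x)."""
    return math.sqrt(dot(x, x))


def angle(x, y):
    """Angle theta in [0, pi] between nonzero x and y: cos(theta) = x.y / (|x| |y|)."""
    c = dot(x, y) / (length(x) * length(y))
    # Cauchy-Schwarz gives |c| <= 1; clamping only absorbs floating-point rounding.
    return math.acos(max(-1.0, min(1.0, c)))


def distance(x, y):
    """d(x, y) = |x - y|."""
    return length([a - b for a, b in zip(x, y)])


def is_orthogonal(x, y, tol=1e-12):
    """x and y are orthogonal when x . y = 0 (up to a floating-point tolerance)."""
    return abs(dot(x, y)) <= tol


x = [1.0, 2.0, 2.0]
y = [2.0, 1.0, -2.0]
print(dot(x, y))       # 0.0, so x and y are orthogonal
print(length(x))       # 3.0
print(angle(x, y))     # pi/2, about 1.5708
print(distance(x, y))  # sqrt(18), about 4.2426

# Pythagorean formula for orthogonal vectors: |x + y|^2 = |x|^2 + |y|^2 (18.0 on both sides).
s = [a + b for a, b in zip(x, y)]
print(dot(s, s), dot(x, x) + dot(y, y))
```

The tolerance in `is_orthogonal` is an assumption for floating-point input; with exact arithmetic the test would simply be `dot(x, y) == 0`.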
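The orthogonal complement $W^\perp$ of $W$ is the set of all vectors in $\mathbb{R}^n$ that are orthogonal to the subspace $W$.

Note: $W^\perp$ is itself a subspace.

Theorem: Let $A$ be an $m \times n$ matrix. Then the row space $\text{Row}(A)$ and the null space $\text{Null}(A)$ are orthogonal complements in $\mathbb{R}^n$. That is, if $W = \text{Row}(A)$, then $W^\perp = \text{Null}(A)$.

Finding a basis for the orthogonal complement of a subspace $W$ of $\mathbb{R}^n$:

1. Let $W$ be a subspace of $\mathbb{R}^n$ spanned by the vectors $\mathbf{v}_1, \mathbf{v}_2, \ldots, \mathbf{v}_k$.
2. Let $A$ be the $k \times n$ matrix with row vectors $\mathbf{v}_1, \mathbf{v}_2, \ldots, \mathbf{v}_k$.
3. Reduce the matrix $A$ to echelon form.
4. Find a basis for the solution space $\text{Null}(A)$ of $A\mathbf{x} = \mathbf{0}$.

Since $W = \text{Row}(A)$, the theorem gives $W^\perp = \text{Null}(A)$, so the basis obtained in step 4 is a basis for the orthogonal complement of $W$.

Note:

1. $\dim W + \dim W^\perp = n$.
2. The union of a basis for $W$ and a basis for $W^\perp$ is a basis for $\mathbb{R}^n$.

The linear system $A\mathbf{x} = \mathbf{b}$ is called overdetermined if $A$ is $m \times n$ with $m > n$, i.e., the system has more equations than variables.

Assumption in the following discussion: The matrix $A$ has rank $n$, which means that its $n$ column vectors $\mathbf{a}_1, \mathbf{a}_2, \ldots, \mathbf{a}_n$ are linearly independent and hence form a basis for $\text{Col}(A)$.

We consider the situation in which the system $A\mathbf{x} = \mathbf{b}$ is inconsistent. Observe that the system may be written as

$$\mathbf{b} = x_1 \mathbf{a}_1 + x_2 \mathbf{a}_2 + \cdots + x_n \mathbf{a}_n ,$$

so inconsistency simply means that $\mathbf{b}$ is not in the $n$-dimensional subspace $\text{Col}(A)$ of $\mathbb{R}^m$. Motivated by this, we want to find the orthogonal projection $\mathbf{p}$ of $\mathbf{b}$ into $\text{Col}(A)$. That is, given a vector $\mathbf{b}$ in $\mathbb{R}^m$ that does not lie in the subspace $V$ of $\mathbb{R}^m$ with basis vectors $\mathbf{a}_1, \mathbf{a}_2, \ldots, \mathbf{a}_n$, we want to find vectors $\mathbf{p}$ in $V$ and $\mathbf{q}$ in $V^\perp$ such that

$$\mathbf{b} = \mathbf{p} + \mathbf{q}.$$

The unique vector $\mathbf{p}$ is called the orthogonal projection of the vector $\mathbf{b}$ into the subspace $V = \text{Col}(A)$.

Algorithm for the explicit computation of $\mathbf{p}$:

1. Since $\mathbf{p}$ lies in $\text{Col}(A)$ and the columns $\mathbf{a}_1, \mathbf{a}_2, \ldots, \mathbf{a}_n$ of the $m \times n$ matrix $A$ are linearly independent, $\mathbf{p} = A\mathbf{y}$ for a unique vector $\mathbf{y} = (y_1, y_2, \ldots, y_n)$.
2. Because $\mathbf{q} = \mathbf{b} - A\mathbf{y}$ is orthogonal to every column of $A$, we have $A^T(\mathbf{b} - A\mathbf{y}) = \mathbf{0}$. Hence $\mathbf{y}$ satisfies the normal system associated with $A\mathbf{x} = \mathbf{b}$, namely $A^T A \mathbf{y} = A^T \mathbf{b}$. (Note that $A^T A$ is nonsingular.)
3. Consequently, the normal system has the unique solution $\mathbf{y} = (A^T A)^{-1} A^T \mathbf{b}$.
4. The orthogonal projection $\mathbf{p}$ of $\mathbf{b}$ into $\text{Col}(A)$ is given by $\mathbf{p} = A\mathbf{y} = A (A^T A)^{-1} A^T \mathbf{b}$.

Definition: Let the $m \times n$ matrix $A$ have rank $n$. Then the least squares solution of the system $A\mathbf{x} = \mathbf{b}$ is the solution $\mathbf{y}$ of the corresponding normal system $A^T A \mathbf{y} = A^T \mathbf{b}$.

Note:

1. If the system $A\mathbf{x} = \mathbf{b}$ is inconsistent, then its least squares solution is as close as possible to being a solution: among all vectors $\mathbf{x}$, it minimizes $|A\mathbf{x} - \mathbf{b}|$, because $\mathbf{p} = A\mathbf{y}$ is the vector of $\text{Col}(A)$ closest to $\mathbf{b}$.
2. If the system is consistent, then its least squares solution is the actual (unique) solution.
3. The least sum of squares of errors is given by $|\mathbf{p} - \mathbf{b}|^2$.

Example: The orthogonal projection of the vector $\mathbf{b}$ into the line through the origin in $\mathbb{R}^m$ determined by the single nonzero vector $\mathbf{a}$ (so $A$ is the $m \times 1$ matrix $\mathbf{a}$) is given by

$$\mathbf{p} = \mathbf{a}\,y = \mathbf{a}\,\frac{\mathbf{a}^T \mathbf{b}}{\mathbf{a}^T \mathbf{a}} = \frac{\mathbf{a} \cdot \mathbf{b}}{\mathbf{a} \cdot \mathbf{a}}\,\mathbf{a}.$$

Example: To find the straight line $y = a + bx$ that best fits the data points $(x_1, y_1), (x_2, y_2), \ldots, (x_n, y_n)$, the least squares solution $\mathbf{x} = (a, b)$ is obtained by solving the normal equations (here $n$ is the number of data points, and row $i$ of $A$ is $(1, x_i)$):

$$
\begin{aligned}
n a + \left(\sum_{i=1}^{n} x_i\right) b &= \sum_{i=1}^{n} y_i, \\
\left(\sum_{i=1}^{n} x_i\right) a + \left(\sum_{i=1}^{n} x_i^2\right) b &= \sum_{i=1}^{n} x_i y_i .
\end{aligned}
$$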
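The procedure for a basis of $W^\perp$ and the algorithm for the normal system both come down to row reduction, so the sketch below shares one helper between them. It is a minimal pure-Python illustration, assuming matrices are lists of rows and using exact `fractions.Fraction` arithmetic to avoid rounding; the function names `rref`, `complement_basis`, `least_squares`, `projection`, and `fit_line` are hypothetical. It solves $A^T A \mathbf{y} = A^T \mathbf{b}$ by row-reducing the augmented matrix $[A^T A \mid A^T \mathbf{b}]$ rather than forming $(A^T A)^{-1}$ explicitly, which gives the same $\mathbf{y}$ when $A$ has rank $n$.

```python
from fractions import Fraction


def rref(rows):
    """Reduced row echelon form of `rows`, computed with exact Fractions.
    Returns (R, pivots), where `pivots` lists the pivot column indices."""
    m = [[Fraction(v) for v in row] for row in rows]
    pivots, r = [], 0
    for c in range(len(m[0])):
        # Find a row at or below row r with a nonzero entry in column c.
        p = next((i for i in range(r, len(m)) if m[i][c] != 0), None)
        if p is None:
            continue  # no pivot in column c: it belongs to a free variable
        m[r], m[p] = m[p], m[r]
        lead = m[r][c]
        m[r] = [v / lead for v in m[r]]
        # Clear column c in every other row.
        for i in range(len(m)):
            if i != r and m[i][c] != 0:
                f = m[i][c]
                m[i] = [a - f * b for a, b in zip(m[i], m[r])]
        pivots.append(c)
        r += 1
        if r == len(m):
            break
    return m, pivots


def complement_basis(vectors):
    """Basis for W-perp, W = span(vectors): a basis for Null(A), where the
    rows of A are the given vectors (steps 1-4 of the procedure)."""
    R, pivots = rref(vectors)
    n = len(vectors[0])
    basis = []
    for f in (c for c in range(n) if c not in pivots):
        x = [Fraction(0)] * n
        x[f] = Fraction(1)  # this free variable = 1, the other free ones = 0
        for row, pc in enumerate(pivots):
            x[pc] = -R[row][f]  # solve that row of R x = 0 for its pivot variable
        basis.append(x)
    return basis


def transpose(A):
    return [list(col) for col in zip(*A)]


def matmul(A, B):
    Bt = transpose(B)
    return [[sum(a * b for a, b in zip(row, col)) for col in Bt] for row in A]


def least_squares(A, b):
    """Least squares solution y of Ax = b via the normal system A^T A y = A^T b.
    Assumes A is m x n with rank n, so that A^T A is nonsingular."""
    n = len(A[0])
    At = transpose(A)
    AtA = matmul(At, A)
    Atb = matmul(At, [[v] for v in b])
    R, pivots = rref([row + rhs for row, rhs in zip(AtA, Atb)])
    if pivots != list(range(n)):
        raise ValueError("A must have rank n (linearly independent columns)")
    return [row[-1] for row in R]  # R = [I | y]


def projection(A, b):
    """Orthogonal projection p = A y = A (A^T A)^{-1} A^T b of b into Col(A)."""
    y = least_squares(A, b)
    return [sum(a * yi for a, yi in zip(row, y)) for row in A]


def fit_line(points):
    """Coefficients [a, b] of the least squares line y = a + b x.
    Row i of A is (1, x_i), so A^T A y = A^T b are the normal equations above."""
    A = [[1, x] for x, _ in points]
    return least_squares(A, [y for _, y in points])


# W = span{(1, 2, 0, 1), (0, 1, 1, 0)} in R^4.
print(complement_basis([[1, 2, 0, 1], [0, 1, 1, 0]]))  # (2, -1, 1, 0) and (-1, 0, 0, 1), as Fractions

# Inconsistent system: no line passes through all three points.
pts = [(0, 1), (1, 2), (2, 4)]
a, b = fit_line(pts)
print(a, b)  # 5/6 3/2
p = projection([[1, x] for x, _ in pts], [y for _, y in pts])
print(sum((pk - yk) ** 2 for pk, (_, yk) in zip(p, pts)))  # |p - b|^2 = 1/6
```

For the three points $(0, 1), (1, 2), (2, 4)$, the normal equations are $3a + 3b = 7$ and $3a + 5b = 10$, giving the line $y = \tfrac{5}{6} + \tfrac{3}{2}x$ with least sum of squares of errors $\tfrac{1}{6}$. Exact fractions keep the small example readable; for large floating-point data sets, a QR-based solver from a numerical library is generally preferred, since forming $A^T A$ squares the condition number of $A$.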