Notes

Numerical Methods for Eng [ENGR 391] [Lyes KADEM 2007]

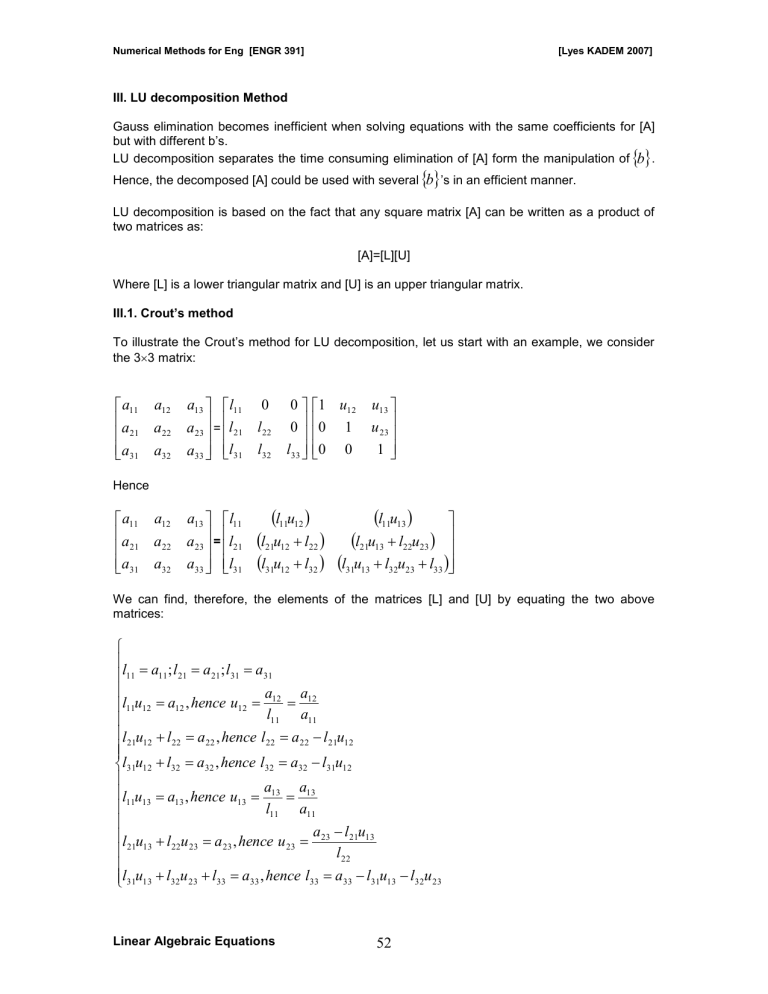

III. LU decomposition Method

Gauss elimination becomes inefficient when solving equations with the same coefficients for [A] but with different b’s.

LU decomposition separates the time-consuming elimination of [A] from the manipulation of the right-hand side {b}. Hence, the decomposed [A] can be used with several {b}’s in an efficient manner.

LU decomposition is based on the fact that a square matrix [A] can be written as a product of two matrices (row interchanges may be needed first if a zero pivot appears):

[A]=[L][U]

Where [L] is a lower triangular matrix and [U] is an upper triangular matrix.

III.1. Crout’s method

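To illustrate Crout’s method for LU decomposition, let us start with an example. Consider the 3 × 3 matrix:

$$
\begin{bmatrix} a_{11} & a_{12} & a_{13} \\ a_{21} & a_{22} & a_{23} \\ a_{31} & a_{32} & a_{33} \end{bmatrix}
=
\begin{bmatrix} l_{11} & 0 & 0 \\ l_{21} & l_{22} & 0 \\ l_{31} & l_{32} & l_{33} \end{bmatrix}
\begin{bmatrix} 1 & u_{12} & u_{13} \\ 0 & 1 & u_{23} \\ 0 & 0 & 1 \end{bmatrix}
$$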

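Hence

$$
\begin{bmatrix} a_{11} & a_{12} & a_{13} \\ a_{21} & a_{22} & a_{23} \\ a_{31} & a_{32} & a_{33} \end{bmatrix}
=
\begin{bmatrix}
l_{11} & l_{11}u_{12} & l_{11}u_{13} \\
l_{21} & l_{21}u_{12} + l_{22} & l_{21}u_{13} + l_{22}u_{23} \\
l_{31} & l_{31}u_{12} + l_{32} & l_{31}u_{13} + l_{32}u_{23} + l_{33}
\end{bmatrix}
$$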

We can find, therefore, the elements of the matrices [L] and [U] by equating the two above matrices:

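$$
\begin{aligned}
& l_{11} = a_{11}, \quad l_{21} = a_{21}, \quad l_{31} = a_{31}; \\
& l_{11}u_{12} = a_{12}, \ \text{hence } u_{12} = \frac{a_{12}}{l_{11}} = \frac{a_{12}}{a_{11}}; \qquad
  l_{11}u_{13} = a_{13}, \ \text{hence } u_{13} = \frac{a_{13}}{l_{11}} = \frac{a_{13}}{a_{11}}; \\
& l_{21}u_{12} + l_{22} = a_{22}, \ \text{hence } l_{22} = a_{22} - l_{21}u_{12}; \qquad
  l_{31}u_{12} + l_{32} = a_{32}, \ \text{hence } l_{32} = a_{32} - l_{31}u_{12}; \\
& l_{21}u_{13} + l_{22}u_{23} = a_{23}, \ \text{hence } u_{23} = \frac{a_{23} - l_{21}u_{13}}{l_{22}}; \\
& l_{31}u_{13} + l_{32}u_{23} + l_{33} = a_{33}, \ \text{hence } l_{33} = a_{33} - l_{31}u_{13} - l_{32}u_{23}.
\end{aligned}
$$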

Linear Algebraic Equations 52


Oooooooooouffffffffff !!!.

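For a general n × n matrix, you have to apply the following expressions to find the LU decomposition of a matrix [A]:

$$
l_{ij} = a_{ij} - \sum_{k=1}^{j-1} l_{ik}u_{kj}; \quad i \ge j; \quad i = 1, 2, \dots, n
$$

$$
u_{ij} = \frac{a_{ij} - \sum_{k=1}^{i-1} l_{ik}u_{kj}}{l_{ii}}; \quad i < j; \quad j = 2, 3, \dots, n
$$

and

$$
u_{ii} = 1; \quad i = 1, 2, \dots, n
$$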

IMPORTANT NOTE

As for the 3 × 3 matrix (see above), it is better to follow a certain order when computing the terms of the [L] and [U] matrices. This order is: $l_{i1}, u_{1j};\ l_{i2}, u_{2j};\ \dots;\ l_{i,n-1}, u_{n-1,j};\ l_{nn}$.
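The general expressions and the computation order above can be sketched in Python as follows. This is a minimal illustration, not a production routine: the function name `crout` and the test matrix are assumptions of this sketch, and no row interchange (pivoting) is performed, so a zero pivot simply raises an error.

```python
def crout(a):
    """Return (L, U) such that A = L U, with a unit diagonal on U (Crout form)."""
    n = len(a)
    L = [[0.0] * n for _ in range(n)]
    U = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]
    for j in range(n):
        # Column j of L: l_ij = a_ij - sum_{k<j} l_ik u_kj, for i >= j
        for i in range(j, n):
            L[i][j] = a[i][j] - sum(L[i][k] * U[k][j] for k in range(j))
        if L[j][j] == 0.0:
            raise ZeroDivisionError("zero pivot: row interchanges would be needed")
        # Row j of U: u_jm = (a_jm - sum_{k<j} l_jk u_km) / l_jj, for m > j
        for m in range(j + 1, n):
            U[j][m] = (a[j][m] - sum(L[j][k] * U[k][m] for k in range(j))) / L[j][j]
    return L, U


# Illustrative matrix (an assumption of this sketch)
A = [[2.0, -1.0, 1.0],
     [4.0, 3.0, -1.0],
     [3.0, 2.0, 2.0]]
L, U = crout(A)
```

The outer loop alternates between one column of [L] and one row of [U], which is exactly the order recommended in the note.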

Example

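Find the LU decomposition of the following matrix using Crout’s method:

$$
[A] = \begin{bmatrix} a_{11} & a_{12} & a_{13} \\ a_{21} & a_{22} & a_{23} \\ a_{31} & a_{32} & a_{33} \end{bmatrix}
= \begin{bmatrix} 2 & -1 & 1 \\ 4 & 3 & -1 \\ 3 & 2 & 2 \end{bmatrix}
$$

III.2. Solution of equations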

Now, to solve our system of linear equations, we can express our initial system:

$$[A]\{x\} = \{b\}$$

under the following form:

$$[L][U]\{x\} = \{b\}$$

To find the solution {x}, we first define a vector {z}:

$$\{z\} = [U]\{x\}$$

Our initial system then becomes:

$$[L]\{z\} = \{b\}$$


As [L] is a lower triangular matrix, the elements of {z} can be computed by forward substitution, starting with $z_1$ and ending with $z_n$. Then the values of {x} can be found using the equation:

$$[U]\{x\} = \{z\}$$

As [U] is an upper triangular matrix, it is possible to compute {x} using a back substitution process, starting with $x_n$ and ending with $x_1$. [You will better understand with an example …]

The general form to solve a system of linear equations using LU decomposition is:

$$
z_1 = \frac{b_1}{l_{11}}; \qquad
z_i = \frac{b_i - \sum_{k=1}^{i-1} l_{ik} z_k}{l_{ii}}; \quad i = 2, 3, \dots, n
$$

And

$$
x_n = z_n; \qquad
x_i = z_i - \sum_{k=i+1}^{n} u_{ik} x_k; \quad i = n-1, n-2, \dots, 2, 1
$$
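The two substitution sweeps can be sketched as follows. The factors `L` and `U` below are hard-coded Crout factors of an illustrative 3 × 3 matrix, and the right-hand side `b` is likewise chosen for illustration; all three are assumptions of this sketch.

```python
def lu_solve(L, U, b):
    """Solve [L][U]{x} = {b}, where U has a unit diagonal (Crout form)."""
    n = len(b)
    # Forward substitution: [L]{z} = {b}, from z_1 down to z_n
    z = [0.0] * n
    for i in range(n):
        z[i] = (b[i] - sum(L[i][k] * z[k] for k in range(i))) / L[i][i]
    # Back substitution: [U]{x} = {z}, from x_n up to x_1 (u_ii = 1, no division)
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        x[i] = z[i] - sum(U[i][k] * x[k] for k in range(i + 1, n))
    return x


# Crout factors of [[2, -1, 1], [4, 3, -1], [3, 2, 2]] (illustrative values)
L = [[2.0, 0.0, 0.0],
     [4.0, 5.0, 0.0],
     [3.0, 3.5, 2.6]]
U = [[1.0, -0.5, 0.5],
     [0.0, 1.0, -0.6],
     [0.0, 0.0, 1.0]]
b = [4.0, 6.0, 15.0]
x = lu_solve(L, U, b)
```

Once `L` and `U` are known, each new right-hand side costs only these two sweeps, which is why the decomposition pays off when several {b}’s share the same [A].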

Example

Solve the following equations using the LU decomposition:

$$
\begin{aligned}
2x_1 - x_2 + x_3 &= 4 \\
4x_1 + 3x_2 - x_3 &= 6 \\
3x_1 + 2x_2 + 2x_3 &= 15
\end{aligned}
$$

Note on storage of [A]; [L]; [U]

1- In practice, the matrices [L] and [U] do not need to be stored separately. By omitting the zeroes in [L] and [U] and the ones on the diagonal of [U], it is possible to store the elements of [L] and [U] in the same matrix.

2- Note also that, in the general formula for LU decomposition, once an element of the matrix [A] is used, it is not needed in the subsequent computations. Hence, the elements of the matrix generated in point (1) above can be stored in [A].
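A minimal sketch of this compact storage is given below: the Crout factors overwrite the input matrix, with [L] on and below the diagonal and the off-diagonal part of [U] above it. The function name `crout_in_place` and the test matrix are assumptions of this sketch, and no pivoting is performed.

```python
def crout_in_place(a):
    """Overwrite a with its Crout factors: L on/below the diagonal, U above it."""
    n = len(a)
    for j in range(n):
        # Column j of L; entries a[i][k] (k < j) already hold L, a[k][j] already hold U
        for i in range(j, n):
            a[i][j] -= sum(a[i][k] * a[k][j] for k in range(j))
        # Row j of U (the unit diagonal of U is implied, not stored)
        for m in range(j + 1, n):
            a[j][m] = (a[j][m] - sum(a[j][k] * a[k][m] for k in range(j))) / a[j][j]
    return a


# Illustrative matrix (an assumption of this sketch)
compact = crout_in_place([[2.0, -1.0, 1.0],
                          [4.0, 3.0, -1.0],
                          [3.0, 2.0, 2.0]])
```

Each entry of the original matrix is read for the last time at the moment it is overwritten, so no second array is needed.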


III.3. Choleski’s method for symmetric matrices

In many engineering applications, the matrices involved are symmetric and positive definite. It is then better to use Choleski’s method.

In Choleski’s method, the matrix [A] is decomposed into:

$[A] = [U]^T [U]$, where [U] is an upper triangular matrix.

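The elements of [U] are given by:

$$
\begin{aligned}
u_{11} &= (a_{11})^{1/2} \\
u_{1j} &= \frac{a_{1j}}{u_{11}}; \quad j = 2, 3, \dots, n \\
u_{ii} &= \left( a_{ii} - \sum_{k=1}^{i-1} u_{ki}^2 \right)^{1/2}; \quad i = 2, 3, \dots, n \\
u_{ij} &= \frac{1}{u_{ii}} \left( a_{ij} - \sum_{k=1}^{i-1} u_{ki} u_{kj} \right); \quad i = 2, 3, \dots, n \ \text{ and } \ j = i+1, i+2, \dots, n \\
u_{ij} &= 0; \quad i > j
\end{aligned}
$$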
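These expressions translate almost line by line into code. The sketch below assumes a symmetric positive definite input; the function name `cholesky_upper` and the test matrix are assumptions of this illustration.

```python
import math


def cholesky_upper(a):
    """Return upper triangular U with A = U^T U (A symmetric positive definite)."""
    n = len(a)
    U = [[0.0] * n for _ in range(n)]
    for i in range(n):
        # Diagonal term: u_ii = sqrt(a_ii - sum_{k<i} u_ki^2)
        s = a[i][i] - sum(U[k][i] ** 2 for k in range(i))
        if s <= 0.0:
            raise ValueError("matrix is not positive definite")
        U[i][i] = math.sqrt(s)
        # Off-diagonal terms of row i: u_ij = (a_ij - sum_{k<i} u_ki u_kj) / u_ii
        for j in range(i + 1, n):
            U[i][j] = (a[i][j] - sum(U[k][i] * U[k][j] for k in range(i))) / U[i][i]
    return U


# Illustrative symmetric positive definite matrix (an assumption of this sketch)
A = [[4.0, 2.0, 2.0],
     [2.0, 5.0, 3.0],
     [2.0, 3.0, 6.0]]
U = cholesky_upper(A)
```

For i = 1 the sums are empty, so the first two expressions (for $u_{11}$ and $u_{1j}$) are covered by the same loop.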

III.4. Inverse of a symmetric matrix

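If a matrix [A] is square and non-singular, there is another matrix $[A]^{-1}$, called the inverse of [A], such that:

$$[A][A]^{-1} = [A]^{-1}[A] = [I]$$

where [I] is the identity matrix.

To compute the inverse matrix, the first column of $[A]^{-1}$ is obtained by solving the problem (for a 3 × 3 matrix):

$$
[A]\{x\} = \{b\} = \begin{Bmatrix} 1 \\ 0 \\ 0 \end{Bmatrix}; \quad
\text{second column: } \{b\} = \begin{Bmatrix} 0 \\ 1 \\ 0 \end{Bmatrix}; \quad
\text{and third column: } \{b\} = \begin{Bmatrix} 0 \\ 0 \\ 1 \end{Bmatrix}
$$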

The best way to implement such a calculation is to use LU decomposition.

In engineering, the inverse matrix is of particular interest, since its elements represent the response of a single part of the system to a unit stimulus of any other part of the system.
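The column-by-column construction of the inverse can be sketched as follows: [A] is decomposed once (Crout form, no pivoting), then one pair of substitution sweeps is done per column of the identity matrix. The helper names and the 2 × 2 test matrix are assumptions of this sketch.

```python
def crout(a):
    """Crout factors of a (U with unit diagonal); no pivoting."""
    n = len(a)
    L = [[0.0] * n for _ in range(n)]
    U = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]
    for j in range(n):
        for i in range(j, n):
            L[i][j] = a[i][j] - sum(L[i][k] * U[k][j] for k in range(j))
        for m in range(j + 1, n):
            U[j][m] = (a[j][m] - sum(L[j][k] * U[k][m] for k in range(j))) / L[j][j]
    return L, U


def lu_solve(L, U, b):
    """Forward then back substitution for [L][U]{x} = {b}."""
    n = len(b)
    z = [0.0] * n
    for i in range(n):
        z[i] = (b[i] - sum(L[i][k] * z[k] for k in range(i))) / L[i][i]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        x[i] = z[i] - sum(U[i][k] * x[k] for k in range(i + 1, n))
    return x


def inverse(a):
    """Build the inverse column by column from unit right-hand sides."""
    n = len(a)
    L, U = crout(a)  # decomposition done once
    cols = [lu_solve(L, U, [1.0 if i == c else 0.0 for i in range(n)])
            for c in range(n)]
    # cols[c] is column c of the inverse; transpose into row-major form
    return [[cols[c][r] for c in range(n)] for r in range(n)]


# Illustrative matrix (an assumption of this sketch)
A_inv = inverse([[4.0, 7.0],
                 [2.0, 6.0]])
```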

III.5. Matrix condition number

III.5.1. Vector and matrix norms

A norm is a real-valued function that provides a measure of the size or length of multicomponent mathematical entities:


For a vector $\{x\} = \{x_1, x_2, \dots, x_n\}^T$, the Euclidean norm is defined as:

$$
\|x\|_2 = \sqrt{x_1^2 + x_2^2 + \dots + x_n^2}
$$

In general, the $L_p$-norm of a vector {x} is defined as:

$$
L_p = \left( \sum_{i=1}^{n} |x_i|^p \right)^{1/p}
$$

Note that the $L_\infty$ norm tends to the magnitude of the largest component of {x}:

$$
L_\infty = \max_{1 \le i \le n} |x_i|
$$

For a matrix, the first and infinity norms are defined as:

$$
\|A\|_1 = \max_{1 \le j \le n} \sum_{i=1}^{n} |a_{ij}| = \text{maximum column sum}
$$

$$
\|A\|_\infty = \max_{1 \le i \le n} \sum_{j=1}^{n} |a_{ij}| = \text{maximum row sum}
$$
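As a quick check of these definitions, the norms can be computed directly. The function names and the sample vector and matrix below are assumptions of this sketch.

```python
def vector_norm(x, p):
    """L_p norm of a vector; p = float('inf') gives the largest magnitude."""
    if p == float("inf"):
        return max(abs(v) for v in x)
    return sum(abs(v) ** p for v in x) ** (1.0 / p)


def matrix_norm_1(a):
    """Maximum absolute column sum."""
    return max(sum(abs(row[j]) for row in a) for j in range(len(a[0])))


def matrix_norm_inf(a):
    """Maximum absolute row sum."""
    return max(sum(abs(v) for v in row) for row in a)


# Illustrative data (assumptions of this sketch)
x = [3.0, -4.0]
A = [[1.0, -2.0],
     [3.0, 4.0]]

euclidean = vector_norm(x, 2)             # sqrt(9 + 16)
largest = vector_norm(x, float("inf"))    # largest magnitude
col_norm = matrix_norm_1(A)               # columns sum to 4 and 6
row_norm = matrix_norm_inf(A)             # rows sum to 3 and 7
```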

III.5.2. Matrix condition number

The matrix condition number is defined as:

$$
\mathrm{Cond}[A] = \|A\| \cdot \|A^{-1}\|
$$

For a matrix [A], we have that $\mathrm{Cond}[A] \ge 1$ and

$$
\frac{\|\Delta x\|}{\|x\|} \le \mathrm{Cond}[A] \, \frac{\|\Delta A\|}{\|A\|}
$$

Therefore, the relative error on the solution can be as large as the relative error on the norm of [A] multiplied by the condition number.

If the precision on [A] is t digits ($10^{-t}$) and $\mathrm{Cond}[A] = 10^{c}$, the solution {x} may be valid to only t − c digits ($10^{c-t}$).
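To make the loss of digits concrete, here is a small sketch that evaluates the condition number in the infinity norm for a nearly singular 2 × 2 matrix. The matrix, the helper names and the use of the closed-form 2 × 2 inverse are assumptions of this illustration.

```python
import math


def norm_inf(a):
    """Maximum absolute row sum."""
    return max(sum(abs(v) for v in row) for row in a)


def inverse_2x2(a):
    """Closed-form inverse of a 2 x 2 matrix (fails if the determinant is zero)."""
    (p, q), (r, s) = a
    det = p * s - q * r
    return [[s / det, -q / det],
            [-r / det, p / det]]


# Two almost parallel rows: an ill-conditioned system (illustrative values)
A = [[1.0, 2.0],
     [1.0, 2.001]]

cond = norm_inf(A) * norm_inf(inverse_2x2(A))
digits_lost = math.log10(cond)  # the exponent c in Cond[A] = 10^c
```

Here the condition number is of the order of $10^4$, so roughly four significant digits of the coefficients can be lost in the solution.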

III.6. Jacobi iteration Method

The Jacobi method is an iterative method to solve systems of linear algebraic equations.

Consider the following system:

$$
\begin{aligned}
a_{11}x_1 + a_{12}x_2 + a_{13}x_3 + \dots + a_{1n}x_n &= b_1 \\
&\ \ \vdots \\
a_{n1}x_1 + a_{n2}x_2 + a_{n3}x_3 + \dots + a_{nn}x_n &= b_n
\end{aligned}
$$

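This system can be written under the following form:

$$
\begin{aligned}
x_1 &= \frac{1}{a_{11}} \left( b_1 - a_{12}x_2 - a_{13}x_3 - \dots - a_{1n}x_n \right) \\
&\ \ \vdots \\
x_n &= \frac{1}{a_{nn}} \left( b_n - a_{n1}x_1 - a_{n2}x_2 - \dots - a_{n,n-1}x_{n-1} \right)
\end{aligned}
$$

The general formulation is:

$$
x_i = \frac{1}{a_{ii}} \left( b_i - \sum_{j=1,\, j \ne i}^{n} a_{ij}x_j \right); \quad i = 1, 2, \dots, n
$$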

Here we start with an initial guess for $x_1, x_2, \dots, x_n$, and we compute the new values for the next iteration. If no good initial guess is available, we can assume each component to be zero.

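We generate the solution at the next iteration using the following expression:

$$
x_i^{(k+1)} = \frac{1}{a_{ii}} \left( b_i - \sum_{j=1,\, j \ne i}^{n} a_{ij}x_j^{(k)} \right); \quad i = 1, 2, \dots, n; \quad k = 1, 2, \dots \text{ until convergence}
$$

The calculation must be stopped if:

$$
\left| \frac{x_i^{(k+1)} - x_i^{(k)}}{x_i^{(k)}} \right| \le \varepsilon
$$

where $\varepsilon$ is the desired precision.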

It is possible to show that a sufficient condition for the convergence of the Jacobi method is:

$$
|a_{ii}| > \sum_{j=1,\, j \ne i}^{n} |a_{ij}|
$$
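A minimal sketch of the Jacobi iteration is given below. The test system is a diagonally dominant example chosen for this illustration, and the stopping test is written in multiplied form, relative to the new iterate, so that an all-zero initial guess does not cause a division by zero; both choices are assumptions of this sketch.

```python
def jacobi(a, b, eps=1e-10, max_iter=500):
    """Jacobi iteration starting from the zero vector."""
    n = len(b)
    x = [0.0] * n
    for _ in range(max_iter):
        # Every new component uses only values from the previous iteration,
        # so the old vector x and the new vector x_new are both kept.
        x_new = [(b[i] - sum(a[i][j] * x[j] for j in range(n) if j != i)) / a[i][i]
                 for i in range(n)]
        if all(abs(x_new[i] - x[i]) <= eps * abs(x_new[i]) for i in range(n)):
            return x_new
        x = x_new
    raise RuntimeError("Jacobi iteration did not converge")


# Illustrative diagonally dominant system with solution (1, 2, 3)
A = [[4.0, -1.0, 1.0],
     [-1.0, 5.0, 2.0],
     [1.0, 2.0, 6.0]]
b = [5.0, 15.0, 23.0]
x = jacobi(A, b)
```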

III.7. Gauss-Seidel iteration Method

It can be seen that, in the Jacobi iteration method, all the new values are computed using the values at the previous iteration. This implies that both the present and the previous set of values have to be stored. The Gauss-Seidel method reduces the storage requirement and improves the convergence.

In the Gauss-Seidel method, the values $x_1^{(k+1)}, x_2^{(k+1)}, \dots, x_i^{(k+1)}$ computed in the current iteration, as well as $x_{i+2}^{(k)}, x_{i+3}^{(k)}, \dots, x_n^{(k)}$, are used in finding the value $x_{i+1}^{(k+1)}$. This implies that the most recent approximations are always used during the computation. The general expression is:

$$
x_i^{(k+1)} = \frac{1}{a_{ii}} \left( b_i
- \underbrace{\sum_{j=1}^{i-1} a_{ij}x_j^{(k+1)}}_{\text{NEW}}
- \underbrace{\sum_{j=i+1}^{n} a_{ij}x_j^{(k)}}_{\text{OLD}} \right); \quad i = 1, 2, \dots, n; \quad k = 1, 2, 3, \dots
$$

Note

- The Gauss-Seidel method will converge to the correct solution irrespective of the initial estimate if the system of equations is diagonally dominant. In many cases, however, the solution will converge even if the system is only weakly diagonally dominant.
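The difference with the Jacobi method is easiest to see in code: a single vector is updated in place, so each component immediately benefits from the components already refreshed in the same sweep. The test system, the function name and the absolute-change stopping test are assumptions of this sketch.

```python
def gauss_seidel(a, b, eps=1e-10, max_iter=500):
    """Gauss-Seidel iteration from the zero vector; returns (x, sweeps used)."""
    n = len(b)
    x = [0.0] * n  # one vector only: it holds NEW values for j < i, OLD for j > i
    for sweep in range(1, max_iter + 1):
        largest_change = 0.0
        for i in range(n):
            s = sum(a[i][j] * x[j] for j in range(n) if j != i)
            new_value = (b[i] - s) / a[i][i]
            largest_change = max(largest_change, abs(new_value - x[i]))
            x[i] = new_value  # overwrite at once, so later rows use it
        if largest_change <= eps:
            return x, sweep
    raise RuntimeError("Gauss-Seidel iteration did not converge")


# Illustrative diagonally dominant system with solution (1, 2, 3)
A = [[4.0, -1.0, 1.0],
     [-1.0, 5.0, 2.0],
     [1.0, 2.0, 6.0]]
b = [5.0, 15.0, 23.0]
x, sweeps = gauss_seidel(A, b)
```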

Example

Find the solution of the following equations using the Gauss-Seidel iteration method:

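[The system of three equations in $x_1$, $x_2$, $x_3$ is not recoverable from this copy: the operators between terms and the row order were lost. The surviving numbers are the coefficients 2, 2, 5 on $x_1$; 1, 1, 6 on $x_2$; 7, 3, 2 on $x_3$; and the right-hand sides 1, 2, 32.]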

III.7.1. Improvement of convergence using relaxation

Relaxation is used to enhance convergence. The new value is written under the form:

$$
x_i^{NEW} = \lambda \, x_i^{NEW} + (1 - \lambda) \, x_i^{OLD}
$$

where $\lambda$ is a weighting factor and, usually, $0 < \lambda < 2$.

If $0 < \lambda < 1$, we are using under-relaxation, used to make a non-convergent system converge or to damp the oscillations.

If $1 < \lambda < 2$, we are using over-relaxation, used to accelerate the convergence of an already convergent system.

The choice of $\lambda$ depends on the problem to be solved.
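Relaxation adds a single line to a Gauss-Seidel sweep: the freshly computed value is blended with the previous one. In the sketch below, the parameter name `lam`, the test system and the absolute-change stopping test are assumptions of this illustration; `lam = 1` gives back the plain Gauss-Seidel method.

```python
def gauss_seidel_relaxed(a, b, lam=1.0, eps=1e-10, max_iter=500):
    """Gauss-Seidel with relaxation factor lam (0 < lam < 2)."""
    n = len(b)
    x = [0.0] * n
    for sweep in range(1, max_iter + 1):
        largest_change = 0.0
        for i in range(n):
            s = sum(a[i][j] * x[j] for j in range(n) if j != i)
            gs_value = (b[i] - s) / a[i][i]                 # plain Gauss-Seidel value
            new_value = lam * gs_value + (1.0 - lam) * x[i]  # weighted average
            largest_change = max(largest_change, abs(new_value - x[i]))
            x[i] = new_value
        if largest_change <= eps:
            return x, sweep
    raise RuntimeError("relaxed Gauss-Seidel iteration did not converge")


# Illustrative symmetric, diagonally dominant system with solution (1, 2, 3)
A = [[4.0, -1.0, 1.0],
     [-1.0, 5.0, 2.0],
     [1.0, 2.0, 6.0]]
b = [5.0, 15.0, 23.0]

x_under, _ = gauss_seidel_relaxed(A, b, lam=0.8)  # under-relaxation
x_over, _ = gauss_seidel_relaxed(A, b, lam=1.2)   # over-relaxation
```

Running the function for a few values of `lam` and comparing the returned sweep counts is a simple way to explore which factor suits a given problem.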

III.8. Choice of the method

1- If the equations are to be solved for different right-hand-side vectors, a direct method, like LU decomposition, is preferred.

2- The Gauss-Seidel method will give an accurate solution even when the number of equations is several thousand (if the system is diagonally dominant). It is usually twice as fast as the Jacobi method.
