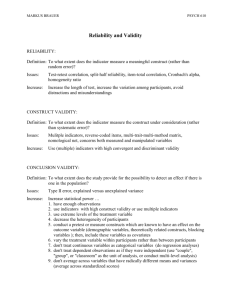

Validity - pantherFILE

advertisement

Introduction to Validity

Validity is related to how well a scale fulfills the function for which it is being used, or how “correct” the inferences made on the basis of performance on the scale are. It has been described as “scientific inquiry into test (scale) score meaning” (Messick, 1989). A scale is valid if it measures what it is supposed to be measuring. Strictly, it is the inferences and conclusions reached on the basis of scores on the measure that are being validated, NOT the scale itself.

Construct Validity

All other types of validity can be considered under the umbrella of construct validity (Messick, 1989). This type of validity is directly concerned with the theoretical relationships of scores from a measure or scale to other variables. It is used when no criterion or universe of content is accepted as entirely adequate (is this ever not the case?). It is the extent to which a scale or measure "behaves" the way that it should with respect to established measures of other constructs, and can be considered the degree to which the measure assesses the theoretical construct or trait that it was designed to measure.

This type of validation requires multiple types of evidence, including both logical and empirical evidence.

Logical evidence can be obtained by examining the items for face validity and for clear, precise language. If either of these is lacking, the construct validity of the measure is reduced. Note that the author of your text discounts face validity as a form of validity.

Empirical evidence can be obtained by making and testing predictions about how scores on the measure should behave in various situations. These predictions could be based on differences in demographic information, on performance criteria, or on measures of other constructs whose relationship to the construct of interest has already been validated.

Procedures for Assessing Construct Validity

1. Correlational studies between the test and other related measures.
2. Differentiation between groups, such as finding the difference between the average scores of schizophrenic and non-schizophrenic persons on a measure designed to evaluate mental health.
3. Factor analysis.
4. Multitrait-multimethod matrix. Measure the construct in two (or more) ways (e.g., observation, self-report, spouse report), then identify other constructs, different from but related to it, that can be measured in the same ways. Reliability correlations (using coefficient alpha) should be high and represent item homogeneity within a construct. Convergent validity correlations, obtained between measures of the same construct measured using different formats, should be high. Discriminant validity correlations, obtained between measures of different constructs measured using either the same (heterotrait-monomethod) or different (heterotrait-heteromethod) formats, should be low.

Content Validity

Content validity is useful when a measure is used to draw inferences about how a person would perform on a larger universe of items similar to those on the measure itself. It is easiest to evaluate when the domain is well defined (e.g., two-digit addition) and more difficult when measuring constructs dealing with beliefs, attitudes, or dispositions. In theory, a scale has content validity when its items are randomly chosen from the universe of appropriate items. The steps we have taken to describe the subscales of our scale have helped to ensure content validity.

There are three steps to assessing the content validity of a measure:

1. Describe the content domain of the instrument in detail. For achievement tests, these are usually the instructional objectives.
2. Determine what area within the content domain is measured by each item.
3. Compare the structure of the measure to that of the content domain and evaluate whether the items adequately represent the domain.

This type of validity is based on individual subjective judgement rather than on empirical evidence. Quite often “experts” are used.

Practical Considerations

1. Should objectives be weighted equally or given different weights based on importance?
2. How should the task be structured when experts are asked to evaluate whether an item is representative of the performance domain or instructional objectives? Five-point scale or dichotomous scale?
3. What aspects of the item should be examined? Subject matter? Cognitive process? Level of complexity? Item format? Response format?
4. How should results be summarized? Percentage of items matched to objectives? Percentage of items matched to objectives with a high importance rating? Percentage of objectives NOT assessed by any items on the test?

Issues

1. Even if all items fit the domain, the domain may not adequately represent the construct.
2. Should ethnic, racial, or gender differences be considered? Consider “story” problems in mathematics.
3. Should item and/or test performance data be considered?

Criterion-Related Validity

A criterion is a measure that could be used to determine the accuracy of a decision. This type of validity is useful when scores from a scale are used to make decisions about how a respondent would perform on some external behavior, or criterion measure, of practical importance. It is more a practical issue than a scientific one, because it is concerned only with predicting a process, as opposed to understanding it. Criterion-related validity does not imply causal relationships, even when time is an element.

Predictive validity relates to how well scores predict future performance on the criterion measure. There are two steps in this type of study:

1. Obtain scores from a group of respondents, but do not use the scores in any way for decision making.
2. At some later date, obtain performance measures for the same group of people and correlate these measures with the scores to obtain a predictive validity coefficient.

This approach is somewhat impractical, since it requires that the sample used in the study be similar to the population. Therefore, selection decisions must be made on a random basis, which could have negative consequences for both the individual and the decision maker.

Concurrent validity relates to the current relationship between test scores and the criterion measure. Concurrent studies typically use only people who have already been selected, which can bias the results, because the sample used in the study may be quite different from the population. However, these studies are easier and more practical, and research has shown that their validity coefficients are similar to those found in predictive validity studies, although they tend to seriously underestimate the population validity because of restriction of range.

Practical Problems

1. Criterion measures that are readily available and easily measured are often not sufficiently complete or important.
2. Criterion measures that are substantial and important are often difficult to define and measure, and are typically better suited to observational assessment.
3. Large samples (200 or more) are needed for validity coefficients to reflect the population value accurately at least 90% of the time.
4. If those who influence scores on the criterion measure are aware of scores on the predictor measure, the results may be biased or contaminated.
5. Restriction of range, due to selection or to ceiling/floor effects, affects correlation coefficients; however, this can be corrected for statistically.
6. Correlation coefficients do not reveal how many cases have been correctly classified.
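Practical problems 5 and 6, and the predictive validity coefficient from step 2, can be made concrete with a short Python sketch (standard library only). It computes the validity coefficient as a Pearson correlation, applies one common correction for restriction of range (Thorndike's Case II formula, which assumes selection was made directly on the predictor and that the predictor's standard deviation in the unrestricted applicant group is known), and tallies a selection decision table. The function names, cutoffs, and toy data are hypothetical and only for illustration.

```python
import math
from typing import Dict, Sequence


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation between predictor scores x and criterion scores y.

    In a predictive study, x is collected first and y later; r is then the
    predictive validity coefficient.
    """
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("x and y must be paired and contain at least 2 cases")
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def correct_range_restriction(r: float, sd_restricted: float,
                              sd_unrestricted: float) -> float:
    """Thorndike Case II correction (direct selection on the predictor).

    R = r*k / sqrt(1 - r^2 + r^2 * k^2), where k = SD_unrestricted / SD_restricted.
    """
    k = sd_unrestricted / sd_restricted
    return r * k / math.sqrt(1 - r ** 2 + (r ** 2) * (k ** 2))


def classification_table(predictor: Sequence[float], criterion: Sequence[float],
                         predictor_cut: float, criterion_cut: float) -> Dict[str, float]:
    """Count selection outcomes, which a correlation coefficient alone does not show."""
    counts = {"true_positive": 0, "false_positive": 0,
              "true_negative": 0, "false_negative": 0}
    for p, c in zip(predictor, criterion):
        selected = p >= predictor_cut    # would be accepted on the predictor
        successful = c >= criterion_cut  # actually succeeded on the criterion
        if selected and successful:
            counts["true_positive"] += 1
        elif selected:
            counts["false_positive"] += 1
        elif successful:
            counts["false_negative"] += 1
        else:
            counts["true_negative"] += 1
    n = sum(counts.values())
    hit_rate = (counts["true_positive"] + counts["true_negative"]) / n
    return {**counts, "hit_rate": hit_rate}


# Hypothetical toy data: six respondents' predictor and later criterion scores.
TOY_PREDICTOR = [10, 12, 14, 16, 18, 20]
TOY_CRITERION = [2, 4, 3, 6, 5, 7]

if __name__ == "__main__":
    r = pearson_r(TOY_PREDICTOR, TOY_CRITERION)
    print(f"validity coefficient r = {r:.3f}")  # 31/35, about 0.886
    # A restricted r of 0.30 when the applicant SD is twice the selected-group SD.
    print(f"corrected r = {correct_range_restriction(0.30, 5.0, 10.0):.3f}")  # 0.532
    print(classification_table(TOY_PREDICTOR, TOY_CRITERION,
                               predictor_cut=15, criterion_cut=4))
```

In the toy data the correlation is high (about 0.89), yet the decision table shows that one of the six respondents who would have succeeded is rejected by the cutoff; that count is exactly what problem 6 says the coefficient does not reveal.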
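Example: Multitrait-Multimethod Matrix

Traits: Honesty (H), Aggressiveness (A), Intelligence (I). Methods: Teacher Ratings, Tests, Observers' Ratings. Reliability coefficients are shown in parentheses on the diagonal; convergent validity coefficients are shown in square brackets.

| | Teacher H | Teacher A | Teacher I | Tests H | Tests A | Tests I | Observer H | Observer A | Observer I |
|---|---|---|---|---|---|---|---|---|---|
| Teacher H | (0.89) | | | | | | | | |
| Teacher A | 0.51 | (0.89) | | | | | | | |
| Teacher I | 0.38 | 0.37 | (0.76) | | | | | | |
| Tests H | [0.57] | 0.22 | 0.09 | (0.93) | | | | | |
| Tests A | 0.22 | [0.57] | 0.10 | 0.68 | (0.94) | | | | |
| Tests I | 0.11 | 0.11 | [0.46] | 0.59 | 0.58 | (0.84) | | | |
| Observer H | [0.56] | 0.22 | 0.11 | [0.67] | 0.42 | 0.33 | (0.94) | | |
| Observer A | 0.23 | [0.58] | 0.12 | 0.43 | [0.66] | 0.34 | 0.67 | (0.92) | |
| Observer I | 0.11 | 0.11 | [0.45] | 0.34 | 0.32 | [0.58] | 0.58 | 0.60 | (0.85) |

Reliability correlations, on the diagonal, should be high and represent item homogeneity within a construct.

Convergent validity correlations (in square brackets) are the correlations between measures of the same construct measured using different formats. They should be high.

Discriminant validity correlations are the remaining off-diagonal entries: the correlations between measures of different constructs measured using either the same (heterotrait-monomethod) or different (heterotrait-heteromethod) formats. These should be low.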
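As a sketch of how the example matrix can be checked, the Python code below stores its lower triangle and sorts every cell into the four kinds of coefficient the notes describe, then compares the convergent coefficients with the discriminant ones. The row and column order (Teacher, Tests, Observer; H, A, I within each) is an assumed reading of the flattened example, and the helper name `classify_cells` is hypothetical.

```python
# Assumed ordering of the example matrix: methods outer, traits inner.
METHODS = ["Teacher", "Tests", "Observer"]
TRAITS = ["H", "A", "I"]  # Honesty, Aggressiveness, Intelligence
LABELS = [(m, t) for m in METHODS for t in TRAITS]

# Lower triangle of the 9 x 9 correlation matrix, one list per row.
LOWER = [
    [0.89],
    [0.51, 0.89],
    [0.38, 0.37, 0.76],
    [0.57, 0.22, 0.09, 0.93],
    [0.22, 0.57, 0.10, 0.68, 0.94],
    [0.11, 0.11, 0.46, 0.59, 0.58, 0.84],
    [0.56, 0.22, 0.11, 0.67, 0.42, 0.33, 0.94],
    [0.23, 0.58, 0.12, 0.43, 0.66, 0.34, 0.67, 0.92],
    [0.11, 0.11, 0.45, 0.34, 0.32, 0.58, 0.58, 0.60, 0.85],
]


def classify_cells(lower):
    """Return a dict mapping each kind of coefficient to the list of its values."""
    groups = {
        "reliability": [],               # same trait, same method (diagonal)
        "convergent": [],                # same trait, different method
        "heterotrait_monomethod": [],    # different trait, same method
        "heterotrait_heteromethod": [],  # different trait, different method
    }
    for i, row in enumerate(lower):
        for j, r in enumerate(row):
            (method_i, trait_i), (method_j, trait_j) = LABELS[i], LABELS[j]
            if i == j:
                groups["reliability"].append(r)
            elif trait_i == trait_j:  # i != j, so the methods must differ
                groups["convergent"].append(r)
            elif method_i == method_j:
                groups["heterotrait_monomethod"].append(r)
            else:
                groups["heterotrait_heteromethod"].append(r)
    return groups


if __name__ == "__main__":
    groups = classify_cells(LOWER)
    for kind, values in groups.items():
        print(f"{kind:<25} n={len(values):>2}  min={min(values):.2f}  max={max(values):.2f}")
    # Convergent coefficients should be high relative to the discriminant ones.
    print("min convergent > max heterotrait-heteromethod:",
          min(groups["convergent"]) > max(groups["heterotrait_heteromethod"]))
    print("min convergent > max heterotrait-monomethod:",
          min(groups["convergent"]) > max(groups["heterotrait_monomethod"]))
```

Read this way, the smallest convergent coefficient (0.45) only just exceeds the largest heterotrait-heteromethod coefficient (0.43), while several heterotrait-monomethod correlations (up to 0.68, Honesty with Aggressiveness on the Tests) exceed some convergent ones, a pattern commonly interpreted as shared method variance.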