Normal Curve Tests of Means and Proportions

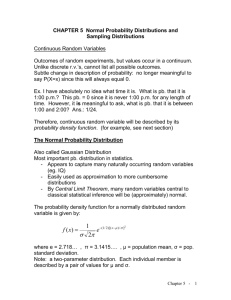

Normal curve means tests, commonly called simply "hypothesis tests," are a basic method of exploring possible differences between two samples, or of testing the null hypothesis that an observed sample mean does not differ significantly from zero or from some other hypothesized value. The normal curve test is a parametric test that assumes a normal distribution; when its assumptions are met, it is more powerful than the corresponding two-sample nonparametric tests. The normal curve z-test is used when samples are larger (e.g., n > 29); with smaller samples the t-test is used. The two tests use the same formulas and differ only in the reference distribution (normal versus t), so for large samples they give nearly identical results.

Key Concepts and Terms

Deviation scores are the observed scores minus the mean, for any given variable. Deviation scores always sum, and therefore average, to zero, because the mean is the balance point of the data: the positive deviations above it exactly cancel the negative deviations below it.

Standard deviation. For any given variable, if we remove the signs of the deviations by squaring them (so they do not cancel to zero), add them up and divide by the sample size n, and then undo the squaring by taking the square root, we have the standard deviation:

$$\mathrm{s.d.} = \sqrt{\frac{\sum (x - \bar{x})^2}{n}}$$

Sample standard deviation is a conservative adjustment statisticians make when working with sample data. It is the formula above with $(n - 1)$ in the denominator rather than the sample size $n$; this yields a slightly larger value and corrects the tendency of the $n$-denominator formula to underestimate the population variance.

Variance is the square of the standard deviation.

Standard error. If we took several samples of the same population, we could compute several means, one for each sample. The standard deviation of these sample means, as an estimate of their variation around the true but unknown population mean, is called the standard error. Standard error measures the variability of sample means.
However, since we normally have only one sample but still wish to assess its variability, we can compute the estimated standard error with this formula:

$$SE = \frac{\mathrm{s.d.}}{\sqrt{n - 1}}$$

where s.d. is the standard deviation of the variable (computed with $n$ in the denominator, as above) and $n$ is the sample size. This is the same as the sample standard deviation (with $n - 1$ in the denominator) divided by $\sqrt{n}$. Often the estimated standard error is simply called "the standard error."

Confidence limits set upper and lower bounds on an estimate for a given confidence level (e.g., 95%, corresponding to the .05 significance level). The confidence interval is the range between these bounds. For instance, for normally distributed data, the 95% confidence limits for an estimated mean are the sample mean plus or minus 1.96 times the standard error, as discussed below. Some researchers recommend reporting confidence limits wherever point estimates (e.g., means) and their significance are reported, because confidence limits show how precise, and therefore how meaningful, the estimates are. A significant result means something quite different when the confidence interval spans the entire range of the data than when it covers only ten percent of that range.

Binomial distribution. The binomial distribution is the probability distribution of the number of occurrences of an outcome in a fixed number of independent trials, each with the same probability of that outcome. The figure below, for instance, reflects the distribution of two things (R = Republicans, D = Democrats), each with a .5 probability of selection, taken four at a time with replacement (recurrences allowed). The binomial distribution follows the expansion of $(p + q)^n$, where $p$ is the probability of one thing (Republicans, in this example, with $p = .5$), $q = 1 - p$ is the probability of its non-occurrence, and $n$ is the number of trials (4 in this example). Thus

$$(p + q)^4 = \left(\tfrac{1}{2} + \tfrac{1}{2}\right)^4 = 1p^4 + 4p^3q + 6p^2q^2 + 4pq^3 + 1q^4 = \tfrac{1}{16} + \tfrac{4}{16} + \tfrac{6}{16} + \tfrac{4}{16} + \tfrac{1}{16}$$

As can be seen, this binomial expansion corresponds to the distribution shown in the figure above.

A normal distribution is similar to a binomial distribution, but for continuous interval data and large sample size; as the number of trials grows, the binomial distribution approaches the normal curve.
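The definitions above can be checked numerically. The following is a minimal Python sketch (standard library only; `math.comb` needs Python 3.8+) using a small made-up data set, not data from the text; the function names are illustrative. It confirms that deviation scores sum to zero, contrasts the $n$ and $(n - 1)$ denominators, checks that s.d./$\sqrt{n - 1}$ equals the $(n - 1)$-based standard deviation divided by $\sqrt{n}$, and reproduces the $(p + q)^4$ expansion.

```python
import math
from fractions import Fraction

def deviation_scores(values):
    """Observed scores minus their mean; these always sum to zero."""
    mean = sum(values) / len(values)
    return [x - mean for x in values]

def sd_n(values):
    """Standard deviation with n in the denominator."""
    devs = deviation_scores(values)
    return math.sqrt(sum(d * d for d in devs) / len(values))

def sd_sample(values):
    """Sample standard deviation with (n - 1) in the denominator."""
    devs = deviation_scores(values)
    return math.sqrt(sum(d * d for d in devs) / (len(values) - 1))

def estimated_se(values):
    """Estimated standard error: s.d. (n denominator) / SQRT(n - 1)."""
    return sd_n(values) / math.sqrt(len(values) - 1)

def binomial_terms(n, p):
    """Terms of (p + q)^n with q = 1 - p: C(n, k) * p^(n - k) * q^k for k = 0..n."""
    q = 1 - p
    return [math.comb(n, k) * p ** (n - k) * q ** k for k in range(n + 1)]

data = [2, 4, 4, 4, 5, 5, 7, 9]  # made-up values for illustration
print(sum(deviation_scores(data)))   # 0.0
print(sd_n(data), sd_n(data) ** 2)   # 2.0 4.0 (s.d. and variance)
print(sd_sample(data))               # larger, since it divides by n - 1
# The two standard-error forms agree (up to floating-point rounding)
print(estimated_se(data), sd_sample(data) / math.sqrt(len(data)))

# Four draws with p = 1/2; Fraction prints 4/16 and 6/16 in reduced form
print(binomial_terms(4, Fraction(1, 2)))
```

Exact `Fraction` arithmetic is used for the binomial terms so that the sixteenths in the text come out exactly rather than as rounded floats.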
A normal distribution is assumed by many statistical procedures. Normal distributions take the form of a symmetric, bell-shaped curve. The standard normal distribution is one with a mean of 0 and a standard deviation of 1. Standard scores, also called z-scores or standardized data, are scores from which the mean has been subtracted and which have then been divided by the standard deviation, yielding scores with a mean of 0 and a standard deviation of 1. Normality can be assessed visually with a histogram of frequencies or with the normal probability plot produced by most statistical software. Tests of normality are discussed further in the section on testing assumptions.

The area under the normal curve represents probability: 68.27% of cases lie within 1 standard deviation of the mean, 95.45% within 2 standard deviations, and 99.73% within 3 standard deviations. This is often rounded to say that 1 s.d. covers about 2/3 of the cases, 2 s.d. 95%, and 3 s.d. 99%. Put another way, there is less than a .05 chance that a sampled case lies more than 2 standard deviations from the mean, and less than a .01 chance that it lies more than 3 standard deviations away. These statements are analogous to statements about the .05 and .01 significance levels (whose exact two-tailed cutoffs are 1.96 and 2.58 standard deviations).

Thus, if the mean in our sample is 20 and the standard deviation is 12, and the data are normally distributed and randomly sampled, we would estimate that 95% of the cases lie within 20 plus or minus 1.96 * 12 = 23.52, that is, in the range -3.52 to 43.52. By the same token, the chance of a given case being 43.52 or higher, or -3.52 or lower, is .05. This calculation corresponds to a two-tailed test. The chance of a given case being 43.52 or higher is .025, which corresponds to a one-tailed test.
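The areas and the worked example above can be reproduced with Python's standard-library `statistics.NormalDist` (Python 3.8+). This is a sketch: the helper names and the small data set used for the z-score check are made up for illustration.

```python
from statistics import NormalDist, mean, pstdev

STD_NORMAL = NormalDist(mu=0, sigma=1)

def area_within(k):
    """Probability that a normal case lies within k standard deviations of the mean."""
    return STD_NORMAL.cdf(k) - STD_NORMAL.cdf(-k)

def z_scores(values):
    """Standard scores: subtract the mean, divide by the (n-denominator) s.d."""
    m, s = mean(values), pstdev(values)
    return [(x - m) / s for x in values]

for k in (1, 2, 3):
    print(k, round(100 * area_within(k), 2))  # 68.27, 95.45, 99.73

# Worked example from the text: mean 20, standard deviation 12
m, s = 20, 12
lower, upper = m - 1.96 * s, m + 1.96 * s
print(round(lower, 2), round(upper, 2))       # -3.52 43.52

upper_tail = 1 - STD_NORMAL.cdf(1.96)
print(round(upper_tail, 3), round(2 * upper_tail, 3))  # 0.025 one-tailed, 0.05 two-tailed

zs = z_scores([2, 4, 4, 4, 5, 5, 7, 9])  # made-up values
print(mean(zs), pstdev(zs))                # 0.0 1.0
```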
Note that, for the same observed result, the significance level (p-value) of a two-tailed test is numerically twice that of a one-tailed test. Because a numerically lower significance level (closer to 0) means substantively stronger significance (less likelihood that the observation is due merely to the chance of random sampling), the one-tailed test gives a result that is substantively more significant by a factor of two.

Normal curve means tests ("hypothesis tests"). The sampling distribution represented by a normal curve can be used to test hypotheses about means. A hypothesis of this sort might be as follows: someone claims the average age of members of Congress is 55, but you think it is higher. You take a sample of members of Congress and find the mean age is 59. Is 59 significantly different from 55, or could the difference be due merely to the chance of sampling when the real mean age is in fact 55? In normal curve terms: if we hypothesize a normal distribution of ages around a mean of 55 and repeatedly take samples from it, what percentage of the time would we get a sample mean age 4 or more years away from 55? This is similar to the two-tailed test illustrated in the figure above. If the distance from the hypothesized real mean of 55 to the sample mean of 59 (4 years) is 1.96 standard errors or greater, then the proportion of sample means in that tail is .025 or less, and the proportion in both tails is .05 or less. Recall that the standard error is the standard deviation of sample means, which is what this example involves, but the logic is the same as for standard deviations of cases. We want the two-tailed test because the hypothesis concerns being "different from" 55; had it concerned only "more than," we would want the one-tailed test. Dividing 4 by 1.96 gives about 2.04, so if the standard error is 2.04 or less, then 59 is at least 1.96 standard errors away from 55.
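The Congress example can be sketched the same way. The text gives no standard deviation or sample size, so the standard error of 1.8 below is a hypothetical value chosen only to show the calculation; `tail_probabilities` is an illustrative helper, not a library function.

```python
from statistics import NormalDist

STD_NORMAL = NormalDist()

def tail_probabilities(z):
    """One-tailed p (tail beyond |z| on the side of the observed difference)
    and two-tailed p (both tails)."""
    one_tailed = 1 - STD_NORMAL.cdf(abs(z))
    return one_tailed, 2 * one_tailed

hypothesized_mean, sample_mean = 55, 59
critical_z = STD_NORMAL.inv_cdf(0.975)  # two-tailed .05 cutoff, about 1.96
max_se = (sample_mean - hypothesized_mean) / critical_z
print(round(critical_z, 2), round(max_se, 2))  # 1.96 2.04

# HYPOTHETICAL standard error: the text does not give one
se = 1.8
z = (sample_mean - hypothesized_mean) / se
one_tailed, two_tailed = tail_probabilities(z)
print(round(z, 2), round(one_tailed, 4), round(two_tailed, 4))
```

Because any standard error at or below about 2.04 puts 59 at least 1.96 standard errors from 55, the assumed value of 1.8 yields a two-tailed p-value below .05.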
We can then reject the hypothesis that the real mean is 55: the sample mean of 59 is significantly different from the hypothesized mean at the .05 significance level, which is often loosely described as being 95% confident that the difference is not due to sampling chance.

The confidence interval for this example, at the 95% confidence level, is the sample mean, 59, plus or minus 1.96 times the standard error. Finding the hypothesized mean outside these 95% confidence limits corresponds to finding significance at the .05 level. The Statistics, Summarize, Explore menu choice in SPSS displays the 95% confidence interval for the mean.

One-sample formula for z values in means tests. It is conventional to denote the value we look up in a table of areas under the normal curve as "z." In the example above, the critical z was 1.96, but in general z may take any value, according to this formula:

$$z = \frac{\bar{x} - \mu}{\mathrm{s.d.}/\sqrt{n - 1}}$$

where $\bar{x}$ is the sample mean, $\mu$ is the hypothesized population mean, s.d. is the standard deviation of the sample (computed with $n$ in the denominator), used as an estimate of the unknown population standard deviation, and $n$ is the sample size. Note that the denominator is the standard error, discussed above. The researcher computes z with this formula and compares it with the critical value (e.g., 1.96 for significance = .05) in a table of areas under the normal curve. In a two-tailed test, if the absolute value of z is 1.96 or higher, the difference of means is significant at the .05 level.

Normal curve proportions tests. Identical logic may be used to test differences in proportions (percentages) rather than means. Let $P_o$ be an observed sample proportion, such as 55% of students favoring letter grades rather than pass-fail grades; let $P_t$ be the hypothesized true proportion in the population, say 45%; and let $Q_t = 1 - P_t$.
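A minimal sketch of the one-sample z formula and the matching 95% confidence interval, assuming the $n$-denominator standard deviation used above. The s.d. of 10 and sample size of 50 are hypothetical values for the Congress example; the text does not report them, and the function names are illustrative.

```python
import math
from statistics import NormalDist

def one_sample_z(sample_mean, hypothesized_mean, sd, n):
    """z = (sample mean - hypothesized mean) / (sd / SQRT(n - 1)).
    Assumes sd was computed with n in the denominator."""
    se = sd / math.sqrt(n - 1)
    return (sample_mean - hypothesized_mean) / se, se

def confidence_limits(sample_mean, se, level=0.95):
    """Sample mean plus or minus the critical z (about 1.96 at 95%) times the SE."""
    z_crit = NormalDist().inv_cdf(0.5 + level / 2)
    return sample_mean - z_crit * se, sample_mean + z_crit * se

# HYPOTHETICAL inputs: sd = 10 and n = 50 are not given in the text
z, se = one_sample_z(sample_mean=59, hypothesized_mean=55, sd=10, n=50)
lower, upper = confidence_limits(59, se)

significant = abs(z) >= 1.96                  # two-tailed test at the .05 level
outside_interval = not (lower <= 55 <= upper)
print(round(z, 2), round(lower, 2), round(upper, 2))  # 2.8 56.2 61.8
print(significant, outside_interval)                  # True True
```

With these assumed inputs, z = 2.8 exceeds 1.96 and 55 falls outside the interval 56.2 to 61.8, so the two criteria agree, as the text says they should.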
Then the one-sample formula for testing hypotheses about proportions is:

$$z = \frac{P_o - P_t}{\sqrt{P_t Q_t / n}}$$

The sample z value is compared with critical values found in a table of areas under the normal curve, as in means tests.

Independent two-sample tests. Slightly different formulas apply when there are two samples, though the researcher still computes a z value and compares it with critical values in the table of areas under the normal curve, following the same inference logic as in one-sample tests. Two-sample tests apply to situations such as testing the difference in mean age, or in percent Democrat, between a sample from City A and a sample from City B. In the formulas below, the following notation applies:

- $n_1, n_2$: sample sizes for samples 1 and 2
- $\bar{x}_1, \bar{x}_2$: means for samples 1 and 2
- $P_1, P_2$: proportions for samples 1 and 2
- $Q_1, Q_2$: 1 minus the proportions for samples 1 and 2
- $s_1^2, s_2^2$: variances for samples 1 and 2 (computed with $n$ in the denominator)

Independent Samples (Uncorrelated Data)

Means test:

$$z = \frac{\bar{x}_1 - \bar{x}_2}{\sqrt{\dfrac{s_1^2}{n_1 - 1} + \dfrac{s_2^2}{n_2 - 1}}}$$

Proportions test:

$$z = \frac{P_1 - P_2}{\sqrt{\dfrac{P_1 Q_1}{n_1} + \dfrac{P_2 Q_2}{n_2}}}$$

Correlated two-sample tests. Data in two samples are correlated if the responses of person #1 in the first sample are associated with the responses of person #1 in the second sample. This happens in before-after studies of the same people, or in matched-pair studies of similar people. When data in the two samples are correlated, that correlation must be factored into the formulas for two-sample means and proportions tests. Notation is as for independent two-sample tests, with the addition of $r_{12}$, the Pearson correlation of the given variable between samples 1 and 2.

Dependent Samples (Correlated Data)

Means test:

$$z = \frac{\bar{x}_1 - \bar{x}_2}{\sqrt{\dfrac{s_1^2}{n_1 - 1} + \dfrac{s_2^2}{n_2 - 1} - 2 r_{12} \dfrac{s_1}{\sqrt{n_1 - 1}} \dfrac{s_2}{\sqrt{n_2 - 1}}}}$$

Proportions test:

$$z = \frac{P_1 - P_2}{\sqrt{\dfrac{P_1 Q_1}{n_1} + \dfrac{P_2 Q_2}{n_2} - 2 r_{12} \sqrt{\dfrac{P_1 Q_1}{n_1}} \sqrt{\dfrac{P_2 Q_2}{n_2}}}}$$

Assumptions

Normal distribution.
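The one-sample proportions formula and the four two-sample formulas translate directly into code. This is a sketch with illustrative function names; the sample size of 100 for the letter-grade example and the before-after summary statistics are hypothetical. Variances and standard deviations are assumed to use $n$ in the denominator, matching the $(n - 1)$ terms in the means formulas.

```python
import math

def one_sample_proportion_z(p_obs, p_true, n):
    """z = (Po - Pt) / SQRT(Pt * Qt / n), with Qt = 1 - Pt."""
    return (p_obs - p_true) / math.sqrt(p_true * (1 - p_true) / n)

def independent_means_z(mean1, mean2, var1, var2, n1, n2):
    """Independent samples; variances use n in the denominator."""
    return (mean1 - mean2) / math.sqrt(var1 / (n1 - 1) + var2 / (n2 - 1))

def independent_proportions_z(p1, p2, n1, n2):
    """Independent samples; Q = 1 - P for each sample."""
    return (p1 - p2) / math.sqrt(p1 * (1 - p1) / n1 + p2 * (1 - p2) / n2)

def dependent_means_z(mean1, mean2, sd1, sd2, n1, n2, r12):
    """Correlated samples: subtract 2 * r12 * SE1 * SE2 under the square root."""
    se1 = sd1 / math.sqrt(n1 - 1)
    se2 = sd2 / math.sqrt(n2 - 1)
    return (mean1 - mean2) / math.sqrt(se1 ** 2 + se2 ** 2 - 2 * r12 * se1 * se2)

def dependent_proportions_z(p1, p2, n1, n2, r12):
    """Correlated samples, proportions version of the same adjustment."""
    se1 = math.sqrt(p1 * (1 - p1) / n1)
    se2 = math.sqrt(p2 * (1 - p2) / n2)
    return (p1 - p2) / math.sqrt(se1 ** 2 + se2 ** 2 - 2 * r12 * se1 * se2)

# Letter-grade example: Po = .55, Pt = .45, with a HYPOTHETICAL n = 100
print(round(one_sample_proportion_z(0.55, 0.45, 100), 2))        # 2.01

# HYPOTHETICAL before-after summaries: s.d. 10 (variance 100), n = 51 each
print(round(independent_means_z(45, 42, 100, 100, 51, 51), 2))   # 1.5
print(round(dependent_means_z(45, 42, 10, 10, 51, 51, 0.5), 2))  # 2.12
```

Setting r12 = 0 reduces each dependent-samples formula to its independent counterpart; a positive correlation shrinks the denominator and so raises z for the same difference.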
The normal curve means and proportions tests assume the variable of interest is normally distributed in the population. The z tests are parametric tests because they assume the data follow a distribution of known form (the normal curve), described by parameters such as the mean and standard deviation. Nonparametric statistics do not require assumptions about the form of the distribution of the data.

Interval data are assumed, since the calculations involved, starting with the calculation of deviations (observed scores minus mean scores), assume equal intervals.

Sample size should not be small. Normal curve z tests assume that the sample size n is large enough for the sampling distribution to approximate a normal curve. There is no accepted cutoff, but typically if n < 30, t-tests should be used in place of z tests. The t statistic is computed with the same formulas as z, but a table of the t distribution is consulted instead of the normal table. In such a table, the degrees of freedom are $(n - 1)$ for one-sample means tests and $(n_1 + n_2 - 2)$ for independent two-sample tests.

Homogeneity of variances is assumed in two-sample tests: the variance of the given variable should be approximately equal in the two groups.

Can I use normal curve means tests with ordinal data in spite of the assumption to the contrary? It is common in social science to use ordinal data with interval procedures provided there are at least five ordinal categories. Most researchers find that the assumption of normality is too grossly violated when there are fewer scale points. See further discussion in the section on testing assumptions.