Hierarchical Models (Full Bayes)


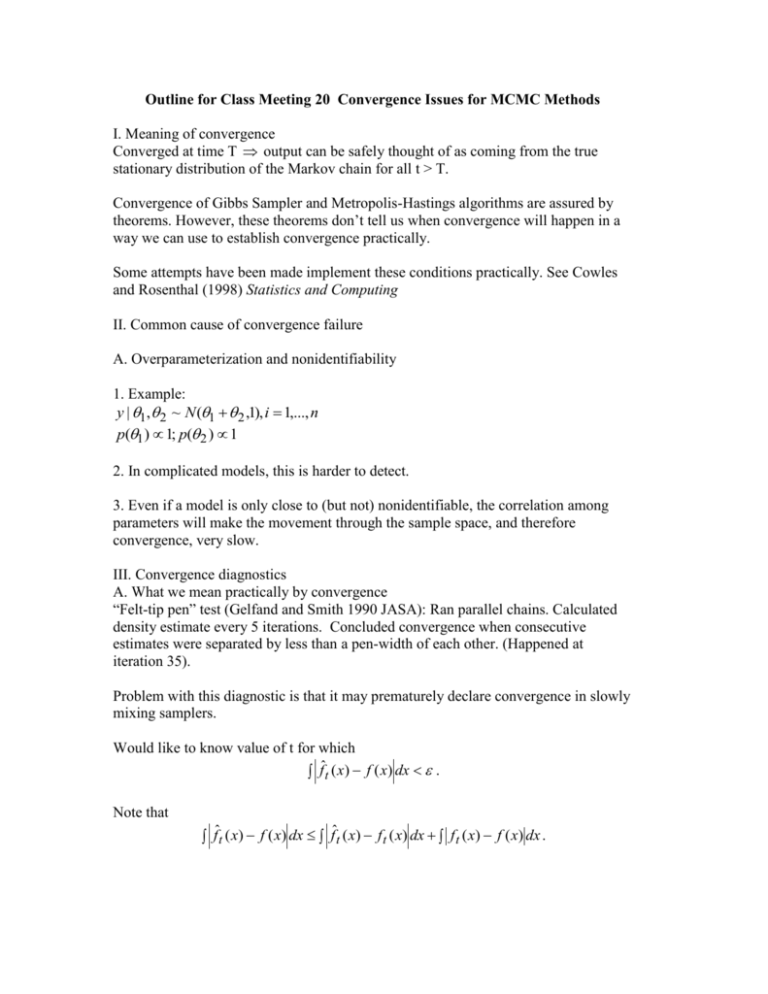

Outline for Class Meeting 20: Convergence Issues for MCMC Methods

I. Meaning of convergence

A chain has converged at time T if its output can safely be thought of as coming from the true stationary distribution of the Markov chain for all t > T.

Convergence of the Gibbs sampler and Metropolis-Hastings algorithms is assured by theorems. However, these theorems don’t tell us when convergence will happen in a way we can use to establish convergence in practice. Some attempts have been made to implement these conditions practically; see Cowles and Rosenthal (1998), Statistics and Computing.

II. Common cause of convergence failure

A. Overparameterization and nonidentifiability

1. Example: $y_i \mid \theta_1, \theta_2 \sim N(\theta_1 + \theta_2, 1)$, $i = 1, \dots, n$, with $p(\theta_1) \propto 1$ and $p(\theta_2) \propto 1$.

2. In complicated models, this is harder to detect.

3. Even if a model is only close to nonidentifiable, the correlation among parameters will make movement through the sample space, and therefore convergence, very slow.

III. Convergence diagnostics

A. What we mean practically by convergence

“Felt-tip pen” test (Gelfand and Smith 1990, JASA): Ran parallel chains. Calculated a density estimate every 5 iterations. Concluded convergence when consecutive estimates were within a pen-width of each other (this happened at iteration 35). The problem with this diagnostic is that it may prematurely declare convergence in slowly mixing samplers.

We would like to know the value of t for which $\int |\hat f_t(x) - f(x)|\,dx < \epsilon$. Note that

$$\int |\hat f_t(x) - f(x)|\,dx \le \int |\hat f_t(x) - f_t(x)|\,dx + \int |f_t(x) - f(x)|\,dx.$$

The first term, called “Monte Carlo noise,” becomes small as m→∞. The second term, called the “bias component” or “convergence noise,” becomes small as t→∞. The test above (and almost any test of convergence made from the sampler itself) is really concerned only with $\int |\hat f_t(x) - \hat f_{t+k}(x)|\,dx$, which is directly related to neither of these terms.

B. Characteristics of diagnostics

A variety of diagnostic methods have been suggested. They vary on:

1.
Diagnostic goal: Most diagnostics address the issue of bias, but a few also consider variance.

2. Output format: Some produce a single-number summary; others are qualitative.

3. Replication requirement: Some may be implemented using only a single chain, while others require m > 1 chains.

4. Dimensionality: Some consider one parameter at a time, while others attempt to diagnose convergence of the full joint posterior.

5. Algorithm: Some apply only to the Gibbs sampler; others to any MCMC scheme.

6. Ease of use, availability: Generic code is available for some, not others.

C. Some particular methods

1. Gelman and Rubin (1992), Statistical Science

Start m chains at different points, overdispersed with respect to the true posterior. Run each chain for 2N iterations. Test whether the variation of a particular parameter within the chains equals the variation between the chains for the latter N iterations. Specifically, monitor convergence by the estimated scale reduction factor

$$\sqrt{\hat R} = \sqrt{\left(\frac{N-1}{N} + \frac{m+1}{mN}\,\frac{B}{W}\right)\frac{df}{df-2}},$$

where B/N is the variance between the means from the m parallel chains, W is the average of the m within-chain variances, and df is the degrees of freedom of a t density approximating the posterior. This is the factor by which the scale parameter would shrink if sampling were continued indefinitely. The authors show it must approach 1 as N→∞.

S code for this is available from lib.stat.cmu.edu/S.itsim. It is also included in the CODA software suite (Convergence Diagnosis and Output Analysis), which is available from the BUGS site. Applicable to any MCMC algorithm.

Criticisms:
- Univariate
- Depends on picking overdispersed starting points
- Attempts to monitor convergence noise only, not Monte Carlo noise

2. Raftery and Lewis (1992)

Run one chain and retain only every kth sample after burn-in, with k large enough that the retained samples can be considered independent. Use Markov chain theory to determine when a particular quantile is estimated to specified accuracy.
Addresses both bias and variance, is applicable to general MCMC algorithms, and is easy to implement (lib.stat.cmu.edu/S/gibbsite or CODA).

Criticism:
- The “answer” on when convergence occurs depends on the quantile chosen.

3. Choices: See Cowles and Carlin (1996), JASA, for a comparison of performance in two simple models. Bad news: all can fail to detect the sorts of convergence failures they were designed to identify.

IV. Variance estimation

A. One summary for a parameter θ from MCMC results is its mean. Suppose you wish to assess the standard error of the mean. You must consider the MCMC design.

1. m chains, each of length N (after burn-in), and estimator for the posterior mean

$$\hat\theta_1 = \frac{1}{m}\sum_{j=1}^{m} \theta_j^{(N)}.$$

Then an estimate of variance is

$$\hat V_{iid}(\hat\theta_1) = \frac{s_\theta^2}{m} = \frac{1}{m(m-1)}\sum_{j=1}^{m}\left(\theta_j^{(N)} - \hat\theta_1\right)^2.$$

2. A single chain of length N (after burn-in), and estimator for the posterior mean

$$\hat\theta_2 = \frac{1}{N}\sum_{t=1}^{N} \theta^{(t)}.$$

If we use the same type of variance estimate, i.e.,

$$\hat V_{iid}(\hat\theta_2) = \frac{1}{N(N-1)}\sum_{t=1}^{N}\left(\theta^{(t)} - \hat\theta_2\right)^2,$$

it will be biased downward, because successive draws from a single chain are typically positively autocorrelated.

B. Alternative estimators for single (or a few) MCMC chain designs

1. Systematic subsampling of the chain

Retain only every kth observation from the chain, where k is large enough that the observations appear independent. Disadvantages: (1) wastes data; (2) MacEachern and Berliner (TAS 1994) showed that this overestimates variance.

2. Effective sample size approach

The “information” about the mean in a sample from a single chain is less than that in a random sample of the same size from the posterior. Thus we can adjust the standard error estimate by dividing by the “corrected” sample size (much as design effects are used for estimating variances for complex sample designs). The effective sample size is

$$ESS = N / \kappa(\theta), \qquad \kappa(\theta) = 1 + 2\sum_{k=1}^{\infty} \rho_k(\theta),$$

where $\rho_k(\theta)$ is the autocorrelation between sampled θ’s at lag k. These autocorrelations can be estimated from the data. Then we use as an estimator of variance

$$\hat V_{ESS}(\hat\theta_2) = \frac{1}{\widehat{ESS}\,(N-1)}\sum_{t=1}^{N}\left(\theta^{(t)} - \hat\theta_2\right)^2,$$

where $\widehat{ESS} = N / \hat\kappa(\theta)$.

3.
Batch method

Divide the single long run into m batches of length k (N = mk), with batch means $B_1, \dots, B_m$. Since $\hat\theta_2 = \bar B$, we can use as an estimator of variance

$$\hat V_{batch}(\hat\theta_2) = \frac{1}{m(m-1)}\sum_{i=1}^{m}\left(B_i - \bar B\right)^2.$$

This is approximately unbiased as long as k is large enough that the batch means are approximately independent.

C. Whichever method of variance estimation is used, one can construct a 95% confidence interval for the posterior mean of θ as $\hat\theta \pm z_{.025}\sqrt{\hat V}$.

V. BUGS info

A. BUGS provides (STATS on the Inference menu) an estimate of the standard error of the mean, which it calls “Monte Carlo error.” It is calculated using the batch method described above.

B. BUGS also provides the Gelman and Rubin statistic (GRdiag on the Inference menu). It provides the following:
- plots of the width of a central interval of the posterior constructed from pooled runs (plotted in green)
- average width of central 80% intervals constructed from each run (plotted in blue)
- ratio R = pooled/within (plotted in red)
- both widths are scaled so their maximum is 1

You can convert the plot to numeric output by
- double-clicking on the plot
- then control-left-mouse-clicking on the window

What you want to see (to support convergence) is a BGR statistic close to 1 and convergence of both the pooled and within interval widths to stability. (N.B. The statistic computed is actually a slight correction of the Gelman and Rubin statistic, described by Brooks and Gelman (1998).)

Summary of a Practical Approach

1. Run a few (3 to 5) parallel chains, with starting points drawn (perhaps systematically) from a distribution thought to be overdispersed with respect to the posterior (say, covering ±3 prior standard deviations from the mean).

2. Visually inspect these chains by overlaying their sampled values on a common graph for each parameter or, for very high-dimensional models, a representative subset of the parameters.

3.
Calculate the Gelman and Rubin statistic and lag-1 autocorrelations for each graph (the latter help to interpret the former, as the Gelman and Rubin statistic can be inflated because of slow mixing).

4. Investigate cross-correlations among parameters suspected of being confounded, just as you might do for collinearity in linear regression.
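
The numerical parts of this summary, together with the variance estimators of Section IV, can be sketched in a few lines of Python. This is only an illustrative sketch under several assumptions, not the code distributed with CODA or BUGS: it assumes NumPy is available; the function names (`scale_reduction`, `autocorr`, `effective_sample_size`, `batch_means_variance`, `ar1_chain`) are hypothetical; the scale reduction factor omits the df/(df-2) correction; the sum of autocorrelations is truncated at the first non-positive estimate, which is one simple choice among several; and a toy AR(1) series stands in for real sampler output.

```python
import numpy as np


def scale_reduction(chains):
    """Estimated scale reduction factor for one parameter.

    chains: array of shape (m, N) holding the retained N draws of each of
    the m parallel chains. Simplification (assumption): the df/(df-2)
    correction factor is left out.
    """
    chains = np.asarray(chains, dtype=float)
    m, N = chains.shape
    b_over_n = chains.mean(axis=1).var(ddof=1)  # variance between chain means (B/N)
    w = chains.var(axis=1, ddof=1).mean()       # average within-chain variance (W)
    return np.sqrt((N - 1) / N + (m + 1) / m * b_over_n / w)


def autocorr(x, lag):
    """Sample autocorrelation of a single chain at a lag >= 1."""
    x = np.asarray(x, dtype=float) - np.mean(x)
    return float(np.dot(x[:-lag], x[lag:]) / np.dot(x, x))


def effective_sample_size(x, max_lag=200):
    """ESS = N / kappa with kappa = 1 + 2 * (sum of lag-k autocorrelations).

    Truncation rule (assumption): stop at the first non-positive estimate
    or at max_lag, whichever comes first.
    """
    n = len(x)
    kappa = 1.0
    for k in range(1, min(max_lag, n - 1) + 1):
        rho = autocorr(x, k)
        if rho <= 0:
            break
        kappa += 2.0 * rho
    return n / kappa


def batch_means_variance(x, k):
    """Batch-means estimate of the variance of the chain mean (batch length k)."""
    x = np.asarray(x, dtype=float)
    m = len(x) // k                                  # number of complete batches
    batch_means = x[: m * k].reshape(m, k).mean(axis=1)
    return batch_means.var(ddof=1) / m               # sum((B_i - Bbar)^2) / (m (m - 1))


def ar1_chain(n, phi, start, rng):
    """Toy stand-in for sampler output: an AR(1) series started at `start`."""
    x = np.empty(n)
    prev = start
    for t in range(n):
        prev = phi * prev + rng.normal()
        x[t] = prev
    return x


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    # Three chains with overdispersed starting points; discard the first half.
    chains = np.array([ar1_chain(4000, 0.9, s, rng) for s in (-10.0, 0.0, 10.0)])
    kept = chains[:, 2000:]
    print("scale reduction factor:", scale_reduction(kept))
    for j, chain in enumerate(kept):
        print("chain", j, "lag-1 autocorrelation:", autocorr(chain, 1))
    one = kept[0]
    print("effective sample size:", effective_sample_size(one))
    print("naive iid variance of the mean:", one.var(ddof=1) / len(one))
    print("batch-means variance of the mean:", batch_means_variance(one, 100))
```

With strongly autocorrelated draws such as these, the batch-means variance should come out well above the naive iid figure, which is the downward bias noted in Section IV. A scale reduction factor near 1 combined with a high lag-1 autocorrelation suggests the chains agree but are mixing slowly, so the Monte Carlo error may still be large.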