advertisement

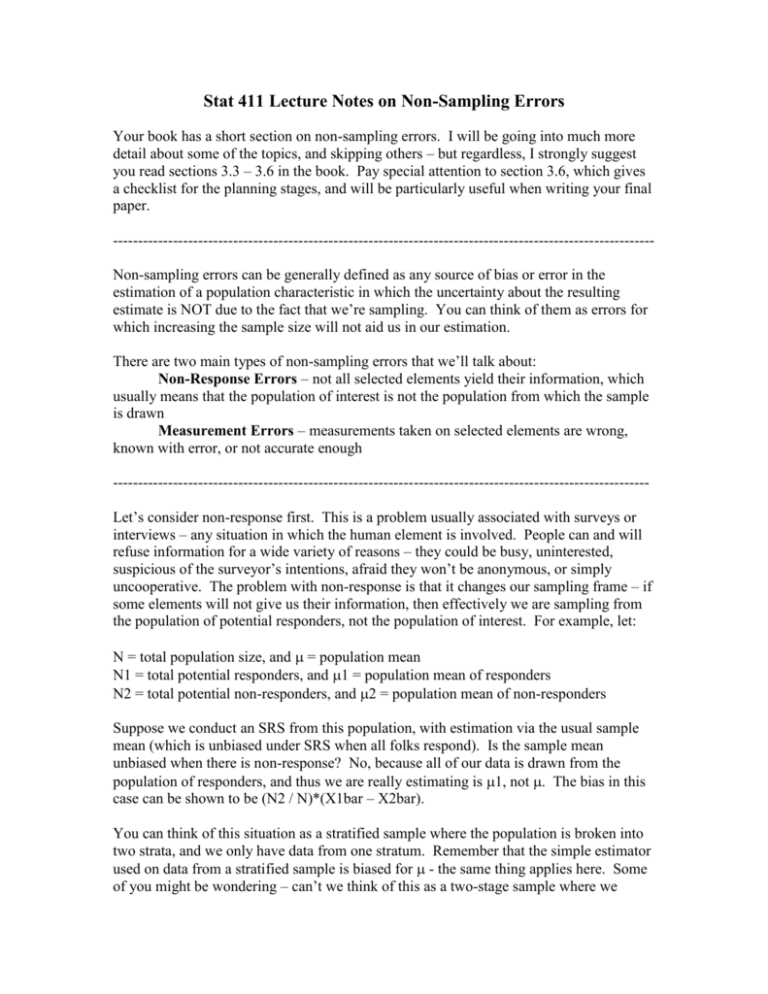

Stat 411 Lecture Notes on Non-Sampling Errors

Your book has a short section on non-sampling errors. I will be going into much more detail about some of the topics, and skipping others, but regardless, I strongly suggest you read sections 3.3 - 3.6 in the book. Pay special attention to section 3.6, which gives a checklist for the planning stages, and will be particularly useful when writing your final paper.

-----------------------------------------------------------------------------------------------------------

Non-sampling errors can be generally defined as any source of bias or error in the estimation of a population characteristic in which the uncertainty about the resulting estimate is NOT due to the fact that we’re sampling. You can think of them as errors for which increasing the sample size will not aid us in our estimation.

There are two main types of non-sampling errors that we’ll talk about:

Non-Response Errors: not all selected elements yield their information, which usually means that the population of interest is not the population from which the sample is drawn.

Measurement Errors: measurements taken on selected elements are wrong, known with error, or not accurate enough.

-----------------------------------------------------------------------------------------------------------

Let’s consider non-response first. This is a problem usually associated with surveys or interviews, that is, any situation in which the human element is involved. People can and will refuse information for a wide variety of reasons: they could be busy, uninterested, suspicious of the surveyor’s intentions, afraid they won’t be anonymous, or simply uncooperative.

The problem with non-response is that it changes our sampling frame. If some elements will not give us their information, then effectively we are sampling from the population of potential responders, not the population of interest.
For example, let:

$N$ = total population size, and $\mu$ = population mean
$N_1$ = total potential responders, and $\mu_1$ = population mean of responders
$N_2$ = total potential non-responders, and $\mu_2$ = population mean of non-responders

Suppose we conduct an SRS from this population, with estimation via the usual sample mean (which is unbiased under SRS when all folks respond). Is the sample mean unbiased when there is non-response? No, because all of our data is drawn from the population of responders, and thus what we are really estimating is $\mu_1$, not $\mu$. Since $\mu = (N_1\mu_1 + N_2\mu_2)/N$, the bias in this case can be shown to be $\mu_1 - \mu = (N_2/N)(\mu_1 - \mu_2)$.

You can think of this situation as a stratified sample where the population is broken into two strata, and we only have data from one stratum. Remember that the simple estimator used on data from a stratified sample is biased for $\mu$; the same thing applies here.

Some of you might be wondering: can’t we think of this as a two-stage sample where we choose m=1 of the M=2 strata, then take an SRS within that group? Not quite. The reason is that we are not randomly choosing the group that we take in the first stage; we are forced to take the group of responders. IF there was an equal chance of getting either group, THEN we could use a two-stage estimator.

Notice that if $\mu_1 = \mu_2$, in other words, if the populations of responders and non-responders are the same, then $\mu_1 = \mu$, and we’re out of the woods: we can do everything in the same manner as we have all along. Evaluating whether or not the responders and non-responders are the same involves making an assumption, and that assumption is more or less reasonable depending on each specific situation.

So what if we can’t reasonably assume that the groups of responders and non-responders are similar, or if we prefer not to let our analysis ride on a subjective assessment? There are some alternatives.
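To make the bias formula above concrete, here is a small numerical check. The population values are made up purely for illustration (they are not from the notes); the point is only that the direct bias, the responder mean minus the full population mean, matches (N2/N) times the difference between the responder and non-responder means.

```python
import math
from statistics import mean

# Hypothetical population, split by whether each unit would respond.
responders = [10, 12, 14, 16]      # N1 = 4
non_responders = [20, 24]          # N2 = 2

N1, N2 = len(responders), len(non_responders)
N = N1 + N2

mu1 = mean(responders)                       # mean of potential responders
mu2 = mean(non_responders)                   # mean of potential non-responders
mu = mean(responders + non_responders)       # overall population mean

# Bias computed directly: what we actually estimate (mu1) minus the target (mu).
bias_direct = mu1 - mu

# Bias computed from the formula (N2 / N) * (mu1 - mu2).
bias_formula = (N2 / N) * (mu1 - mu2)

print("mu1 =", mu1, " mu2 =", mu2, " mu =", mu)
print("direct bias  =", bias_direct)
print("formula bias =", bias_formula)
```

In this made-up population the non-responders have larger values, so an estimate based only on responders comes out too low. A bigger sample does not help, because the bias depends only on N2/N and on the gap between the two group means.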
The most obvious (but practically speaking, usually the hardest and/or most expensive) method of reducing non-response bias is to convert non-responders into responders. Recall the equation for non-response bias: $(N_2/N)(\mu_1 - \mu_2)$, where $\mu_1$ and $\mu_2$ are the population means of the responders and non-responders. One way to reduce the absolute value of this quantity is to reduce $N_2/N$, i.e., reduce the proportion of non-responders in the population.

The ways to do this are numerous. Here is a medium-sized list, with short discussions of pros and cons. Some are specific, some are general, some are practical and some are psychological. They appear in no particular order.

Ways to Convert Non-Responders Into Responders

1. If you are conducting a telephone or face-to-face interview, make sure you call/visit at times when the person to be interviewed is likely to be home. For the average working Joe, this means sometime in the evening after 6pm. But don’t call too late either, or you may incur non-response because of a sleepy and annoyed individual. Sometime between 6 and 8 is best.

2. If you intend to send a mail survey, confirm that the people you wish to survey still live at the address you have on file, since registries of this sort become obsolete quickly (20% of American families move each year). If a particular individual does not respond, you may want to send a representative to the address to find out if they are there, or perhaps to find out where they have moved. If you want to sample whoever is currently living at the address you’ve selected, label the envelope, for example, “Mr. and Mrs. Smith or current resident.”

3. For mailed surveys in particular, studies have shown that using attractive, high quality, official-looking envelopes and letterhead can improve response significantly. Include a carefully typed cover letter explaining your intentions and guaranteeing the recipient’s confidentiality. Get a big-wig from your company or organization to sign it (personally, if possible). Always send materials through first-class mail, and include a return envelope with first-class postage.

4. Keep surveys and interviews as short as possible. As a general rule, the more questions you ask, the less likely you are to get accurate (or any) information.

5. Use the guilt angle whenever possible (but do it implicitly, don’t beg). What I mean by this is simply to increase the amount and quality of personal contact with your population. Psychologically speaking, for most people it’s easy to throw away a mailed survey, considerably harder to hang up on an interviewer, and harder yet to walk away. Therefore, choose a face-to-face interview over a phone interview, and choose a phone interview over a mailed survey, whenever it is practical to do so.

6. Publicizing or advertising your survey often helps with non-response. This lets people know they’re not the only ones being surveyed and helps with credibility. Use endorsements by celebrities, important individuals, or respected institutions if you are able.

7. Offer an incentive. Money is by far the best, because it has the most universal appeal. Be careful when using other incentives, because you do not want to elicit responses from some specific subgroup of the population who happens to want or like what you’re offering. Whether to offer the incentive up-front or upon return of the survey is basically a toss-up in terms of effectiveness, but the former will be considerably more expensive.

In addition to the above, there is one more method that requires a bit more attention, called ‘double sampling.’ At the core, it is really just a two-stage sample. In the first stage, try to elicit responses through a cheap and easy method, such as a mailed survey. In the second stage, go after a random sample of the non-responders from stage 1 with the big guns: telephone or face-to-face interviewing. This is a fairly well studied method, with suggested estimators and such, but I’ll go through the details in class.
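The notes leave the double-sampling estimator for class, so the following is only a sketch under an assumption: it uses one common textbook form (usually attributed to Hansen and Hurwitz), in which the stage 1 responder mean and the follow-up mean are weighted by the number of stage 1 responders and non-responders. The function name and all data values are hypothetical, and the follow-up subsample is assumed to respond in full.

```python
import math
from statistics import mean

def double_sampling_mean(stage1_responses, n_nonresponders, followup_responses):
    """Weighted mean combining stage 1 responders with a follow-up subsample.

    stage1_responses   -- values from units that answered the cheap first attempt
    n_nonresponders    -- number of sampled units that did NOT answer in stage 1
    followup_responses -- values from a random subsample of those non-responders,
                          obtained by the more expensive method
    """
    n1 = len(stage1_responses)
    n = n1 + n_nonresponders
    # The follow-up mean stands in for ALL stage 1 non-responders.
    return (n1 * mean(stage1_responses)
            + n_nonresponders * mean(followup_responses)) / n

# Hypothetical survey: 10 units sampled, 4 answer by mail, 6 do not;
# 3 of the 6 non-responders are then interviewed by phone.
mail = [10, 12, 14, 16]
phone_followup = [20, 22, 24]

naive = mean(mail)                                   # ignores non-responders
adjusted = double_sampling_mean(mail, 6, phone_followup)

print("responders only:", naive)
print("double sampling:", adjusted)
```

The design choice worth noticing is the weight on the follow-up mean: it is the full count of stage 1 non-responders, not the size of the follow-up subsample, because the subsample represents that whole group.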
Clearly a lot of effort has been put into figuring out how to get people to respond. But it is a sad fact that even after we’ve done everything in our power to get people to respond, there will still almost surely be some missing values in our data set. Next I’ll talk about how to deal with these missing values.

The context for the next bit will be to assume that we’ve coerced a potential participant to give us answers to at least some of the questions we asked. For example, suppose we get the following results from a survey of the class (dashes (-) indicate missing values):

| Subject | 1 | 2 | 3 | 4 |
|---|---|---|---|---|
| Height (in) | 72 | 63 | 74 | 65 |
| Shoe Size | 9 | - | 10 | 6 |
| Weight (lb) | 150 | - | 175 | - |

We can assume that height is a known auxiliary variable.

Well, probably the easiest thing to do is simply delete the records with any missing values, but this is generally considered a bad idea. Deletion of this sort greatly reduces the sample size (in our example, it cuts it in half), and worse yet, the non-responders might have something in common (in our case, they tend to be shorter) that could bias the estimate.

The next most intuitive solution would be to replace the missing values with the mean value of the existing data. [For future reference, any method by which we substitute possible values for the missing ones is called imputation.] If we did this, the completed set would look like this (imputed values in bold):

| Subject | 1 | 2 | 3 | 4 |
|---|---|---|---|---|
| Height (in) | 72 | 63 | 74 | 65 |
| Shoe Size | 9 | **8.33** | 10 | 6 |
| Weight (lb) | 150 | **162.5** | 175 | **162.5** |

This is slightly better than deletion, but still has some inherent problems. We still have the problem that the non-responders could be similar, in which case the mean of the remaining values could be a far cry from the mean of the missing values (in our case, it is pretty unlikely that everyone who is slightly over 5ft tall will weigh 162.5 lbs). Also, since the missing values are all replaced by the same value, the estimated variance will be significantly reduced compared to the real thing.
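The mean-imputation step above, and the variance shrinkage it causes, can be sketched in a few lines. The data are the class survey values from the example; the helper name `mean_impute` is just an illustrative choice.

```python
import math
from statistics import mean, variance

def mean_impute(values):
    """Replace each missing entry (None) with the mean of the observed entries."""
    observed = [v for v in values if v is not None]
    fill = mean(observed)
    return [fill if v is None else v for v in values]

# Class survey data from the example; None marks a missing value.
shoe_size = [9, None, 10, 6]
weight = [150, None, 175, None]

shoe_filled = mean_impute(shoe_size)
weight_filled = mean_impute(weight)

# Sample variance of weight before and after imputation.
var_observed = variance([w for w in weight if w is not None])
var_imputed = variance(weight_filled)

print("shoe size:", shoe_filled)
print("weight:   ", weight_filled)
print("variance, observed weights only:", var_observed)
print("variance, after mean imputation:", var_imputed)
```

The imputed weights sit exactly at the mean, so they add nothing to the sum of squared deviations while still increasing the divisor. That is why the variance computed from the completed data comes out smaller than the variance of the observed values alone.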
To circumvent the above problems, we could use the known auxiliaries and some of the existing values of the other variables to perform a linear regression and impute the values. I did this below:

| Subject | 1 | 2 | 3 | 4 |
|---|---|---|---|---|
| Height (in) | 72 | 63 | 74 | 65 |
| Shoe Size | 9 | **5.09** | 10 | 6 |
| Weight (lb) | 150 | **37.5** | 175 | **62.5** |

You can see this was met with mixed success: the shoe size looks reasonable, but the weights are much too small. Seemingly this would work a bit better if the data set were larger (specifically, if we had some actual weights for people with heights in the 64 inch range). Also, it may not be reasonable to fit a straight line to the relationship between height and weight.

An alternative to regression is the ‘Hot Deck’ method. In this procedure, the data file is sorted in a meaningful way based on auxiliary variables, and then the missing values are simply filled in with the corresponding previous value. In this way, the auxiliaries are used somewhat implicitly, and therefore the computational effort is reduced, as are the occasionally unreasonable results from a rigorous regression. This is the preferred method of the US Census Bureau (not sure if that’s a plus or a minus). The method is a little cumbersome to write out more specifically, so I’ll do an example in class.

All the methods we’ve discussed so far have attempted to create a single value for each missing value. The final method, multiple imputation, seeks to impute multiple values for each missing value, and then calculates estimates of the population characteristics for every possible arrangement of the missing values. (Again, I’ll do an example in class.)

By far the best thing about this method is that it allows us to see how the estimates of population characteristics would change depending on how we impute the values. If the estimates are relatively stable regardless of the imputed values, we can be confident in our results, but if the estimates vary wildly depending on the imputed values, we are less certain.
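As a rough sketch of this stability check, the code below takes two candidate values for each missing weight in the class example (the height-based regression prediction and the overall mean) and recomputes the mean weight for every arrangement. Which candidates to use is an assumption made here for illustration. This is a simplified enumeration in the spirit of the description above, not a full multiple-imputation procedure.

```python
from itertools import product
from statistics import mean

def fit_line(xs, ys):
    """Ordinary least squares for y = intercept + slope * x."""
    x_bar, y_bar = mean(xs), mean(ys)
    sxx = sum((x - x_bar) ** 2 for x in xs)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, y_bar - slope * x_bar

heights = [72, 63, 74, 65]
weights = [150, None, 175, None]          # None marks a missing value

# Fit weight on height using only the subjects with a recorded weight.
known_h = [h for h, w in zip(heights, weights) if w is not None]
known_w = [w for w in weights if w is not None]
slope, intercept = fit_line(known_h, known_w)
mean_fill = mean(known_w)

# Candidate imputations per missing subject: regression value and mean value.
missing = [i for i, w in enumerate(weights) if w is None]
candidates = [[intercept + slope * heights[i], mean_fill] for i in missing]

# Estimate the mean weight under every arrangement of candidate values.
estimates = []
for combo in product(*candidates):
    filled = list(weights)
    for i, value in zip(missing, combo):
        filled[i] = value
    estimates.append(mean(filled))

print("regression imputations:", [c[0] for c in candidates])
print("mean weight under each arrangement:", estimates)
print("range of estimates:", min(estimates), "to", max(estimates))
```

Here the estimated mean weight moves from a little over 100 lb to over 160 lb depending on the imputed values, so this tiny data set falls squarely in the "less certain" case. The number of arrangements is the product of the candidate counts, which grows quickly as missing values are added.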
By far the worst thing about this method is that it takes a ton of computing power, especially when the number of missing values and/or the number of possible values to impute per missing value is large. Multiple imputation has been around for a while, but has come into vogue only recently, simply because we now have computers that are fast enough to make it reasonable.

Now, let me completely shift gears to talk about measurement error. The assumption here is that we have complete information, but that the values may not be exactly right. The simplest form of this is when we have instruments with a minimum detection level, such as a ruler that only has marks at the centimeter, or a scale that only measures to the gram. Then the value we get from the instrument will be only approximate or within a range. We could also imagine errors in measurement resulting from outright erroneous equipment or human error in recording or summarizing the values. Models that adjust for these kinds of errors exist, but are extremely complex, so I will not even attempt to cover them here.

We can, however, briefly discuss the implications of measurement error. In the case where measurements are wrong, rather than known with error (say, if our scale consistently gives the weight of an object as 1 gram heavier than it should be), the only effect on the estimates is a bias in the direction of the error. If we could determine the magnitude of the error (say, by getting a properly working scale), we could calculate the bias in our estimate and correct for it. Generally, though, it is next to impossible to determine the amount of bias. Not much we can do about that.

When measurements are known with error, the resulting estimates based on our standard estimation methods could be biased, have incorrect variance, or both. As a general rule, the variance based on our standard calculations will be too small, because we are not including the variance of the measurement errors.
Intuitively, uncertainty about the measured value leads to additional uncertainty about the estimate, which means a larger variance of the estimate.

In surveys or interviews of humans, the ‘measurement instrument’ is the individual being questioned. We can ‘calibrate’ the responses (and thereby decrease our measurement error) by changing the way we phrase or present the questions. This will be the subject of the remainder of my little rant about measurement errors.

Studies have shown that the way a question is asked has a huge impact on the answer. Massive texts have been written on how to ask questions in an unambiguous fashion (at the end of these notes, I’ll offer some references). A reasonably complete coverage of this topic would be enough material to fill an entire semester (and the university, probably the psychology or sociology department, may actually offer such a class). Therefore, I’ll give you just a flavor.

Overwhelmingly, my advice to you is to use common sense. Most of you will be able to tell when a question is poorly worded or not specific enough. The problem with this is that, as the question creator, your verdict on the ambiguity of a question is clouded by the fact that you know what you’re trying to ask. Easy solution: give the survey to a test group, and see if they answer as you expect them to. If not, revise the question (perhaps with recommendations from the test group), and try again. Repeat until you achieve the desired results.

The final topic (hooray!) I want to discuss about non-sampling errors is what is called randomized response. This is a method that can be used to encourage honesty when sensitive questions are being asked. I will go through an example of this in class as well.
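Since the randomized response example is left for class, here is a minimal sketch of one well-known design, Warner's, which is an assumption about the kind of method meant. Each respondent privately uses a random device: with probability p they answer "Do you have the sensitive trait?", and otherwise they answer the opposite question, "Do you NOT have the trait?". The interviewer sees only "yes" or "no". The function name and the numbers are hypothetical.

```python
import math

def warner_estimate(n_yes, n, p):
    """Estimate the proportion with the sensitive trait under Warner's design.

    n_yes -- number of "yes" answers observed
    n     -- number of respondents
    p     -- probability the device selects the direct question (must not be 0.5)

    P(yes) = p * pi + (1 - p) * (1 - pi), which is solved for pi below.
    """
    if math.isclose(p, 0.5):
        raise ValueError("p = 0.5 makes the answers uninformative about the trait")
    prop_yes = n_yes / n
    return (prop_yes - (1 - p)) / (2 * p - 1)

# Hypothetical survey: 200 respondents, 90 say "yes", device uses p = 0.7.
estimate = warner_estimate(n_yes=90, n=200, p=0.7)
print("estimated proportion with the trait:", round(estimate, 3))
```

Because nobody but the respondent knows which question was answered, a "yes" reveals nothing definite about any individual, which is what encourages honesty. The price is extra variance: the closer p is to 0.5, the noisier the estimate, and in small samples it can even fall outside the range 0 to 1.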
References for Non-Sampling Errors

“Nonsampling Error in Surveys”, Judy Lessler & William Kalsbeek, 1992
“The Phantom Respondents”, John Brehm, 1993
“Mail and Telephone Surveys: The Total Design Method”, Don Dillman, 1978
“Sample Design in Business Research”, W. Edwards Deming, 1960
“Multiple Imputation for Nonresponse in Surveys”, Don Rubin, 1987