
advertisement

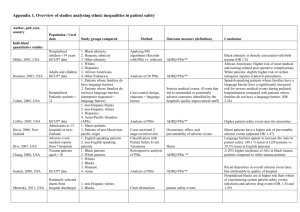

Biomedical Instrumentation and Technology (2004, 45, pp. 387-391).

Why it is not sufficient to study errors and incidents: Human factors and safety in medical systems

Daniel Gopher, Ph.D.
Faculty of Industrial Engineering and Management
Technion – Israel Institute of Technology
Haifa, 32000, Israel

There is little doubt that the study of errors, incidents, and accidents in health care has played a major role in bringing the topic of patient safety to the attention of the public and the professions (1, 2, 3). Without this focus, the public, policy makers, and professional communities would not have recognized or fully grasped the seriousness of the problem, namely the dangers and hazards that have accompanied the revolutionary progress in health care capabilities: diagnostic, surgical, and treatment technology. Studies of errors and adverse events underline the importance of improving all components of patient safety and well-being alongside the adoption of new technologies and procedures. Notwithstanding its public and political merit, the present commentary examines the scientific value of studying medical errors as the sole, or even the prime, source of scientific information for improving the safety and health of patients and medical staff.

Drawbacks of the study of errors

A non-representative sample: One drawback of error studies is that the reported, analyzed, or investigated events (whether errors, incidents, or adverse events) are only a small and most likely biased sample of the total number of such events. Researchers in this area estimate that only 1-5 percent of all events are reported (3). Even in the aviation community, with its well-established reporting culture, the proportion of incidents that are reported is believed to be low (3, 4).
It is further argued that even with the strongest encouragement and investment of resources, and even if the percentage of reports were to double or triple, reports would still cover only a small fraction of all events. Moreover, the sample is not only very partial; there are several reasons to believe that it is biased and non-representative. Powerful factors (legal, financial, social, moral, etc.) lead people to hide errors and to bias their reports in order to protect themselves, their status, and their self-image. These biasing factors equally influence data obtained from existing medical records, solicited reports, or active investigation of accidents and adverse events (3, 4, 5). In addition, studies of errors are frequently guided by the seriousness of the outcomes or by the ease of obtaining information; both are inadequate criteria for assuring a representative sample.

Quality and completeness of information: A related problem is the quality of the data obtained. Even when data are not intentionally biased, as discussed above, each technique of error investigation limits the quality of the data it yields. Medical records are limited as a source because they are written in different formats, are usually very sketchy, and are not generated for the purpose of studying errors. Solicited error reports depend on the memory of the reporter; as such, they are retrospective, subjective, and incomplete. Direct observations are confined to the immediate present of an interaction and are limited in their ability to take the broader context into account. For example, an observer in a surgery may see and be aware of only a small part of the ongoing activities, interacting team members, and communications. The observer also misses the antecedents and future consequences of an event.
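The reporting figures from the non-representative sample discussion above can be made concrete with a little arithmetic. The sketch below uses the 1-5 percent reporting range cited from (3) together with a hypothetical count of 50 received reports (an illustrative number, not data from any study). It shows how wide the implied range of true events is, and how small the reported fraction remains even if reporting doubles or triples. It does not model bias, which is the more serious problem.

```python
# Illustrative arithmetic only: the 1-5 percent reporting range is the estimate
# cited in the text (3); the count of 50 reports is a hypothetical number.


def implied_total_events(reported: int, reporting_fraction: float) -> float:
    """Estimate the true number of events from the number actually reported."""
    if not 0.0 < reporting_fraction <= 1.0:
        raise ValueError("reporting_fraction must be in (0, 1]")
    return reported / reporting_fraction


def scaled_fraction(reporting_fraction: float, factor: float) -> float:
    """Reporting fraction after reporting increases by `factor` (capped at 100%)."""
    return min(1.0, reporting_fraction * factor)


if __name__ == "__main__":
    reported = 50  # hypothetical number of reports received
    for fraction in (0.01, 0.05):
        total = implied_total_events(reported, fraction)
        print(f"reporting rate {fraction:.0%}: about {total:.0f} events in total")
    for factor in (2, 3):
        low = scaled_fraction(0.01, factor)
        high = scaled_fraction(0.05, factor)
        print(f"x{factor} reporting: {low:.0%}-{high:.0%} of events reported")
```

Under these assumptions, 50 reports imply somewhere between about 1,000 and 5,000 events, and tripling the reporting rate still leaves 85-97 percent of events unreported.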
Absence of a reference base: The absence of a proper reference and comparison base is a critical problem in interpreting and evaluating a data set based solely on errors or adverse events. Unfortunately, this is the situation in the vast majority of reported studies. Without normative information on the context of activities in which an error occurs and on the frequency of such activities in normal conduct (e.g., administering medications, setting IV flow rates), there is little ability to evaluate the overall impact of an event, understand its causes, and develop appropriate preventive steps. Using the population of accumulated errors as the comparison base for evaluating the relative frequency, type, and seriousness of events may introduce serious biases and distortions. In the human decision-making literature, such biases have been attributed to a failure to consider the proper base rates (6). For example, in their study of errors in an intensive care unit, Donchin, Gopher, and colleagues (7) report that physicians and nurses contributed about equally to the accumulated population of error reports. This finding might lead one to conclude that the two groups are equally prone to error. However, once we added the fact that nurses performed 87% of all activities in the unit, our perspective changed dramatically: physicians were clearly much more susceptible to committing errors. Similar discrepancies were revealed in many other segments of the study. Thus, limiting a study to the collection of errors and adverse events severely constrains one’s ability to provide valid interpretations and propose adequate solutions.

The wisdom of hindsight: Using information and insights gained from the analysis of errors to plan future behavior also suffers from the drawback of constituting “wisdom of hindsight”.
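The base-rate correction in the ICU example can be written out explicitly. The sketch below assumes that physicians and nurses each contributed exactly half of the error reports (the text says only "about equal", so the 50/50 split is a simplifying assumption) and that physicians performed all activities not performed by nurses, i.e. 13 percent (also an assumption). Dividing each group's share of error reports by its share of activities gives a relative error rate per activity.

```python
# Base-rate correction sketch for the ICU example.
# Assumptions (not figures from the study itself): error reports split exactly
# 50/50, and physicians performed all activities not performed by nurses (13%).


def errors_per_activity(error_share: float, activity_share: float) -> float:
    """Share of error reports relative to share of activities performed."""
    if activity_share <= 0:
        raise ValueError("activity_share must be positive")
    return error_share / activity_share


def relative_risk(group_a: tuple[float, float], group_b: tuple[float, float]) -> float:
    """How many times more errors per activity group A makes than group B.

    Each group is given as (share of error reports, share of activities).
    """
    return errors_per_activity(*group_a) / errors_per_activity(*group_b)


physicians = (0.50, 0.13)  # (share of error reports, share of activities)
nurses = (0.50, 0.87)

ratio = relative_risk(physicians, nurses)
print(f"Physicians: {ratio:.1f}x the error rate per activity of nurses")
```

Raw report counts suggest equal proneness to error; normalized by the number of activities each group performs, the physicians' error rate per activity comes out at roughly 0.87 / 0.13, or about 6.7 times that of nurses under these assumptions.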
Insight gained in hindsight has the value of a retrospective explanatory review, but it lacks the power of a prospective hypothesis or a predictive model. This distinction is essential for understanding the value of information obtained from collecting and analyzing error reports. In daily life, people can frequently recount in detail, and reason about, the path that led them to a certain state, for example marrying their spouse or landing in a specific professional career. However, in many or even most of these cases, the final state could not have been predicted early in the process, or even at a rather late stage. This is because each stage involves many variables and interests, many uncertainties, and more than one possible outcome. Hence, while we are highly capable of understanding how and why a certain outcome was obtained once we observe it, we are severely handicapped in our ability to predict beforehand that this specific outcome will occur. The moral is important for the scientific study of errors: after all, we investigate the past in order to improve the future.

A passive, reactive approach: An additional drawback is that the study of adverse events represents a passive, reactive approach, as opposed to an active and preventive one. When studying errors, one does not initiate, generate, or manipulate activities and evaluations in any systematic manner. Rather, one passively responds to selected outcomes of complex and dynamic interactions. The limits this places on the quality and type of information obtained have been discussed above. Needless to say, this is not the best use of limited research and application funds.

To conclude this critical review, it is clear that the study of errors has many drawbacks as a scientific source of information for advancing the health care system and improving patient safety.
This conclusion has an important implication for future investment of effort and resources. Continued investigation of medical errors is important mainly for keeping the topic in the eye of the public and the professional community; its scientific value, however, is limited. Attention and effort should be directed to alternative approaches and sources of information that can provide the knowledge and tools needed to achieve these goals. Continued investment in the investigation of adverse events is likely to yield rapidly diminishing returns. At the same time, it may reinforce counterproductive, interfering factors such as promoting a blame culture, raising malpractice claims and insurance costs, and increasing defensive rather than therapeutic medical costs (8). Such investigations are likely to further reduce the willingness of the personnel involved to open up, share information, and participate in improving the situation, all of which are prerequisites for development and progress.

Complementary approaches to the study of patient safety

A fresh look at patient safety requires a conceptual shift, the recruitment of knowledge from domains outside the immediate medical circle, and the adoption of alternative methodologies.

A conceptual shift: Modern medical systems are complex entities. The vast majority of medical procedures are segmented into many stages and processes that involve a variety of technologies, a number of concurrent and sequential team members with diverse expertise, and a massive amount of detailed information that has to be transferred efficiently from one stage to the next. For example, a few moments after entering an emergency unit (EMU), a patient splits into two entities. One is the physical patient, who is seen by several professionals and may be moved around to imaging, diagnosis, and treatment facilities.
The second entity is the patient’s information file, which builds up and develops almost independently as tests are sent to laboratories, results are received, information flows in, and reports are written. Matching, coordinating, and synchronizing the physical and temporal movement of these two entities is one of the most difficult challenges of an EMU and a cause of many delays and adverse events. Preplanned surgery is another good example. In a recent study of surgical procedures at Hadassah, Hebrew University Hospital in Jerusalem, we showed that the procedure, which starts in the hospital ward the night before the operation and ends when the patient returns to the ward from the recovery room, is best described as a relay race: 16 major relays, taking place across 7 physical locations and involving more than a dozen team members with different specializations and educational backgrounds, who transfer responsibility for, and information about, the patient. In both examples, the direct medical activity (diagnosis in the EMU, the operation in the surgical procedure) is clearly only one of many interlinked stages and processes that are an integral part of the procedure. A problem or an error at any one of them may propagate through the system and have serious consequences for the patient’s well-being. It is quite obvious that patient safety and well-being are determined by the successful functioning of a complex system in which the direct medical activities may constitute only a small part. Our conceptual view of patient safety should therefore be formulated as an overall system optimization problem, and concerns about patient safety should extend well beyond the evaluation of direct medical care.
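The relay description suggests why many hand-offs matter even when each one is fairly reliable. As a simplifying illustration only, assume each of the 16 relays independently loses or corrupts some piece of patient information with the same small probability p. The values of p below are hypothetical, not measurements from the Hadassah study, and real hand-offs are neither independent nor identical. Under this model, the chance that at least one relay fails is 1 - (1 - p)^16.

```python
# Hypothetical model: independent, identical hand-offs. The number of relays (16)
# comes from the text; the per-hand-off failure probabilities are made up.


def p_any_failure(p_per_handoff: float, n_handoffs: int) -> float:
    """Probability that at least one of n independent hand-offs fails."""
    if not 0.0 <= p_per_handoff <= 1.0:
        raise ValueError("p_per_handoff must be between 0 and 1")
    return 1.0 - (1.0 - p_per_handoff) ** n_handoffs


for p in (0.001, 0.01, 0.05):
    risk = p_any_failure(p, 16)
    print(f"p = {p:<5}: P(at least one failure over 16 relays) = {risk:.1%}")
```

Even at a hypothetical 1 percent failure rate per hand-off, the chance of at least one failure across 16 relays is roughly 15 percent, which is one way to see why the number of stages and transitions is itself a risk factor.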
Although many researchers have repeatedly argued for such a system perspective over the last decade (3, 4, 5, 8), it has had relatively little influence on the focus of studies, on the way information is collected, and on how procedures are evaluated and changes introduced. These have been clearly dominated by studies of adverse events and by knowledge bases acquired within the narrow medical circle (e.g., the attempt to develop standard medical practices).

Recruitment of complementary knowledge bases and expertise: Conceptualizing patient safety as a problem of optimizing the performance of a complex system implies that we should also broaden our perspective to include knowledge domains that can contribute to modeling and studying this topic. Pertinent domains include System Theory, Cognitive Engineering, Human Factors Engineering, Cognitive Psychology, and Social Psychology. System Theory investigates the operating rules of systems, their variables, and their determinants. Cognitive Engineering is a subset of System Theory that focuses on formalizing the information needs and decision rules required to govern the operation of dynamic, multi-element systems. Human Factors Engineering is concerned with human performance and with the engineering design of the interface between humans and technology. Cognitive Psychology focuses on human perception, information processing, memory, decision making, and response abilities, along with their fundamental characteristics and limitations. Social Psychology is relevant particularly to teamwork, team coordination, team decision making, and shared situational awareness. The relevance of each of these topics to the successful operation of medical systems is easy to see. However, it is also important to realize that each domain comes with a set of methods and tools that best represent its concerns and collect data on system operation from its perspective.
We are hence broadening the scope both of the phenomena observed and of the methods by which they are studied.

Adoption of alternative methodologies: To overcome the drawbacks of adverse event research as a scientific source of information discussed above, we need methodologies that are representative, active, and prospective. These requirements are independent of the scientific domain in which a method is anchored. As my own expertise is in Cognitive Psychology and Human Factors Engineering, I shall limit my examples to these domains. The fundamental tool of Human Factors Engineering is task analysis. Task analysis identifies the overall objectives of a system (e.g., a surgical procedure or a radiotherapy treatment) and the allocation of responsibilities between human operators and engineering elements in fulfilling these objectives. It then maps and restates objectives and interactions in terms of their processing and response demands on the human, task workload, required knowledge, and acquired skills (10). Difficulties in task performance, reduced efficiency, errors, and performance failures are all interpreted as reflecting a mismatch between task demands and the performer’s ability to meet them. Corrective steps include alternative engineering designs and changes in work procedures. A similar logic is applied to the interaction among several individuals in systems that call for team performance. The essence of task analysis is discovering difficulties and mismatches between task requirements and the performers’ ability to cope with them, followed by an attempt to reduce the gap. A large number of specific techniques are used in human factors analysis, but they can all be reduced to the task analysis logic described above.
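The core logic of task analysis described above (compare what each task step demands with what the performer can supply, then flag and rank the gaps) can be sketched as a small data structure. Everything concrete here is hypothetical: the step names, the single 0-10 demand and capacity scale, and the threshold are illustrative assumptions. Real task analyses (10) break demands down along several dimensions, such as processing load, workload, knowledge, and skill, rather than one number.

```python
from dataclasses import dataclass

# Hypothetical illustration of task-analysis logic: each task step is rated for
# the demand it places on the performer and the capacity the performer can
# bring to it, on an assumed common 0-10 scale.


@dataclass
class TaskStep:
    name: str
    demand: float    # processing/response demand of the step (hypothetical scale)
    capacity: float  # performer's ability to meet that demand (same scale)

    @property
    def gap(self) -> float:
        """Positive when demand exceeds capacity, i.e. a mismatch."""
        return self.demand - self.capacity


def mismatches(steps: list[TaskStep], threshold: float = 0.0) -> list[TaskStep]:
    """Return steps whose demand exceeds capacity by more than `threshold`,
    largest gap first, as candidates for redesign or procedure changes."""
    flagged = [s for s in steps if s.gap > threshold]
    return sorted(flagged, key=lambda s: s.gap, reverse=True)


steps = [
    TaskStep("hand-off from ward to operating room", demand=8, capacity=5),
    TaskStep("verify patient identity", demand=3, capacity=7),
    TaskStep("move patient from surgery bed", demand=6, capacity=4),
]

for step in mismatches(steps):
    print(f"{step.name}: gap {step.gap:+.1f}")
```

Ranking by gap size is one simple way to prioritize where a redesign or a procedure change would reduce the mismatch most; a fuller analysis would weigh each dimension of demand separately.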
The significance of using a human factors task analysis approach to evaluate medical systems is that it provides a more general framework for identifying, evaluating, and solving problems of system performance. Overall, such problems increase the likelihood that medical adverse events will occur and reduce the efficiency of detecting and recovering from them. These effects are predicted even though there may not be a direct one-to-one mapping between a system problem and the occurrence of an error. For example, our task analysis of the surgical procedure identified four major dimensions of difficulty: many stages and transitions, multiple physical locations, a massive volume of information, and human factors design problems. Many stages and transitions are associated with problems in transferring responsibility and both recorded and informal information, difficulty in perceiving changes in details, and a higher probability of escalating errors. Multiple physical locations lead to problems in coordination and communication and to difficulties in relocating patients and equipment. A massive volume of information that has to be recorded, transferred, and communicated often results in erroneous or missed information: access to information is difficult and time-consuming, information is not transmitted at transition points when the patient and his file move from one phase to another, and no staff member has a detailed, complete, and coherent status map of the patient and the procedure. Human factors design problems show up in the design features of equipment, its suitability for the task, or the complete absence of required instruments and devices; in the design of information recording, retrieval, and display; in absent procedures (e.g., a clear procedure for moving the patient from the surgery bed after surgery); and in procedures that are inappropriate for the capabilities of the team or unsuitable for the objective of the intended process.

It should be noted that analyzing the surgical procedure from this perspective pointed out problematic areas, and for each of these areas there exists an ensemble of evaluation tools and improvement know-how. To further validate the pattern of difficulties identified by the task analysis, we conducted an observational study and recorded critical events in 48 surgeries. A critical event was defined as an event that can affect patient safety (i.e., constitute or lead to an adverse event). During the 48 surgeries we observed a total of 204 critical events. The major categories were teamwork, following procedures, human factors design problems, performing non-routine procedures, availability of equipment, existence and sharing of knowledge, and lack of competence and expertise. Note that these categories do not use medical terminology, but rather identify problems that influence the performance quality of the system. Nonetheless, they are associated with, and bear on, the probability of occurrence of medical adverse events and their severity.

Using the same philosophy, we have recently been experimenting with a new reporting system. Rather than reporting incidents, accidents, and adverse events, we ask medical personnel in hospital wards to report difficulties and hazards in the conduct of their daily work and activities. Reports cover such aspects as working with forms, preparation and administration of drugs, physical setting, devices and equipment, and work structure. What the task analysis, the observational study, and the new reporting system have in common is that they assess the medical system from a general perspective, combining constructs from human performance research, Cognitive Psychology, and System Theory.
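A reporting system of this kind needs little more than a record type and a tally by category. The sketch below is a hypothetical schema: the category list mirrors the aspects named in the text, while the field names, the ward labels, and the example reports are invented for illustration. Counting reports per category shows which recurring difficulties dominate, which is the system-level picture the new reporting system aims for.

```python
from collections import Counter
from dataclasses import dataclass
from enum import Enum

# Hypothetical schema for a difficulty-and-hazard report. Categories follow the
# aspects listed in the text; field names and sample data are invented.


class Category(Enum):
    FORMS = "working with forms"
    DRUGS = "preparation and administration of drugs"
    PHYSICAL_SETTING = "physical setting"
    EQUIPMENT = "devices and equipment"
    WORK_STRUCTURE = "work structure"
    OTHER = "other"


@dataclass(frozen=True)
class HazardReport:
    ward: str
    category: Category
    description: str  # free-text account of the difficulty, not of an error


def tally_by_category(reports: list[HazardReport]) -> Counter:
    """Count reports per category so recurring difficulties stand out."""
    return Counter(r.category for r in reports)


reports = [
    HazardReport("ward A", Category.FORMS, "medication chart has no field for infusion rate"),
    HazardReport("ward A", Category.EQUIPMENT, "pump display unreadable from bedside"),
    HazardReport("ward B", Category.FORMS, "discharge form duplicates admission data"),
]

for category, count in tally_by_category(reports).most_common():
    print(f"{category.value}: {count}")
```

Because each report describes a difficulty rather than an error, staff are not asked to incriminate themselves, and the same tally can be repeated per ward or per period to track whether changes reduce the reported difficulties.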
In contrast to the study of errors, these methods (task analysis, direct observation of critical events, and difficulty-and-hazard reporting) are active and prospective, and they provide a systematic and representative analysis of system functioning. Furthermore, by linking the medical work domain with the generic context within which these scientific domains were developed, the medical profession can enrich its insights and capitalize on a well-developed toolbox. In conclusion, we believe that the time is ripe for a major investment in developing a new database built on system constructs and their associated methodologies. Such an investment may radically change the patient safety arena and lead to major improvement. It may also shift the general atmosphere and the public view of the topic away from the blame culture and towards a cooperative effort to develop a safer and more user-friendly system.

Acknowledgement

I am indebted to Sue Bogner and Yoel Donchin for their constructive comments on an earlier version of this paper.

References

1. Cooper, J.B., Newbower, R., & Kitz, R. (1984). An analysis of major errors and equipment failures in anesthesia management: Considerations for prevention and detection. Anesthesiology, 60, 34-42.
2. Leape, L.L., Brennan, T.A., Laird, N.M., Lawthers, A.G., et al. (1991). The nature of adverse events in hospitalized patients: Results of the Harvard Medical Practice Study II. New England Journal of Medicine, 324, 377-384.
3. Cook, R.I., Woods, D.D., & Miller, C. (Eds.) (1998). A Tale of Two Stories: Contrasting Views on Patient Safety. National Health Care Safety Council of the National Patient Safety Foundation at the AMA.
4. Kohn, L.T., Corrigan, J.M., & Donaldson, M.S. (Eds.) (2000). To Err Is Human: Building a Safer Health System. Washington, DC: National Academy Press.
5. Bogner, M.S. (Ed.) (1994). Human Error in Medicine. Hillsdale, NJ: Lawrence Erlbaum Associates.
6. Tversky, A., & Kahneman, D. (1981). The framing of decisions and the psychology of choice. Science, 211, 453-458.
7. Donchin, Y., Gopher, D., Olin, M., Badihi, Y., Biesky, M., Sprung, C.L., Pizov, R., & Cotev, S. (1995). A look into the nature and causes of human errors in the intensive care unit. Critical Care Medicine, 23(2), 294-300.
8. Studdert, D.M., Mello, M.M., & Brennan, T.A. (2004). Medical malpractice. New England Journal of Medicine, 350(3), 283-291.
9. Cook, R.I., Render, M., & Woods, D.D. (2000). Gaps in the continuity of care and progress in patient safety. British Medical Journal, 320(18), 791-794.
10. Wickens, C.D., & Hollands, J.G. (2000). Engineering Psychology and Human Performance. NJ: Prentice-Hall.