AMS 572 Lecture Note 7


AMS 572 Lecture Notes #10

October 28th, 2011

Ch. 9. Categorical Data Analysis

Quantitative R.V:

Numbers associated with the measurements are meaningful.

continuous R.V.: height, weight, IQ, age, etc.

discrete R.V.: time(day) , # successes, etc.

Qualitative R.V:

Numbers associated with the measurements are not meaningful.

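A natural categorical variable:

| Eye color | Code | Percentage | Count |
|-----------|------|------------|-------|
| Brown     | 1    | 60%        | 1200  |
| Blue      | 2    | 10%        | 200   |
| Green     | 3    | …          | …     |
| Gray      | 4    |            |       |
| Hazel     | 5    |            |       |
| Others    | 6    |            |       |
| Total     |      | 100%       | 2000  |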

Sometimes we categorize quantitative data.

e.g. Age group: Children (years): <17; Young adults: [17, 35];

Middle aged adults: [36, 55]; Elderly adults: >55
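The age grouping above can be written as a small lookup. This is a minimal Python sketch, assuming ages are recorded as whole years; the function name `age_group` is hypothetical and not part of the notes.

```python
def age_group(age: int) -> str:
    """Map an integer age in years to the age groups listed in the notes."""
    if age < 0:
        raise ValueError("age must be non-negative")
    if age < 17:
        return "Children"            # < 17
    if age <= 35:
        return "Young adults"        # [17, 35]
    if age <= 55:
        return "Middle aged adults"  # [36, 55]
    return "Elderly adults"          # > 55

ages = [5, 17, 35, 36, 55, 56]
groups = [age_group(a) for a in ages]
print(groups)
```

After this step the variable is categorical: only the group label is kept, and the original numeric age is no longer available for analysis.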


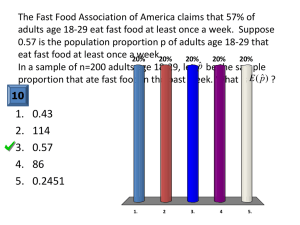

1. Inference on One Population Proportion

* A special categorical R.V. -- Binary Random Variables:

E.g. Jerry has nothing to do, so he decides to toss a coin 1000 times to see whether it is a fair coin. Of the 1000 tosses, he gets 510 heads and 490 tails. Is it a fair coin?

$$H_0: p = \tfrac{1}{2} \quad \text{vs.} \quad H_a: p \neq \tfrac{1}{2}$$

Here the outcome variables are $X_i = 1$ (heads) or $0$ (tails), $i = 1, 2, \ldots, 1000$.

The total number of heads $= X_1 + X_2 + \cdots + X_{1000}$ ($= 510$ in this example).

* Binomial Experiment and the Binomial Distribution:

Def: A binomial experiment consists of n trials. Each trial results in 1 of 2 possible outcomes, say “S” and “F”. The probability of obtaining an “S” remains the same from trial to trial, say p (so the probability of obtaining an “F” is 1 − p). The trials are independent (previous outcomes do not influence future outcomes).

# of “S” = X ~ Bin(n, p) (p is the population proportion)

Sample proportion: $\hat{p} = \dfrac{X}{n}$

$$P(X = x) = \binom{n}{x} p^x (1-p)^{n-x}, \quad x = 0, 1, 2, \ldots, n$$

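m.g.f. of X:

$$M_X(t) = E(e^{tX}) = \sum_{x=0}^{n} e^{tx} P(X = x) = \sum_{x=0}^{n} e^{tx} \binom{n}{x} p^x (1-p)^{n-x}$$

$$= \sum_{x=0}^{n} \binom{n}{x} (e^t p)^x (1-p)^{n-x} = (e^t p + 1 - p)^n$$

[ note: $(a+b)^n = \sum_{x=0}^{n} \binom{n}{x} a^x b^{n-x}$, Newton’s binomial theorem ]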
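As a numerical sanity check on the binomial p.m.f. and the closed-form m.g.f., the sketch below sums the series directly and compares it with $(e^t p + 1 - p)^n$. The values of n, p and t are arbitrary illustrations, and the helper names are hypothetical.

```python
from math import comb, exp, isclose

def binom_pmf(x: int, n: int, p: float) -> float:
    """P(X = x) for X ~ Bin(n, p)."""
    return comb(n, x) * p**x * (1 - p) ** (n - x)

def mgf_by_sum(t: float, n: int, p: float) -> float:
    """E(e^{tX}) computed term by term from the p.m.f."""
    return sum(exp(t * x) * binom_pmf(x, n, p) for x in range(n + 1))

def mgf_closed_form(t: float, n: int, p: float) -> float:
    """The closed form (e^t p + 1 - p)^n from the binomial theorem."""
    return (p * exp(t) + 1 - p) ** n

n, p, t = 12, 0.3, 0.7  # arbitrary illustrative values (assumption)
total_prob = sum(binom_pmf(x, n, p) for x in range(n + 1))
lhs = mgf_by_sum(t, n, p)
rhs = mgf_closed_form(t, n, p)
print(total_prob, lhs, rhs)
```

The probabilities should sum to 1 and the two m.g.f. values should agree up to floating-point rounding.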


E.g. Let $X \sim \mathrm{Bin}(n_1, p)$ and $Y \sim \mathrm{Bin}(n_2, p)$. Furthermore, X and Y are independent. What is the distribution of X + Y?

Solution: $M_{X+Y}(t) = M_X(t)\, M_Y(t) = [e^t p + (1-p)]^{n_1 + n_2}$

Hence, $X + Y \sim \mathrm{Bin}(n_1 + n_2, p)$.
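The same conclusion can be checked without the m.g.f. by convolving the two p.m.f.s directly. This is a minimal sketch with arbitrary illustrative values for $n_1$, $n_2$ and p; the helper names are hypothetical.

```python
from math import comb, isclose

def binom_pmf(x: int, n: int, p: float) -> float:
    """P(X = x) for X ~ Bin(n, p)."""
    return comb(n, x) * p**x * (1 - p) ** (n - x)

n1, n2, p = 4, 6, 0.3  # arbitrary illustrative values (assumption)

def pmf_of_sum(k: int) -> float:
    """P(X + Y = k) by convolution, using independence of X and Y."""
    return sum(
        binom_pmf(j, n1, p) * binom_pmf(k - j, n2, p)
        for j in range(max(0, k - n2), min(n1, k) + 1)
    )

matches = all(
    isclose(pmf_of_sum(k), binom_pmf(k, n1 + n2, p), rel_tol=1e-9)
    for k in range(n1 + n2 + 1)
)
print(matches)
```

Note that the result relies on both variables sharing the same p; with different success probabilities the sum is no longer binomial.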

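When n is large ($n \ge 30$):

$$\hat{p} = \frac{X}{n} = \frac{\sum_{i=1}^{n} X_i}{n} = \bar{X} \;\overset{\cdot}{\sim}\; N\!\left(p, \frac{p(1-p)}{n}\right), \text{ by CLT.}$$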

(Note: Here the random sample is X1, X2, … ,Xn : they are i.i.d. Bernoulli(p) R.V.’s.)
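To see how close the CLT approximation is, one can compare an exact binomial probability with its normal counterpart. The sketch below uses n = 1000 and p = 0.5 (the coin example) and evaluates $P(\hat{p} \le 0.51)$ both ways; no continuity correction is applied, which is a simplifying assumption.

```python
from math import comb, sqrt
from statistics import NormalDist

def binom_cdf(x: int, n: int, p: float) -> float:
    """Exact P(X <= x) for X ~ Bin(n, p)."""
    return sum(comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(x + 1))

n, p, x = 1000, 0.5, 510

# Exact probability that the number of heads is at most 510.
exact = binom_cdf(x, n, p)

# Normal approximation: p_hat ~ N(p, p(1-p)/n), evaluated at x/n.
approx = NormalDist(mu=p, sigma=sqrt(p * (1 - p) / n)).cdf(x / n)

print(round(exact, 4), round(approx, 4))
```

The two values differ by roughly one percentage point here; most of that gap comes from treating a discrete count as continuous.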

Bernoulli Distribution

Toss a coin and record the results: Head (H), H, Tail (T), T, T, H, …

Let

$$X_i = \begin{cases} 1, & \text{if the } i\text{th toss is a head} \\ 0, & \text{if the } i\text{th toss is a tail} \end{cases}$$

In the population, a proportion p of the tosses are heads.

$$X_i \sim \mathrm{Bernoulli}(p): \quad P(X_i = x_i) = p^{x_i} (1-p)^{1-x_i}, \quad x_i = 0, 1$$

(*Binomial distribution with n = 1)

Inference on p – the population proportion:

① Point estimator: $\hat{p} = \dfrac{X}{n} = \dfrac{\sum_{i=1}^{n} X_i}{n}$; $\hat{p}$ is the sample proportion and also the sample mean.

② Large sample inference on p:

$$\hat{p} \;\overset{\cdot}{\sim}\; N\!\left(p, \frac{p(1-p)}{n}\right)$$

③ Large sample (the original) pivotal quantity for the inference on p.

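$$Z = \frac{\hat{p} - p}{\sqrt{\dfrac{p(1-p)}{n}}} \;\overset{\cdot}{\sim}\; N(0,1)$$

Alternative P.Q.:

$$Z^* = \frac{\hat{p} - p}{\sqrt{\dfrac{\hat{p}(1-\hat{p})}{n}}} \;\overset{\cdot}{\sim}\; N(0,1)$$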

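④ Derive the $100(1-\alpha)\%$ large sample C.I. for p (using the alternative P.Q.):

$$Z^* = \frac{\hat{p} - p}{\sqrt{\dfrac{\hat{p}(1-\hat{p})}{n}}} \;\overset{\cdot}{\sim}\; N(0,1) \quad \text{if } n \text{ is large}$$

$$P(-z_{\alpha/2} \le Z^* \le z_{\alpha/2}) = 1 - \alpha$$

$$P\!\left(-z_{\alpha/2} \le \frac{\hat{p} - p}{\sqrt{\hat{p}(1-\hat{p})/n}} \le z_{\alpha/2}\right) = 1 - \alpha$$

$$P\!\left(-z_{\alpha/2}\sqrt{\frac{\hat{p}(1-\hat{p})}{n}} \le \hat{p} - p \le z_{\alpha/2}\sqrt{\frac{\hat{p}(1-\hat{p})}{n}}\right) = 1 - \alpha$$

$$P\!\left(\hat{p} - z_{\alpha/2}\sqrt{\frac{\hat{p}(1-\hat{p})}{n}} \le p \le \hat{p} + z_{\alpha/2}\sqrt{\frac{\hat{p}(1-\hat{p})}{n}}\right) = 1 - \alpha$$

Hence, the $100(1-\alpha)\%$ C.I. for p is

$$\hat{p} \pm z_{\alpha/2}\sqrt{\frac{\hat{p}(1-\hat{p})}{n}}.$$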
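The interval $\hat{p} \pm z_{\alpha/2}\sqrt{\hat{p}(1-\hat{p})/n}$ is short to compute. The sketch below applies it to Jerry's coin data (510 heads in 1000 tosses); the function name `large_sample_ci` is hypothetical, and the normal quantile comes from Python's `statistics.NormalDist` rather than a table.

```python
from math import sqrt
from statistics import NormalDist

def large_sample_ci(x: int, n: int, conf_level: float = 0.95):
    """Large-sample C.I. for p based on the alternative pivotal quantity."""
    p_hat = x / n
    # Upper alpha/2 critical value of N(0, 1), e.g. about 1.96 for 95%.
    z = NormalDist().inv_cdf(1 - (1 - conf_level) / 2)
    half_width = z * sqrt(p_hat * (1 - p_hat) / n)
    return p_hat - half_width, p_hat + half_width

lower, upper = large_sample_ci(510, 1000)
print(f"95% C.I. for p: [{lower:.4f}, {upper:.4f}]")
```

For the coin data the interval is roughly [0.479, 0.541]. It contains 0.5, so at the 5% level the data do not contradict a fair coin.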

⑤ Test:

$H_0: p = p_0$ vs. $H_a: p > p_0$

$H_0: p = p_0$ vs. $H_a: p < p_0$

$H_0: p = p_0$ vs. $H_a: p \neq p_0$

Test statistic (large sample – using the original P.Q.):

$$Z_0 = \frac{\hat{p} - p_0}{\sqrt{\dfrac{p_0(1-p_0)}{n}}} \;\overset{H_0}{\sim}\; N(0,1)$$

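At the significance level $\alpha$, we reject $H_0$ in favor of $H_a: p > p_0$ if $z_0 \ge z_\alpha$, because

$$P(\text{reject } H_0 \mid H_0) = P(Z_0 \ge C \mid H_0: p = p_0) = \alpha \;\Rightarrow\; C = z_\alpha.$$

At the significance level $\alpha$, we reject $H_0$ in favor of $H_a$ if p-value $< \alpha$.

Two-sided p-value $= 2\min\{P(Z_0 \ge z_0 \mid H_0),\; P(Z_0 \le z_0 \mid H_0)\}$.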

Note: One can use either the original or the alternative P.Q. for constructing the large

sample CI or performing the large sample test. The versions we presented here are the

most common choices.

E.g. A college has 500 women students and 1,000 men students. The introductory zoology

course has 90 students, 50 of whom are women. It is suspected that more women tend to take

zoology than men. Please test this suspicion at α =.05.

SOLUTION:

Inference on one population proportion, large sample.

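The hypotheses are:

$$H_0: p = 1/3 \quad \text{vs.} \quad H_a: p > 1/3$$

The test statistic is:

$$Z_0 = \frac{\hat{p} - p_0}{\sqrt{\dfrac{p_0(1-p_0)}{n}}} = \frac{50/90 - 1/3}{\sqrt{\dfrac{(1/3)(1 - 1/3)}{90}}} \approx 4.47$$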

Since 4.47 >1.645, we reject H0 at the significance level of 0.05 and claim that more

women tend to take zoology than men.
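The large-sample test can be sketched as one function that returns both $z_0$ and the p-value. The function name `one_proportion_z_test` and the `alternative` labels are hypothetical; the examples rerun the zoology data and the earlier coin data.

```python
from math import sqrt
from statistics import NormalDist

def one_proportion_z_test(x: int, n: int, p0: float, alternative: str = "two-sided"):
    """Large-sample z-test for H0: p = p0 using the original pivotal quantity."""
    p_hat = x / n
    z0 = (p_hat - p0) / sqrt(p0 * (1 - p0) / n)
    phi = NormalDist().cdf(z0)          # P(Z0 <= z0 | H0)
    if alternative == "greater":        # Ha: p > p0
        p_value = 1 - phi
    elif alternative == "less":         # Ha: p < p0
        p_value = phi
    elif alternative == "two-sided":    # Ha: p != p0
        p_value = 2 * min(phi, 1 - phi)
    else:
        raise ValueError("alternative must be 'greater', 'less' or 'two-sided'")
    return z0, p_value

# Zoology example: 50 women among 90 students, H0: p = 1/3 vs. Ha: p > 1/3.
z_zoo, p_zoo = one_proportion_z_test(50, 90, 1 / 3, alternative="greater")

# Coin example: 510 heads in 1000 tosses, H0: p = 1/2 vs. Ha: p != 1/2.
z_coin, p_coin = one_proportion_z_test(510, 1000, 0.5)

print(round(z_zoo, 2), p_zoo)
print(round(z_coin, 2), round(p_coin, 3))
```

The zoology statistic is about 4.47, giving a p-value far below 0.05. The coin statistic is about 0.63 with a two-sided p-value near 0.53, so fairness is not rejected.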


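⑥ Sample size determination based on the maximum error E or the length of the C.I., L:

$$L = \hat{p} + z_{\alpha/2}\sqrt{\frac{\hat{p}(1-\hat{p})}{n}} - \left(\hat{p} - z_{\alpha/2}\sqrt{\frac{\hat{p}(1-\hat{p})}{n}}\right) = 2 z_{\alpha/2}\sqrt{\frac{\hat{p}(1-\hat{p})}{n}}$$

$$n = \frac{4 z_{\alpha/2}^2\, \hat{p}(1-\hat{p})}{L^2} \le \frac{4 z_{\alpha/2}^2 \cdot \frac{1}{2}\left(1 - \frac{1}{2}\right)}{L^2} = \left(\frac{z_{\alpha/2}}{L}\right)^2$$

Maximum error E:

$$P(|\hat{p} - p| \le E) = 1 - \alpha \;\Rightarrow\; E = L/2$$

$$n = \frac{4 z_{\alpha/2}^2\, \hat{p}(1-\hat{p})}{(2E)^2} = \frac{z_{\alpha/2}^2\, \hat{p}(1-\hat{p})}{E^2} \le \frac{z_{\alpha/2}^2}{4E^2}$$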

E.g. Thanksgiving was coming up and Harvey's Turkey Farm was doing a land-office business.

Harvey sold 100 gobblers to Nedicks for their famous Turkey-dogs. Nedicks found that 90 of

Harvey's turkeys were in reality peacocks.

(a) Estimate the proportion of peacocks at Harvey's Turkey Farm and find a 95% confidence

interval for the true proportion of turkeys that Harvey owns.

Solution: Let p be the proportion of peacocks and q be the proportion of turkeys.

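$$\hat{p} = \frac{90}{100} = 0.9, \quad \hat{q} = 1 - \hat{p} = 0.1 \quad \text{and} \quad n = 100.$$

The 95% C.I. on q is

$$\hat{q} \pm z_{\alpha/2}\sqrt{\frac{\hat{q}(1-\hat{q})}{n}} = 0.1 \pm 1.96\sqrt{\frac{0.1 \times 0.9}{100}} = [0.0412,\ 0.1588].$$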

(b) How large a random sample should we select from Harvey's Farm to guarantee the length of

the 95% confidence interval to be no more than 0.06? (Note: please first derive the general

formula for sample size calculation based on the length of the CI for inference on one

population proportion, large sample situation. Please give the formula for the two cases: (i) we


have an estimate of the proportion and (ii) we do not have an estimate of the proportion to be

estimated. (iii) Finally, please plug in the numerical values and obtain the sample size for this

particular problem.)

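Solution: $L = 0.06$, $E = L/2 = 0.03$.

(i) With an estimate $\hat{q}$ of the proportion:

$$E = z_{\alpha/2}\sqrt{\frac{\hat{q}(1-\hat{q})}{n}} \;\Rightarrow\; n = \frac{(z_{\alpha/2})^2}{E^2}\,\hat{q}(1-\hat{q}) = \left(\frac{1.96}{0.03}\right)^2 \times 0.1 \times 0.9 = 384.16, \text{ so } n = 385.$$

(ii) If q is unknown, use the upper bound

$$q(1-q) = \frac{1}{4} - \left(q - \frac{1}{2}\right)^2 \le \frac{1}{4},$$

so that

$$n = \frac{4 (z_{\alpha/2})^2}{L^2} \cdot \frac{1}{4} = \left(\frac{z_{\alpha/2}}{L}\right)^2.$$

(iii) Plugging in the numerical values for case (ii):

$$n = \left(\frac{1.96}{0.06}\right)^2 = 1067.11, \text{ so } n = 1068.$$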
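The two sample-size cases can be reproduced in a few lines. This sketch uses the table value $z_{0.025} = 1.96$ as in the notes; the function name `n_for_ci_length` and the `p_guess` parameter are hypothetical.

```python
from math import ceil

def n_for_ci_length(length: float, z: float, p_guess=None) -> int:
    """Smallest n so that the large-sample C.I. has length at most `length`.

    If no estimate of the proportion is available, the worst case
    p(1 - p) = 1/4 is used.
    """
    pq = 0.25 if p_guess is None else p_guess * (1 - p_guess)
    return ceil(4 * z**2 * pq / length**2)

z = 1.96  # table value for a 95% interval, as in the notes
n_with_estimate = n_for_ci_length(0.06, z, p_guess=0.1)  # case (i)
n_worst_case = n_for_ci_length(0.06, z)                   # case (ii)
print(n_with_estimate, n_worst_case)
```

Rounding up with `ceil` matters: rounding 384.16 down to 384 would leave the interval slightly longer than 0.06.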

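⑦ Power of the test, at the significance level $\alpha$:

$$H_0: p = p_0 \quad \text{vs.} \quad H_a: p = p_1 > p_0$$

At the significance level $\alpha$, we reject $H_0$ if $Z_0 \ge z_\alpha$.

$$\beta = P(\text{fail to reject } H_0 \mid H_a)$$

$$\text{Power} = 1 - \beta = P(\text{reject } H_0 \mid H_a) = P(Z_0 \ge z_\alpha \mid H_a: p = p_1 > p_0)$$

$$= P\!\left(\frac{\hat{p} - p_0}{\sqrt{p_0(1-p_0)/n}} \ge z_\alpha \,\middle|\, p = p_1\right)$$

$$= P\!\left(\hat{p} - p_1 \ge z_\alpha\sqrt{\frac{p_0(1-p_0)}{n}} + p_0 - p_1 \,\middle|\, p_1\right)$$

$$= P\!\left(\frac{\hat{p} - p_1}{\sqrt{p_1(1-p_1)/n}} \ge \frac{p_0 - p_1}{\sqrt{p_1(1-p_1)/n}} + z_\alpha\sqrt{\frac{p_0(1-p_0)}{p_1(1-p_1)}} \,\middle|\, p_1\right)$$

$$= 1 - \Phi\!\left(\frac{p_0 - p_1}{\sqrt{p_1(1-p_1)/n}} + z_\alpha\sqrt{\frac{p_0(1-p_0)}{p_1(1-p_1)}}\right)$$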
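The final power expression translates directly into code. The sketch below is for the upper-tailed test only; the function name `power_upper_tailed` and the numerical values ($p_0 = 0.5$, $p_1 = 0.55$, n = 400 and 800) are illustrative assumptions, not taken from the notes.

```python
from math import sqrt
from statistics import NormalDist

def power_upper_tailed(p0: float, p1: float, n: int, alpha: float = 0.05) -> float:
    """Large-sample power of the test H0: p = p0 vs. Ha: p = p1 > p0."""
    std_normal = NormalDist()
    z_alpha = std_normal.inv_cdf(1 - alpha)  # upper alpha critical value
    shift = (p0 - p1) / sqrt(p1 * (1 - p1) / n)
    scale = sqrt(p0 * (1 - p0) / (p1 * (1 - p1)))
    return 1 - std_normal.cdf(shift + z_alpha * scale)

power_400 = power_upper_tailed(0.5, 0.55, 400)
power_800 = power_upper_tailed(0.5, 0.55, 800)
# When p1 equals p0 the formula reduces to the significance level alpha.
size_check = power_upper_tailed(0.5, 0.5, 400)
print(round(power_400, 3), round(power_800, 3), round(size_check, 3))
```

Doubling the sample size raises the power noticeably, and setting $p_1 = p_0$ returns $\alpha$, which is a quick consistency check on the derivation.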

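⑧ Sample size calculation with power.

a) $H_0: p = p_0$ vs. $H_a: p = p_1 > p_0$, significance level $\alpha$, power $= 1 - \beta$

$$-z_\beta = \frac{p_0 - p_1}{\sqrt{p_1(1-p_1)/n}} + z_\alpha\sqrt{\frac{p_0(1-p_0)}{p_1(1-p_1)}}$$

$$n = \left[\frac{\left(z_\alpha\sqrt{\dfrac{p_0(1-p_0)}{p_1(1-p_1)}} + z_\beta\right)\sqrt{p_1(1-p_1)}}{p_1 - p_0}\right]^2 = \left[\frac{z_\alpha\sqrt{p_0(1-p_0)} + z_\beta\sqrt{p_1(1-p_1)}}{p_1 - p_0}\right]^2$$

b) $H_0: p = p_0$ vs. $H_a: p = p_1 < p_0$, significance level $\alpha$, power $= 1 - \beta$

$$n = \left[\frac{z_\alpha\sqrt{p_0(1-p_0)} + z_\beta\sqrt{p_1(1-p_1)}}{p_1 - p_0}\right]^2$$

c) $H_0: p = p_0$ vs. $H_a: p = p_1 \neq p_0$, significance level $\alpha$, power $= 1 - \beta$

$$n = \left[\frac{z_{\alpha/2}\sqrt{p_0(1-p_0)} + z_\beta\sqrt{p_1(1-p_1)}}{p_1 - p_0}\right]^2$$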
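A minimal sketch of the one-sided sample-size formula (cases a and b), followed by a check that the resulting n really achieves the target power. The function name and the example values ($p_0 = 0.5$, $p_1 = 0.55$, $\alpha = 0.05$, power 0.80) are assumptions for illustration.

```python
from math import ceil, sqrt
from statistics import NormalDist

def n_for_power_one_sided(p0: float, p1: float, alpha: float = 0.05, power: float = 0.80) -> int:
    """Sample size for a one-sided test of H0: p = p0 against p = p1."""
    std_normal = NormalDist()
    z_alpha = std_normal.inv_cdf(1 - alpha)
    z_beta = std_normal.inv_cdf(power)  # upper beta critical value, beta = 1 - power
    root_n = (z_alpha * sqrt(p0 * (1 - p0)) + z_beta * sqrt(p1 * (1 - p1))) / abs(p1 - p0)
    return ceil(root_n**2)

p0, p1 = 0.5, 0.55
n = n_for_power_one_sided(p0, p1)

# Plug n back into the power expression for the upper-tailed test.
z_alpha = NormalDist().inv_cdf(0.95)
achieved = NormalDist().cdf(
    ((p1 - p0) * sqrt(n) - z_alpha * sqrt(p0 * (1 - p0))) / sqrt(p1 * (1 - p1))
)
print(n, round(achieved, 4))
```

Because n is rounded up, the achieved power is slightly above the target. For the two-sided case c), replace $z_\alpha$ with $z_{\alpha/2}$.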

By the usual CLT convention, a large sample means $n \ge 30$. However, a more accurate criterion for inference on one population proportion p is:

$$x \ge 5, \quad n - x \ge 5$$

(*some books use a more conservative threshold of 10)

E.g. A pre-election poll is to be planned for a senatorial election between two

candidates. Previous polls have shown that the election is hanging in delicate balance. If

there is a shift (in either direction) by more than 2 percentage points since the last poll,

then the polling agency would like to detect it with probability of at least 0.80 using a

0.05-level test. Determine how many voters should be polled. If actually 2500 voters are

polled, what is the value of this probability?

Solution:

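For a power of at least $1 - \beta = 0.8$ in detecting a 2 percentage point shift from equally favored candidates ($p_0 = 0.5$, $|p_1 - p_0| = 0.02$),

$$n = \left[\frac{z_{\alpha/2}\sqrt{p_0 q_0} + z_\beta\sqrt{p_1 q_1}}{p_1 - p_0}\right]^2 = \left[\frac{1.96\sqrt{(0.5)(0.5)} + 0.84\sqrt{(0.48)(0.52)}}{0.02}\right]^2 = 4897.6 \text{ or } 4898$$

If 2500 voters are actually sampled, the power at $p_1 = 0.52$ would be

$$\Phi\!\left(\frac{(p_1 - p_0)\sqrt{n} - z_{\alpha/2}\sqrt{p_0 q_0}}{\sqrt{p_1 q_1}}\right) = \Phi\!\left(\frac{0.02\sqrt{2500} - 1.96\sqrt{(0.5)(0.5)}}{\sqrt{(0.52)(0.48)}}\right) = \Phi(0.040) = 0.5160$$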
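The poll arithmetic can be reproduced as follows. The sketch deliberately uses the rounded table values 1.96 and 0.84 from the notes; with unrounded normal quantiles the required n comes out a few voters larger.

```python
from math import ceil, sqrt
from statistics import NormalDist

# Rounded table values, as used in the notes.
z_half_alpha, z_beta = 1.96, 0.84
p0, p1, delta = 0.5, 0.52, 0.02

# Two-sided sample size for power 0.80 at a 2 percentage point shift.
n_exact = ((z_half_alpha * sqrt(p0 * (1 - p0)) + z_beta * sqrt(p1 * (1 - p1))) / delta) ** 2
n_needed = ceil(n_exact)

# Power if only 2500 voters are polled.
n_polled = 2500
arg = (delta * sqrt(n_polled) - z_half_alpha * sqrt(p0 * (1 - p0))) / sqrt(p1 * (1 - p1))
power_2500 = NormalDist().cdf(arg)

print(round(n_exact, 1), n_needed, round(power_2500, 4))
```

With about half the required sample, the chance of detecting a 2 point shift drops to roughly 52%, barely better than a coin toss.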

Exact test on one population proportion:

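Data: a sample of size n; X is the # of “Successes”, n − X is the # of “Failures”.

$$X \sim B(n, p), \quad P(X = x) = \binom{n}{x} p^x (1-p)^{n-x}, \quad x = 0, 1, 2, \ldots, n$$

(1) $H_0: p = p_0$ vs. $H_a: p > p_0$

$$\text{p-value} = P(X \ge x \mid H_0: p = p_0) = \sum_{i=x}^{n} \binom{n}{i} p_0^{\,i} (1-p_0)^{n-i}$$

(2) $H_0: p = p_0$ vs. $H_a: p < p_0$

$$\text{p-value} = P(X \le x \mid H_0: p = p_0) = \sum_{i=0}^{x} \binom{n}{i} p_0^{\,i} (1-p_0)^{n-i}$$

(3) $H_0: p = p_0$ vs. $H_a: p \neq p_0$

$$\text{p-value} = 2\min\{P(X \ge x \mid H_0),\; P(X \le x \mid H_0)\}.$$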
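The three exact p-values are finite binomial sums, so they can be computed directly. In this sketch the function name `exact_p_values` is hypothetical, and the cap of the two-sided value at 1 is an added safeguard that does not appear in the notes.

```python
from math import comb

def exact_p_values(x: int, n: int, p0: float) -> dict:
    """Exact binomial p-values for H0: p = p0 under the three alternatives."""
    def pmf(i: int) -> float:
        return comb(n, i) * p0**i * (1 - p0) ** (n - i)

    upper = sum(pmf(i) for i in range(x, n + 1))  # P(X >= x | H0)
    lower = sum(pmf(i) for i in range(0, x + 1))  # P(X <= x | H0)
    # Doubling rule from the notes, capped at 1 (the cap is an assumption).
    two_sided = min(1.0, 2 * min(upper, lower))
    return {"greater": upper, "less": lower, "two-sided": two_sided}

# Small illustration: 8 successes in 10 trials, p0 = 0.5.
small = exact_p_values(8, 10, 0.5)

# Coin example: 510 heads in 1000 tosses, p0 = 0.5.
coin = exact_p_values(510, 1000, 0.5)

print(small)
print(round(coin["two-sided"], 4))
```

For 8 successes in 10 trials the upper-tail p-value is 56/1024, about 0.0547. For the coin data the exact two-sided p-value is close to the large-sample result, as expected with n = 1000.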
