Faculty of Sciences and Mathematics, University of Niš, Serbia

Available at: http://www.pmf.ni.ac.yu/filomat

Filomat 21:2 (2007), 285–290

A TRUST REGION ALGORITHM FOR $LC^1$ UNCONSTRAINED OPTIMIZATION

Nada Đuranović-Miličić

Abstract: In this paper we consider a trust region algorithm for the minimization of locally Lipschitzian functions that uses the second order Dini upper directional derivative. A convergence proof is given, as well as an estimate of the rate of convergence.

Keywords and phrases: Directional derivative, second order Dini upper directional derivative, uniformly convex functions, trust region algorithm.

2000 Mathematics Subject Classification: 65K05, 90C30.

Received: November 21, 2006.

1. INTRODUCTION

We shall consider the following $LC^1$ problem of unconstrained optimization

$$\min \{ f(x) \mid x \in D \subseteq \mathbb{R}^n \}, \tag{1}$$

where $f : D \subseteq \mathbb{R}^n \to \mathbb{R}$ is an $LC^1$ function on the open convex set $D$; that is, the objective function we want to minimize is continuously differentiable and its gradient $\nabla f$ is locally Lipschitzian, i.e.

$$\|\nabla f(x) - \nabla f(y)\| \le L \|x - y\| \quad \text{for } x, y \in D$$

for some $L > 0$.
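As a small illustration (an example chosen here, not taken from the paper), the following one-dimensional function is $LC^1$ but not $C^2$:

```latex
f(x) = \tfrac{1}{2} \max\{x, 0\}^2, \qquad
f'(x) = \max\{x, 0\}, \qquad
|f'(x) - f'(y)| \le |x - y| \quad \text{for all } x, y \in \mathbb{R}.
```

Here the derivative is Lipschitzian with $L = 1$, while the second derivative does not exist at $x = 0$.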

We shall present an iterative algorithm, based on the results from [2] and [3], for finding an optimal solution to problem (1). It generates a sequence of points $\{x_k\}$ of the following form:

$$x_{k+1} = x_k + d_k, \quad k = 0, 1, \ldots, \quad d_k \ne 0, \tag{2}$$

where the direction vector $d_k$ is defined by the particular algorithm.

The algorithm which we are going to present is a trust region algorithm, which uses the second order Dini upper directional derivative instead of the Hessian in the quadratic approximation model.

The convergence of trust region algorithms has usually been proved for $C^2$ functions, i.e. for twice continuously differentiable functions (see for example [2]). The aim of this paper is to establish convergence under the weaker assumption $f \in LC^1$.

2. PRELIMINARIES

We shall give some preliminaries that will be used in the remainder of the paper.

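Definition (see [3]). The second order Dini upper directional derivative of the function $f \in LC^1$ at $x_k \in \mathbb{R}^n$ in the direction $d \in \mathbb{R}^n$ is defined to be

$$f''_D(x_k; d) = \limsup_{\lambda \downarrow 0} \frac{[\nabla f(x_k + \lambda d) - \nabla f(x_k)]^T d}{\lambda}.$$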

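If $\nabla f$ is directionally differentiable at $x_k$, we have

$$f''_D(x_k; d) = f''(x_k; d) = \lim_{\lambda \downarrow 0} \frac{[\nabla f(x_k + \lambda d) - \nabla f(x_k)]^T d}{\lambda}$$

for all $d \in \mathbb{R}^n$.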
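The difference quotient in the definition can be explored numerically. The following is a minimal sketch, assuming an illustrative objective $f(x) = \frac{1}{2}\|x\|^2 + \frac{1}{2}\sum_i \max\{x_i, 0\}^2$ (chosen here, not taken from the paper) and a hypothetical helper `dini_second_estimate` that replaces the lim sup by the largest quotient over a few small step sizes.

```python
import numpy as np

def grad_f(x):
    # Gradient of the illustrative function
    # f(x) = 0.5*||x||^2 + 0.5*sum(max(x_i, 0)^2); it is Lipschitzian but not differentiable at x_i = 0.
    return x + np.maximum(x, 0.0)

def dini_second_estimate(grad, x, d, lams=(1e-4, 1e-5, 1e-6)):
    # Stand-in for the lim sup: take the largest difference quotient
    # [grad(x + lam*d) - grad(x)]^T d / lam over a few small lam > 0.
    return max(float((grad(x + lam * d) - grad(x)) @ d) / lam for lam in lams)

x = np.zeros(2)  # a point where grad_f has a kink
up = dini_second_estimate(grad_f, x, np.array([1.0, 0.0]))
down = dini_second_estimate(grad_f, x, np.array([-1.0, 0.0]))
print(up, down)  # roughly 2 and 1: the value depends on the direction, not only on its length
```

The two directions have the same norm but give different values, which is why the model built on $f''_D$ is in general not a quadratic form in $d$.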

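Lemma 1 (see [3]). Let $f : D \subseteq \mathbb{R}^n \to \mathbb{R}$ be an $LC^1$ function on $D$, where $D \subseteq \mathbb{R}^n$ is an open subset. If $x$ is a solution of the $LC^1$ optimization problem (1), then

$$f'(x; d) = 0 \quad \text{and} \quad f''_D(x; d) \ge 0, \quad \forall d \in \mathbb{R}^n.$$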

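Lemma 2 (see [3]). Let $f : D \subseteq \mathbb{R}^n \to \mathbb{R}$ be an $LC^1$ function on $D$, where $D \subseteq \mathbb{R}^n$ is an open subset. If $x$ satisfies

$$f'(x; d) = 0 \quad \text{and} \quad f''_D(x; d) > 0, \quad \forall d \ne 0, \ d \in \mathbb{R}^n,$$

then $x$ is a strict local minimizer of (1).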

3. THE OPTIMIZATION ALGORITHM

At the $k$-th iteration we want to find a solution $d_k$ of the following direction finding subproblem

$$\min \ \phi_k(d) = \nabla f(x_k)^T d + \frac{1}{2} f''_D(x_k; d) \tag{3}$$

$$\text{s.t. } \|d\| \le \Delta_k$$

for some bound $\Delta_k > 0$, where the norm is arbitrary, but is usually chosen as the $l_2$ norm. We choose the radius $\Delta_k$ to be as large as possible depending on the agreement between $\phi_k(d_k)$ and $f(x_k + d_k)$. We can measure this agreement by comparing the actual reduction in $f$ on the $k$-th step

$$\mathrm{Ared}_k = \Delta f_k = f(x_k) - f(x_k + d_k)$$

and the corresponding predicted reduction

$$\mathrm{Pred}_k = \Delta \phi_k = \phi_k(0) - \phi_k(d_k).$$

Hence, the closer the ratio

$$\rho_k = \frac{\mathrm{Ared}_k}{\mathrm{Pred}_k}$$

is to unity, the better is the agreement between the quadratic model $\phi_k(d)$ and $f(x_k + d_k)$.

The trust region algorithm for LC1 optimization

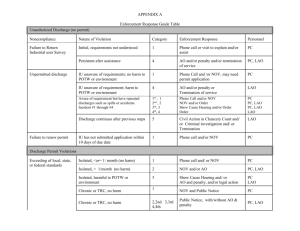

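Step 0. Given $x_0 \in D$, $\varepsilon \ge 0$, $\Delta_0 > 0$, $0 < \mu_1 < \mu_2 < 1$, $k = 0$;

Step 1. If $\|\nabla f(x_k)\| \le \varepsilon$, STOP; otherwise find a solution $d_k$ to the subproblem (3);

Step 2. Compute $f(x_k + d_k)$ and $\rho_k$;

Step 3.

$$\Delta_{k+1} = \begin{cases} \gamma_1 \|d_k\| & \text{if } \rho_k < \mu_1; \\ \gamma_2 \Delta_k & \text{if } \rho_k > \mu_2 \text{ and } \|d_k\| = \Delta_k; \\ \Delta_k & \text{otherwise.} \end{cases}$$

Step 4. If $\rho_k \le 0$, set $x_{k+1} = x_k$; otherwise $x_{k+1} = x_k + d_k$;

Step 5. Set $k := k + 1$, go to Step 1.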
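The steps above can be sketched in Python as follows. This is only an illustration under stated assumptions, not the author's implementation: the subproblem is solved inexactly by minimizing the model along the steepest descent direction (the algorithm itself asks for a solution of (3)), the lim sup in the Dini derivative is replaced by a single small difference quotient, and the names `dini_second`, `model_step`, `trust_region`, the constants `mu1`, `mu2`, `gamma1`, `gamma2` and the test objective are all hypothetical choices.

```python
import numpy as np

def dini_second(grad, x, d, lam=1e-6):
    """Difference-quotient estimate of f''_D(x; d) (one small lam replaces the lim sup)."""
    norm_d = np.linalg.norm(d)
    if norm_d == 0.0:
        return 0.0
    u = d / norm_d  # f''_D(x; .) is positively homogeneous of degree 2, so scale a unit direction
    return norm_d**2 * float((grad(x + lam * u) - grad(x)) @ u) / lam

def model_step(grad, x, delta):
    """Minimize the model along -grad f(x) inside ||d|| <= delta (an inexact subproblem solve)."""
    g = grad(x)
    g_norm = np.linalg.norm(g)
    curv = dini_second(grad, x, -g)  # along d = -t*g the model is -t*||g||^2 + 0.5*t^2*curv
    t_max = delta / g_norm
    t = min(g_norm**2 / curv, t_max) if curv > 0 else t_max
    return -t * g

def trust_region(f, grad, x0, delta0=1.0, eps=1e-8, mu1=0.25, mu2=0.75,
                 gamma1=0.25, gamma2=2.0, max_iter=500):
    x, delta = np.asarray(x0, dtype=float), delta0
    for _ in range(max_iter):
        g = grad(x)
        if np.linalg.norm(g) <= eps:                     # Step 1: stopping test
            break
        d = model_step(grad, x, delta)
        pred = -(g @ d + 0.5 * dini_second(grad, x, d))  # predicted reduction: model(0) - model(d)
        ared = f(x) - f(x + d)                           # Step 2: actual reduction
        rho = ared / pred
        d_norm = np.linalg.norm(d)
        if rho < mu1:                                    # Step 3: radius update
            delta = gamma1 * d_norm
        elif rho > mu2 and np.isclose(d_norm, delta):
            delta = gamma2 * delta
        if rho > 0:                                      # Step 4: accept or reject the step
            x = x + d
    return x

# Illustrative LC^1 objective (not C^2 where a coordinate is zero); its minimizer is the origin.
f = lambda x: 0.5 * x @ x + 0.5 * np.sum(np.maximum(x, 0.0) ** 2)
grad_f = lambda x: x + np.maximum(x, 0.0)
x_star = trust_region(f, grad_f, [2.0, -3.0])
print(x_star)
```

The steepest descent step is used only because it is easy to compute in closed form; any routine returning a better approximate minimizer of the model inside the trust region could be substituted for `model_step`.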

Proposition. If the function $f \in LC^1$ satisfies the condition

$$c_1 \|d\|^2 \le f''_D(x; d) \le c_2 \|d\|^2, \tag{4}$$

then: 1) the function $f$ is uniformly and, hence, strictly convex; consequently, 2) the level set $L(x_0) = \{x \in D : f(x) \le f(x_0)\}$ is a compact convex set for any $x_0 \in D$; and 3) there exists a unique point $x^*$ such that $f(x^*) = \min_{x \in L(x_0)} f(x)$.

Proof. See [1].
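To make condition (4) concrete, consider the following worked example (chosen here for illustration, not taken from [1]), with the $l_2$ norm:

```latex
f(x) = \tfrac{1}{2}\|x\|^2 + \tfrac{1}{2}\sum_{i=1}^{n} \max\{x_i, 0\}^2,
\qquad
\nabla f(x)_i = x_i + \max\{x_i, 0\},
\\[4pt]
f''_D(x; d) = \sum_{i=1}^{n} \bigl(1 + s_i(x, d)\bigr)\, d_i^2,
\qquad
s_i(x, d) =
\begin{cases}
1 & \text{if } x_i > 0, \text{ or } x_i = 0 \text{ and } d_i > 0, \\
0 & \text{otherwise},
\end{cases}
\\[4pt]
\|d\|^2 \le f''_D(x; d) \le 2\,\|d\|^2 .
```

So this function satisfies (4) with $c_1 = 1$ and $c_2 = 2$, although it is not twice differentiable at points with a zero coordinate.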

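Lemma 3.

$$\mathrm{Pred}_k = \phi_k(0) - \phi_k(d_k) \ge \frac{\|\nabla f(x_k)\|}{2} \min\left\{ \Delta_k, \frac{\|\nabla f(x_k)\|}{c_2} \right\}.$$

The proof is analogous to the proof of Lemma 5.2 in [3].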
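For orientation, a bound of this form can be obtained by the usual steepest descent comparison. The following is a sketch under the assumptions that the norm is the $l_2$ norm, that the upper bound in (4) holds, and that $\phi_k$ denotes the model in (3) with trust region radius $\Delta_k$; it is not claimed to be the argument of [3]. Write $g_k = \nabla f(x_k) \ne 0$ and use the positive homogeneity $f''_D(x_k; t d) = t^2 f''_D(x_k; d)$ for $t > 0$:

```latex
\phi_k(0) - \phi_k(-t g_k)
  = t \|g_k\|^2 - \tfrac{1}{2} t^2 f''_D(x_k; -g_k)
  \ge t \|g_k\|^2 \left( 1 - \tfrac{1}{2} c_2 t \right),
  \qquad 0 < t \le \frac{\Delta_k}{\|g_k\|},
\\[4pt]
t^* = \min\left\{ \frac{\Delta_k}{\|g_k\|}, \frac{1}{c_2} \right\}
  \;\Longrightarrow\;
  \phi_k(0) - \phi_k(-t^* g_k)
  \ge \tfrac{1}{2} t^* \|g_k\|^2
  = \frac{\|g_k\|}{2} \min\left\{ \Delta_k, \frac{\|g_k\|}{c_2} \right\}.
```

Since $d_k$ solves (3) and $-t^* g_k$ is feasible, $\phi_k(d_k) \le \phi_k(-t^* g_k)$, which gives the stated inequality.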

Convergence theorem. If the function $f \in LC^1$ satisfies the condition (4) and if any level set $\{x \in D \mid f(x) \le f(x_k)\}$ is bounded, then for any initial point $x_0 \in D$, $\rho_k \ge \mu_1$, $\inf \Delta_k > 0$ and $x_k \to \bar{x}$ as $k \to \infty$, where $\bar{x}$ is the unique minimal point.

Proof. The algorithm generates a subsequence $\{x_k\}$, $k \in K$, for which either

a) $\rho_k < \mu_1$ and $\Delta_{k+1} \to 0$ (and hence $\|d_k\| \to 0$), or

b) $\rho_k \ge \mu_1$ and $\inf \Delta_k > 0$.

From the boundedness of the level sets $\{x \in D \mid f(x) \le f(x_k)\}$ it follows that $\{x_k\}$, $k \in K$, belongs to a bounded set. Hence there exist accumulation points of $\{x_k\}$, $k \in K$. Let $\bar{x}$ be any accumulation point of such a subsequence. We only have to prove that $\nabla f(\bar{x}) = 0$ since, according to the Proposition, the stationary point is a unique minimizer.

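We shall show that $\bar{x}$ cannot arise from case a) but only from case b). Namely, since $f \in LC^1$, from (3) it follows that there exists a sequence $\{\varepsilon_k\}$ (see [3]) converging to zero such that

$$f(x_k) - f(x_k + d_k) = \phi_k(0) - \phi_k(d_k) + \varepsilon_k \Delta_k,$$

i.e.

$$\mathrm{Ared}_k = \mathrm{Pred}_k + \varepsilon_k \Delta_k. \tag{5}$$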

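Dividing (5) by $\mathrm{Pred}_k$ and taking the limit as $k \to \infty$, $k \in K$, gives $\rho_k \to 1$. Hence $\rho_k \ge \mu_1$ and $\inf \Delta_k > 0$, so $\bar{x}$ can arise only from case b). In case b),

$$f(x_0) - f(\bar{x}) \ge \sum_{k \in K} \Delta f_k$$

implies $\Delta f_k \to 0$, since $f(x_0) - f(\bar{x})$ is constant. Hence $\Delta \phi_k \to 0$, since $\rho_k \ge \mu_1$.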

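From Lemma 3 it follows that

$$\mathrm{Pred}_k = \phi_k(0) - \phi_k(d_k) \ge \frac{\|\nabla f(x_k)\|}{2} \min\left\{ \Delta_k, \frac{\|\nabla f(x_k)\|}{c_2} \right\}.$$

Since $\Delta \phi_k \to 0$ and $\inf \Delta_k > 0$, it follows that $\|\nabla f(x_k)\| \to 0$. From the continuity of $\nabla f$ we have that $\nabla f(\bar{x}) = 0$. Following Theorem 4.4 from [3], it can be analogously proved that $\{x_k\}$ converges Q-superlinearly to $\bar{x}$.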

4. CONCLUSION

In this paper we presented a trust region algorithm which is based on the results from [2] and [3]. We proved the convergence of the trust region method for the $LC^1$ class of functions, using the second order Dini upper directional derivative in place of the Hessian that appears in the quadratic approximation model of [2]. In [2] the convergence proof of the trust region algorithm is given for $f \in C^2$, hence under stronger assumptions. In [3] a trust region algorithm is also defined for $LC^1$ functions. It uses the exact solution $d_k$ and an inexact solution $\bar{d}_k$ of the subproblem (3), and it requires that the decrease in $\phi_k(\bar{d}_k)$ be at least a fraction of the optimal decrease in $\phi_k(d_k)$. In [3] the convergence proof is given under slightly stronger assumptions than in this paper.

5. ACKNOWLEDGMENT

This research was supported by the Science Fund of Serbia, grant number 144015G, through the Institute of Mathematics, SANU.

REFERENCES

[1] Djuranovic-Milicic, N., On minimization of locally Lipschitzian functions, Proceedings of SYM-OP-IS 2003, H. Novi, 347–350, 2003.

[2] Fletcher, R., Practical methods of optimization, Vol. 1, J. Wiley, New York, 1980.

[3] Sun, V.J., Sampaio, R.J.B., and Yuan, Y.J., Two algorithms for LC1 unconstrained optimization, Journal of Computational Mathematics 6, 621–632, 2000.

[4] Vujčić, V., Ašić, M., and Miličić, N., Mathematical programming (in Serbian), Institute of Mathematics, Belgrade, 1980.

Department of Mathematics, Faculty of Technology and Metallurgy, University of Belgrade, Belgrade, Serbia