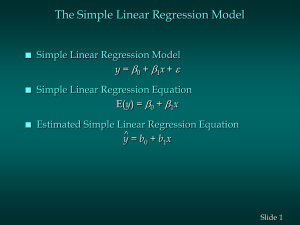

14.3 R-square, correlation and regression

14.3 Coefficient of Determination ($r^2$), Correlation and Regression

(a) r

2

: r

2 i n

1

( i n

1

(

ˆ i y i

y )

2

y )

2

SSR

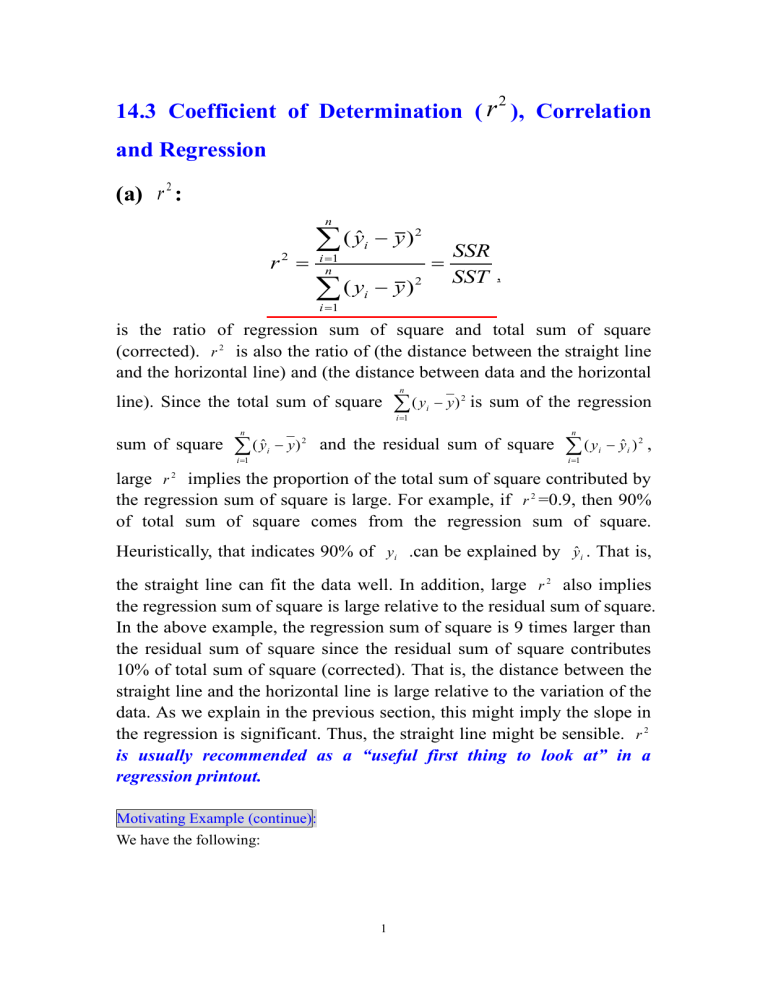

SST , is the ratio of regression sum of square and total sum of square

(corrected). r

2 is also the ratio of (the distance between the straight line and the horizontal line) and (the distance between data and the horizontal line). Since the total sum of square i n

1

( y i

y )

2 is sum of the regression sum of square i n

1

( i

y )

2 and the residual sum of square i n

1

( y i

i

)

2 , large r

2 implies the proportion of the total sum of square contributed by the regression sum of square is large. For example, if r

2 =0.9, then 90% of total sum of square comes from the regression sum of square.

Heuristically, this indicates that 90% of the variation in $y_i$ can be explained by $\hat{y}_i$; that is, the straight line fits the data well. In addition, a large $r^2$ also implies that the regression sum of squares is large relative to the residual sum of squares.

In the above example, the regression sum of squares is 9 times as large as the residual sum of squares, since the residual sum of squares contributes 10% of the total sum of squares (corrected). That is, the distance between the straight line and the horizontal line is large relative to the variation of the data. As explained in the previous section, this might imply that the slope in the regression is significant, so the straight line might be sensible. $r^2$ is usually recommended as a “useful first thing to look at” in a regression printout.
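The decomposition of the total sum of squares described above can be checked numerically. The following is a minimal sketch, assuming NumPy is available; the data values are hypothetical and chosen only for illustration, and the variable names (`sst`, `ssr`, `sse`) are not from the text.

```python
import numpy as np

# Hypothetical data, chosen only for illustration (not from the text).
x = np.array([1.0, 2.0, 3.0, 4.0, 5.0, 6.0])
y = np.array([2.1, 3.9, 6.2, 7.8, 10.1, 11.9])

x_bar, y_bar = x.mean(), y.mean()
s_xx = np.sum((x - x_bar) ** 2)
s_xy = np.sum((x - x_bar) * (y - y_bar))

# Least-squares slope and intercept, then fitted values.
b1 = s_xy / s_xx
b0 = y_bar - b1 * x_bar
y_hat = b0 + b1 * x

sst = np.sum((y - y_bar) ** 2)      # total sum of squares (corrected)
ssr = np.sum((y_hat - y_bar) ** 2)  # regression sum of squares
sse = np.sum((y - y_hat) ** 2)      # residual sum of squares
r2 = ssr / sst

print(f"SST = {sst:.4f}, SSR + SSE = {ssr + sse:.4f}, r^2 = {r2:.4f}")
```

Up to floating-point rounding, `sst` equals `ssr + sse`, and `ssr` also equals `b1 * s_xy`, which is the shortcut used in the motivating example.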

Motivating Example (continued):

We have the following:

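$$b_1 = 5, \quad s_{XY} = 2840, \quad SSR = b_1 s_{XY} = 5 \times 2840 = 14200,$$

$$SST = \sum_{i=1}^{10} (y_i - \bar{y})^2 = \sum_{i=1}^{10} y_i^2 - 10\,\bar{y}^2 = 184730 - 10 \times 130^2 = 15730.$$

Thus,

$$r^2 = \frac{SSR}{SST} = \frac{14200}{15730} = 0.9027.$$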

For this pizza example, we can conclude that 90.27% of the total sum of squares can be explained by using the estimated regression equation $\hat{y} = 60 + 5x$ to predict quarterly sales. In other words, 90.27% of the variation in quarterly sales can be explained by the linear relationship between the size of the student population and quarterly sales.
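The arithmetic in this example can be reproduced from the summary statistics alone. The sketch below uses only the values quoted above ($b_1 = 5$, $s_{XY} = 2840$, $\sum y_i^2 = 184730$, $\bar{y} = 130$, $n = 10$); the variable names are illustrative.

```python
# Summary statistics quoted in the motivating example.
n = 10
b1 = 5.0
s_xy = 2840.0
sum_y_sq = 184730.0
y_bar = 130.0

ssr = b1 * s_xy                  # regression sum of squares
sst = sum_y_sq - n * y_bar ** 2  # total sum of squares (corrected)
r2 = ssr / sst

print(f"SSR = {ssr:.0f}, SST = {sst:.0f}, r^2 = {r2:.4f}")
# prints: SSR = 14200, SST = 15730, r^2 = 0.9027
```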

(b) Correlation:

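The correlation coefficient between the variables $x$ and $y$ is

$$r_{XY} = \frac{\sum_{i=1}^{n} (x_i - \bar{x})(y_i - \bar{y})}{\sqrt{\sum_{i=1}^{n} (x_i - \bar{x})^2 \sum_{i=1}^{n} (y_i - \bar{y})^2}} = \frac{s_{XY}}{s_{XX}^{1/2}\, s_{YY}^{1/2}}, \qquad -1 \le r_{XY} \le 1.$$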

If $y_i = a x_i + b$ exactly (with $a \neq 0$), then $r_{XY} = 1$ or $r_{XY} = -1$. That is, $|r_{XY}| \approx 1$ implies a strong linear relationship between $x$ and $y$. The correlation coefficient is also associated with the regression coefficient $b_1$:

$$b_1 = \frac{s_{XY}}{s_{XX}} = \frac{s_{YY}^{1/2}}{s_{XX}^{1/2}} \cdot \frac{s_{XY}}{s_{XX}^{1/2}\, s_{YY}^{1/2}} = \left(\frac{s_{YY}}{s_{XX}}\right)^{1/2} r_{XY}.$$

$b_1 > 0 \Leftrightarrow r_{XY} > 0 \Rightarrow$ a positive linear relation.

$b_1 < 0 \Leftrightarrow r_{XY} < 0 \Rightarrow$ a negative linear relation.

$b_1 = 0 \Leftrightarrow r_{XY} = 0 \Rightarrow$ there is no linear relation between $x$ and $y$.


Note: $r_{XY}$ measures linear association between $x$ and $y$, while $b_1$ measures the size of the change in $y$ due to a unit change in $x$. $r_{XY}$ is unit-free and scale-free: a scale change in the data will affect $b_1$ but not $r_{XY}$.
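The scale-free property can be illustrated with a short sketch. The data below are hypothetical, and the helper `slope_and_corr` is an illustrative name rather than anything defined in the text. Here $x$ is rescaled from metres to centimetres; the slope shrinks by a factor of 100, while the correlation is unchanged.

```python
import numpy as np

def slope_and_corr(x, y):
    """Return (b1, r_xy) computed from the corrected sums of squares."""
    s_xx = np.sum((x - x.mean()) ** 2)
    s_yy = np.sum((y - y.mean()) ** 2)
    s_xy = np.sum((x - x.mean()) * (y - y.mean()))
    return s_xy / s_xx, s_xy / np.sqrt(s_xx * s_yy)

# Hypothetical data: x in metres, y in arbitrary units.
x_m = np.array([1.2, 1.5, 1.7, 1.8, 2.0])
y = np.array([10.0, 13.0, 14.5, 16.0, 18.5])

b1_m, r_m = slope_and_corr(x_m, y)
b1_cm, r_cm = slope_and_corr(100 * x_m, y)  # same x, now in centimetres

print(f"metres:      b1 = {b1_m:.4f}, r = {r_m:.4f}")
print(f"centimetres: b1 = {b1_cm:.4f}, r = {r_cm:.4f}")
```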

Note: the value of a correlation $r_{XY}$ shows only the extent to which $x$ and $y$ are linearly associated. It does not by itself imply that any sort of causal relationship exists between $x$ and $y$. Such a false assumption has led to erroneous conclusions on many occasions.

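Note that $r_{XY}$ is also associated with the coefficient of determination $r^2$, since

$$r^2 = \frac{\sum_{i=1}^{n} (\hat{y}_i - \bar{y})^2}{\sum_{i=1}^{n} (y_i - \bar{y})^2} = \frac{b_1 s_{XY}}{s_{YY}} = \frac{s_{XY}}{s_{XX}} \cdot \frac{s_{XY}}{s_{YY}} = \frac{s_{XY}^2}{s_{XX}\, s_{YY}}$$

and

$$r_{XY} = \frac{s_{XY}}{s_{XX}^{1/2}\, s_{YY}^{1/2}} = (\text{sign of } b_1) \left(\frac{s_{XY}^2}{s_{XX}\, s_{YY}}\right)^{1/2} = (\text{sign of } b_1) \sqrt{r^2},$$

where $r_{XY}$ has the same sign as $b_1$.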

The above equation indicates that a large $r^2$ implies strong correlation between the variables $x$ and $y$.

Note: $r_{XY} = (\text{sign of } b_1) \sqrt{r^2}$ holds only for the simple linear regression $Y = \beta_0 + \beta_1 X + \varepsilon$.


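The correlation between $y$ and the fitted value $\hat{y}$ is

$$r_{Y\hat{Y}} = \frac{\sum_{i=1}^{n} (y_i - \bar{y})(\hat{y}_i - \bar{\hat{y}})}{\sqrt{\sum_{i=1}^{n} (y_i - \bar{y})^2 \sum_{i=1}^{n} (\hat{y}_i - \bar{\hat{y}})^2}} = \sqrt{r^2},$$

where $\bar{\hat{y}} = \frac{1}{n} \sum_{i=1}^{n} \hat{y}_i$.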
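Both identities, $r_{XY} = (\text{sign of } b_1)\sqrt{r^2}$ and $r_{Y\hat{Y}} = \sqrt{r^2}$, can be checked numerically. The sketch below assumes NumPy and uses hypothetical data with a negative slope, so that the sign factor matters; `np.polyfit` and `np.corrcoef` stand in for the hand formulas in the text.

```python
import numpy as np

# Hypothetical data with a decreasing trend (not from the text).
x = np.array([1.0, 2.0, 3.0, 4.0, 5.0, 6.0])
y = np.array([11.8, 10.1, 8.2, 5.9, 4.1, 1.9])

# Degree-1 least-squares fit: polyfit returns [slope, intercept].
b1, b0 = np.polyfit(x, y, 1)
y_hat = b0 + b1 * x

r2 = np.sum((y_hat - y.mean()) ** 2) / np.sum((y - y.mean()) ** 2)
r_xy = np.corrcoef(x, y)[0, 1]           # correlation between x and y
r_y_yhat = np.corrcoef(y, y_hat)[0, 1]   # correlation between y and fitted values

print(f"b1 = {b1:.4f}, r^2 = {r2:.4f}")
print(f"r_xy = {r_xy:.4f}, sign(b1)*sqrt(r^2) = {np.sign(b1) * np.sqrt(r2):.4f}")
print(f"r_y_yhat = {r_y_yhat:.4f}, sqrt(r^2) = {np.sqrt(r2):.4f}")
```

With a negative slope, `r_xy` is negative while `r_y_yhat` stays positive, since the fitted values always move in the same direction as $y$.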

Motivating Example (continued):

Since $b_1 = 5 > 0$ and $r^2 = 0.9027$,

$$r_{XY} = (\text{sign of } b_1) \sqrt{r^2} = +\sqrt{0.9027} = 0.9501,$$

which indicates that $x$ and $y$ are highly correlated, with a strong positive linear association between $x$ and $y$.

Example 2 (continued):

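Suppose the model is $y_i = \beta_0 + \beta_1 x_i + \varepsilon_i$, $i = 1, \ldots, 20$, $\varepsilon_i \sim N(0, \sigma^2)$, and

$$\sum_{i=1}^{20} x_i = 1330, \quad \sum_{i=1}^{20} y_i = 1862.8, \quad \sum_{i=1}^{20} x_i^2 = 90662, \quad \sum_{i=1}^{20} y_i^2 = 173554.26, \quad \sum_{i=1}^{20} x_i y_i = 124206.9.$$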

(d) Determine $r^2$.

[solution:]

$$r^2 = \frac{SSR}{SST} = \frac{49.220}{53.068} = 0.9275$$

Online Exercise:

Exercise 14.3.1
