Notes 13 - Wharton Statistics Department

advertisement

Statistics 550 Notes 13

Reading: Sections 3.1-3.2

In Chapter 3, we return to the theme of Section 1.3 which is

select among point estimators and decision procedures the

“optimal” estimator for the given sample size, rather than

select a procedure based on asymptotic optimality

considerations. In Chapter 1.3, we studied two optimality

criteria – Bayes risk and minimax risk – and found Bayes

and minimax estimators by computing the risk functions of

all candidate estimators. This is intractable for many

statistical models. In Chapter 3, we develop several

tractable tools for finding the Bayes and minimax

estimators.

I. Bayes Procedures

Review of the Bayes criteria:

Suppose a person’s prior distribution about is ( and

the probability distribution for the data is that X | has

probability density function (or probability mass function)

p( x | ) .

This can be viewed as a model in which is a random

variable and the joint pdf of X , is ( p ( x | ) .

The Bayes risk of a decision procedure for a prior

distribution ( , denoted by r ( ) , is the expected value

1

of the loss function over the joint distribution of ( X , ) ,

which is the expected value of the risk function over the

prior distribution of :

r ( ) E [ E[l ( , ( X )) | ]] E [ R( , )] .

For a person with prior distribution ( , the decision

procedure which minimizes r ( ) minimizes the expected

loss and is the best procedure from this person’s point of

view. The decision procedure which minimizes the Bayes

risk for a prior ( is called the Bayes rule for the prior

( .

A nonsubjective interpretation of the Bayes criteria: The

Bayes approach leads us to compare procedures on the

basis of

r ( ) R( , ) ( )

if is discrete with frequency function ( or

r ( ) R( , ) ( )d

if is continuous with density ( .

Such comparisons make sense even if we do not interpret

( as a prior density or frequency, but only as a weight

function that reflects the importance we place on doing

well at the different possible values of .

Computing Bayes estimators: In Chapter 1.3, we found the

Bayes decision procedure by computing the Bayes risk for

each decision procedure. This is usually an impossible

2

task. We now provide a constructive method for finding

the Bayes decision procedure

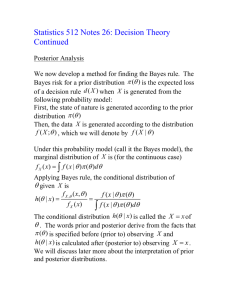

Recall from Chapter 1.2 that the posterior distribution is

the subjective probability distribution for the parameter

after seeing the data x:

p( x | ) ( )

p( | x)

p( x | ) ( )d

The posterior risk of an action a is the expected loss from

taking action a under the posterior distribution p ( | x ) .

r (a | x ) E p ( | x ) [l ( , a)] .

The following proposition shows that a decision procedure

which chooses the action that minimizes the posterior risk

for each sample x is a Bayes decision procedure.

Proposition 3.2.1: Suppose there exists a function

* ( x) such that for all x ,

r ( * ( x) | x) inf{r (a | x) : a } ,

(1.1)

*

where A denotes the action space, then ( x) is a Bayes

rule.

Proof: For any decision rule , we have that the Bayes risk

r ( ) can be written as

r ( ) E[l ( , ( X ))] E[ E[l ( , ( X )) | X ]] (1.2)

By (1.1), for all x

3

E[l ( , ( X )) | X x] r ( ( x) | x) r ( * ( x) | x) E[l ( , * ( X )) | X x]

Therefore, for all x ,

E[l ( , ( X )) | X x] E[l ( , * ( X )) | X x]

and the result follows from (1.2).

The value of Proposition 3.2.1 is that it enables us to

compute the action for the Bayes rule based on the sample

data x by just minimize the posterior risk r ( a | x ) , i.e., we

do not to find the entire Bayes procedure.

Consider the oil-drilling example (Example 1.3.5) again.

Example 2: We are trying to decide whether to drill a

location for oil. There are two possible states of nature,

1 location contains oil and 2 location doesn’t contain

oil. We are considering three actions, a1 =drill for oil,

a2 =sell the location or a3 =sell partial rights to the location.

The following loss function is decided on

(Drill)

(Sell)

a1

a2

0

10

1

(Oil)

(Partial rights)

a3

5

(No oil) 2

6

12

1

An experiment is conducted to obtain information about

resulting in the random variable X with possible values 0,1

and frequency function p( x, ) given by the following

table:

Rock formation

4

X

1

(No oil) 2

(Oil)

0

0.3

1

0.7

0.6

0.4

Application of Prop. 3.2.1 to Example 1.3.5:

Consider the prior (1 ) 0.2, (2 ) 0.8 . Suppose we

observe x 0 . Then the posterior distribution of is

p ( x 0 | ) ( )

p ( | x 0) 2

p ( x 0 | ) ( ) ,

i

i 1

i

1

8

p

(

|

x

0)

,

p

(

|

x

0)

1

2

which equals

9

9 .

Thus, the posterior risks of the actions a1 , a2 , a3 are

1

8

r (a1 | 0) l (1 , a1 ) l (2 , a1 ) 10.67

9

9

r (a2 | 0) 1.79, r (a3 | 0) 5.89

Therefore, a2 has the smallest posterior risk and for the

*

Bayes rule ,

* (0) a2 .

Similarly,

r (a1 |1) 8.35, r (a2 |1) 3.74, r (a3 |1) 5.70

and we conclude that

* (1) a2 .

Bayes procedures for common loss functions:

5

Bayes decision procedures for point estimation of g ( ) for

some common loss functions using Proposition 3.2.1:

2

(1) Squared Error Loss ( l ( , a) ( g ( ) a) ): The action

(point estimate) taken by the Bayes rule is the action that

minimizes the posterior expected square loss:

* ( x ) arg min E p ( | x ) [(a g ( )) 2 ]

a

*

By Lemma 1.4.1, ( x) is the mean of g ( ) for the

posterior distribution p ( | x ) .

(2) Absolute Error Loss ( l ( , a) | g ( ) a | ). The action

(point estimate) taken by the Bayes rule is the action that

minimizes the posterior expected absolute loss:

* ( x ) arg min E p ( | x ) [| a g ( ) |]

(1.3)

a

The minimizer of (1.3) is any median of the posterior

distribution p ( | x ) so that a Bayes rule is to use any

median of the posterior distribution.

Proof that the minimizer of E[| X a |] is a median of X:

Let X be a random variable and let the interval m0 m m1

1

1

P

(

X

m

)

,

P

(

X

m

)

be the medians of X, i.e.,

2

2.

For m1 c and a continuous random variable,

6

E[| X c |] E[| X m |]

m

c

m

c

(c m)dP( x) ((c x) ( x m))dP( x) (m c)dP( x)

(c m)[ P( X m) P( X m)] 2

(c x)dP( x)] 0

m x c

A similar result holds for c m0 . A similar argument

holds for discrete random variables.

(3) Zero-one loss

| a g ( ) | c

1

l ( , a)

| a g ( ) | c

0

The Bayes rule is the midpoint of the interval of length

2c that maximizes the posterior probability that

g ( ) belongs to the interval.

Example 1: Recall from Notes 2 (Chapter 1.2), for

X 1 , , X n iid Bernoulli( p ) and a Beta(r,s) prior for p, the

posterior distribution for p is

Beta( r i 1 xi , s n i 1 xi ).

n

n

Thus, for squared error loss, the Bayes estimate of p is the

n

n

mean of Beta( r i 1 xi , s n i 1 xi ), which equals

r i 1 xi

n

n

r i 1 xi s n i 1 xi

n

r i 1 xi

n

rsn .

7

For absolute error loss, the Bayes estimate of p is the

median of the Beta( r i 1 xi , s n i 1 xi ) distribution

n

n

which does not have a closed form.

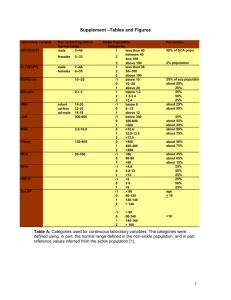

For n=10, here are the Bayes estimators and MLE for the

Beta(1,1) = uniform prior.

10

i 1

0

1

2

3

4

5

6

7

8

9

10

Xi

MLE

Bayes

absolute error

loss

.0611

.1480

.2358

.3238

.4119

.5000

.5881

.6762

.7642

.8520

.9389

.0000

.1000

.2000

.3000

.4000

.5000

.6000

.7000

.8000

.9000

1.0000

Bayes

squared error

loss

.0833

.1667

.2500

.3333

.4167

.5000

.5833

.6667

.7500

.8333

.9137

2

2

Example 2: Suppose X 1 , , X n iid N ( , ) , known,

2

and our prior on is N ( , b ) .

We showed in Notes 3 that the posterior distribution for

is

8

nX

2 b2

1

N

,

n

1

n

1

.

2

b2 2 b2

The mean and median of the posterior distribution is

nX

2

b2

n

1 , so that the Bayes estimator for both squared error

2 b2

nX

2

2

b

(X )

loss and absolute error loss is

n

1 .

2 b2

Note on Bayes procedures and sufficiency: Suppose the

prior distribution ( ) has support on and the family

of distributions for the data { p( X | ), } has

sufficient statistic T ( X ) . Then to find the Bayes

procedure, we can reduce the data to T ( X ) .

This is because the posterior distribution of | X is the

same as the posterior distribution of | T ( X ) since

p( | X ) p( X | ) ( )

p( X | T ( X ), ) p(T ( X ) | ) ( ) p(T ( X ) | ) ( )

where the last uses the sufficiency of T ( X ) .

9