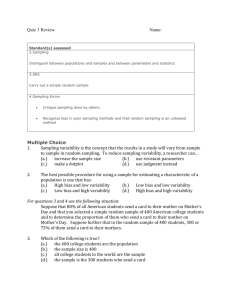

A framework for teaching statistics
