4.4 Comparison of Poisson means


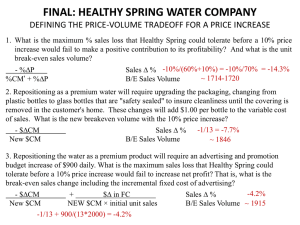

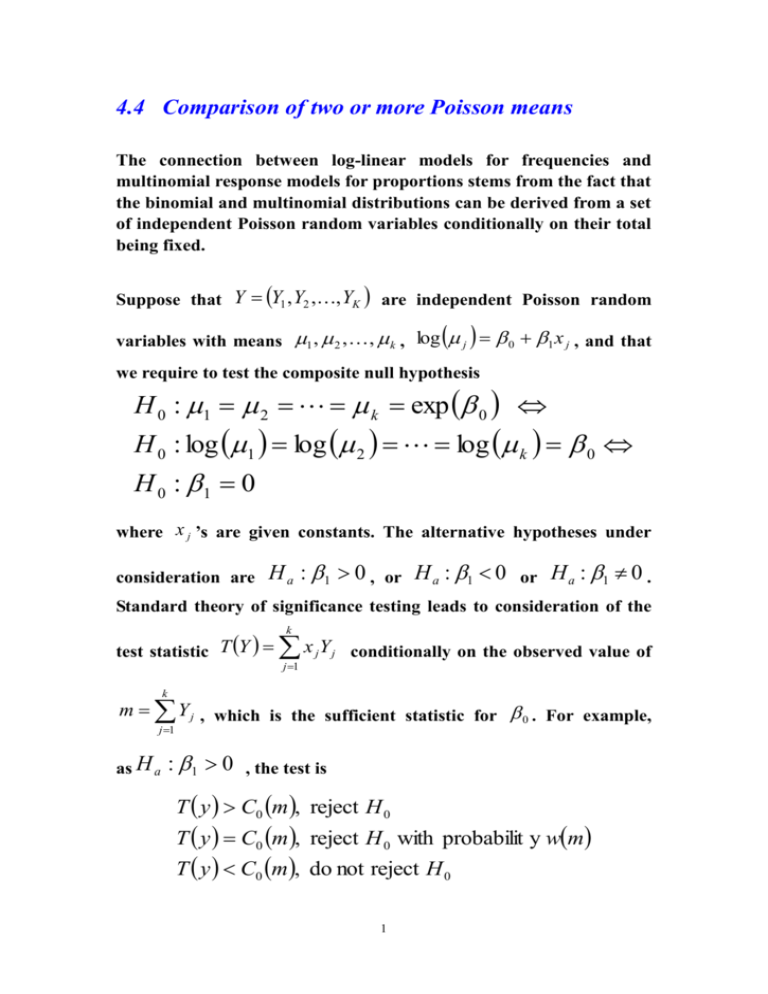

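The connection between log-linear models for frequencies and multinomial response models for proportions stems from the fact that the binomial and multinomial distributions can be derived from a set of independent Poisson random variables conditionally on their total being fixed.

Suppose that $Y = (Y_1, Y_2, \ldots, Y_k)$ is a vector of independent Poisson random variables with means $\mu_1, \mu_2, \ldots, \mu_k$, where $\log \mu_j = \beta_0 + \beta_1 x_j$ and the $x_j$'s are given constants, and that we wish to test the composite null hypothesis

$$
H_0: \mu_1 = \mu_2 = \cdots = \mu_k = \exp(\beta_0)
\iff H_0: \log \mu_1 = \log \mu_2 = \cdots = \log \mu_k = \beta_0
\iff H_0: \beta_1 = 0 .
$$

The alternative hypotheses under consideration are $H_a: \beta_1 > 0$, $H_a: \beta_1 < 0$, or $H_a: \beta_1 \neq 0$. Standard theory of significance testing leads to consideration of the test statistic

$$
T(Y) = \sum_{j=1}^{k} x_j Y_j
$$

conditionally on the observed value of $m = \sum_{j=1}^{k} Y_j$, which is the sufficient statistic for $\beta_0$. For example, for $H_a: \beta_1 > 0$, the test given $m = \sum_{j=1}^{k} Y_j$ is

$$
\begin{cases}
T(y) > C_0(m): & \text{reject } H_0, \\
T(y) = C_0(m): & \text{reject } H_0 \text{ with probability } w(m), \\
T(y) < C_0(m): & \text{do not reject } H_0,
\end{cases}
$$

where $C_0(m)$ and $w(m)$ can be obtained by solving

$$
P\Big(T(Y) > C_0(m) \,\Big|\, \sum_{j=1}^{k} Y_j = m,\ H_0 \text{ is true}\Big)
+ w(m)\, P\Big(T(Y) = C_0(m) \,\Big|\, \sum_{j=1}^{k} Y_j = m,\ H_0 \text{ is true}\Big) = \alpha ,
$$

and $w(m)$ is some constant depending on $m$.

Note that under the null hypothesis, we regard the data as having a multinomial distribution with index $m$ and parameter vector $\left(\frac{1}{k}, \frac{1}{k}, \ldots, \frac{1}{k}\right)$, independent of $\beta_0$, i.e.,

$$
P\Big(Y_1 = y_1, \ldots, Y_k = y_k \,\Big|\, \sum_{j=1}^{k} Y_j = m,\ H_0 \text{ is true}\Big)
= \frac{m!}{\prod_{j=1}^{k} y_j!} \prod_{j=1}^{k} \left(\frac{1}{k}\right)^{y_j}
= \frac{m!}{\prod_{j=1}^{k} y_j!} \left(\frac{1}{k}\right)^{\sum_{j=1}^{k} y_j}
= \frac{m!}{\prod_{j=1}^{k} y_j!} \cdot \frac{1}{k^{m}} .
$$

Note: Let $Y = (Y_1, Y_2, \ldots, Y_k)$ have the probability density function or probability distribution function

$$
f(y) = f(y_1, y_2, \ldots, y_k) = C(\theta, \phi) \exp\Big\{\theta\, T(y) + \sum_{j=1}^{r} \phi_j M_j(y)\Big\}\, h(y),
$$

where $\phi = (\phi_1, \phi_2, \ldots, \phi_r)$ and $M(y) = \big(M_1(y), M_2(y), \ldots, M_r(y)\big)$. Then, for testing $H_0: \theta = \theta_0$ v.s. $H_a: \theta > \theta_0$, a UMP (uniformly most powerful) unbiased level-$\alpha$ test given $M(Y) = m$ is

$$
\begin{cases}
T(y) > C_0(m): & \text{reject } H_0, \\
T(y) = C_0(m): & \text{reject } H_0 \text{ with probability } w(m), \\
T(y) < C_0(m): & \text{do not reject } H_0,
\end{cases}
$$

where the constants $C_0(m)$ and $w(m)$, depending on $m$, can be obtained by solving

$$
P\big(T(Y) > C_0(m) \mid M(Y) = m,\ H_0 \text{ is true}\big)
+ w(m)\, P\big(T(Y) = C_0(m) \mid M(Y) = m,\ H_0 \text{ is true}\big) = \alpha .
$$

Therefore, for independent Poisson random variables $Y_1, Y_2, \ldots, Y_k$ with means $\mu_1, \mu_2, \ldots, \mu_k$, $\log \mu_j = \beta_0 + \beta_1 x_j$, the probability distribution function is

$$
\begin{aligned}
f(y) &= \prod_{j=1}^{k} \frac{\mu_j^{y_j} e^{-\mu_j}}{y_j!}
= \exp\Big(-\sum_{j=1}^{k} \mu_j\Big) \exp\Big(\sum_{j=1}^{k} y_j \log \mu_j\Big) \frac{1}{\prod_{j=1}^{k} y_j!} \\
&= \exp\Big(-\sum_{j=1}^{k} \mu_j\Big) \exp\Big(\sum_{j=1}^{k} y_j (\beta_0 + \beta_1 x_j)\Big) \frac{1}{\prod_{j=1}^{k} y_j!} \\
&= \exp\Big(-\sum_{j=1}^{k} \mu_j\Big) \exp\Big(\beta_1 \sum_{j=1}^{k} x_j y_j + \beta_0 \sum_{j=1}^{k} y_j\Big) \frac{1}{\prod_{j=1}^{k} y_j!} \\
&= C(\beta_1, \beta_0) \exp\big\{\beta_1 T(y) + \beta_0 M(y)\big\}\, h(y),
\end{aligned}
$$

where

$$
C(\beta_1, \beta_0) = \exp\Big(-\sum_{j=1}^{k} \mu_j\Big), \quad
T(y) = \sum_{j=1}^{k} x_j y_j, \quad
M(y) = \sum_{j=1}^{k} y_j, \quad
h(y) = \frac{1}{\prod_{j=1}^{k} y_j!} .
$$

Note: Under $H_0: \beta_1 = 0$, the unconditional moments of $T(Y)$ are

$$
E\big(T(Y)\big) = E\Big(\sum_{j=1}^{k} x_j Y_j\Big) = \sum_{j=1}^{k} x_j E(Y_j) = \exp(\beta_0) \sum_{j=1}^{k} x_j \approx \frac{\sum_{j=1}^{k} y_j}{k} \sum_{j=1}^{k} x_j ,
$$

since $E(Y_j) = \mu_j = \exp(\beta_0)$ and $\sum_{j=1}^{k} y_j / k$ is the estimate of $\mu_j$. Also,

$$
\mathrm{Var}\big(T(Y)\big) = \mathrm{Var}\Big(\sum_{j=1}^{k} x_j Y_j\Big) = \sum_{j=1}^{k} x_j^2\, \mathrm{Var}(Y_j) = \exp(\beta_0) \sum_{j=1}^{k} x_j^2 \approx \frac{\sum_{j=1}^{k} y_j}{k} \sum_{j=1}^{k} x_j^2 .
$$

Note that the unconditional moments of $T(Y)$ depend on $\beta_0$. On the other hand, under the null hypothesis, denote $Y_{\cdot} = \sum_{j=1}^{k} Y_j$ and $y_{\cdot} = \sum_{j=1}^{k} y_j = m$. Then,

$$
E\big(T(Y) \mid Y_{\cdot} = y_{\cdot}\big) = \sum_{j=1}^{k} x_j E\big(Y_j \mid Y_{\cdot} = y_{\cdot}\big) = \sum_{j=1}^{k} x_j \frac{y_{\cdot}}{k} = \frac{\sum_{j=1}^{k} y_j}{k} \sum_{j=1}^{k} x_j ,
$$

since $Y_j \mid Y_{\cdot} = y_{\cdot} \sim B\left(y_{\cdot}, \frac{1}{k}\right)$, so that $E\big(Y_j \mid Y_{\cdot} = y_{\cdot}\big) = \frac{y_{\cdot}}{k}$. For the conditional variance, using $\mathrm{Cov}\big(Y_j, Y_i \mid Y_{\cdot} = y_{\cdot}\big) = -y_{\cdot} \cdot \frac{1}{k} \cdot \frac{1}{k}$ for $j \neq i$,

$$
\begin{aligned}
\mathrm{Var}\big(T(Y) \mid Y_{\cdot} = y_{\cdot}\big)
&= \sum_{j=1}^{k} \mathrm{Var}\big(x_j Y_j \mid Y_{\cdot} = y_{\cdot}\big) + 2 \sum_{j < i} \mathrm{Cov}\big(x_j Y_j, x_i Y_i \mid Y_{\cdot} = y_{\cdot}\big) \\
&= \sum_{j=1}^{k} x_j^2\, y_{\cdot}\, \frac{1}{k}\Big(1 - \frac{1}{k}\Big) - 2 \sum_{j < i} x_i x_j\, y_{\cdot}\, \frac{1}{k} \cdot \frac{1}{k} \\
&= \frac{y_{\cdot}}{k} \Big[\sum_{j=1}^{k} x_j^2 - \frac{1}{k}\Big(\sum_{j=1}^{k} x_j^2 + 2 \sum_{j < i} x_i x_j\Big)\Big] \\
&= \frac{y_{\cdot}}{k} \Big[\sum_{j=1}^{k} x_j^2 - \frac{1}{k}\Big(\sum_{j=1}^{k} x_j\Big)^2\Big] \\
&= \frac{y_{\cdot}}{k} \Big[\sum_{j=1}^{k} x_j^2 - k \bar{x}^2\Big] \qquad \Big(\text{denote } \bar{x} = \frac{1}{k} \sum_{j=1}^{k} x_j\Big) \\
&= \frac{y_{\cdot}}{k} \sum_{j=1}^{k} (x_j - \bar{x})^2
= \frac{\sum_{j=1}^{k} y_j}{k} \sum_{j=1}^{k} (x_j - \bar{x})^2 .
\end{aligned}
$$

The estimated unconditional variance of $T(Y)$ is quite different from the exact conditional variance. The conditional variance is unaffected by the addition of a constant to each component of the $x_j$'s.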
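The conditional test and the conditional moments described above can be checked by brute force when $k$ and $m$ are small. The following is only an illustrative sketch, not part of the original notes: the function names `null_distribution`, `critical_values` and `moments` are hypothetical, the $x_j$ are assumed to be integers so that exact rational arithmetic can be used, and the upper-tailed alternative $H_a: \beta_1 > 0$ is assumed.

```python
from fractions import Fraction
from itertools import product
from math import factorial


def null_distribution(x, m):
    """Exact distribution of T = sum_j x_j Y_j given sum_j Y_j = m under H0,
    where (Y_1, ..., Y_k) | total = m ~ Multinomial(m, 1/k, ..., 1/k)."""
    k = len(x)
    dist = {}
    for y in product(range(m + 1), repeat=k):
        if sum(y) != m:
            continue
        coef = factorial(m)          # multinomial coefficient m! / prod_j y_j!
        for yj in y:
            coef //= factorial(yj)
        t = sum(xj * yj for xj, yj in zip(x, y))
        dist[t] = dist.get(t, 0) + Fraction(coef, k ** m)
    return dist


def critical_values(dist, alpha):
    """Return (C0, w) solving P(T > C0) + w * P(T = C0) = alpha."""
    tail = Fraction(0)
    for t in sorted(dist, reverse=True):
        if tail + dist[t] > alpha:
            return t, (alpha - tail) / dist[t]
        tail += dist[t]
    raise ValueError("alpha must be smaller than 1")


def moments(dist):
    """Mean and variance of a discrete distribution given as {value: prob}."""
    mean = sum(t * p for t, p in dist.items())
    var = sum((t - mean) ** 2 * p for t, p in dist.items())
    return mean, var


# Illustrative values only (assumed, not taken from the notes).
x = [0, 1, 2, 3]
m = 5
dist = null_distribution(x, m)
c0, w = critical_values(dist, Fraction(1, 20))
mean, var = moments(dist)
print(c0, w)
print(mean, var)  # 15/2 25/4, i.e. m * xbar and (m / k) * sum_j (x_j - xbar)^2
```

Replacing `x` by `[10, 11, 12, 13]` changes the conditional mean but leaves the conditional variance at 25/4, in line with the remark that the conditional variance is unaffected by adding a constant to each $x_j$. The enumeration visits $(m+1)^k$ candidate vectors, so it is only practical for small problems.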
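◆ The Poisson log-likelihood function for $(\beta_0, \beta_1)$ in this problem is

$$
\begin{aligned}
l_Y(\beta_0, \beta_1) &= \sum_{j=1}^{k} \big(y_j \log \mu_j - \mu_j\big)
= \sum_{j=1}^{k} \big[y_j (\beta_0 + \beta_1 x_j) - \exp(\beta_0 + \beta_1 x_j)\big] \\
&= \beta_0 \sum_{j=1}^{k} y_j + \beta_1 \sum_{j=1}^{k} x_j y_j - \sum_{j=1}^{k} \exp(\beta_0 + \beta_1 x_j) .
\end{aligned}
$$

Denote

$$
\tau = \sum_{j=1}^{k} \mu_j = \sum_{j=1}^{k} \exp(\beta_0 + \beta_1 x_j) .
$$

Then, the log-likelihood for $(\tau, \beta_1)$ becomes

$$
\begin{aligned}
l_Y(\tau, \beta_1) &= \beta_0 \sum_{j=1}^{k} y_j + \beta_1 \sum_{j=1}^{k} x_j y_j - \sum_{j=1}^{k} \exp(\beta_0 + \beta_1 x_j) \\
&= \beta_0\, y_{\cdot} + \beta_1 \sum_{j=1}^{k} x_j y_j - \tau \\
&= \big(y_{\cdot} \log \tau - \tau\big) + \beta_1 \sum_{j=1}^{k} x_j y_j - y_{\cdot} \big(\log \tau - \beta_0\big) \\
&= \big(y_{\cdot} \log \tau - \tau\big) + \beta_1 \sum_{j=1}^{k} x_j y_j - y_{\cdot} \log \frac{\tau}{\exp(\beta_0)} \\
&= \big(y_{\cdot} \log \tau - \tau\big) + \beta_1 \sum_{j=1}^{k} x_j y_j - y_{\cdot} \log \Big(\sum_{j=1}^{k} \exp(\beta_1 x_j)\Big) \\
&= l_{Y_{\cdot}}(\tau) + l_{Y \mid Y_{\cdot}}(\beta_1),
\end{aligned}
$$

where

$$
l_{Y_{\cdot}}(\tau) = y_{\cdot} \log \tau - \tau
$$

is the Poisson log-likelihood for $\tau$ based on $m = y_{\cdot}$, with $Y_{\cdot} \sim P(\tau)$, and

$$
l_{Y \mid Y_{\cdot}}(\beta_1) = \beta_1 \sum_{j=1}^{k} x_j y_j - y_{\cdot} \log \Big(\sum_{j=1}^{k} \exp(\beta_1 x_j)\Big)
$$

is the multinomial log-likelihood for $\beta_1$ based on the conditional distribution $Y_1, \ldots, Y_k \mid Y_{\cdot} = m \sim M(m, \pi_1, \ldots, \pi_k)$. Here $M(m, \pi_1, \ldots, \pi_k)$ is a multinomial distribution with index $m$ and parameters

$$
\pi_j = \frac{\exp(\beta_1 x_j)}{\sum_{j=1}^{k} \exp(\beta_1 x_j)} .
$$

Note: The Poisson marginal likelihood based on $Y_{\cdot}$ depends only on $\tau$, while the multinomial conditional likelihood based on $Y_1, \ldots, Y_k \mid Y_{\cdot}$ depends only on $\beta_1$. Provided that no information is available concerning the value of $\beta_0$, and consequently of $\tau$, all of the information concerning $\beta_1$ is in the conditional likelihood based on $Y_1, \ldots, Y_k \mid Y_{\cdot}$.

Note: The Fisher information for $(\tau, \beta_1)$ is

$$
\begin{pmatrix}
\dfrac{1}{\tau} & 0 \\
0 & \tau \sum_{j=1}^{k} \pi_j (x_j - \bar{x}_{\pi})^2
\end{pmatrix},
$$

where $\bar{x}_{\pi} = \sum_{j=1}^{k} \pi_j x_j$ is the $\pi$-weighted mean of the $x_j$'s (equal to $\bar{x}$ when $\beta_1 = 0$), and these parameters are said to be orthogonal. Under suitable limiting conditions, the estimates $\hat{\tau}$ and $\hat{\beta}_1$ are approximately independent.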
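The split of the Poisson log-likelihood into a marginal part for $\tau$ and a conditional (multinomial) part for $\beta_1$ can be verified numerically. The sketch below is illustrative only: the function names and the data values are assumptions, not taken from the notes, and the constant term $-\sum_j \log y_j!$ is dropped throughout, as in the text.

```python
import math


def poisson_loglik(beta0, beta1, x, y):
    """Full Poisson log-likelihood sum_j (y_j log mu_j - mu_j), constants dropped."""
    return sum(yj * (beta0 + beta1 * xj) - math.exp(beta0 + beta1 * xj)
               for xj, yj in zip(x, y))


def tau_from_betas(beta0, beta1, x):
    """tau = sum_j mu_j = sum_j exp(beta0 + beta1 * x_j)."""
    return sum(math.exp(beta0 + beta1 * xj) for xj in x)


def marginal_loglik(tau, y):
    """Poisson log-likelihood for tau based on the total y. ~ P(tau)."""
    total = sum(y)
    return total * math.log(tau) - tau


def conditional_loglik(beta1, x, y):
    """Multinomial log-likelihood for beta1 given the total y."""
    total = sum(y)
    log_norm = math.log(sum(math.exp(beta1 * xj) for xj in x))
    return beta1 * sum(xj * yj for xj, yj in zip(x, y)) - total * log_norm


def weighted_variance(beta1, x):
    """sum_j pi_j (x_j - xbar_pi)^2 with pi_j proportional to exp(beta1 * x_j)."""
    weights = [math.exp(beta1 * xj) for xj in x]
    norm = sum(weights)
    pi = [wj / norm for wj in weights]
    xbar = sum(pj * xj for pj, xj in zip(pi, x))
    return sum(pj * (xj - xbar) ** 2 for pj, xj in zip(pi, x))


# Illustrative values only (assumed).
x = [0.0, 1.0, 2.0, 3.0]
y = [3, 1, 4, 2]
beta0, beta1 = 0.3, -0.2
tau = tau_from_betas(beta0, beta1, x)

full = poisson_loglik(beta0, beta1, x, y)
split = marginal_loglik(tau, y) + conditional_loglik(beta1, x, y)
print(math.isclose(full, split))  # the two sides agree up to rounding

# Expected information for (tau, beta1): diagonal, hence orthogonal parameters.
print(1.0 / tau, tau * weighted_variance(beta1, x))
```

Because the marginal part involves only `tau` and the conditional part only `beta1`, the mixed second derivative of the sum is identically zero, which is the orthogonality noted above.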