Iterative Methods for Linear Equations

advertisement

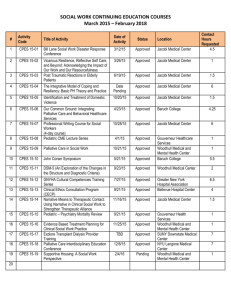

Iterative Methods for Linear Equations Iterative methods for solving general, large sparse linear systems have been gaining popularity in many areas of scientific computing. A number of efficient iterative solvers were discovered and the increased need for solving very large linear systems triggered a noticeable and rapid shift toward iterative techniques in many applications. Also, iterative methods are gaining ground because they are easier to implement efficiently on high-performance computers than direct methods. Those methods are called direct that terminate after finitely many operations with an exact solution (up to rounding errors). The best known direct method is Gauss elimination. In the general case the Gauss elimination for the solution of a system of n equations Ax=b requires 2n 3 / 3 + O(n 2 ) operations The storage amounts to n 2 n . Because of round-off errors, direct methods become less efficient than iterative methods when they applied to large systems. In addition, the amount of storage space required for iterative solutions on a computer is far less than the one required for direct methods when the coefficient matrix of the system is sparse. Thus, especially for sparse matrices, iterative methods are more attractive than direct methods. For the iterative solution of a system of equations, one starts with an arbitrary starting vector x 0 and computes a sequence of iterates x m for m=1,2,….: x 0 x1 x 2 x m x m 1 In the following x m 1 is dependent only on x m , so that the mapping x m x m 1 determines the iteration method. The choice of the starting value x 0 is not part of that method. 8 Let A be an nxn nonsingular matrix and Ax=b the system to be solved. a11x1 a12 x2 a1n xn b1 a21x1 a22 x2 a2 n xn b2 an1 x1 an 2 x2 ann xn bn We assume that the diagonal terms a11, a22 ,, ann are all nonzero. Then Jacobi’s method is xik 1 (bi aij x kj ) / aii , k=0,1,… j i Rewrite in matrix form, let D be the diagonal matrix containing the diagonal elements of A, and Aoff=A-D.Then x k 1 d Hx k where H D 1 Aoff , d D 1b The iterative process is terminated when a convergence criterion is satisfied. Convergence Test It is necessary to test for convergence of the iterates and there are two common tests: x k 1 x k or x k 1 x k x k It is also relatively common to use the residual, r k or r k r 0 and it is called relative error test. The convergence or divergence of the iterative process in the Jacobi method does not depend on the initial guess, but depends only on the character of the matrices themselves. However, a good first guess in case of convergence will make for a relatively small number of iterations. 9 Defintion An nxn matrix A is strictly diagonally dominant if n a aii j 1, j i ij It is a sufficient condition for Jacobi to convergence. Estimate the error Suppose that the eigenvalues are ordered such that 1 2 n Then note that x ( k 1) x ( k ) T k ( x (1) x ( 0) ) VkV 1 ( x (1) x ( 0) ) ~ 1kV kV 1 ( x (1) x ( 0 ) ) ~ where diag (1, 2 / 1 , , n / 1 ) e ( k 1) T k 1e ( 0) Vk 1V 1V ( I ) 1V 1 ( x (1) x ( 0) ) Vk 1 ( I ) 1V 1 ( x (1) x ( 0) ) ~ 1k 1V k ( I ) 1V 1 ( x (1) x ( 0 ) ) Therefore, we have e ( k 1) x ( k 1) x (k ) 11 1 1 so e ( k 1) x ( k 1) x ( k ) 11 1 One may estimate 1 by looking at the residuals. The limiting behaviour of r k 1 implies that r ( k 1) 1r ( k ) as k . Thus one may estimate 1 as follows by least squares 1 r ( k 1) ' r ( k ) r (k ) ' r (k ) 10 This leads to the following error estimate: e ( k 1) ( k 1) E x ( k 1) x ( k ) r (k ) ' r (k ) 1 r ( k 1) ' r ( k ) Algorithm of Jacobi method There are two different algorithms to run Jacobi method Algorithm 1: for k=1:itmax+1 for i =1:n S=0; for j = 1:n if (j~=i) S=S+A(i,j)*x0(i,j) end end x(i)=(-S+b(i))/A(i,i); end end Algorithm 2: Adiag=diag(A); d=diag(Adiag); Aoff=A-d; for k=1:itmax+1 x=(b-Aoff*x)./Adiag end From the profile report, we know that method 1 needs more time than method 2 in calculation. Method 1 Method 2 Time 0.04 0.02 So the following problem solved by jacobi method, we use algorithm 2. 11 Gauss-Seidel is almost the same as for Jacobi, except that each x-value is improved using the most recent approximations to the values of the other variables. xik 1 (bi aij x kj 1 aij x kj ) / aii j i k=0,1,… j i Rewrite in matrix form, let Aup and Alow be the strictly upper and strictly lower triangular parts of A. Then x k 1 d Hx k where H ( D Alow) 1 Aup , d ( D L) 1 b Because the new values can be immediately stored in the location that held the old values, the storage requirement for x with the Gauss-Seidel method is half what it would be the Jacobi method and the rate of convergence is more rapid. Example 1 Let A be 6x6 matrix 2 0 3 1 6 1 1 0 8 3 2 1 2 0 7 4 2 0 , Assume A 1 4 1 2 0 0 5 5 4 3 9 0 2 1 0 1 2 5 54 25 0 b 16 16 19 Result: Jacobi method convergent after 25 iterations and G-S method just needs 16 iterations. It shows that G-S method convergence faster. Jacobi G-S Iterations Time 25 16 0.02 0.01 Notice: Echo on ECHO ON – turns on echoing of commands inside Script files, so it may not necessary to use this command. 12