REVIEW - Michigan State University

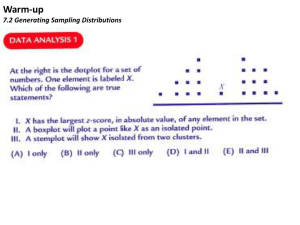

REVIEW: KEY RESEARCH CONCEPTS/TERMS
See the Glossary at the course website for sample definitions.

applied research, basic research = pure research
exploratory, descriptive, explanatory, predictive research
evaluation, research, evaluation research
concepts, hypotheses, propositions
reactivity, ecological fallacy, causality
deductive and inductive reasoning
variables, dependent, independent variables
ways of knowing: science, common sense, experience, traditional knowledge
research design
cross sectional, longitudinal research, panel study, trend study
qualitative, quantitative research
unit of analysis
nominal and operational definitions
measurement validity, reliability; random and systematic error (bias)
continuous and discrete variables
nominal, ordinal, interval, ratio measurement scales
population, sample; probability, non-probability samples, sampling frame
purposive, quota, judgement, snowball, ...sampling
simple random sample, systematic sample
stratified, area sampling
sampling error; standard error of the mean
statistic, parameter
survey, questionnaire, interview
pilot study, pretest
experiment, observation
closed, open ended questions, probes
response rate; non-response bias
evaluability assessment
evaluation research
formative, summative evaluation, process evaluation
needs assessment
weighted average
coding, cleaning of data
descriptive and inferential statistics
univariate statistics - frequency, mean, median, standard deviation, variance
confidence interval, null hypothesis, significance level
bivariate statistics - crosstab, correlation, comparison of means, chi square, t-test, ANOVA
experiment, experimental group, control group, treatment
pre-test, post-test with control design
experimental errors - NB - premeasurement, interaction, history, selection
* informed consent, anonymity vs confidentiality, UCRIHS
* ethical issues in research & evaluation

REVIEW - KEY CONCEPTS/POINTS
1. Distinguishing scientific from other ways of knowing/evaluating.
2. Types/categories of research.
3. Steps in the research process - a general problem-solving framework: define the problem, objectives, methods, data gathering, analysis, conclusions, communication/implementation.
4. General research designs/data gathering approaches. On-site designs capture only users/visitors; household designs reach general populations. Surveys describe, experiments study causal relationships, and longitudinal designs study trends/change over time.
5. Role of concepts, hypotheses, and theory in research.
6. Operational definitions = systematic measurement procedures.
7. Reliability and validity of measures; random and systematic errors.
8. Levels or scales of measurement dictate which statistics are appropriate.
9. Variables: dependent, independent. Socio-economic, cognitive, affective, and behavioral information.
10. Define the study population first, then the sampling approach. Sample statistics estimate population parameters.
11. Probability vs nonprobability sampling.
12. The key characteristic of a sample is its representativeness of the population from which it is drawn. Rely on the laws of probability or on judgement to draw the sample.
13. Sampling error can be estimated for probability samples. It varies with the size of the sample and the homogeneity of the population.
14. Stratify a sample to sample subgroups with higher variance more heavily, or to assure a given number of cases within each subgroup. Cluster sample to lower data gathering costs.
15. Pros and cons of mailed surveys, telephone interviews, and personal interviews.
16. Questionnaire design issues.
17. Pretests, cover letters, follow-ups, nonresponse bias, and related issues in survey research.
18. Qualitative vs quantitative approaches.
19. Problems suitable for observational approaches.
20. Basics of hypothesis testing and confidence intervals. Descriptive and inferential statistics. Comparing means, crosstabs/chi square.
21. Experimental design - understand the pre-test/post-test with control design and how it controls for each of the kinds of experimental errors.
22. Research ethics - informed consent, truth in reporting, confidentiality issues; do not use research as a guise for selling.
23. Reporting - write/speak to the intended audience; executive summaries, oral presentations, technical reports, proposals, tables and figures.
24. Examples of typical applications of various research designs/approaches.

Study tips
1. Read through the lecture topic outlines.
2. Skim the PowerPoint presentations.
3. Try the sample exam questions and check your answers.
4. Make sure you can define each of the terms in the list above/glossary, know what each term relates to (where it fits in the course), and can distinguish between groups of related terms.
5. Re-read selected readings in Trochim, your other text, or course links.
Recommended: Leones – Guide to designing and conducting surveys. ASA Series on Surveys, U Wisconsin evaluation series.

SAMPLE EXAM QUESTIONS

A. TRUE/FALSE
___ 1. Only researchers need to be knowledgeable about research and evaluation methods, as managers and recreation practitioners can hire consultants to do whatever research may be needed.
___ 2. Researchers assess the validity of measurements by repeating the measurement to see if they get the same results.
___ 3. The measures of importance and satisfaction in the Huron Clinton Metroparks Visitor Study were ordinal measures.
___ 5. Race/ethnic status is usually measured at an ordinal scale.
___ 6. In a mail survey, identification numbers are included on the survey so that the investigator can identify who has returned a questionnaire. If the subject's identity is never associated with their individual responses in any reporting of results, the subjects are anonymous.
___ 7. To test a relationship between two nominal scale variables, one could use a correlation.
___ 8. The chi square statistic tests for differences in means of two subgroups.
___ 9. When testing at the 95% confidence level, one should reject the null hypothesis if the reported significance level is greater than .05.
___ 10. Inferential statistics describe the characteristics of a sample.

B. MULTIPLE CHOICE
___ 1. Which of the following is a reason for using an outside consultant to conduct a survey for a recreation organization: a) they know the organization best, b) they know the technical details of conducting a survey, c) they can do the study more cheaply, d) they are more knowledgeable about your customers.
___ 2. The first step in a research study is a) reviewing previous studies, b) determining the sample size, c) designing the questionnaire, d) identifying the study's purpose.
___ 3. Applied research studies generally a) have a specific client for the research, b) have a fairly immediate time frame for using the results, c) seek to advance theories of human behavior, d) both a and b, e) all of the above.
___ 4. The most important factor in determining the research design for a given problem is a) the designs and methods the researcher is most familiar with, b) the nature of the problem, c) the designs scientists have used in the past, d) the methods the client feels comfortable with.
___* 5. Your text, Applied Social Research, claims that science is provisional. By this they mean that science a) is based on observations in the real world, b) uses inductive and deductive logic, c) is self-corrective, or d) is wishy-washy in drawing conclusions.
___ 6. If we attempted to describe visitors to a park based on a very small random sample (say 50 people), our estimates would likely be a) unreliable, b) invalid, c) both of the above, d) neither of the above.
___ 7. If we attempt to describe visitors to a park across the entire year by using a large summer season survey, our estimates would likely be a) unreliable, b) invalid, c) both of the above, d) neither of the above.
___ 8. The fundamental tradeoff in most research design is between accuracy of results and a) protecting the rights of subjects, b) time and costs of the study, c) usefulness of results, d) level of detail.
___ 9. Crystal Mt. Ski Area conducted a lift line survey to determine where visitors came from, how often they skied last year, and how many times they had been to Crystal Mt. last winter. This study is an example of a(n) a) exploratory, b) descriptive, c) explanatory, or d) predictive study.
___ 10. The Rockville Parks Department wishes to increase the number of Black and Hispanic visitors to its parks. It uses a nominal group process to brainstorm ideas and select the most promising options to work on. The nominal group process is followed by focus groups to flesh out and evaluate each alternative before deciding which to implement. This study is an example of a(n) a) formative, b) summative, c) process, or d) outcome evaluation study.

C. FILL IN THE BLANKS
1. The _________________ variable in a study is generally the one we wish to predict or explain.
2. A(n) ___________ is a scientific statement that predicts or specifies a relationship between two or more variables and is tested through research.
3. The preferred approach to identify cause-effect relationships is ___________________.

D. SHORT ANSWER
1a. List the four levels of measurement in order of increasing power and precision. Give an example of a variable that would usually be measured at each level. Then place each of the following statistics next to the level to which it best applies. (Statistics: median, frequency, mode, mean, range, standard deviation, inter-quartile deviation.)

Measurement Level        _______________   _______________   _______________   _______________
Example                  _______________   _______________   _______________   _______________
Appropriate Statistics   _______________   _______________   _______________   _______________

2. Distinguish between each of the following pairs of concepts:
a. reliability and validity of a measure
b. probability and nonprobability sample
c. descriptive and inferential statistics
d. a parameter and a statistic
e. a survey and an experiment
f. independent and dependent variable
g. anonymity and confidentiality
h. standard deviation and standard error
i. pre-measurement (testing), history, and interaction errors in an experiment

3. You have been given a research report that presents the methods and findings from a study evaluating the image of Michigan as a travel destination in the surrounding five-state region. Identify the three most important things you would look for in the report to assess the credibility of the findings.

4. The manager of Sleepy Hollow State Park, about 20 miles northeast of Lansing, wants to identify the market area from which the majority of current visitors come. He also wants to be able to compare campers and day users and determine what proportion of each participates in fishing at the park. The park has a year to complete the study.
a. Write ONE research objective for this study.
b. Define a suitable study population for this study (element, unit, extent, time).
c. Recommend the overall research approach (i.e., choose the location (household, on-site, lab), ... and the data gathering approach (observation, phone, personal interview, etc.)).
d. Based on the brief problem statement, identify four important variables to measure in the study.
e. What would be your greatest concern (potential problem or source of error) in designing this study?

5. The PRR 844 instructor wishes to use an experiment to evaluate the contribution of micro-computer labs to performance in the class. Next year the class will be divided into two sections, one with a computer lab and one without. Students' research/evaluation skills will be evaluated using an exam given before and after the class.
a. Diagram the experimental design being used here.
b. Identify the treatment, experimental group, control group, and the measure of the effect.
c. How would you assign students to the two groups?
d. If the experimental group's scores increase from 35 to 70 and the control group's increase from 35 to 50, what is the "effect" of the computer labs?

6a. A survey of 200 participants in a fitness program finds that 30% would enroll in the program again. Using the table of sampling errors for a binomial distribution (below), compute a 95% confidence interval for this estimate.

Sampling Errors for a Binomial Distribution (95% confidence interval)

                       Distribution in the population
Sample size    50/50    60/40    70/30    80/20    90/10
100            10.0%     9.8%     9.2%     8.0%     6.0%
200             7.1%     6.9%     6.5%     5.7%     4.2%
400             5.0%     4.9%     4.6%     4.0%     3.0%
1000            3.2%     3.1%     2.9%     2.5%     1.9%
1500            2.6%     2.5%     2.4%     2.1%     1.5%
2000            2.2%     2.2%     2.0%     1.8%     1.3%

6b. In a survey of 100 randomly chosen downhill skiers, the average spending on the trip was $500. If the standard deviation in the sample was $400, compute a 95% confidence interval for the spending estimate in the population.

7. For each of the following research problems, choose what you feel is the best approach from the list of designs. Briefly identify the study population and explain why you chose the given design.
Designs to choose from:
Household survey (telephone, mailed, or personal interview)
On-site survey (self-administered or personal interview)
Observation
Experiment
Secondary data analysis
Qualitative approach (focus group, in-depth interview, or case study)
a. MSU wishes to assess the percentage of bicyclists on campus wearing helmets.
b. A Lake Michigan marina wants to assess the potential market for a new full-service marina.
c. A Lake Michigan marina wants to see how many of its existing slip holders would stay with the marina if it raises its rates by 20% next year.
d. East Lansing wishes to determine community attitudes about a proposed new swimming facility.
e. MSU wants to determine if SAT scores are a good predictor of performance in college, as judged by grade point averages.

ANSWERS TO SAMPLE EXAM

A.
1. F – managers must understand enough about research to communicate their needs to researchers, participate in studies, and interpret and evaluate reports.
2. F – reliability, not validity, refers to the repeatability of measures.
3. T – most attitude scales, including very important, somewhat important, … etc., are ordinal.
5. F – race/ethnicity is nominal; the categories are unordered.
6. F – the subjects are confidential, not anonymous: we can associate responses with an individual, but don't.
7. F – correlations are for interval-scale variables; for nominal variables use a chi square test.
8. F – chi square tests for patterns (association) in a crosstab; t-tests or F-tests (ANOVA) compare means.
9. F – reject the null hypothesis if SIG < .05. Think of the reported significance level as the probability of getting a sample that looks like this (or more extreme) if the null hypothesis were true. A small probability leads us to reject the null hypothesis.
10. F – descriptive statistics describe the sample; inferential statistics test hypotheses and infer to the population.

B. 1. B, 2. D, 3. D, 4. B, 5. C, 6. A, 7. B, 8. B, 9. B, 10. A

C. dependent, hypothesis, experiment

D.
1. See lecture outlines, page 34. Examples: race is nominal (N); any attitude (SA, A, N, D, SD) is ordinal, as is any interval characteristic measured in broad categories; age, height, weight, days participated in an activity, and amount spent are interval (strictly, ratio, since each has an absolute zero).
2. a. Reliability measures the repeatability of a measure (random error); validity measures the absence of systematic errors or bias – are we measuring what we think we are?
b. Probability sample – each person has a known chance of selection; otherwise the sample is nonprobability.
c. Descriptive statistics describe characteristics of the sample (frequencies, averages), while inferential statistics test hypotheses or generalize from the sample to the population.
d. A statistic is a summary measure of a variable in a sample; a parameter is a summary measure of a variable in a population.
e. A survey measures things as they are; an experiment manipulates at least one variable to test its effect on others.
f. The dependent variable is the one you want to explain or predict; independent variables are the ones that do the explaining.
g. Anonymity – you can't identify respondents; confidentiality – you can, but won't.
h. The standard deviation is a measure of the variation (spread) of a variable in a population; the standard error (SE) is the standard deviation of the sampling distribution, i.e., the spread of the means of different samples of the same size drawn at random from a given population. SE = SD / sqrt(n), where n is the sample size.
i. These are errors that can occur in an experiment: pre-measurement is the effect of the pre-test on the post-test; history covers changes in any variable other than the treatment that could change the measured effect; interaction captures an exaggerated or dampened effect of the treatment due to an interaction with the pre-test.
3. Some good answers: a) how big was the sample, b) how did they measure image (reliably and validly?), c) is the sample representative of the intended population, d) what was the response rate (non-response bias?), e) who did the study and who was the sponsor, f) is the report written clearly and objectively, g) was the survey done by phone, mail, or personal interview?
4. Sample answers; others are possible.
a. Determine the market area of the park; compare the activities of campers and day users. Note that these follow directly from the problem statement.
b. Individuals (16 years and older) in vehicles leaving Sleepy Hollow State Park between Jan 1 and Dec 31, 2002.
c. On-site survey: a short personal interview at the exit gate asking zipcode of residence, whether they were camping, and what activities they did on this visit.
d. Zipcode of residence, camping or not on this visit, participation in fishing; maybe gender, whether they brought a boat.
e. The main problem will be obtaining a representative sample across time of day, day of week, and season. Stratify the sample by these time periods and use visitor use counts to weight the results to the total population.
5. Standard pre-test/post-test with control group.
a. Diagram (MB = measure before, i.e., pre-test; MA = measure after, i.e., post-test; X = treatment):
   Experimental group:   MBe   X   MAe
   Control group:        MBc       MAc
b. The treatment is the micro-lab (X); the experimental group is the section with the computer lab and the control group is the section without it. The measure of effect is the change in the experimental group minus the change in the control group = (MAe - MBe) - (MAc - MBc).
c. Randomly assign students to the two groups, e.g., by picking every other student on the alphabetical class list to be in the experimental group (strictly a systematic split; flipping a coin for each student gives true random assignment).
d. (70 - 35) - (50 - 35) = 35 - 15 = 20: a twenty-point increase due to the micro-lab.
6a. The sampling error is 6.5% (n = 200 row, 70/30 column), so the 95% CI is 30% plus or minus 6.5% = (23.5%, 36.5%).
6b. Standard error = standard deviation / sqrt(n) = 400 / sqrt(100) = 400 / 10 = 40. The 95% confidence interval is two standard errors either side of the mean = $500 plus or minus $80 = ($420, $580).
7. Often there is more than one reasonable alternative; the key is explaining your answer.
a. Observation, which works whenever the variable is easily observed; pick random locations and times to observe.
b. Household survey of a list of registered boaters (large boats within X miles), or possibly an "on-site" survey of boaters at nearby marinas; you might also suggest a set of focus groups of current large-boat owners/marina slip renters in the area for a more qualitative assessment.
c. On-site survey of current slip renters at the marina. An experiment would be bolder but likely not feasible/wise: e.g., send notices of price increases to half of the slip renters and ask who plans to return, then compare with the other half, who are given the current rate.
d. Household telephone survey of East Lansing residents for quantitative estimates; or a set of focus groups or community forums/workshops for a qualitative assessment of attitudes.
e. Secondary data using records of students for the past five years – inexpensive.

Glossary of Research and Evaluation Terms (from Monette, Sullivan and DeJong)

Anonymity: a situation in which no one, including the researcher, can link individuals' identities to their responses or behaviors that serve as research data.
Applied Research: designed with a practical outcome in mind and with the assumption that some group or society as a whole will gain specific benefits from the research.
Area Sampling (cluster sampling): a multistage sampling technique that involves moving from larger clusters of units to smaller and smaller ones until the unit of analysis, such as the household or individual, is reached; more generally, sampling by dividing the population into groups or clusters and drawing samples from only some of the groups.
Available Data (secondary data): observations collected by someone other than the investigator for purposes that differ from the investigator's but that are available to be analyzed.
Baseline: a series of measurements of a client's condition prior to treatment that is used as a basis for comparison with the client's condition after treatment is implemented.
Basic Research: research conducted for the purpose of advancing knowledge about human behavior with little concern for the immediate or practical benefits that might result.
Bivariate Statistics: statistics that describe the relationship between two variables.
Blocking: a two-stage system of assigning subjects to experimental and control groups whereby subjects are first aggregated into blocks according to one or more key variables; members of each block are then randomly assigned to experimental and control groups.
Causality: the situation where an independent variable is the factor (or one of several factors) that produces variation in a dependent variable.
Closed-Ended Questions: questions that provide respondents with a fixed set of alternatives from which they are to choose.
Coding: the categorizing of behavior into a limited number of categories.
Common Sense: practical judgments based on the experiences, wisdom, and prejudices of a people.
Concepts: mental constructs or images developed to symbolize ideas, persons, things, or events.
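The Blocking entry describes a two-stage assignment procedure, which is also one answer to sample exam question 5c (how to assign students to the two groups). Below is a minimal Python sketch, assuming each subject is a dictionary with a unique `id` and a blocking variable; the field names `id` and `gpa_band` are hypothetical. Within each block the order is shuffled and subjects alternate between groups, so every block is split as evenly as possible.

```python
import random
from collections import defaultdict


def blocked_assignment(subjects, block_key, seed=None):
    """Randomly assign subjects to 'experimental' or 'control' within blocks.

    subjects:  list of dicts, each with a unique "id" plus the blocking variable
    block_key: name of the blocking variable (a hypothetical field name)
    seed:      optional seed so an assignment can be reproduced
    """
    rng = random.Random(seed)

    # Stage 1: aggregate subjects into blocks by the key variable.
    blocks = defaultdict(list)
    for subject in subjects:
        blocks[subject[block_key]].append(subject)

    # Stage 2: within each block, shuffle and alternate between the groups.
    # A random starting group decides which group gets the extra member
    # when a block has an odd number of subjects.
    groups = ("experimental", "control")
    assignment = {}
    for members in blocks.values():
        rng.shuffle(members)
        start = rng.randrange(2)
        for i, subject in enumerate(members):
            assignment[subject["id"]] = groups[(start + i) % 2]
    return assignment


# Hypothetical class list blocked on prior GPA band (high/low).
students = [{"id": i, "gpa_band": "high" if i < 6 else "low"} for i in range(10)]
print(blocked_assignment(students, "gpa_band", seed=1))
```

Blocking guarantees balance on the blocking variable itself; randomizing within blocks is what spreads all other characteristics evenly between the groups on average.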
Concurrent Validity: a type of criterion validity in which the results of a newly developed measure are correlated with results of an existing measure.
Confidentiality: ensuring that information or responses will not be publicly linked to specific individuals who participate in research.
Construct Validity: a complex approach to establishing the validity of measures involving relating the measure to a complete theoretical framework, including all the concepts and propositions that the theory comprises.
Content Analysis: a method of transforming the contents of documents from a qualitative, unsystematic form to a quantitative, systematic form.
Content Validity: an approach to establishing the validity of measures involving assessing the logical relationship between the proposed measure and the theoretical definition of the variable.
Continuous Variables: variables that theoretically have an infinite number of values.
Control Group: the subjects in an experiment who are not exposed to the experimental stimulus.
Control Variables: variables whose value is held constant in all conditions of an experiment.
Convenience Samples: samples composed of those elements that are readily available or convenient to the researcher.
Cost-Benefit Analysis: an approach to program evaluation wherein program costs are related to program benefits expressed in dollars.
Cost-Effectiveness Analysis: an approach to program evaluation wherein program costs are related to program effects, with effects measured in the units in which they naturally occur.
Cover Letter: a letter that accompanies a mailed questionnaire and serves to introduce and explain it to the recipient.
Criterion Validity: a technique for establishing the validity of measures that involves demonstrating a correlation between the measure and some other standard.
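Concurrent and criterion validity both rest on a correlation between a measure and some standard. For interval/ratio scores the usual choice is Pearson's r; a minimal Python sketch follows, with hypothetical scores for a new measure and an established one. The same calculation is commonly used for test-retest reliability (correlating the first and second administrations of the same measure).

```python
import math


def pearson_r(x, y):
    """Pearson correlation between two equal-length lists of interval/ratio scores."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length lists with at least two values")
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    # Sum of cross-products and sums of squared deviations from each mean.
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


# Hypothetical scores for six respondents on a new satisfaction measure
# and on an established measure of the same concept.
new_measure = [12, 15, 9, 20, 17, 11]
existing_measure = [30, 36, 25, 44, 41, 27]
print(round(pearson_r(new_measure, existing_measure), 2))
```

An r close to +1 supports concurrent validity; a weak r suggests the new measure is not capturing the same concept as the standard.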
Cross Sectional Research: research based on data collected at one point in time.
Data Analysis: the process of placing observations in numerical form and manipulating them according to their arithmetic properties to derive meaning from them.
Data Archives: a national system of data libraries that lend sets of data, much as ordinary libraries lend books.
Deductive Reasoning: inferring a conclusion from more abstract premises or propositions.
Descriptive Research: research that attempts to discover facts or describe reality.
Descriptive Statistics: procedures that assist in organizing, summarizing, and interpreting the sample data we have at hand.
Dimensional Sampling: a sampling technique designed to enhance the representativeness of small samples by specifying all important variables and choosing a sample that contains at least one case to represent all possible combinations of variables.
Direct Costs: a proposed program budget or actual program expenditures.
Discrete Variables: variables with a finite number of distinct and separate values.
Double Blind Experiment: an experiment conducted in such a way that neither the subjects nor the experimenters know which groups are in the experimental and which are in the control condition.
Ecological Fallacy: inferring something about individuals from data collected about groups.
Ethics: the responsibilities that researchers bear toward those who participate in research, those who sponsor research, and those who are potential beneficiaries of research.
Evaluability Assessment: a preliminary investigation into a program prior to its evaluation to determine those aspects of the program that are evaluable.
Evaluation Research: the use of scientific research methods to plan intervention programs, to monitor the implementation of new programs and the operation of existing programs, and to determine how effectively programs or clinical practices achieve their goals.
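The Descriptive Statistics entry, together with key point 8 (levels of measurement dictate which statistics are appropriate) and short-answer question 1a, can be illustrated with a small Python sketch. It follows one common convention in which each level permits its own statistics plus those of the levels below it. Textbooks differ on whether range and the inter-quartile deviation belong with ordinal or interval data, so treat that grouping as an assumption and check it against the lecture outline. The data values are hypothetical.

```python
import statistics
from collections import Counter

LEVELS = ["nominal", "ordinal", "interval", "ratio"]


def describe(values, level):
    """Descriptive statistics permitted at a given level of measurement.

    Each level also permits the statistics of the levels below it. Ordinal
    values must be coded as ordered numbers (e.g., 1 = strongly disagree
    ... 5 = strongly agree) so that sorting follows the category order.
    """
    rank = LEVELS.index(level)
    summary = {
        "frequency": dict(Counter(values)),  # nominal: counts per category
        "mode": statistics.mode(values),
    }
    if rank >= 1:  # ordinal: categories are ordered, so position is meaningful
        summary["median"] = statistics.median_low(values)
    if rank >= 2:  # interval/ratio: equal spacing, so arithmetic is meaningful
        q1, _, q3 = statistics.quantiles(values, n=4)
        summary["mean"] = statistics.mean(values)
        summary["standard_deviation"] = statistics.stdev(values)
        summary["range"] = max(values) - min(values)
        summary["inter_quartile_deviation"] = (q3 - q1) / 2
    return summary


print(describe(["camping", "fishing", "camping"], "nominal"))
print(describe([1, 2, 2, 4, 5], "ordinal"))
print(describe([120, 350, 500, 610, 900], "ratio"))
```

`median_low` is used so the ordinal median is always an actual category code rather than a value halfway between two categories.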
Experiential Knowledge: knowledge gained through firsthand observation of events and based on the assumption that truth can be achieved through personal experience.
Experimental Group: those subjects who are exposed to the experimental stimulus or treatment.
Experimental Stimulus or Treatment: the independent variable in an experiment that is manipulated by the experimenter to assess its effect on behavior.
Experimental Variability: variation in a dependent variable produced by an independent variable.
Experimentation: a controlled method of observation in which the value of one or more independent variables is changed in order to assess its causal effect on one or more dependent variables.
Explanatory Research: research with the goal to determine why or how something occurs.
External Validity: the extent to which causal inferences made in an experiment can be generalized to other times, settings, or people.
Extraneous Variability: variation in a dependent variable from any source other than an experimental stimulus.
Face Validity: another name for content validity. See Content Validity.
Field Experiments: experiments conducted in naturally occurring settings as people go about their everyday affairs.
Field Notes: detailed, descriptive accounts of observations made in a given setting.
Formative Evaluation Research: evaluation research that focuses on the planning, development, and implementation of a program.
Fraud (scientific): the deliberate falsification, misrepresentation, or plagiarizing of data, findings, or the ideas of others.
Grant: the provision of money or other resources to be used for either research or service delivery purposes.
Guttman Scale: a measurement scale in which the items have a fixed progressive order and that has the characteristic of reproducibility.
Human Services: professions with the primary goal of enhancing the relationship between people and societal institutions so that people may maximize their potential.
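The Experimental Variability and Extraneous Variability entries explain why the pre-test/post-test with control design subtracts the control group's change: under random assignment, that change estimates extraneous variability (history, pre-measurement), leaving the change attributable to the treatment. A minimal Python sketch using the MBe/MAe/MBc/MAc notation from the answer to sample question 5; the function name is hypothetical.

```python
def experimental_effect(mbe, mae, mbc, mac):
    """Treatment effect in a pre-test/post-test design with a control group.

    mbe, mae: experimental group mean before and after the treatment (MBe, MAe)
    mbc, mac: control group mean before and after (MBc, MAc)
    Assuming random assignment, the control group's change estimates
    extraneous variability, so subtracting it isolates the treatment's effect.
    """
    return (mae - mbe) - (mac - mbc)


# Worked example from sample exam question 5d.
print(experimental_effect(mbe=35, mae=70, mbc=35, mac=50))  # 20
```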
Hypotheses: testable statements of presumed relationships between two or more concepts.
Independent Variable: the presumed active or causal variable in a relationship.
Index: a measurement technique that combines a number of items into a composite score.
Indicator: an observation assumed to be evidence of the attributes or properties of some phenomenon.
Inductive Reasoning: inferring something about a whole group or class of objects from knowledge of one or a few members of that group or class.
Inferential Statistics: procedures that allow us to make generalizations from sample data to the populations from which the samples were drawn.
Informed Consent: telling potential research participants about all aspects of the research that might reasonably influence their decision to participate.
Internal Validity: an issue in experimentation concerning whether the independent variable actually produces the effect it appears to have on the dependent variable.
Interval Measures: measures that classify observations into mutually exclusive categories with an inherent order and equal spacing between the numbers produced by a measure.
Interview: a technique in which an interviewer reads questions to respondents and records their verbal responses.
Interview Schedule: a document, used in interviewing, similar to a questionnaire, that contains instructions for the interviewer, specific questions in a fixed order, and transition phrases for the interviewer.
Item: a single indicator of a variable, such as an answer to a question or an observation of some behavior or characteristic.
Judgmental Sampling: a non-probability sampling technique in which investigators use their judgement and prior knowledge to choose people for the sample who best serve the purposes of the study.
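As an example of inferential statistics, the sample answers recommend the chi square test for a relationship between two nominal variables (true/false answers 7 and 8). Below is a minimal Python sketch of the Pearson chi-square statistic for a crosstab. The counts are hypothetical, loosely echoing the Sleepy Hollow campers-versus-day-users question. The critical value 3.841 is the standard tabled chi-square value for 1 degree of freedom at the .05 level; exceeding it corresponds to SIG < .05, so the null hypothesis of no relationship is rejected.

```python
def chi_square(table):
    """Pearson chi-square statistic and degrees of freedom for a crosstab.

    table: list of rows, each a list of observed counts (nominal x nominal).
    """
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    n = sum(row_totals)
    statistic = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            # Count expected in this cell if the two variables were independent.
            expected = row_totals[i] * col_totals[j] / n
            statistic += (observed - expected) ** 2 / expected
    df = (len(table) - 1) * (len(table[0]) - 1)
    return statistic, df


# Hypothetical counts: rows = campers, day users; columns = fished, did not fish.
counts = [[30, 20],
          [20, 30]]
stat, df = chi_square(counts)
CRITICAL_05_DF1 = 3.841  # tabled chi-square value for df = 1 at the .05 level
print(stat, df, stat > CRITICAL_05_DF1)  # 4.0 1 True
```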
Laboratory Experiments: experiments conducted in artificial settings constructed in such a way that selected elements of the natural environment are simulated and features of the investigation are controlled.
Levels of Measurement: rules that define permissible mathematical operations on a given set of numbers produced by a measure. See Nominal, Ordinal, Interval, and Ratio Measures.
Likert Scale: a measurement scale consisting of a series of statements followed by five response alternatives, typically: strongly agree, agree, no opinion, disagree, or strongly disagree.
Longitudinal Research: research based on data gathered over an extended time period.
Matching: a process of assigning subjects to experimental and control groups in which each subject is paired with a similar subject in the other group.
Measurement: the process of describing abstract concepts in terms of specific indicators by the assignment of numbers or other symbols to these indicants in accordance with rules.
Measurement Scale: a measurement device allowing responses to a number of items to be combined to form a composite score on a variable.
Measures of Association: statistics that describe the strength of relationships between variables.
Measures of Central Tendency: statistics, also known as averages, that summarize distributions of data by locating the "typical" or "average" value.
Measures of Dispersion: statistics that indicate how dispersed or spread out the values of a distribution are.
Misconduct (scientific): scientific fraud, plus such activities as carelessness or bias in recording or reporting data, mishandling data, and incomplete reporting of results.
Missing Data: incomplete data found in available data sets.
Multidimensional Scaling: a scaling technique designed to measure complex variables composed of more than one dimension.
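The Likert Scale and Measurement Scale entries describe combining several items into one composite score. A minimal Python sketch, assuming responses are coded 1 (strongly disagree) to 5 (strongly agree) and that negatively worded items are reverse-coded (6 minus the code), a common practice not stated in the glossary; the item names are hypothetical. Summing treats the items as roughly interval, a common but debated practice; each item on its own remains ordinal, as true/false answer 3 notes.

```python
# Codes for the five Likert response alternatives listed in the glossary
# (the 1-5 direction is an assumption).
LIKERT_CODES = {
    "strongly disagree": 1,
    "disagree": 2,
    "no opinion": 3,
    "agree": 4,
    "strongly agree": 5,
}


def likert_score(responses, reverse_items=frozenset()):
    """Combine one respondent's Likert items into a composite score.

    responses:     dict mapping item name -> response label
    reverse_items: names of negatively worded items; these are recoded as
                   6 - code so that a high score always means the same thing
    """
    total = 0
    for item, label in responses.items():
        code = LIKERT_CODES[label.lower()]
        if item in reverse_items:
            code = 6 - code
        total += code
    return total


# Hypothetical three-item scale of park satisfaction.
answers = {
    "park_is_clean": "agree",              # 4
    "park_feels_unsafe": "disagree",       # 2, reverse-coded to 4
    "staff_are_helpful": "strongly agree",  # 5
}
print(likert_score(answers, reverse_items={"park_feels_unsafe"}))  # 13
```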
Multistage Sampling: a multiple-tiered sampling technique that involves moving from larger clusters of units to smaller and smaller ones until the unit of analysis, such as the household or individual, is reached.
Multi-trait Multi-method Approach to Validity: a particularly complex form of construct validity involving the simultaneous assessment of numerous measures and numerous concepts through the computation of inter-correlations.
Multivariate Statistics: statistics that describe the relationships among three or more variables.
Needs Assessment: collecting data to determine how many people need particular services and to assess the level of services or personnel that already exist to fill that need.
Nominal Definitions: verbal definitions in which one set of words or symbols is used to stand for another set of words or symbols.
Nominal Measures: measures that classify observations into mutually exclusive categories but with no ordering to the categories.
Non-probability Samples: samples in which the probability of each population element being included in the sample is unknown.
Non-reactive Observation: observation in which those under study are not aware that they are being studied and the investigator does not change their behavior by his or her presence.
Observational Techniques: the collection of data through direct visual or auditory experience of behavior.
Open-Ended Questions: questions without a fixed set of alternatives, leaving respondents completely free to formulate their own responses.
Operational Definitions: definitions that indicate the precise procedures or operations to be followed in measuring a concept.
Opportunity Costs: the value of foregone opportunities incurred by funding one program as opposed to some other program.
Ordinal Measures: measures that classify observations into mutually exclusive categories that have an inherent order to them.
Panel Study: research in which data are gathered from the same people at different times.
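The Multistage Sampling entry (and Area Sampling above) can be sketched as a two-stage draw: first a simple random sample of clusters, then a simple random sample of units inside each chosen cluster. The Python below is a minimal sketch, assuming the sampling frame is a dictionary from cluster id to a list of units; the block and household names are hypothetical.

```python
import random


def two_stage_sample(clusters, n_clusters, n_per_cluster, seed=None):
    """Two-stage cluster sample.

    clusters: dict mapping a cluster id (e.g., a census block) to a list of
              units (e.g., households) inside it
    Stage 1 draws a simple random sample of clusters; stage 2 draws a simple
    random sample of units within each selected cluster.
    """
    rng = random.Random(seed)
    chosen = rng.sample(sorted(clusters), n_clusters)  # stage 1: clusters
    sample = []
    for cluster_id in chosen:
        units = clusters[cluster_id]
        # Stage 2: units within the cluster (all of them if the cluster is small).
        sample.extend(rng.sample(units, min(n_per_cluster, len(units))))
    return sample


# Hypothetical frame: 20 blocks with 10 households each.
frame = {f"block{b:02d}": [f"block{b:02d}-hh{h}" for h in range(10)] for b in range(20)}
print(two_stage_sample(frame, n_clusters=5, n_per_cluster=3, seed=42))
```

With equal-sized clusters, as here, every household has the same chance of selection (5/20 × 3/10 = 0.075). With clusters of unequal size, equal probabilities would require selecting clusters with probability proportional to size or weighting the results.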
Parameter: a summary description of a variable in a population.

Participant Observation: a method in which the researcher is a part of, and participates in, the activities of the people, group, or situation that is being studied.

Physical Traces: objects or evidence that result from people's activities that can be used as data to test hypotheses.

Pilot Study: a small-scale trial run of all procedures planned for a research project.

Population: all possible cases of what we are interested in studying.

Positivism: the perspective that human behavior should be studied only in terms of behavior that can be observed and recorded by means of some objective technique.

Predictive Research: research that attempts to make projections about what will occur in the future or in other settings.

Predictive Validity: a type of criterion validity wherein scores on a measure are used to predict some future state of affairs.

Pre-experimental Designs: crude experimental designs that lack the necessary controls of the threats to internal validity.

Pretest: a preliminary application of the data gathering technique to assess the adequacy of the technique.

Privacy: the ability to control when and under what conditions others will have access to your beliefs, values, or behavior.

Probability Samples: samples in which each element in the population has a known chance of being selected into the sample.

Probes: follow-up questions used during an interview to elicit clearer and more complete responses.

Propositions: statements about the relationship between elements in a theory.

Pure Research: research conducted for the purpose of advancing our knowledge about human behavior with little concern for any immediate or practical benefits that might result.

Purposive Sampling: a non-probability sampling technique wherein investigators use their judgment and prior knowledge to choose people for the sample who would best serve the purposes of the study.
Qualitative Research: research that focuses on data in the form of words, pictures, descriptions, or narratives.

Quantitative Research: research that uses numbers, counts, and measures of things.

Quasi-Experimental Designs: designs that approximate experimental control in non-experimental settings.

Questionnaire: a set of written questions that people respond to directly on the form itself without the aid of an interviewer.

Quota Sampling: a type of non-probability sampling that involves dividing the population into various categories and determining the number of elements to be selected from each category.

Random Assignment: a process for assigning subjects to experimental and control groups that relies on probability theory to equalize the groups.

Random Errors: measurement errors that are neither consistent nor patterned.

Ratio Measures: measures that classify observations into mutually exclusive categories with an inherent order, equal spacing between the categories, and an absolute zero point.

Reactivity: the degree to which the presence of a researcher influences the behavior being observed.

Reliability: the ability of a measure to yield consistent results each time it is applied.

Representative Sample: a sample that accurately reflects the distribution of relevant variables in the target population.

Research Design: a detailed plan outlining how a research project will be conducted.

Response Bias: responses to questions that are shaped by factors other than the person's true feelings, intentions, or beliefs.

Response Rate: the percentage of a sample that completes and returns a questionnaire or agrees to be interviewed.

Sample: one or more elements selected from a population.

Sampling Error: the extent to which the values of a sample differ from those of the population from which it was drawn.

Sampling Frame: a listing of all the elements in a population.
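The Random Assignment entry can be illustrated with a short Python sketch. It assumes a hypothetical list of subject IDs, shuffles them so that every ordering is equally likely, and splits the shuffled list in half. Chance, not the researcher, then decides who receives the treatment. The function and subject names are illustrative, not part of any specific study.

```python
import random

def randomly_assign(subjects, seed=None):
    """Hypothetical helper: split subjects at random into two (nearly) equal groups."""
    rng = random.Random(seed)
    shuffled = list(subjects)      # copy so the caller's list is left untouched
    rng.shuffle(shuffled)          # every ordering of subjects is equally likely
    half = len(shuffled) // 2
    return {"experimental": shuffled[:half], "control": shuffled[half:]}

groups = randomly_assign([f"subject{i}" for i in range(10)], seed=42)
print(len(groups["experimental"]), len(groups["control"]))  # 5 5
```

Random assignment (who gets the treatment) is distinct from random sampling (who gets into the study). This sketch addresses only the former.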
Scale: a measurement technique, similar to an index, that combines a number of items into a composite score.

Science: a method of obtaining objective knowledge about the world through systematic observation.

Secondary Data Analysis: the reanalysis of data previously collected for some other research project.

Semantic Differential: a scaling technique that involves respondents rating a concept on a scale between a series of polar opposite adjectives.

Simple Random Sampling: a sampling technique wherein the target population is treated as a unitary whole and each element has an equal probability of being selected for the sample.

Single-Subject Designs: quasi-experimental designs featuring continuous or nearly continuous measurement of the dependent variable on a single research subject over a time interval that is divided into a baseline phase and one or more additional phases during which the independent variable is manipulated; experimental effects are inferred by comparing the subject's responses across the baseline and intervention phases.

Snowball Sampling: a type of non-probability sampling characterized by a few cases of the type we wish to study leading to more cases, which, in turn, lead to still more cases until a sufficient sample is achieved.

Social Research: a systematic examination (or re-examination) of empirical data collected by someone firsthand, concerning the social or psychological forces operating in a situation.

Standard Deviation: a measure of spread or variation in a distribution; it equals the square root of the average squared deviation from the sample mean. The standard deviation squared is called the variance of the distribution.

Standard Error of the Mean: the standard deviation of the sampling distribution of the sample mean. The standard error is the standard deviation in the population divided by the square root of the sample size. A 95% confidence interval around the estimate of the population mean is the sample mean plus or minus 1.96 times the standard error.
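The Standard Deviation and Standard Error of the Mean entries describe a chain of arithmetic that a short Python sketch can make concrete. It follows the glossary's wording: the standard deviation divides the squared deviations by n (`statistics.pstdev`), the standard error is that standard deviation over the square root of n, and the 95% interval is the mean plus or minus 1.96 standard errors. The data values and the `summarize` helper are hypothetical.

```python
import math
import statistics

def summarize(values, z=1.96):
    """Hypothetical helper: mean, SD, variance, standard error, and a 95% CI."""
    n = len(values)
    mean = statistics.fmean(values)
    # Glossary definition: square root of the average squared deviation (divide by n)
    sd = statistics.pstdev(values)
    variance = sd ** 2                      # the standard deviation squared
    se = sd / math.sqrt(n)                  # standard error of the mean
    ci95 = (mean - z * se, mean + z * se)   # mean plus or minus 1.96 standard errors
    return {"n": n, "mean": mean, "sd": sd, "variance": variance, "se": se, "ci95": ci95}

stats = summarize([2, 4, 4, 4, 5, 5, 7, 9])
print(stats["mean"], stats["sd"], stats["variance"])  # 5.0 2.0 4.0
```

Because the population standard deviation is usually unknown, many texts estimate it from the sample with the n - 1 divisor (`statistics.stdev`) before computing the standard error. The divide-by-n version is used here only to match the glossary's definition.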
Statistic: a summary description of a variable in a sample.

Statistics: procedures for assembling, classifying, and tabulating numerical data so that some meaning or information is derived.

Stratified Sampling: a sampling technique wherein the population is subdivided into strata, with a separate sub-sample drawn from each stratum.

Summated Rating Scales: scales in which a respondent's score is determined by summing the number of questions answered in a particular way.

Summative Evaluation Research: evaluation research that assesses the effectiveness and efficiency of programs and the extent to which program effects are generalizable to other settings and populations.

Survey: a data collection technique in which information is gathered from individuals, called respondents, by having them respond to questions.

Systematic Errors: measurement errors that are consistent and patterned.

Systematic Sampling: a variant of simple random sampling wherein every nth element of the sampling frame, beginning from a randomly chosen starting point, is selected for the sample.

Theory: a set of interrelated propositions or statements, organized into a deductive system, that offers an explanation of some phenomenon.

Thurstone Scale: a measurement scale consisting of a series of items with a predetermined scale value to which respondents indicate their agreement or disagreement.

Time Sampling: a sampling technique used in observational research in which observations are made only during specified, pre-selected times.

Traditional Knowledge: knowledge based on custom, habit, and repetition.

Trend Study: research in which data are gathered from different people at different times.

True Experimental Designs: experimental designs that use randomization, control groups, and other techniques to control threats to internal validity.

Unidimensional Scale: a multiple-item scale that measures one, and only one, variable.

Units of Analysis: the specific objects or elements whose characteristics we wish to describe or explain and about which data are collected.
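The Systematic Sampling and Stratified Sampling entries can be compared side by side in a minimal Python sketch. It assumes the sampling frame is an ordinary list. The systematic version picks a random start and then takes every k-th element, where k is the frame size divided by the desired sample size. The stratified version groups elements by a caller-supplied, hypothetical `stratum_of` function and draws a separate simple random sample from each stratum. All names and sizes are illustrative.

```python
import random

def systematic_sample(frame, n, seed=None):
    """Hypothetical helper: every k-th element after a random start, k = len(frame) // n."""
    rng = random.Random(seed)
    k = len(frame) // n            # sampling interval
    start = rng.randrange(k)       # random start within the first interval
    return [frame[i] for i in range(start, len(frame), k)][:n]

def stratified_sample(frame, stratum_of, n_per_stratum, seed=None):
    """Hypothetical helper: separate simple random sample drawn from each stratum."""
    rng = random.Random(seed)
    strata = {}
    for element in frame:
        strata.setdefault(stratum_of(element), []).append(element)
    sample = []
    for label in sorted(strata):   # sorted so results are reproducible with a seed
        members = strata[label]
        sample.extend(rng.sample(members, min(n_per_stratum, len(members))))
    return sample

# Hypothetical frame of 100 numbered elements, and 3 strata defined by remainder mod 3
sys_sample = systematic_sample(list(range(100)), 10, seed=3)
strat_sample = stratified_sample(list(range(30)), lambda x: x % 3, 4, seed=3)
print(len(sys_sample), len(strat_sample))  # 10 12
```

One design caution: if the frame has a periodic ordering that lines up with the interval k, a systematic sample can be biased. Shuffling or checking the frame's order beforehand guards against this.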
Univariate Statistics: statistics that describe the distribution of a single variable.

Unobtrusive Observation: observation in which those under study are not aware that they are being studied and the investigator does not change their behavior by his or her presence.

Validity: the degree to which a measure accurately reflects the theoretical meaning of a variable.

Variables: operationally defined concepts that can take on more than one value.

Verification: the process of subjecting hypotheses to empirical tests to determine whether a theory is supported or refuted.

Verstehen: the effort to view and understand a situation from the perspective of the people actually in that situation.
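The Univariate Statistics entry can be illustrated with Python's standard library. This sketch assumes two hypothetical variables: a nominal set of survey responses and a list of ages. The frequency distribution and mode apply to any level of measurement. The mean and median are computed only when the caller marks the data as numeric, because they require ordered, numerically coded values. The `univariate_summary` helper and the data are illustrative.

```python
from collections import Counter
import statistics

def univariate_summary(values, numeric=True):
    """Hypothetical helper describing the distribution of a single variable."""
    summary = {
        "frequencies": Counter(values),    # frequency distribution (any level of measurement)
        "mode": statistics.mode(values),   # most common value; meaningful even for nominal data
    }
    if numeric:
        # Mean and median assume numeric values (the mean needs interval or ratio data)
        summary["mean"] = statistics.mean(values)
        summary["median"] = statistics.median(values)
    return summary

responses = ["agree", "neutral", "agree", "disagree", "agree", "neutral"]  # hypothetical nominal data
ages = [19, 22, 20, 35, 21, 20, 24]                                       # hypothetical ratio data

nominal = univariate_summary(responses, numeric=False)
summary = univariate_summary(ages)
print(nominal["frequencies"].most_common())  # [('agree', 3), ('neutral', 2), ('disagree', 1)]
```

With these made-up ages, the single high value (35) pulls the mean above the median. This illustrates why the median is often reported for skewed distributions.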