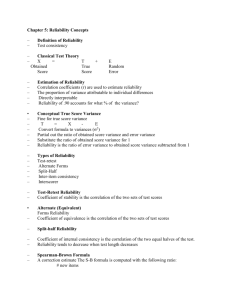

Basics of Alpha
