Between, Random, and Fixed Effects Models

advertisement

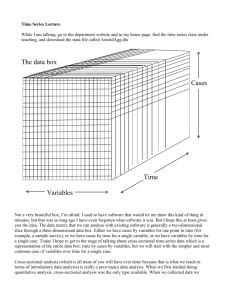

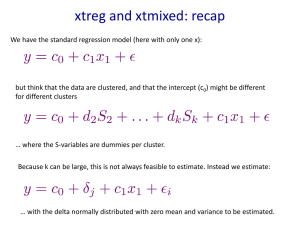

Taken from the Stata web page http://www.stata.com/support/faqs/stat/xt.html

Question

I understand the basic differences between a fixed-effects and a random-effects model for a panel dataset, but what is the "between estimator"? The manual explains the command, but I cannot figure out what would lead one to choose (or not choose) the between estimator.

Answer

Let's start off by explaining cross-sectional time-series models. One usually writes cross-sectional time-series models as

    y_it = X_it*b + (complicated error term)

and explores different ways of writing the complicated error term. In this discussion, I want to avoid that, so let's just write

    y_it = X_it*b + noise

I want to put the issue of noise aside and focus on b because, it turns out, b has some surprising meanings. Let's focus on one of the X variables, say, x1:

    y_it = x1_it*b1 + x2_it*b2 + ... + noise

b1 says that an increase in x1 of one unit leads me to expect, in all cases, that y will increase by b1. The emphasis here is on "in all cases": I expect the same difference in y if

1. I observe two different subjects with a one-unit difference in x1 between them, and
2. I observe one subject whose x1 value increases by one unit.

Some variables might act like that, but there is no reason to expect that all variables will. For example, pretend that y is income and x1 is "lives in the South of the United States":

1. If I compare two different people, one who lives in the East (x1=0) and another who lives in the South (x1=1), I expect the earnings of the person living in the South to be lower because, on average, all prices and wages are lower in the South. That is, I expect the coefficient on x1, b1, to be less than 0.
2. On the other hand, if I observe a person living in the East (x1=0) who moves to the South (x1=1), I expect that person's earnings to increase, or why else would that person move? That is, I expect b1>0.
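
The South example can be made concrete with a small simulation. What follows is a minimal Python/NumPy sketch, not Stata code and not part of the original FAQ. The data-generating numbers (a cross-sectional effect of -2 and a within-person effect of +1), the panel size, and all variable names are assumptions chosen purely for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)

n_people, n_periods = 500, 6      # hypothetical balanced panel
b_between, b_within = -2.0, 1.0   # assumed effects, chosen for illustration

# x1 = 1 if the person lives in the South in that period, else 0.
# Each person has their own propensity to live in the South, so both the
# person averages and the deviations from them vary.
propensity = rng.uniform(0.1, 0.9, size=n_people)
x1 = (rng.uniform(size=(n_people, n_periods)) < propensity[:, None]).astype(float)

x1_mean = x1.mean(axis=1, keepdims=True)   # person average of x1
x1_dev = x1 - x1_mean                      # deviation from the person average

# Income responds differently to the two components of x1.
noise = rng.normal(scale=0.5, size=(n_people, n_periods))
y = 10.0 + b_between * x1_mean + b_within * x1_dev + noise


def slope(x, y):
    """OLS slope of y on x (with an intercept) for 1-D arrays."""
    xc = x - x.mean()
    return float(xc @ (y - y.mean()) / (xc @ xc))


# Comparing different people: regress person means on person means.
between_slope = slope(x1_mean.ravel(), y.mean(axis=1))

# Following the same person over time: regress deviations on deviations.
y_dev = y - y.mean(axis=1, keepdims=True)
within_slope = slope(x1_dev.ravel(), y_dev.ravel())

# Ignoring the distinction: a single slope for all the data.
pooled_slope = slope(x1.ravel(), y.ravel())

print(f"between people: {between_slope:.2f}")
print(f"within person:  {within_slope:.2f}")
print(f"pooled:         {pooled_slope:.2f}")
```

In this sketch the comparison across people gives a negative slope and the comparison within a person gives a positive one, while the single pooled slope lands somewhere in between and answers neither question.
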

There are really two kinds of information in cross-sectional time-series data:

1. The cross-sectional information reflected in the changes between subjects.
2. The time-series or within-subject information reflected in the changes within subjects.

xtreg, be estimates using the cross-sectional information in the data. xtreg, fe estimates using the time-series information in the data. The random-effects estimator, it turns out, is a matrix-weighted average of those two results. Under the assumption that b1 really does have the same effect in the cross-section as in the time-series (and that b2, b3, ... work the same way), we can pool these two sources of information to get a more efficient estimator.

Now, let's discuss testing the random-effects assumption. Indeed, it is the expected equality of these two estimators under the equality-of-effects assumption that leads to the application of the Hausman test to test the random-effects assumption. In the Hausman test, one tests that

    b_estimated_by_RE == b_estimated_by_FE

As I just said, it is true that

    b_estimated_by_RE = Average(b_estimated_by_BE, b_estimated_by_FE)

and so the Hausman test is a test that

    Average(b_estimated_by_BE, b_estimated_by_FE) == b_estimated_by_FE

or equivalently, that

    b_estimated_by_BE == b_estimated_by_FE

I am being very loose with my math here but there is, in fact, literature forming the test in this way and, if you forced me to go back and fill in all the details, we would discover that the Hausman test is asymptotically equivalent to testing b_estimated_by_BE == b_estimated_by_FE by more conventional means, but that is not important right now.

What is important is that the Hausman test is cast in terms of efficiency, whereas thinking about b_estimated_by_BE == b_estimated_by_FE has recast the problem in terms of something real:

1. b1 from b_estimated_by_BE is what I would use to answer the question "What is the expected difference between Mary and Joe if they differ in x1 by 1?"
2. b1 from b_estimated_by_FE is what I would use to answer the question "What is the expected change in Joe's value if his x1 increases by 1?"

It may turn out that the answers to those two questions are the same (random effects), and it may turn out that they are different. More general tests exist, as well.

If they are different, does that really mean the random-effects assumption is invalid? No, it just means the silly random-effects model that constrains all betas between person and within person to be equal is invalid. Within a random-effects model, there is nothing stopping me from saying that, for b1, the effects are different:

    . egen avgx1 = mean(x1), by(i)
    . gen deltax1 = x1 - avgx1
    . xtreg y avgx1 deltax1 x2 x3 ..., re

In the above model, _b[avgx1] measures the effect in the cross-section, and _b[deltax1] the effect within person. The model constrains the other variables to have the same effect in the cross-section and within person (the random-effects model).

In fact, if I make this decomposition for every variable in the model:

    . egen avgx1 = mean(x1), by(i)
    . gen deltax1 = x1 - avgx1
    . egen avgx2 = mean(x2), by(i)
    . gen deltax2 = x2 - avgx2
    . ...
    . xtreg y avgx1 deltax1 avgx2 deltax2 ..., re

the coefficients I obtain will be exactly equal to the coefficients that would be estimated separately by xtreg, be and xtreg, fe.

Moreover, I now have the equivalent of the Hausman specification test, but recast with different words. My test amounts to testing that the cross-sectional effects equal the within-person effects:

    . test avgx1 = deltax1
    . test avgx2 = deltax2, accum
    . ...

Not only do I like these words better, but this test, I believe, is a better test than the Hausman test for testing random effects, because the Hausman test depends more on asymptotics.
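
The claim that the decomposition reproduces the between and within estimates can be checked numerically. Below is a minimal Python/NumPy sketch, not the Stata commands themselves. It assumes a balanced panel and uses pooled ordinary least squares in place of xtreg, re; with a balanced panel the averages and the deviations are orthogonal, so the point estimates do not depend on the random-effects weighting (the standard errors would, and are not computed here). The simulated data and the helper names `ols` and `split` are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(1)
n, t = 200, 5                      # hypothetical balanced panel: n subjects, t periods

# Two regressors with a subject-level component, plus a subject effect in y.
x1 = rng.normal(size=(n, 1)) + rng.normal(size=(n, t))
x2 = rng.normal(size=(n, 1)) + rng.normal(size=(n, t))
u = rng.normal(size=(n, 1))        # subject-specific effect
y = 1.0 + 0.5 * x1 - 1.0 * x2 + u + rng.normal(size=(n, t))


def ols(columns, y):
    """Least-squares coefficients of y on an intercept plus the given columns."""
    X = np.column_stack([np.ones(y.size)] + [c.ravel() for c in columns])
    coef, *_ = np.linalg.lstsq(X, y.ravel(), rcond=None)
    return coef[1:]                # drop the intercept


def split(x):
    """Return (subject average, deviation from it), like egen mean() and gen."""
    avg = x.mean(axis=1, keepdims=True)
    return np.broadcast_to(avg, x.shape), x - avg


avgx1, deltax1 = split(x1)
avgx2, deltax2 = split(x2)
avgy, deltay = split(y)

# Regression on averages and deviations together (same order as the xtreg call).
b_avgx1, b_deltax1, b_avgx2, b_deltax2 = ols([avgx1, deltax1, avgx2, deltax2], y)

# Between estimator: subject means on subject means (one row per subject).
b_be = ols([avgx1[:, 0], avgx2[:, 0]], avgy[:, 0])

# Within (fixed-effects) estimator: deviations on deviations.
b_fe = ols([deltax1, deltax2], deltay)

print("avg coefficients:  ", b_avgx1, b_avgx2, " between:", b_be)
print("delta coefficients:", b_deltax1, b_deltax2, " within: ", b_fe)
```

The coefficients on the averages match the between estimates and the coefficients on the deviations match the within estimates up to floating-point error, which is what makes comparing them variable by variable meaningful.
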

In particular, the Hausman test depends on the difference between two separately estimated covariance matrices being positive definite, something they just have to be, asymptotically speaking, under the assumptions of the test. In practice, the difference is sometimes not positive definite, and then we have to discuss how to interpret that result. In the above test, however, that problem simply cannot arise.

This test has another advantage, too. I do not have to say that the cross-sectional and within-person effects are the same for all variables. I may very well need to include x1="lives in the South" in my model, knowing that it is an important confounder and that its effects are not the same in the cross-section as in the time-series, while my real interest is in the other variables. What I want to know is whether the data cast doubt on the assumption that the other variables have the same cross-sectional and time-series effects. So, I can test

    . test avgx2 = deltax2
    . test avgx3 = deltax3, accum
    . ...

and simply omit x1 from the test.

Why, then, does Stata include xtreg, be? One answer is that it is a necessary ingredient in calculating random-effects results: the random-effects results are a weighted average of the xtreg, be and the xtreg, fe results.

Another is that it is important in and of itself if you are willing to think a little differently from most people about cross-sectional time-series models. xtreg, be answers the question about the effect of x when x changes between persons. This can usefully be compared with the results of xtreg, fe, which answers the question about the effect of x when x changes within a person.

Thinking about and discussing the between and within models is an alternative to discussing the structure of the residuals. I must say that I lose interest rapidly when researchers report that they can make important predictions about unobservables.
My interest is piqued when researchers report something that I can almost feel, touch, and see.