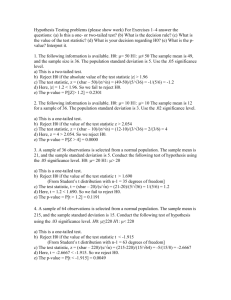

Significance/Hypothesis Testing


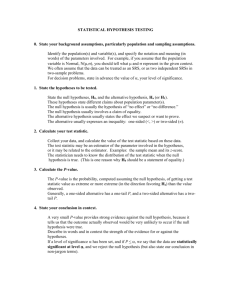

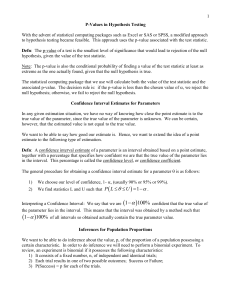

Hypothesis Testing

The research process normally starts with developing a question that can be stated in terms of a testable hypothesis. The question is typically based on theory or observation or, in the business world, on the need to answer a business question that lends itself to the hypothesis testing method. Example questions that might lend themselves to this method of inquiry include:

- Is there a greater return on advertising expenditures for television commercials than for web page ads?
- Did our program to improve employee morale result in higher levels of employee job satisfaction?
- Will our plans for laying employees off subject our company to discrimination charges due to disproportionately laying off protected class members?
- Does an employment test that we're considering administering to job applicants predict employee performance beyond random chance?
- Is there a difference among our regional operations in production defect rates?
- Will allowing employees an extra half hour off for lunch make them more productive in the afternoon?
- Does dollar cost averaging produce greater returns on stock investing than market timing?

The next step in this process is to design a study that would allow the question to be answered, then to collect the data (conduct the study), and finally to analyze the data.

Hypothesis testing starts with Ho, the null hypothesis. The null hypothesis is a simple way of saying that there's nothing going on in the data. In other words, there are no differences between groups, no relationship between two variables, no effect of one variable on another variable, etc. In our examples above, the null hypotheses, simply stated, would be:

- Returns based on television advertising are no different than returns based on web page ads.
- Employee morale levels after the program are not greater than those before the program.
- The layoff plans do not differentially impact any employee group.
- Scores on the employment test are not related to employee performance.
- There are no differences among our regions in production defect rates.
- Allowing employees an additional half hour off for lunch does not increase their productivity.
- There's no difference in investment returns between dollar cost averaging and market timing.

The alternative hypothesis, Ha, is that something is going on in the data. That is, there is a difference between two groups, there is a relationship between two variables, there is an effect of one variable on another variable, etc. In our examples above, the alternative hypotheses, simply stated, would be:

- Returns based on television advertising are greater than those based on web page ads.
- Employee morale levels are greater after the program than before it.
- Layoff plans differentially impact one or more protected class groups.
- Employment test scores are positively related to employee performance.
- There are differences in defect rates among regions.
- Employees who receive an additional half hour for lunch perform better than those who do not.
- Dollar cost averaging produces higher returns than market timing.

In hypothesis testing we are testing whether Ho or Ha is supported by the results of our study. According to the prominent philosopher Karl Popper (1902–1994), one can never know for certain that Ha is true, only that the data may support Ha. In other words, he believed (and many subscribe to this view) that when a study provides results that support Ha, the alternative hypothesis is in a state of not yet being disproven. In this course we will follow this guidance: when our analysis supports Ha, we will not conclude that Ha is true, only that our results support it. Many "facts" based on research have been accepted as true and later disproven (see, for example, Wikipedia's coverage of obsolete scientific theories).

The following diagram depicts our approach to hypothesis testing.

The basic assumption here is that we can never know the truth for certain. Thus, either Ho or Ha can be true, but we'll never know which one is, in fact, the truth. However, based on the analysis of our data, we decide whether Ho or Ha is supported.

In the upper left quadrant, we get it right: we conclude, based on our analysis, that Ho is supported when it is indeed true. In the bottom right quadrant we also get it right: we conclude that Ha is supported and it is indeed true. However, we can make two errors. In the bottom left quadrant we mistakenly conclude that Ha is supported when it is actually false (that is, when Ho is true). This error is known as a Type I Error. If we conclude that Ho is supported when it is actually false, as in the upper right quadrant, we've made what is known as a Type II Error.

The scientific community has decided that the more serious of the two errors is the Type I Error, and this is the error that researchers attempt to control. Essentially, researchers set an error rate, known as alpha or α, that they're willing to tolerate in their analysis. Typical values for α are .05 and .01; in the social and behavioral sciences it is customary to use an α of .05. Since one can never know whether Ho or Ha is true, one can never know whether a particular decision to support Ho or Ha is correct or in error. But with an α of .05, a researcher testing null hypotheses that are in fact true would, over the long run, wrongly conclude that Ha is supported (a Type I Error) no more than 5% of the time.

Every statistic we'll cover in this course that pertains to hypothesis testing has an associated p-value. Basically, the p-value represents the probability of obtaining a statistic at least this large simply due to chance, assuming Ho is true; it is not the probability that Ho is true. So, for a given statistic, as its value gets larger, the probability of obtaining a statistic of that magnitude if Ho is true decreases. When a statistic gets large enough that its associated p-value falls below our α, normally .05, we conclude that our results are statistically significant.

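To make the meaning of α concrete, the sketch below simulates many studies in which Ho is true by construction and counts how often a test at a given α wrongly concludes that Ha is supported. It is a minimal Python illustration under assumptions that are not part of the text: normally distributed data with a known standard deviation of 1, a null hypothesis that the population mean is 0, a one-tailed z-test, and an arbitrary sample size of 30 per study. The function names are hypothetical; only the standard library (`random`, `statistics.NormalDist`, `statistics.fmean`) is used.

```python
import random
from statistics import NormalDist, fmean

STD_NORMAL = NormalDist()  # standard normal: mean 0, standard deviation 1

def z_test_p_value(sample, mu0=0.0, sigma=1.0):
    """One-tailed p-value: P(Z >= observed z), assuming Ho (mean == mu0) is true."""
    z = (fmean(sample) - mu0) / (sigma / len(sample) ** 0.5)
    return 1.0 - STD_NORMAL.cdf(z)

def type_i_error_rate(alpha=0.05, n=30, trials=10_000, seed=1):
    """Fraction of simulated studies that conclude Ha even though Ho is true."""
    rng = random.Random(seed)  # seeded so the result is reproducible
    rejections = 0
    for _ in range(trials):
        # Ho is true by construction: every sample comes from a mean-0 population.
        sample = [rng.gauss(0.0, 1.0) for _ in range(n)]
        if z_test_p_value(sample) < alpha:
            rejections += 1  # each rejection here is a Type I Error
    return rejections / trials

print(type_i_error_rate())            # roughly 0.05
print(type_i_error_rate(alpha=0.01))  # roughly 0.01
```

Because every simulated study has a true Ho, each rejection is a Type I Error, so the printed rejection rates should land near whichever α was chosen.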
Thus, the p-value is the probability of getting a test statistic equal to or greater than the observed statistic, given that the null hypothesis, Ho, is true. When the p-value is less than α, we reject Ho and conclude that Ha is supported. The value of the statistic at which the p-value equals α is known as the critical value of the test statistic.

Thus, there are two methods to determine whether to conclude that Ho or Ha is supported: 1) whether the p-value is greater or less than the α level, or 2) whether the test statistic is greater or less than the critical value of the statistic. Most major statistics software packages provide the p-value, while Excel provides both the p-value and the critical value for the statistic. The two methods are equivalent: a p-value below α always corresponds to a test statistic beyond the critical value, so both lead to the same decision.

One final note about the p-value and statistical significance: in classical statistics, the p-value and its relationship to the α level is simply a decision rule. If the p-value is less than the α level, the researcher rejects Ho and concludes Ha. Others argue that the p-value reflects the strength of the relationship; in other words, lower p-values reflect stronger relationships. Keep in mind, though, that the p-value also depends on sample size, so the same relationship produces a smaller p-value in a larger sample.
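The equivalence of the two decision rules can be checked numerically. The following is a minimal Python sketch assuming a one-tailed z-test, whose statistic follows a standard normal distribution when Ho is true; the observed z values, the 0.2 effect, and the sample sizes are hypothetical illustration values, and the helper names are made up for this example. Only `statistics.NormalDist` from Python's standard library is used.

```python
from statistics import NormalDist

STD_NORMAL = NormalDist()  # standard normal: mean 0, standard deviation 1

def p_value(z):
    """Upper-tail p-value: probability of a statistic >= z if Ho is true."""
    return 1.0 - STD_NORMAL.cdf(z)

def critical_value(alpha):
    """The z at which the upper-tail p-value equals alpha."""
    return STD_NORMAL.inv_cdf(1.0 - alpha)

def decide(z, alpha=0.05):
    """Apply both decision rules; away from the exact boundary they always agree."""
    by_p_value = p_value(z) < alpha          # rule 1: p-value below alpha
    by_critical = z > critical_value(alpha)  # rule 2: statistic above critical value
    return by_p_value, by_critical

print(round(critical_value(0.05), 3))  # 1.645
for z in (0.8, 1.5, 1.7, 2.6):         # hypothetical observed statistics
    print(z, round(p_value(z), 4), decide(z))

# Same hypothetical effect (a mean difference of 0.2 standard deviations),
# different sample sizes: z = effect / (sigma / sqrt(n)) with sigma = 1.
for n in (10, 50, 200):
    print(n, round(p_value(0.2 * n ** 0.5), 4))
```

Both entries of each decision tuple match, which is why software can report either the p-value or the critical value and the conclusion is the same. The last loop shows that an identical effect produces a smaller p-value as n grows, which is worth keeping in mind when a p-value is read as the strength of a relationship.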