Test Bias and IRT


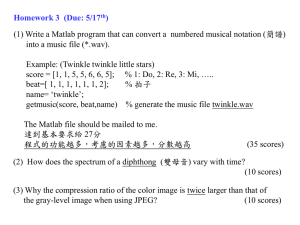

Test Bias and Differential Item Functioning

Psychological tests are often designed to measure differences among people in favorable characteristics (e.g., intelligence, college aptitude). However, certain ethnic groups tend to score lower, on average, on these tests. Developing a test that measures innate intelligence without introducing cultural bias is extremely difficult and has proved virtually impossible to achieve. Some have argued that these group differences are due to:

1. Environmental factors
2. Biological factors

The big question is: Are the tests biased, or are they simply reflecting true differences? Are standardized tests as valid for minority examinees as they are for white examinees? Some psychologists argue that the tests are differentially valid for African-Americans and whites. However, differences in test performance among ethnic groups do not necessarily indicate test bias. The question is truly one of validity: Does the test have different meanings for different ethnic groups?

Most attempts to reduce cultural bias in testing have depended on the content validity of items. Differential Item Functioning (DIF) is one such approach. It is a statistical test that determines whether an item functions differentially in favor of one group after the two groups have been matched on overall ability, as measured by the other test items.

Introduction to Item Response Theory (IRT)

IRT is model-based measurement in which the estimate of a person’s underlying trait (e.g., ability, personality, organizational efficacy) depends both on that person’s responses and on the properties of the items used to measure the trait. IRT is the basis for almost all large-scale psychological measurement, yet most test users are unfamiliar with IRT and are unaware that the basis of testing has changed. When most psychologists or researchers think of measurement, they think of the principles of classical test theory (CTT), such as reliability.
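To make the idea of model-based measurement concrete, here is a minimal sketch that assumes a two-parameter logistic (2PL) model, one common IRT model that these notes do not single out. The item parameters, the response pattern, and the crude grid-search estimator are all illustrative assumptions rather than a recommended estimation method. The point is only that the same response pattern can imply a different trait estimate depending on the properties of the items answered.

```python
import math

def p_correct(theta, a, b):
    """2PL item response function: probability of a correct response
    for a person at trait level theta on an item with discrimination a
    and difficulty b."""
    return 1.0 / (1.0 + math.exp(-a * (theta - b)))

def log_likelihood(theta, responses, items):
    """Log-likelihood of a 0/1 response pattern given (a, b) item parameters."""
    total = 0.0
    for u, (a, b) in zip(responses, items):
        p = p_correct(theta, a, b)
        total += math.log(p) if u == 1 else math.log(1.0 - p)
    return total

def estimate_theta(responses, items, lo=-4.0, hi=4.0, steps=801):
    """Crude maximum-likelihood estimate of theta by grid search on [lo, hi]."""
    grid = [lo + i * (hi - lo) / (steps - 1) for i in range(steps)]
    return max(grid, key=lambda t: log_likelihood(t, responses, items))

# Hypothetical (a, b) parameters; illustrative values, not from a real test.
easy_items = [(1.0, -1.5), (1.0, -1.0), (1.0, -0.5), (1.0, 0.0)]
hard_items = [(1.0, 0.0), (1.0, 0.5), (1.0, 1.0), (1.0, 1.5)]
pattern = [1, 1, 1, 0]  # three of four items correct

theta_easy = estimate_theta(pattern, easy_items)
theta_hard = estimate_theta(pattern, hard_items)
print(f"theta on easy items: {theta_easy:.2f}")
print(f"theta on hard items: {theta_hard:.2f}")
```

Under these assumptions, three correct answers on the harder item set produce a higher trait estimate than three correct answers on the easier set, which is what it means for the estimate to depend on item properties as well as responses.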
Although IRT is derived from many of the principles of CTT, some of the “rules of measurement” have changed. However, IRT cannot be applied without a large number of examinees; in those instances CTT, and extensions of CTT, can and should be used.

Limitations of Classical Measurement Models

1. Examinee and test characteristics are confounded; each can be interpreted only in the context of the other.
   a. An examinee’s “true score” is defined as the expected observed score for an examinee on the test of interest.
   b. The difficulty of an item is defined as the proportion of examinees in a group of interest that answered the item correctly.
   This makes it difficult to compare examinees who take different tests and to compare item characteristics obtained using different groups of examinees.
2. It is nearly impossible to create parallel tests, yet the main concept in the CTT conceptual framework, reliability, is defined as the correlation between scores on parallel forms of the test.
3. The standard error of measurement in CTT is constant for all examinees, which is implausible.
4. CTT is test oriented, rather than item oriented, so it is impossible to predict how an individual will perform on a particular item.

A Comparison of CTT and IRT

In CTT the standard error of measurement (SEM) applies to all scores in a particular population; in IRT the SEM not only differs across scores, but generalizes across populations. The SEM describes the expected differences in scores that are due to measurement error and is critical to score interpretations at both the individual level and the overall level.

In CTT, longer tests are more reliable than shorter tests; in IRT shorter tests can be more reliable than longer tests. In CTT the Spearman-Brown prophecy formula states that as test length increases, true score variance increases faster than error score variance, and so reliability is increased.
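The two CTT quantities just mentioned can be sketched with their standard textbook formulas: the Spearman-Brown prediction of reliability for a lengthened test, and the single SEM that CTT applies to every examinee. The reliability of 0.70 and the score standard deviation of 10 are hypothetical values chosen for illustration, and the Spearman-Brown prediction assumes the added items are parallel to the existing ones.

```python
import math

def spearman_brown(reliability, length_factor):
    """Predicted reliability when a test is lengthened by length_factor
    with items parallel to the existing ones (Spearman-Brown formula)."""
    return length_factor * reliability / (1.0 + (length_factor - 1.0) * reliability)

def ctt_sem(score_sd, reliability):
    """CTT standard error of measurement: one value for all examinees."""
    return score_sd * math.sqrt(1.0 - reliability)

base_reliability = 0.70   # hypothetical reliability of the original test
score_sd = 10.0           # hypothetical SD of the observed scores

doubled_reliability = spearman_brown(base_reliability, 2.0)
base_sem = ctt_sem(score_sd, base_reliability)

print(f"reliability at original length: {base_reliability:.3f}")
print(f"predicted reliability at double length: {doubled_reliability:.3f}")
print(f"SEM at original length (same for every score): {base_sem:.2f}")
```

Note that `ctt_sem` takes no information about the individual examinee, which is the sense in which the CTT standard error is constant across scores.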
In IRT, given item pools that are large enough to ensure a multitude of items of different difficulty levels, adaptive tests can be assembled so that examinees are given the items that are most reliable at their ability level (i.e., neither too easy nor too hard), thereby decreasing the standard error of measurement for all examinees.

In CTT, comparing test scores across forms is best when forms are parallel; in IRT comparing test scores (i.e., ability estimates) across forms is best when forms are not parallel and the level of test difficulty varies between persons. When two groups of examinees receive different forms of a test designed to measure the same trait, differences in ability distributions between the two groups and in item characteristics are controlled for by equating measures. In CTT, strict conditions must be met before test scores can be equated; specifically, forms must be parallel, which requires equal means and variances across forms. However, these conditions are seldom met in practical testing situations, and oftentimes we are interested in comparing scores from quite different measures. It is possible to use regression to find comparable scores from one test form to another, but errors in equating are influenced greatly by differences in test difficulty levels. In IRT, test forms can be adapted so that each examinee receives only those items that are optimally appropriate for that person (i.e., neither too easy nor too hard). Ability can then be estimated separately for each person using an IRT model that controls for differences in item difficulty, and estimates of the latent trait or ability can be compared, rather than overall test scores.

In CTT, item characteristics will be unbiased only if they come from a representative sample; in IRT item characteristics will be unbiased even if they come from an unrepresentative sample. In CTT, item statistics can vary dramatically across samples if the samples are unrepresentative of the population.
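The sample dependence of CTT item statistics can be illustrated with a small sketch. It assumes a Rasch (one-parameter logistic) model as the data-generating model, which is an assumption made here for illustration, and the two ability groups are hypothetical. It computes the expected CTT difficulty (proportion correct) for one item in each group; it does not demonstrate parameter estimation.

```python
import math

def rasch_p(theta, b):
    """Rasch model: probability of a correct response for ability theta
    on an item with difficulty b."""
    return 1.0 / (1.0 + math.exp(-(theta - b)))

def expected_p_value(thetas, b):
    """Expected CTT item difficulty (proportion correct) in a group
    of examinees with the given abilities."""
    return sum(rasch_p(t, b) for t in thetas) / len(thetas)

item_b = 0.0                      # one item, one model difficulty
low_group = [-1.5, -1.0, -0.5]    # hypothetical low-ability sample
high_group = [0.5, 1.0, 1.5]      # hypothetical high-ability sample

p_low = expected_p_value(low_group, item_b)
p_high = expected_p_value(high_group, item_b)

print(f"CTT difficulty in low-ability sample:  {p_low:.3f}")
print(f"CTT difficulty in high-ability sample: {p_high:.3f}")
print(f"model difficulty b in both samples:    {item_b:.1f}")
```

The same item looks hard in one sample and easy in the other when difficulty is defined as proportion correct, while the model parameter `item_b` is by construction the same in both. Whether that parameter is recovered without bias in practice depends on the model fitting the data.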
In CTT, the “meaning” of test scores is obtained by comparing scores to those obtained from a “norm” group; in IRT the “meaning” of test scores (i.e., ability estimates) is obtained from their distance from items. In IRT, item characteristics and ability estimates are on the same scale, so it is possible to locate a person’s ability estimate on a continuum that orders items by their level of difficulty. This can then be interpreted such that a person has a probability greater than 0.5 of obtaining the correct answer to any item that falls below that person’s ability estimate and a probability less than 0.5 of obtaining the correct answer to any item that falls above that person’s ability estimate. It should be noted that it is still possible in IRT to obtain norm-referenced information.

In CTT, interval scale properties can only be achieved by a non-linear transformation of observed scores, specifically normalizing scores; in IRT interval scale properties are achieved by merely applying justifiable models.

In CTT, mixed item formats (i.e., multiple-choice and open-ended) lead to unbalanced impact on total test score; in IRT mixed item formats may lead to optimal scores. In CTT, increasing the number of categories for an item will lead to different total test scores, which may lead to different conclusions being drawn about an examinee’s ability. This may or may not be what is wanted. In IRT there are models specifically designed for tests that have a mixed item format and for items with an increased number of categories.

In CTT, difference scores cannot be meaningfully compared when the scores obtained at the first time point differ; in IRT, difference scores can be meaningfully compared when the scores obtained at the first time point differ. For difference or change scores to be meaningful when initial scores differ, they must come from an interval level of measurement.
In CTT this is not easily justifiable, whereas in IRT, interval level measurement is directly justifiable by the model.
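One way to see why raw change scores are hard to compare when starting points differ is the sketch below. It assumes a Rasch model and a hypothetical 10-item test, and it treats the latent ability scale as the interval scale, which is the modeling assumption discussed above rather than something the code establishes. It compares the expected raw-score gain for two examinees who each improve by one unit of ability but start at different levels.

```python
import math

def rasch_p(theta, b):
    """Rasch model: probability of a correct response for ability theta
    on an item with difficulty b."""
    return 1.0 / (1.0 + math.exp(-(theta - b)))

def expected_raw_score(theta, difficulties):
    """Expected number-correct score on the test at ability theta."""
    return sum(rasch_p(theta, b) for b in difficulties)

# Hypothetical 10-item test with difficulties centered on 0.
difficulties = [-1.0, -0.5, 0.0, 0.5, 1.0] * 2

# Two examinees each gain exactly one unit on the ability scale.
gain_mid = expected_raw_score(0.5, difficulties) - expected_raw_score(-0.5, difficulties)
gain_high = expected_raw_score(3.0, difficulties) - expected_raw_score(2.0, difficulties)

print(f"expected raw gain, ability -0.5 to 0.5: {gain_mid:.2f}")
print(f"expected raw gain, ability  2.0 to 3.0: {gain_high:.2f}")
```

Under these assumptions, the same one-unit change in ability produces a much larger raw-score gain in the middle of the test's range than near its ceiling, so equal raw differences do not correspond to equal differences in the underlying trait.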