Econ415_out_part3

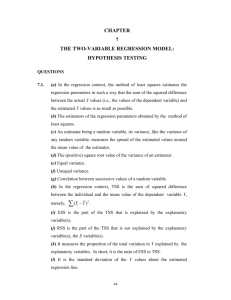


SIMPLE CLASSICAL LINEAR REGRESSION MODEL

STATISTICAL MODEL

The next step in an empirical study is to specify a statistical model. The statistical model

describes the data generation process. It is supposed to describe the true economic relationship in

the population that produced the data.

Statistical Model as a Reasonable Approximation

The statistical model should be a reasonable approximation of the true unknown data generation

process. If the model is not a reasonable approximation, then it is very likely that the conclusions

we draw from the data about the economic relationship of interest will be incorrect.

SIMPLE CLASSICAL LINEAR REGRESSION MODEL

The simple classical linear regression model (SCLRM) is a model that describes an economic

data generation process. It is “simple” because it has a dependent variable and one explanatory

variable. If we believe the SCLRM is a reasonable approximation of the true data generation

process, then we should adopt it for our empirical study; if not, we shouldn’t.

SPECIFICATION OF THE MODEL

An econometric model is a model that describes the statistical relationship between two or more

economic variables. It can be written in general functional form as Yt = ƒ(Xt1, Xt2…Xtk) + μt. To

specify an econometric model, we make a set of assumptions about the economic relationship of

interest. There are three types of assumptions. 1) An assumption about the variables involved in

the relationship. 2) An assumption about the functional form of the relationship. 3) Assumptions

about the error term.

VARIABLES

The SCLRM assumes the economic relationship involves two variables, a dependent variable (Y)

and one explanatory variable (X). X is the only important variable that affects Y. All other

variables that affect Y are unimportant. These other variables are included in the error term, μ. μ

represents the net effect of all factors other than X that affect Y. Given this assumption, we have

Yt = ƒ(Xt) + μt.

Random and Nonrandom Variables

The variables Y and μ are random variables. The variable X can be either a random variable or a

nonrandom variable.

Random Variable

A random variable is a variable whose value is uncertain. The value of a random variable is

determined by the outcome of an experiment. Before the experiment takes place, the value that a

random variable will take is unknown and cannot be predicted with certainty. A random variable

can be discrete, continuous, observable, or unobservable.

Nonrandom Variable

A nonrandom variable is a variable whose value is known with certainty before an experiment

takes place.

Marginal, Joint, and Conditional Probability Distributions

The behavior of a random variable is described by a probability distribution. A discrete random variable, X, has a discrete probability distribution $f(X)$. $f(X)$ is a rule that assigns to each value of X one and only one probability weight, such that the sum of the probability weights equals one. A continuous random variable, Y, has a probability density function $f(Y)$. $f(Y)$ allows you to calculate a probability weight for any interval of Y-values as the area under the graph of $f(Y)$ corresponding to this interval. The total area under the graph of $f(Y)$ is equal to one.

A probability distribution has 3 important characteristics. 1) Mean (expected value). 2) Variance. 3) Standard deviation. The mean of a random variable is a probability weighted average of the values of the random variable. For a discrete random variable, X, the mean is $\mu_X = E(X) = \sum_i X_i f(X_i)$. For a continuous random variable, Y, the mean is $\mu_Y = E(Y) = \int Y f(Y)\, dY$. The variance of a random variable is a probability weighted average of the squared mean deviations. For a discrete random variable X and a continuous random variable Y, the variances are $\sigma_X^2 = Var(X) = E[(X - \mu_X)^2] = \sum_i (X_i - \mu_X)^2 f(X_i)$ and $\sigma_Y^2 = Var(Y) = E[(Y - \mu_Y)^2] = \int (Y - \mu_Y)^2 f(Y)\, dY$. The standard deviation, $\sigma$, is the square root of the variance. Shorthand notation for the probability distribution of a random variable X is $X \sim (\mu_X, \sigma_X^2)$. This is read, “X has a distribution with mean $\mu_X$ and variance $\sigma_X^2$.”
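As a minimal sketch of these definitions, the following Python snippet computes the mean, variance, and standard deviation of a discrete random variable. The distribution used here (a fair six-sided die) is an illustrative assumption and does not come from the notes.

```python
import math

# Hypothetical discrete distribution: a fair six-sided die (illustrative assumption).
values = [1, 2, 3, 4, 5, 6]
probs = [1 / 6] * 6

# The probability weights must sum to one.
assert abs(sum(probs) - 1.0) < 1e-12

# Mean: probability weighted average of the values.
mean = sum(x * p for x, p in zip(values, probs))

# Variance: probability weighted average of the squared mean deviations.
variance = sum((x - mean) ** 2 * p for x, p in zip(values, probs))

# Standard deviation: square root of the variance.
std_dev = math.sqrt(variance)

print(mean, variance, std_dev)
```

The same three lines of arithmetic apply to any discrete distribution once its values and probability weights are listed.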

Joint Probability Distribution

The behavior of a bivariate or multivariate random variable can be described by a joint probability distribution. A discrete bivariate random variable (X, Y) has a discrete probability distribution $f_{XY}(X,Y)$. $f_{XY}(X,Y)$ is a rule that assigns to each pair of values of X and Y one and only one probability weight, such that the sum of the probability weights equals one. A continuous bivariate random variable (X, Y) has a continuous probability density function $f_{XY}(X,Y)$. $f_{XY}(X,Y)$ allows you to calculate a probability weight for any given interval of X and Y-values as the volume under the surface of $f_{XY}(X,Y)$ corresponding to this interval. The total volume under the surface of $f_{XY}(X,Y)$ is equal to one. A joint probability distribution has 4 important characteristics. 1) Mean of X and mean of Y. 2) Variance of X and variance of Y. 3) Standard deviation of X and standard deviation of Y. 4) Covariance of X and Y.

Conditional Probability Distribution

The behavior of a bivariate or multivariate random variable can also be described by a conditional probability distribution. A discrete bivariate random variable (X, Y) has discrete conditional probability distributions $f(Y|X)$ and $f(X|Y)$. $f(Y|X)$ is a rule that assigns to each value of Y one and only one probability weight for a given value of X, such that the sum of the probability weights equals one. Note that $f(Y|X) = f_{XY}(X,Y) / f_X(X)$. A continuous bivariate random variable (X, Y) has continuous conditional probability density functions $f(Y|X)$ and $f(X|Y)$. $f(Y|X)$ allows you to calculate a probability weight for any interval of Y-values, for a given value of X, as the area under the graph of $f(Y|X)$ corresponding to this interval. The total area under the graph of $f(Y|X)$ for each value of X is equal to one. A conditional probability distribution has 3 important characteristics. 1) The conditional mean of Y given X, denoted E(Y|X). 2) The conditional variance of Y given X, denoted Var(Y|X). 3) The conditional standard deviation of Y given X, denoted Sd(Y|X).
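To make the relationship between the joint, marginal, and conditional distributions concrete, here is a small Python sketch. The joint probability table and the function names are hypothetical and chosen only for illustration.

```python
# Hypothetical joint distribution f(X, Y) for X in {0, 1} and Y in {10, 20}
# (the probability weights are illustrative assumptions).
joint = {
    (0, 10): 0.30, (0, 20): 0.10,
    (1, 10): 0.20, (1, 20): 0.40,
}

def marginal_x(x):
    """Marginal probability f_X(x): sum the joint weights over all values of Y."""
    return sum(p for (xi, _), p in joint.items() if xi == x)

def conditional_y_given_x(x):
    """Conditional distribution f(Y|X=x) = f(X, Y) / f_X(x)."""
    fx = marginal_x(x)
    return {y: p / fx for (xi, y), p in joint.items() if xi == x}

def conditional_mean(x):
    """Conditional mean E(Y|X=x): weighted average of Y using f(Y|X=x)."""
    return sum(y * p for y, p in conditional_y_given_x(x).items())

for x in (0, 1):
    print(x, conditional_y_given_x(x), conditional_mean(x))
```

In this made-up table the conditional mean of Y rises when X moves from 0 to 1, which is the kind of pattern a conditional mean function summarizes.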

Conditional Mean Function

We want to analyze the statistical relationship between two random variables, X and Y. We want

to know if X has an effect on average Y in the population, and if so the direction and size of this

effect. Therefore we are interested in the relationship between E(Y|X) and X. This relationship

can be expressed as a conditional mean function E(Y|X) = ƒ(X).

Possible Violations of Assumption

The SCLRM assumes that X is the only important variable that affects Y. This assumption is

violated if one or more variables other than X have an important effect on Y. If this assumption is

violated, then the SCLRM may not be a reasonable approximation of the true data generation

process.

FUNCTIONAL FORM

The SCLRM assumes that the conditional mean function, also called the population regression

function, is linear: E(Y|X) = ƒ(X) = α + βX, where α and β are called parameters. A parameter is

a quantifiable characteristic of a population. It is always unknown and unobservable.

Interpretation of Parameters

The parameter α is the vertical intercept of the line. It measures the average value of Y when X =

0. This parameter is usually not of primary interest. The parameter β is the slope parameter. It is

given by β = Δ E(Y|X) / ΔX. It measures the change in average Y when X changes by one unit. It

is the marginal effect of X on Y. This parameter is of primary interest. The 3 important questions

we want to address can be restated in terms of β (in parentheses). 1) Does X affect Y? (Is β zero or

nonzero?) 2) What is the direction of the effect? (Is the algebraic sign of β positive or negative?)

3) What is the size of the effect? (What is the magnitude of β?).

Meaning of Linearity

The SCLRM assumes that the functional form is linear in parameters. This allows us to choose

from a wide variety of functional forms such as double-log, exponential, quadratic, etc.
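For illustration, the following are a few standard textbook forms that are linear in the parameters α and β even when they are nonlinear in the variables. They are offered as examples under that assumption and are not taken from these notes; the last line is a restricted quadratic that keeps a single explanatory term.

```latex
\begin{align*}
\text{Linear:} \quad & Y_t = \alpha + \beta X_t + \mu_t \\
\text{Double-log:} \quad & \ln Y_t = \alpha + \beta \ln X_t + \mu_t \\
\text{Exponential (log-linear):} \quad & \ln Y_t = \alpha + \beta X_t + \mu_t \\
\text{Restricted quadratic:} \quad & Y_t = \alpha + \beta X_t^2 + \mu_t
\end{align*}
```

In each case the model can be written as a straight line in suitably transformed variables, so the same estimation methods apply.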

Possible Violations of Assumption

The assumption of linear functional form can be violated in two ways. 1) The true functional form is

nonlinear in parameters. 2) We choose the wrong linear in parameters functional form. If the

assumption of linearity is violated, then the SCLRM may not be a reasonable approximation of the

true data generation process.

ERROR TERM

The SCLRM assumes that the observed value of Y for the tth unit (Yt) has two components. 1)

Systematic component. 2) Random component. The systematic component is represented by the

conditional mean function. The random component is represented by the error term. Therefore,

the statistical relationship between Yt and Xt is given by Yt = α + βXt + μt. The error term is a

random variable that represents the “net effect” of all factors other than Xt that affect Yt for the

tth unit in the population. By definition, the error term measures the deviation in Yt from the

mean for the tth unit: μt = Yt – (α + βXt). The error term μt is unknown and unobservable. This is

because the parameters α and β are unknown and unobservable.

Assumptions

The error term is an unobservable random variable. We can describe its behavior by a conditional

probability distribution ƒ(μ|X). For each value of X there is a probability distribution for μ. The

SCLRM makes the following assumptions about the conditional probability distributions of the

error term.

1. Error Term Has Mean Zero: E(μt|Xt) = 0

2. Error Term is Uncorrelated with the Explanatory Variable: Cov(μt, Xt) = 0

3. Error Term Has Constant Variance: Var(μt|Xt) = σ2

4. Errors Are Independent: Cov(μt, μs) = 0 for t ≠ s

5. Error Term Has a Normal Distribution: μt ~ N

Possible Violations of Assumptions

Assumptions 1 and 2 may be violated by confounding variables and reverse causation.

Assumption 3 is violated if the conditional variance of Y depends on X. Assumption 4 may be

violated for nonrandom samples and time series data. If any assumptions about the error term are

violated, then the SCLRM may not be a reasonable approximation of the true data generation

process.

RELATIONSHIP BETWEEN ERROR TERM AND DEPENDENT VARIABLE

The assumptions about the conditional probability distribution of the error term ƒ(μ|X) imply

assumptions about the conditional probability distribution of the dependent variable ƒ(Y|X). This

is because Y and μ are random variables, and Y is a linear function of μ. In this case, if we know

the properties of μ we can deduce the properties of Y.
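The deduction can be sketched in a few lines. Treating α, β, and Xt as fixed once we condition on Xt, the assumptions on the error term carry over to Yt as follows (a worked sketch, using only the model Yt = α + βXt + μt and the error-term assumptions stated above).

```latex
\begin{align*}
E(Y_t \mid X_t) &= \alpha + \beta X_t + E(\mu_t \mid X_t) = \alpha + \beta X_t \\
\operatorname{Var}(Y_t \mid X_t) &= \operatorname{Var}(\mu_t \mid X_t) = \sigma^2 \\
\operatorname{Cov}(Y_t, Y_s) &= \operatorname{Cov}(\mu_t, \mu_s) = 0 \quad (t \neq s)
\end{align*}
```

Because Yt is a linear function of a normally distributed μt, Yt is also normally distributed for a given Xt.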

SIMPLE CLASSICAL LINEAR REGRESSION MODEL CONCISELY STATED

The SCLRM can be written concisely in any of the following 4 ways.

1) Yt = α + βXt + μt; E(μt|Xt) = 0; Var(μt|Xt) = σ2; Cov(μt, μs) = 0; μt ~ N

2) Yt = α + βXt + μt; E(Yt|Xt) = α + βXt; Var(Yt|Xt) = σ2; Cov(Yt, Ys) = 0; Yt ~ N

3) Yt = α + βXt + μt; μt ~ iid N(0, σ2)

4) Yt ~ iid N(α + βXt, σ2)

ESTIMATION

The next step in an empirical study is to use the sample data to obtain estimates of the parameters

of the statistical model: Yt = α + βXt + μt ; μt ~ iidN(0, σ2). The model has 3 unknown and

unobservable parameters: α, β, and σ2.

TYPES OF ESTIMATES

We can make two types of estimates. 1) Point estimate. 2) Interval estimate. A point estimate is

an estimate that is a single number. An interval estimate is an estimate that is an interval of

numbers within which we expect to find the true value of the parameter, with some given

probability. It is a conclusion in the form of a probability statement. It indicates the degree of

certainty or confidence we can have in our point estimate. An interval estimate is also called a

confidence interval.

ESTIMATOR

To obtain estimates of the parameters, we must choose an estimator. An estimator is a rule that

tells us how to use the sample data to obtain estimates of a population parameter. Let α, β, and σ2

be population parameters. We will designate the estimators for these parameters with a hat: α^, β^,

and σ2^.

Accurate and Reliable Estimator

We want to choose an estimator that is accurate and reliable. An accurate and reliable estimator

will produce an estimate that is close to the true value of the population parameter. Our goal is to

get the best possible estimate with our sample data. The best possible estimate is one that comes

as close as possible to the true population parameter.

How Do We Know if an Estimator is Accurate and Reliable?

To determine if an estimator is accurate and reliable, we need to describe the behavior of the

estimator from sample to sample.

Behavior of an Estimator

Let β^ be the estimator of β. We know two things about the behavior of an estimator. 1) For any

given sample, the estimate β^ will probably not equal the true value of β. The difference β^– β is

estimation error. 2) The estimates will be different for different samples.

An Estimator is A Random Variable

The estimator β^ is a random variable, because its value is uncertain from sample to sample.

Sampling Distribution

Because β^ is a random variable, we can describe its behavior by a probability distribution called

a sampling distribution. The sampling distribution for β^ can be derived from an experiment or

using probability theory.

Mean and Variance of Sampling Distribution

The sampling distribution of β^ has a mean and a variance (or standard deviation). The mean tells

us the average of the estimates from sample to sample. The variance tells us how spread-out the

estimates are from sample to sample.

Form of Sampling Distribution

The sampling distribution of β^ will have some specific form. The form of the sampling

distribution illustrates the long-run pattern of β^ from sample to sample.

Standard Error of the Estimator

The standard deviation of the sampling distribution of β^ is called the standard error of β^. Why?

If the mean of the sampling distribution of β^ equals the true value of β, then the standard

deviation of β^ tells us the average error of the estimate for a large number of samples.

Definition of an Accurate and Reliable Estimator

To define an accurate and reliable estimator we use the mean and variance of the sampling

distribution of the estimator. We use the mean to define accuracy. We use the variance (standard

error) to define reliability. For an estimator to be accurate and reliable, the mean and variance

must have specific properties.

Small Sample Properties

The small sample properties of an estimator are the properties that the mean and variance of the

sampling distribution of an estimator must have for any finite sample size. For an estimator to be

accurate and reliable, it must have two small sample properties. 1) Unbiasedness (Accuracy). 2)

Efficiency (Reliability).

Unbiasedness

An estimator is accurate if it is unbiased. An estimator is unbiased if the mean of the sampling

distribution is equal to the true value of the population parameter being estimated: E(β^) = β. An

estimator is inaccurate if it is biased. It is biased if the mean of the sampling distribution does not

equal the true value of the parameter. An estimator is biased upward (downward) if the mean is

above (below) the true value of the parameter; that is, E(β^) > β (E(β^) < β). A biased estimator

systematically overestimates or underestimates the parameter from sample to sample.

Efficiency

An estimator is most reliable if it is efficient. An estimator is efficient if it has minimum variance

in the class of unbiased estimators. If there are two unbiased estimators of β, denoted β1^ and β2^,

then β1^ is the efficient estimator if Var(β1^) < Var(β2^).
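A hedged numerical illustration of this comparison: the sketch below compares the variance of the OLS slope estimator (introduced later in these notes) with the variance of a hypothetical alternative, the slope of the line through the first and last observations. The X values, the error variance, and the alternative estimator are all assumptions made for the example.

```python
# Hypothetical X values and error variance (illustrative assumptions).
x = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0, 9.0, 10.0]
sigma2 = 4.0

x_bar = sum(x) / len(x)
sxx = sum((xi - x_bar) ** 2 for xi in x)  # variation in X

# Variance of the OLS slope estimator: sigma^2 / sum((X_t - XBAR)^2).
var_ols = sigma2 / sxx

# Variance of a hypothetical alternative estimator: the slope of the line
# through the first and last observations, (Y_n - Y_1) / (X_n - X_1).
# It is unbiased when the errors have mean zero, and with independent,
# constant-variance errors its variance is 2 * sigma^2 / (X_n - X_1)^2.
var_endpoints = 2 * sigma2 / (x[-1] - x[0]) ** 2

print(var_ols, var_endpoints)
```

Both estimators are unbiased under the stated assumptions, but the one that uses all of the observations has the smaller variance, so it is the more reliable of the two.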

Sampling (Random) Error

An efficient estimator has the smallest sampling error of any unbiased estimator. Sampling error

is random estimation error that occurs from sample to sample, because different samples have

different subsets of units from the population. It is the result of chance.

Standard Error of Estimator and Precision

An efficient estimator has the smallest standard error of any unbiased estimator, and therefore the

smallest average estimation error. Therefore, an efficient estimator will be the most precise

estimator.

Large Sample Properties

Sometimes we don’t know the sampling distribution of an estimator for a finite sample, or we

can’t find an estimator that is unbiased and/or efficient in finite samples. In these instances, to

define an accurate and reliable estimator we use the mean and variance of the large sample

(asymptotic) sampling distribution of the estimator.

Large Sample Properties

The large sample properties of an estimator are the properties that the mean and variance of the

sampling distribution of an estimator must have as the sample size becomes infinitely large. For

an estimator to be accurate and reliable, it must have one large sample property: consistency. An

estimator is a consistent estimator of a population parameter if the sampling distribution of the

estimator collapses to the true value of the population parameter as the sample size becomes

infinitely large. A consistent estimator has two properties. 1) If the estimator is biased, as the

sample size increases the bias decreases. 2) As the sample size increases, the variance decreases.

Thus, the larger the sample size, the smaller the systematic and random estimation error of the

estimator.

Properties of an Estimator: Conclusions

The most accurate and reliable estimator is an estimator that is unbiased, efficient, and consistent.

This estimator will have no systematic error and minimum sampling error for any given size

sample you might use to obtain your estimate of β. The larger your sample, the smaller the

sampling error. Therefore, for a given sample of a given size, it will produce an estimate that is as

close as possible to the true unknown value of the population parameter.

ESTIMATOR FOR α AND β

To obtain estimates of the regression coefficients, α and β, we will use the ordinary least squares

(OLS) estimator. The ordinary least squares estimator tells us to choose as our estimates of α and

β the numbers that minimize the residual sum of squares for the sample.

Residual Sum of Squares Function

To calculate the OLS estimates for α and β, we need to find the unknown values, α^ and β^, that minimize a residual sum of squares function for the sample data. The residual for the tth observation is defined as $\hat{\mu}_t = Y_t - \hat{\alpha} - \hat{\beta} X_t$. The residual sum of squares function is given by $RSS(\hat{\alpha}, \hat{\beta}) = \sum (Y_t - \hat{\alpha} - \hat{\beta} X_t)^2$, where RSS denotes residual sum of squares. Because the sample is given, we know the values of Yt and Xt, and therefore they are treated as constants. Because we don’t know the values of α^ and β^, they are treated as unknowns.

Deriving the OLS Estimators for α and β

To derive the OLS estimators for α and β, we find the values of α^ and β^ that minimize the function RSS. This yields the following system of two equations in two unknowns.

$\sum Y_t = n\hat{\alpha} + \hat{\beta} \sum X_t$

$\sum X_t Y_t = \hat{\alpha} \sum X_t + \hat{\beta} \sum X_t^2$

These are called the normal equations. The normal equations are the first-order necessary conditions for minimizing the residual sum of squares function. We can solve these two equations sequentially. Doing so yields the following expressions for β^ and α^.

$\hat{\beta} = \sum (X_t - \bar{X})(Y_t - \bar{Y}) \,/\, \sum (X_t - \bar{X})^2$ = Covariation(X, Y) / Variation(X) = Cov(X, Y) / Var(X)

$\hat{\alpha} = \bar{Y} - \hat{\beta} \bar{X}$

These are the OLS estimators for α and β for the SCLRM. Each is a rule that tells us how to use the

sample data to obtain estimates of the unknown population parameters.
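The OLS formulas for the slope and intercept can be sketched in a few lines of Python. The function name `ols` and the five-observation sample are hypothetical; the code also checks that the resulting estimates satisfy the two normal equations.

```python
def ols(x, y):
    """Return (alpha_hat, beta_hat) from the OLS formulas for one regressor."""
    n = len(x)
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    # Covariation of X and Y, and variation of X.
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    beta_hat = sxy / sxx
    alpha_hat = y_bar - beta_hat * x_bar
    return alpha_hat, beta_hat

# Hypothetical sample (illustrative assumption).
x = [1.0, 2.0, 3.0, 4.0, 5.0]
y = [2.0, 4.0, 5.0, 4.0, 5.0]

alpha_hat, beta_hat = ols(x, y)

# Both sides of each normal equation, evaluated at the estimates.
n = len(x)
lhs1, rhs1 = sum(y), n * alpha_hat + beta_hat * sum(x)
lhs2 = sum(xi * yi for xi, yi in zip(x, y))
rhs2 = alpha_hat * sum(x) + beta_hat * sum(xi ** 2 for xi in x)

print(alpha_hat, beta_hat)
```

For this made-up sample the covariation is 6 and the variation in X is 10, so the slope estimate is 0.6 and the intercept estimate is 4 – 0.6 • 3 = 2.2.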

Are the OLS Estimators for α and β Accurate and Reliable?

To determine if the OLS estimators α^ and β^ are accurate and reliable, we need to derive their

sampling distributions and analyze their small and large sample properties.

Form of the Sampling Distributions

The SCLRM assumes that the error term has a normal distribution. Given this assumption, it

follows that the sampling distributions of the OLS estimators α^ and β^ are normal

distributions. Therefore, α^ ~ N(Mean, Variance) and β^ ~ N(Mean, Variance).

Mean of the Sampling Distributions

The SCLRM assumes that the error term has mean zero, and therefore that the error term is

uncorrelated with the explanatory variable. Given these assumptions, it follows that the means of

the sampling distributions are E(α^) = α and E(β^) = β. Therefore, α^ ~ N(α, Variance) and

β^ ~ N(β, Variance).

Variances, Covariance, and Standard Deviations of the Sampling Distributions

The SCLRM also assumes that the error term has constant variance and the errors are

independent. Given the assumptions that the error term has mean zero, constant variance, and the

errors are independent, it follows that the variances and covariance of the sampling distributions

are

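$Var(\hat{\beta}) = \sigma^2 \,/\, \sum (X_t - \bar{X})^2$

$Var(\hat{\alpha}) = [(\sum X_t^2) / n]\,[\sigma^2 \,/\, \sum (X_t - \bar{X})^2]$

$Cov(\hat{\alpha}, \hat{\beta}) = -\bar{X}\,[\sigma^2 \,/\, \sum (X_t - \bar{X})^2]$

Therefore, $\hat{\beta} \sim N(\beta,\ \sigma^2 / \sum (X_t - \bar{X})^2)$ and $\hat{\alpha} \sim N(\alpha,\ [(\sum X_t^2)/n]\,[\sigma^2 / \sum (X_t - \bar{X})^2])$. The

square roots of the variances are the standard deviations of the sampling distributions, called the

standard errors of the estimators, and denoted s.e.(β^) and s.e.(α^).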
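The sampling distribution of the OLS slope estimator can also be approximated by an experiment. The sketch below is a small Monte Carlo simulation under assumed values for α, β, σ, and the X values (all hypothetical): it draws many samples from the model, computes the slope estimate for each, and compares the simulated mean and standard deviation with β and with the theoretical standard error.

```python
import random
import statistics

random.seed(0)  # fixed seed so the experiment is repeatable

# Hypothetical population parameters and fixed X values (illustrative assumptions).
alpha, beta, sigma = 1.0, 0.5, 2.0
x = [float(t) for t in range(1, 21)]
x_bar = sum(x) / len(x)
sxx = sum((xi - x_bar) ** 2 for xi in x)

def beta_hat_from_new_sample():
    """Draw one sample from Y_t = alpha + beta*X_t + mu_t and return the OLS slope."""
    y = [alpha + beta * xi + random.gauss(0.0, sigma) for xi in x]
    y_bar = sum(y) / len(y)
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    return sxy / sxx

# Repeat the sampling experiment many times.
estimates = [beta_hat_from_new_sample() for _ in range(5000)]

simulated_mean = statistics.mean(estimates)
simulated_sd = statistics.pstdev(estimates)
# Theoretical standard error: square root of sigma^2 / sum((X_t - XBAR)^2).
theoretical_se = sigma / sxx ** 0.5

print(simulated_mean, simulated_sd, theoretical_se)
```

If the assumptions hold, the simulated mean should be close to the assumed β and the simulated standard deviation close to the theoretical standard error, with small differences due to the finite number of replications.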

Variance/Covariance Matrix of Estimates

It is standard practice to use the variances and covariances to construct what is called a variance-covariance matrix of estimates. The general form of this matrix is as follows.

$$Cov = \begin{bmatrix} Var(\hat{\alpha}) & Cov(\hat{\alpha}, \hat{\beta}) \\ Cov(\hat{\alpha}, \hat{\beta}) & Var(\hat{\beta}) \end{bmatrix}$$

Small Sample Properties

Because E(α^) = α and E(β^) = β, the OLS estimators are unbiased. It can also be shown that

in the class of linear unbiased estimators the OLS estimators have minimum variance, and

therefore they are efficient. The small sample properties can be summarized by the Gauss-Markov theorem: “Given the assumptions of the classical linear regression model, the OLS

estimators α^ and β^ are the best linear unbiased estimators of the population parameters α and

β.”

Large Sample Properties

It can be shown that the large sample (asymptotic) sampling distributions of the OLS estimators

are normal distributions, and that the OLS estimators are consistent.

Conclusions about OLS Estimators

If the assumptions of the CLRM are satisfied, and therefore the CLRM is a reasonable

approximation of the true data generation process, then the OLS estimators of α and β are the best

estimators. This is because we can’t find an alternative estimator that produces more accurate

and reliable estimates than OLS; that is, estimates that will consistently come closer to the true

values of the population parameters. However, the following caveats must be noted.

Best May Not Be Very Good

The OLS estimator will be more reliable and precise than any other linear unbiased estimator. However, this may

not be very reliable and precise. The smaller the variation in X and/or the larger the error

variance, the bigger the variance of the sampling distributions of α^ and β^, and therefore the less

reliable and precise the estimates.

Bias in the OLS Estimator

The OLS estimator will be biased if the error term does not have mean zero. The error term will

not have mean zero if it is correlated with the explanatory variable. The error term will be

correlated with the explanatory variable if there are omitted confounding variables, reverse

causation, sample selection problems or measurement error in the explanatory variable.

ESTIMATOR FOR σ2

To obtain an estimate of the error variance σ2, we will use the estimator σ2^ = RSS / df

= RSS / (n – k), where n is the sample size and k is the number of regression coefficients (2).

Standard Error of the Regression

The standard error of the regression is the square root of the estimate of the error variance. It

measures how spread-out the data points are around the regression line. It is an estimate of the

standard deviation of Y after the effect of X has been taken out.
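As a minimal sketch, the error variance estimate and the standard error of the regression can be computed as follows. The five-observation sample is hypothetical and kept tiny so the arithmetic can be checked by hand.

```python
import math

# Hypothetical sample (illustrative assumption).
x = [1.0, 2.0, 3.0, 4.0, 5.0]
y = [2.0, 4.0, 5.0, 4.0, 5.0]
n, k = len(x), 2  # k = number of regression coefficients (alpha and beta)

# OLS estimates from the slope and intercept formulas.
x_bar, y_bar = sum(x) / n, sum(y) / n
sxx = sum((xi - x_bar) ** 2 for xi in x)
beta_hat = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y)) / sxx
alpha_hat = y_bar - beta_hat * x_bar

# Residuals and residual sum of squares.
residuals = [yi - alpha_hat - beta_hat * xi for xi, yi in zip(x, y)]
rss = sum(r ** 2 for r in residuals)

# Estimate of the error variance: RSS / (n - k).
sigma2_hat = rss / (n - k)

# Standard error of the regression: square root of the variance estimate.
ser = math.sqrt(sigma2_hat)

print(rss, sigma2_hat, ser)
```

For this sample the residual sum of squares is 2.4 with 3 degrees of freedom, so the estimated error variance is 0.8.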

ESTIMATOR FOR THE VARIANCE, COVARIANCE, AND STANDARD ERROR OF THE

OLS ESTIMATORS α^ AND β^

To obtain measures of the precision of the point estimates, construct interval estimates, and test

hypotheses, we need to obtain estimates of the variances, covariances, and standard errors of the

sampling distributions of the OLS estimators α^ and β^. We will use the following estimators.

$\widehat{Var}(\hat{\beta}) = \hat{\sigma}^2 \,/\, \sum (X_t - \bar{X})^2$

$\widehat{Var}(\hat{\alpha}) = [(\sum X_t^2) / n]\,[\hat{\sigma}^2 \,/\, \sum (X_t - \bar{X})^2]$

$\widehat{Cov}(\hat{\alpha}, \hat{\beta}) = -\bar{X}\,[\hat{\sigma}^2 \,/\, \sum (X_t - \bar{X})^2]$

The estimators for s.e.(β^) and s.e.(α^) are the square roots of the estimates of the variances.
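The estimated variances, covariance, and standard errors can be sketched in Python as follows. The sample is hypothetical, and the variable names are chosen for the example only.

```python
import math

# Hypothetical sample (illustrative assumption).
x = [1.0, 2.0, 3.0, 4.0, 5.0]
y = [2.0, 4.0, 5.0, 4.0, 5.0]
n, k = len(x), 2

# OLS estimates and the estimate of the error variance.
x_bar, y_bar = sum(x) / n, sum(y) / n
sxx = sum((xi - x_bar) ** 2 for xi in x)
beta_hat = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y)) / sxx
alpha_hat = y_bar - beta_hat * x_bar
rss = sum((yi - alpha_hat - beta_hat * xi) ** 2 for xi, yi in zip(x, y))
sigma2_hat = rss / (n - k)

# Estimated variances and covariance of the OLS estimators.
var_beta_hat = sigma2_hat / sxx
var_alpha_hat = (sum(xi ** 2 for xi in x) / n) * (sigma2_hat / sxx)
cov_alpha_beta = -x_bar * (sigma2_hat / sxx)

# Estimated standard errors: square roots of the estimated variances.
se_beta_hat = math.sqrt(var_beta_hat)
se_alpha_hat = math.sqrt(var_alpha_hat)

print(var_beta_hat, var_alpha_hat, cov_alpha_beta, se_beta_hat, se_alpha_hat)
```

The negative covariance reflects the fact that, when the mean of X is positive, a sample that overestimates the slope tends to underestimate the intercept.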

INTERVAL ESTIMATES of α^ and β^

There are many different formulas for constructing interval estimates for different estimators. For

the OLS estimators α^ and β^, the general formula for constructing an interval estimate is

Point Estimate ± (Critical Value) • (Standard Error of the Estimate)

The critical value and the standard error of the estimate are obtained from the sampling

distribution of the estimator. To determine the critical value, you choose a degree of confidence.

The degree of confidence is the probability that the interval will contain the true value of the

population parameter. If the sampling distribution of the estimator is a normal distribution, then

the critical value is taken from the standard normal distribution (true standard error) or the t-distribution (estimated standard error). The standard error of the point estimate is the standard

deviation of the sampling distribution of the estimator that produced the point estimate. It

measures the precision of the point estimate.

Margin of Error of the Point Estimate

The product of the critical value and the standard error is called the margin of error of the point

estimate. It measures the maximum error in the point estimate, with some given probability. This

tells us how certain or confident we can be that the point estimate is close to the true value of the

population parameter.

Rule-of-Thumb for Constructing a 95% Interval Estimate

A rule-of-thumb often used to construct a 95% interval estimate is to use 2 as the critical

value, which is the approximate critical value for a 95% degree of confidence from the t-distribution for a sample size of 30 or larger.

Rule-of-Thumb 95% OLS Interval Estimates for α and β

For the OLS estimator, the rule-of-thumb 95% interval estimates for the regression coefficients α

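and β are given by β^ ± 2 • s.e.(β^)^ and α^ ± 2 • s.e.(α^)^, where $\widehat{s.e.}(\hat{\beta}) = \sqrt{\hat{\sigma}^2 \,/\, \sum (X_t - \bar{X})^2}$ and $\widehat{s.e.}(\hat{\alpha}) = \sqrt{[(\sum X_t^2) / n]\,[\hat{\sigma}^2 \,/\, \sum (X_t - \bar{X})^2]}$ are the estimated standard errors of the

estimates.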
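A short sketch of the rule-of-thumb interval calculation follows. The sample is hypothetical and has only five observations so the numbers can be verified by hand; with so few observations the exact t critical value would be larger than 2, so this only illustrates the mechanics.

```python
import math

# Hypothetical sample (illustrative assumption; far fewer than 30 observations).
x = [1.0, 2.0, 3.0, 4.0, 5.0]
y = [2.0, 4.0, 5.0, 4.0, 5.0]
n, k = len(x), 2

# OLS estimates, error variance estimate, and estimated standard errors.
x_bar, y_bar = sum(x) / n, sum(y) / n
sxx = sum((xi - x_bar) ** 2 for xi in x)
beta_hat = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y)) / sxx
alpha_hat = y_bar - beta_hat * x_bar
rss = sum((yi - alpha_hat - beta_hat * xi) ** 2 for xi, yi in zip(x, y))
sigma2_hat = rss / (n - k)
se_beta = math.sqrt(sigma2_hat / sxx)
se_alpha = math.sqrt((sum(xi ** 2 for xi in x) / n) * sigma2_hat / sxx)

# Point estimate +/- (critical value) * (standard error), with critical value 2.
critical_value = 2.0
margin_beta = critical_value * se_beta
margin_alpha = critical_value * se_alpha
beta_interval = (beta_hat - margin_beta, beta_hat + margin_beta)
alpha_interval = (alpha_hat - margin_alpha, alpha_hat + margin_alpha)

print(beta_interval, alpha_interval)
```

The margin of error is the half-width of each interval, so a larger standard error translates directly into a wider, less informative interval.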

HYPOTHESIS TESTING

The next step in an empirical study is to test hypotheses about the parameters of the statistical

model.

Hypothesis

An hypothesis is an assertion about the value of one or more population parameters. It is

expressed as a restriction on the parameters of a statistical model.

LOGIC OF HYPOTHESIS TESTING

Suppose we want to test the hypothesis β = 0. Choose an estimator β^. Use the sample data to

obtain an estimate, β^ = 1.00. Because β^ ≠ 0, should we reject the hypothesis and conclude it’s

false? Not necessarily. The estimate will almost always be different from the true value of the

population parameter because of random sampling estimation error. To decide if the hypothesis is

likely to be true or false, we compare the estimate β^ = 1.00 to the hypothesized value β = 0. If the

estimate is reasonably close to the hypothesized value, then we conclude that the difference is

likely to be the result of random sampling error (chance), and therefore we accept the hypothesis

β = 0. If the estimate is significantly different from the hypothesized value, then we conclude that

the difference is likely to be a real difference, and therefore we reject the hypothesis β = 0. How

do we determine if the estimate β^ = 1.00 is likely to be reasonably close or significantly different

from the hypothesized value β = 0? We use the sampling distribution of the estimator β^ to

calculate the probability of obtaining an estimate at least as large as β^ = 1.00 if the hypothesis β

= 0 is true. If this probability is small, say 5% or less, then we conclude that β^ = 1.00 is

significantly different from β = 0 and we reject the hypothesis. If this probability is large, say

greater than 5%, then we conclude that β^ = 1.00 is reasonably close to β = 0 and we accept the

hypothesis.

Null Hypothesis, Type I and II Errors, Level of Significance, Power of Test

The hypothesis that we are testing is called the null hypothesis. When testing the null hypothesis,

we can make a correct conclusion or an incorrect conclusion. We make a correct conclusion

when we: 1) reject the null hypothesis when it is false, 2) accept the null hypothesis when it is

true. We make an incorrect conclusion when we 1) reject the null hypothesis when it is true, 2)

accept the null hypothesis when it is false. Thus, when testing an hypothesis we can make two

errors. A type I error occurs when we reject the null hypothesis when it is true. A type II error

occurs when we accept the null hypothesis when it is false. The probability of making a type I

error is called the level of significance of the test. The level of significance of the test is also

called the size of the test. The power of the test is the probability of rejecting the null hypothesis

when it is false. It is given by one minus the probability of a type II error.

PROCEDURES FOR TESTING HYPOTHESES

There are 3 alternative approaches that can be used to test an hypothesis. 1) Level of significance

approach. 2) Confidence interval approach. 3) P-value approach. In this class, we will use the

level of significance approach.

LEVEL OF SIGNIFICANCE APPROACH

There are 5 basic steps involved in the level of significance approach.

1. Specify the null and alternative hypotheses.

2. Derive a test statistic and the sampling distribution of the test statistic under the null

hypothesis.

3. Choose a level of significance and find the critical value(s) for the test statistic.

4. Use the sample data to calculate the actual value of the test statistic.

5. Compare the actual (calculated) value of the test statistic to the critical value and accept or

reject the null hypothesis.

Null and Alternative Hypotheses

The first step in testing an hypothesis is to state the null and alternative hypotheses. The null

hypothesis is an assertion about the value(s) of one or more parameters. The test is designed to

determine the “strength of evidence” against the null hypothesis. We usually hope to reject the

null. The alternative hypothesis is the hypothesis we usually hope is true. We usually hope to

accept the alternative. The most often tested null hypothesis is the hypothesis that one variable,

X, has no effect on some other variable Y. The alternative hypothesis is that X has an effect on Y.

We hope to reject the null hypothesis of “no effect” and accept the alternative hypothesis that

there is an effect. The null and alternative hypotheses are written as Ho: β = 0 and H1: β ≠ 0. This

is called a two-sided or two-tailed test because the alternative hypothesis allows β to be greater

than or less than zero. If the alternative hypothesis was written as either β > 0 or β < 0, this would

be a one-sided or one-tailed test.

Derive a Test Statistic and the Sampling Distribution of the Test Statistic Under the Null

Hypothesis

The second step in testing an hypothesis is to derive a test statistic and the sampling distribution

of the test statistic under the null hypothesis. Like an estimator, a test statistic is a random

variable whose value depends on the sample data, varies from sample to sample, and can be

described by a sampling distribution. When we choose the t-statistic as our test statistic, we are

using what is called the t-test. When testing a hypothesis about the value of a single parameter in

the SCLRM, the appropriate test is the t-test. The t-statistic and its sampling distribution are: t =

(β^ – β) / s.e.( β^)^ ~ t(n - k), where β^ is the estimate, β is the hypothesized value, and s.e.( β^) is

the standard error of the estimate. The t-statistic has a t-distribution with n – k degrees of

freedom, where k is the number of regression coefficients in the model.
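
As a minimal sketch of how the t-statistic can be calculated for the slope of the SCLRM, the Python code below estimates the slope by OLS, computes its standard error, and forms t = (β^ – β) / s.e.(β^). The data values and the names ols_simple, beta_null, and t_stat are assumptions made up for illustration; with an intercept and one slope, k = 2.

```python
import math

def ols_simple(x, y):
    """OLS for Y = alpha + beta*X + error.

    Returns (alpha_hat, beta_hat, se_beta, degrees_of_freedom).
    """
    n = len(x)
    k = 2  # number of regression coefficients: intercept and slope
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    beta_hat = sxy / sxx
    alpha_hat = y_bar - beta_hat * x_bar
    # Residual sum of squares and estimated error variance
    rss = sum((yi - alpha_hat - beta_hat * xi) ** 2 for xi, yi in zip(x, y))
    sigma2_hat = rss / (n - k)
    se_beta = math.sqrt(sigma2_hat / sxx)
    return alpha_hat, beta_hat, se_beta, n - k

# Hypothetical sample data (not from the notes)
x = [1.0, 2.0, 3.0, 4.0, 5.0]
y = [2.0, 4.0, 5.0, 4.0, 5.0]

alpha_hat, beta_hat, se_beta, df = ols_simple(x, y)
beta_null = 0.0  # hypothesized value under H0: beta = 0
t_stat = (beta_hat - beta_null) / se_beta
print(f"beta_hat={beta_hat:.3f}, se={se_beta:.3f}, t={t_stat:.3f}, df={df}")
```

For this made-up sample the slope estimate is 0.6 and the t-statistic has n – k = 3 degrees of freedom.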

Choose the Level of Significance of the Test and Find the Critical Value(s) of the Test Statistic

The third step in testing a hypothesis is to choose the level of significance of the test and find the

critical value(s) of the test statistic. The level of significance of the test is the probability of

making a Type I error; that is, the probability of rejecting the null hypothesis when it is true.

Use the Sample Data to Calculate the Actual Value of the Test Statistic

The fourth step in testing an hypothesis is to use the sample data and the estimator to calculate the

actual value of the test statistic.

Compare the Actual Value of the Test Statistic to the Critical Value

The fifth step in testing an hypothesis is to compare the actual value of the test statistic, t, to the

critical value(s) of the test statistic, t*. If the absolute value of the actual value is greater than or

equal to (less than) the critical value, then reject (accept) the null hypothesis. If we reject

(accept) the null hypothesis we say that the estimate is statistically significant (insignificant) at

the chosen level of significance; for example, at the 5% level.
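
The comparison in the fifth step can be sketched in a few lines of Python. The function name t_test_decision and the calculated t-value are hypothetical; the critical value 3.182 is the two-tailed 5% value for 3 degrees of freedom read from a standard t-table.

```python
def t_test_decision(t_stat, t_crit):
    """Two-tailed decision rule: reject the null when |t| >= t*.

    "accept" follows the wording of these notes and means
    "fail to reject the null hypothesis".
    """
    return "reject" if abs(t_stat) >= abs(t_crit) else "accept"

t_stat = 2.121  # hypothetical calculated value of the t-statistic
t_crit = 3.182  # two-tailed 5% critical value, 3 degrees of freedom (t-table)

decision = t_test_decision(t_stat, t_crit)
print(f"|t| = {abs(t_stat):.3f}, t* = {t_crit:.3f}, decision: {decision} H0")
```

Because 2.121 is less than 3.182, the null hypothesis is not rejected in this example, and the estimate is statistically insignificant at the 5% level.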

P-VALUE AS A MEASURE OF STRENGTH OF EVIDENCE AGAINST THE NULL

HYPOTHESIS

The p-value is the probability of obtaining a test statistic at least as extreme as the one actually

calculated from the sample, if the null hypothesis is true. The p-value can be interpreted as a

measure of the strength of evidence against the null hypothesis, and for the alternative hypothesis.

The smaller (larger) the p-value, the stronger (weaker) the evidence against the null and for the

alternative hypothesis.
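
To make the p-value concrete, the sketch below computes a two-sided p-value for a t-statistic by numerically integrating the density of the t-distribution. The function names t_pdf and two_sided_p_value are hypothetical, and Simpson's rule is a choice made here to keep the example free of external libraries; in practice a statistical package would report the p-value directly.

```python
import math

def t_pdf(x, df):
    """Density of Student's t-distribution with df degrees of freedom."""
    c = math.gamma((df + 1) / 2) / (math.sqrt(df * math.pi) * math.gamma(df / 2))
    return c * (1 + x * x / df) ** (-(df + 1) / 2)

def two_sided_p_value(t_stat, df, steps=2000):
    """P(|T| >= |t_stat|) under the null hypothesis.

    Integrates the density over [0, |t_stat|] with Simpson's rule
    (steps must be even) and uses the symmetry of the t-distribution.
    """
    b = abs(t_stat)
    if b == 0.0:
        return 1.0
    h = b / steps
    total = t_pdf(0.0, df) + t_pdf(b, df)
    for i in range(1, steps):
        weight = 4 if i % 2 else 2  # odd nodes get 4, even interior nodes get 2
        total += weight * t_pdf(i * h, df)
    central_area = 2 * (h / 3) * total  # P(-|t| <= T <= |t|)
    return 1.0 - central_area

# Hypothetical calculated t-statistic with 3 degrees of freedom
p = two_sided_p_value(2.121, 3)
print(f"two-sided p-value: {p:.3f}")
```

A smaller p-value returned by this function corresponds to stronger evidence against the null hypothesis.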

DOES X HAVE AN EFFECT ON Y?: TWO APPROACHES TO ANSWERING THIS

QUESTION

If our objective is to explain an economic relationship, the first question we ask is: “Does X have

an effect on Y?” Two approaches can be used to answer this question. 1) Level of significance

hypothesis test approach. 2) P-value strength of evidence approach.

Level of Significance Hypothesis Test Approach

To answer this question “yes or no” we must use the level of significance approach. This

approach requires us to choose a specific level of significance. Choosing a level of significance is

somewhat arbitrary. It depends upon how willing we are to risk drawing an incorrect conclusion. If we

want very badly to avoid a Type I error, we will want to choose a level of significance of 0.01 or

less. If we want very badly to avoid a Type II error, we will want to choose a level of significance

of 0.05 or higher.

P-Value Strength of Evidence Approach

Rather than answering this question yes or no, we might simply provide a measure of the strength

of evidence for an effect. The smaller (larger) the p-value, the stronger (weaker) the evidence for

an effect. Alternatively, the smaller (larger) the p-value, the less (more) likely it is that an effect

as large as the one observed would arise by chance alone if X truly had no effect on Y, and therefore

the more (less) plausible it is that the observed effect is a real effect.

PREDICTION AND GOODNESS OF FIT

To use X to predict Y, we use the sample regression function. The sample regression function is

the regression function with the estimated values of the parameters. We then substitute a value of

X into the sample regression function and calculate the corresponding value of Y. This value of Y

is the predicted value of Y.
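
A minimal sketch of prediction with the sample regression function follows. The estimates 2.2 and 0.6 and the chosen value of X are hypothetical, as is the function name predict_y.

```python
def predict_y(alpha_hat, beta_hat, x_value):
    """Sample regression function: predicted Y = alpha_hat + beta_hat * X."""
    return alpha_hat + beta_hat * x_value

alpha_hat = 2.2  # hypothetical estimated intercept
beta_hat = 0.6   # hypothetical estimated slope

y_pred = predict_y(alpha_hat, beta_hat, 6.0)
print(f"predicted Y at X = 6: {y_pred:.2f}")
```

Substituting X = 6 gives a predicted value of 2.2 + 0.6 × 6 = 5.8.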

Measures of Goodness of fit

If our objective is to use X to predict Y, then we should measure the goodness of fit of the model.

Goodness-of-fit refers to how well the model (regression line) fits the sample data. The better the

model fits the data, the higher the predictive validity of the model, and therefore the better values

of X should predict values of Y. The two most often used measures of goodness of fit for the

SCLRM are the standard error of the regression, and the R2 statistic.

Standard error of the regression

The standard error of the regression (SER) is given by the square root of the estimated error

variance, SER = √(σ^²) = √[RSS / (n – k)]. It measures how far a typical Y-value differs from the

predicted value of Y given by the regression line. The smaller (larger) the standard error of the

regression, the better (worse) the model fits the data, and therefore the higher (lower) the

predictive validity of the model.
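
The calculation of the standard error of the regression can be sketched as follows. The observed and fitted values are hypothetical (the fitted values come from an assumed line 2.2 + 0.6X with X = 1, ..., 5), and the function name is made up for illustration.

```python
import math

def standard_error_of_regression(y, y_fitted, k=2):
    """SER = sqrt(RSS / (n - k)), where k is the number of coefficients."""
    n = len(y)
    rss = sum((yi - fi) ** 2 for yi, fi in zip(y, y_fitted))
    return math.sqrt(rss / (n - k))

y = [2.0, 4.0, 5.0, 4.0, 5.0]         # hypothetical observed Y-values
y_fitted = [2.8, 3.4, 4.0, 4.6, 5.2]  # hypothetical fitted values

ser = standard_error_of_regression(y, y_fitted)
print(f"SER = {ser:.4f}")
```

Here RSS = 2.4 and n – k = 3, so the SER is the square root of 0.8, measured in the same units as Y.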

R2 Statistic

The R2 measures the proportion of the variation in the dependent variable that is explained by the

variation in the explanatory variable. It can take any value between 0 and 1. If the R2 is equal to

one, all of the data points lie on the sample regression line, and therefore the explanatory variable

explains all of the variation in the dependent variable. If the R2 statistic is equal to zero the data

points are highly scattered around the regression line, which is a horizontal line, and therefore the

explanatory variable explains none of the variation in the dependent variable. The closer R2 is to 1,

the more tightly the data points are clustered around the regression line, and therefore the larger

the proportion of the variation in Y explained by X. For example, if the R2 statistic is 0.40 this

means that X explains 40% of the variation in Y. The remaining 60% of the variation in Y is

explained by factors other than X that affect Y, summarized by the error term. The larger

(smaller) the R2 statistic, the better (worse) the model fits the data, and therefore the higher

(lower) the predictive validity of the model. The R2 statistic is calculated using either of the

following formulas: R2 = ESS / TSS or R2 = 1 – (RSS / TSS), where ESS is the explained sum of squares,

RSS is the residual sum of squares, and TSS is the total sum of squares for Y.
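
The sketch below calculates the R2 statistic two ways, as the explained sum of squares (labelled ESS here) divided by TSS, and as 1 – (RSS / TSS). The data, the fitted values, and the function name are hypothetical. The two results agree only when the fitted values come from an OLS regression that includes an intercept, which is assumed here.

```python
def r_squared_both_ways(y, y_fitted):
    """Return (ESS / TSS, 1 - RSS / TSS) for observed and OLS-fitted values."""
    y_bar = sum(y) / len(y)
    tss = sum((yi - y_bar) ** 2 for yi in y)                 # total sum of squares
    rss = sum((yi - fi) ** 2 for yi, fi in zip(y, y_fitted)) # residual sum of squares
    ess = sum((fi - y_bar) ** 2 for fi in y_fitted)          # explained sum of squares
    return ess / tss, 1 - rss / tss

y = [2.0, 4.0, 5.0, 4.0, 5.0]         # hypothetical observed Y-values
y_fitted = [2.8, 3.4, 4.0, 4.6, 5.2]  # OLS fitted values from 2.2 + 0.6X, X = 1..5

r2_from_ess, r2_from_rss = r_squared_both_ways(y, y_fitted)
print(f"ESS/TSS = {r2_from_ess:.3f}, 1 - RSS/TSS = {r2_from_rss:.3f}")
```

In this example TSS = 6, RSS = 2.4, and ESS = 3.6, so both formulas give R2 = 0.60: X explains 60% of the variation in Y.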

DRAWING CONCLUSIONS FROM THE STUDY

The final step of an empirical study has two parts. 1) Make conclusions about the economic

relationship. 2) Assess the validity of the conclusions.

CONCLUSIONS

If the objective of the study is to explain the relationship between X and Y, then our conclusions

should answer the following questions. Does X affect Y? If so, what is the direction and size of

the effect? What mechanism produces the effect?

VALIDITY OF CONCLUSIONS

To assess the validity of the conclusions we use two criteria. 1) Internal validity. 2) External

validity.

INTERNAL VALIDITY

An empirical study is internally valid if the conclusions about the independent causal effect of X

on Y are valid for the population being studied.

Criteria for Internal Validity

To assess internal validity, we must address two questions.

1. Is the estimate of the effect of X on Y unbiased?

2. Is the standard error of the estimate of the effect of X on Y correct?

If the estimate is biased, then it is systematically too high or low, and we can’t have much

confidence in our conclusions. If the standard error is incorrect, then the t-test, p-value, margin of

error, and confidence interval are incorrect, and therefore our conclusions may be incorrect.

UNBIASEDNESS OF ESTIMATE

The most important potential sources of bias are confounding variables and reverse causation.

Confounding Variables

A variable Z is a confounding variable if the effect of Z on Y cannot be separated from the effect

of X on Y. Two conditions are necessary for Z to be a confounding variable. 1) Z has an effect

on Y. 2) Z is correlated with X. There are two types of confounding variables. 1) Observable. 2)

Unobservable. Z is observable if we have data for it. Z is unobservable if we don’t have data for

it. If we don’t control for confounding variables, then our estimate of the effect of X on Y will be

biased in small samples, and inconsistent in large samples. This is called omitted variable bias.
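
Omitted variable bias can be illustrated with a small simulation. Everything in the sketch below is an assumption chosen for illustration: the true effect of X on Y is β = 1, the confounder Z has effect γ = 2 on Y, and X is constructed so that Cov(X, Z) / Var(X) = 1/2. Under these assumptions the simple regression of Y on X alone should converge to β + γ × 1/2 = 2 rather than to 1.

```python
import random

def ols_slope(x, y):
    """Slope from a simple OLS regression of y on x (with intercept)."""
    n = len(x)
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    return sxy / sxx

random.seed(0)
n = 5000
beta, gamma = 1.0, 2.0  # assumed true effects of X and Z on Y

z = [random.gauss(0, 1) for _ in range(n)]       # confounding variable
x = [zi + random.gauss(0, 1) for zi in z]        # X is correlated with Z
y = [beta * xi + gamma * zi + random.gauss(0, 1)  # Z has an effect on Y
     for xi, zi in zip(x, z)]

beta_hat_short = ols_slope(x, y)  # regression that omits Z
print(f"true beta = {beta}, estimate omitting Z = {beta_hat_short:.3f}")
```

The estimate stays near 2 however large the sample, which is what it means for the estimator to be inconsistent.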

Identification of Potential Confounding Variables

To identify potential confounding variables, we can use economic theory, past studies,

experience, intuition, or a formal statistical test.

Controlling for Confounding Variables

There are 4 methods to control for confounding variables. 1) Specify a multiple classical linear

regression model (MCLRM) and include the confounding variable(s) as explanatory variables in

the model. This method can be used only if the confounding variable(s) are observable. If the

confounding variable(s) are unobservable, then one of the following 3 methods can be used. 2)

Collect panel data and specify an error components statistical model. 3) Use an instrumental

variables estimator. This requires data on a variable(s) correlated with X but not correlated with

μ; that is, a variable(s) that does not have a direct effect on Y, but has an indirect effect on Y by

affecting X. 4) Conduct a randomized controlled experiment.
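
The first method, including an observable confounding variable in the regression, can be sketched with simulated data. The data generation process below is an assumption (true β = 1, confounder effect γ = 2). To avoid matrix algebra, the sketch obtains the multiple regression coefficient on X by partialling out: it first removes from X the part explained by Z, then regresses Y on what is left. This two-step route gives the same coefficient on X as a regression of Y on both X and Z; it is used here only for convenience.

```python
import random

def ols_fit(x, y):
    """Return (intercept, slope) from a simple OLS regression of y on x."""
    n = len(x)
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return y_bar - slope * x_bar, slope

random.seed(0)
n = 5000
beta, gamma = 1.0, 2.0  # assumed true effects of X and Z on Y
z = [random.gauss(0, 1) for _ in range(n)]
x = [zi + random.gauss(0, 1) for zi in z]
y = [beta * xi + gamma * zi + random.gauss(0, 1) for xi, zi in zip(x, z)]

# Step 1: remove from X the part that is explained by Z.
a, b = ols_fit(z, x)
x_resid = [xi - a - b * zi for xi, zi in zip(x, z)]

# Step 2: regress Y on the part of X that is unrelated to Z.
_, beta_controlled = ols_fit(x_resid, y)
_, beta_uncontrolled = ols_fit(x, y)

print(f"omitting Z: {beta_uncontrolled:.3f}, controlling for Z: {beta_controlled:.3f}")
```

Once Z is controlled for, the estimate is close to the assumed true value of 1, while the estimate that omits Z is not.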

Reverse Causation

Reverse causality exists if Y causes X, and therefore the effect of Y on X cannot be separated

from the effect of X on Y. Because the OLS estimator β^ picks up both effects, it is biased and

inconsistent. Reverse causality produces a correlation between X and μ. Why? If X causes Y, and

Y causes X then we have two equations, Y = α + βX + μ and X = γ + θY + ν. When μ changes,

Y changes. When Y changes, X changes. Therefore, μ is correlated with X. Because Corr(μ, X) ≠

0, the error term does not have conditional mean zero given X. We know that if the error term does not

have conditional mean zero, then the OLS estimator is biased. This is called simultaneous equations bias.
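
The two-equation argument can be checked with a simulation. The parameter values below (α = 1, β = 0.5, γ = 0, θ = 0.5, independent standard normal μ and ν) are assumptions chosen for illustration. Solving the two equations jointly gives X = (γ + θα + θμ + ν) / (1 – θβ), so X depends on μ. With these values the OLS slope should converge to 0.8 rather than to the true β of 0.5.

```python
import random

def ols_slope(x, y):
    """Slope from a simple OLS regression of y on x (with intercept)."""
    n = len(x)
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    return sxy / sxx

random.seed(1)
n = 20000
alpha, beta = 1.0, 0.5   # assumed parameters of Y = alpha + beta*X + mu
gamma, theta = 0.0, 0.5  # assumed parameters of X = gamma + theta*Y + nu

xs, ys, mus = [], [], []
for _ in range(n):
    mu = random.gauss(0, 1)
    nu = random.gauss(0, 1)
    # Values of X and Y that satisfy both equations at once
    x = (gamma + theta * alpha + theta * mu + nu) / (1 - theta * beta)
    y = alpha + beta * x + mu
    xs.append(x)
    ys.append(y)
    mus.append(mu)

beta_hat = ols_slope(xs, ys)
# The slope of mu on X has the same sign as Cov(X, mu)
x_mu_slope = ols_slope(xs, mus)
print(f"true beta = {beta}, OLS estimate = {beta_hat:.3f}")
print(f"slope of mu on X (nonzero means X and mu are correlated) = {x_mu_slope:.3f}")
```

The OLS estimate picks up both the effect of X on Y and the feedback from Y to X, so it overstates β in this example.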

Identification of Reverse Causation

To identify reverse causation, we can use economic theory, past studies, experience, intuition, or

a formal statistical test.

Controlling for Reverse Causation

There are 2 methods to control for reverse causality. 1) Use an instrumental variables estimator.

2) Conduct a randomized controlled experiment.

CORRECT STANDARD ERROR

The two most important sources of incorrect standard errors are the following. 1)

Heteroskedasticity. 2) Autocorrelation.

Heteroskedasticity

Heteroskedasticity exists when the error term has non-constant variance. This violates an

assumption of the SCLRM that is required to obtain the correct formula for the standard error.

This causes the estimated standard error of the OLS estimate to be incorrect. As a result, the

t-test, p-value, margin of error, and confidence interval are incorrect.

Identifying Heteroskedasticity

If the error term has constant variance, then the conditional variance of Y is the same for all

values of X. Theory, past studies, experience, intuition, or a formal statistical test can be used to

assess whether the conditional variance of Y depends upon X.

Obtaining the Correct Standard Error

To obtain the correct standard error, we can calculate a heteroskedasticity-robust standard error,

using Halbert White’s method. Most statistical software packages will do this for us upon request.
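
As a rough sketch of what the software does, the code below computes a heteroskedasticity-robust standard error for the slope of a simple regression, using the basic form of White's estimator with no small-sample correction: the robust variance is Σ(Xi – X̄)² ei² / [Σ(Xi – X̄)²]², where ei are the OLS residuals. The data and the function name are hypothetical, and statistical packages often apply a degrees-of-freedom adjustment, so their reported values may differ slightly.

```python
import math

def slope_standard_errors(x, y):
    """Return (beta_hat, conventional_se, white_robust_se) for the OLS slope."""
    n = len(x)
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    dev = [xi - x_bar for xi in x]
    sxx = sum(d * d for d in dev)
    beta_hat = sum(d * (yi - y_bar) for d, yi in zip(dev, y)) / sxx
    alpha_hat = y_bar - beta_hat * x_bar
    resid = [yi - alpha_hat - beta_hat * xi for xi, yi in zip(x, y)]
    # Conventional formula: assumes constant error variance
    sigma2_hat = sum(e * e for e in resid) / (n - 2)
    se_conventional = math.sqrt(sigma2_hat / sxx)
    # White's formula: each squared residual is weighted by its own (Xi - X_bar)^2
    se_robust = math.sqrt(sum(d * d * e * e for d, e in zip(dev, resid)) / sxx ** 2)
    return beta_hat, se_conventional, se_robust

# Hypothetical sample data (not from the notes)
x = [1.0, 2.0, 3.0, 4.0, 5.0]
y = [2.0, 4.0, 5.0, 4.0, 5.0]

beta_hat, se_conventional, se_robust = slope_standard_errors(x, y)
print(f"beta_hat={beta_hat:.3f}, conventional se={se_conventional:.4f}, robust se={se_robust:.4f}")
```

The slope estimate is the same in both cases; only the standard error, and therefore the t-test, p-value, and confidence interval, changes.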

Autocorrelation

Autocorrelation exists when the errors for different units in the population are correlated with one

another. This violates an assumption of the SCLRM that is required to obtain the correct formula

for the standard error. This causes the estimated standard error of the OLS estimate to be

incorrect. As a result, the t-test, p-value, margin of error, and confidence interval are incorrect.

Identifying Autocorrelation

Autocorrelation is most likely to exist when we use time series data.

EXTERNAL VALIDITY

An empirical study is externally valid if the conclusions about the independent causal effect of X

on Y can be generalized from the population and setting studied to the population and setting of

interest. An empirical study is externally valid if there are no large differences between the

population and setting being studied, and the population and setting of interest.