Statistics Review

advertisement

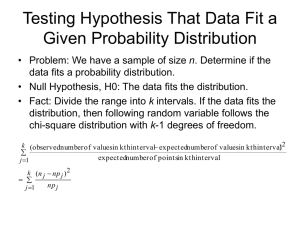

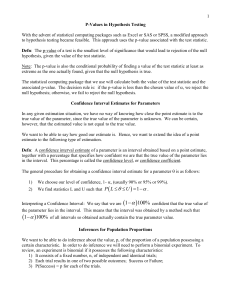

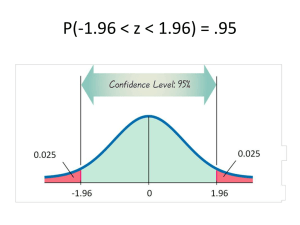

STATISTICS REVIEW Introduction Students are often intimidated by statistics. This brief overview is intended to place statistics in context and to provide a reference sheet for those who are trying to interpret statistics that they read. It does not attempt to show or to explain the mathematics involved. Although it is helpful if those who use statistics understand the math, the computer age has rendered that understanding unnecessary for many purposes. Practically speaking, students often simply want to know whether a particular result is significant, i.e. how likely it is that the obtained result may be attributable to something other than chance. Computer programs can easily produce numbers that allow such conclusions, if the student knows which tests to use and has an understanding of what the numbers mean. This summary is intended to help achieve that understanding. Basic Concepts Variables Most statistics involve at least two variables: an independent variable and a dependent variable. The independent variable is the one that the researcher focuses on as influencing the dependent variable. The dependent variable “depends” on the independent variable. For example, height “depends” on age: As individuals age, they usually grow taller. One cannot alter age by withholding food and thereby stunting the growth of children, so age cannot logically “depend” on height. As another example, scores on a test “depend” on the amount of knowledge an individual has on the subject matter. Assigning higher test scores would not increase knowledge. Attributes Every variable has attributes or components that constitute the variable. For example, the attributes of gender include male, female, and transgendered. The attributes of age (in years) include all of the numbers from zero to 122 or so. In research, the list of a variable’s attributes has to be exhaustive, that it, it has to cover all possibilities, and the attributes have to be discrete, that is they cannot overlap with each other. Levels of Measurement Every variable fits into one and only one level of measurement: nominal, ordinal, interval, or ratio. Nominal variables are differentiated by names (from Latin nomen, which means name). Examples include ethnicity, language group, and hair color. Ordinal variables are differentiated by their order (from Latin ordo, which means order) in relationship to each other. Examples include class rank, place of finish in a race, and birth order. We know which individual is above or below another individual based on their places in the ordered list, but the place on the ordered list tells nothing about the amount of difference between any two individuals. For example, the second place finisher in a race could have been behind the first place finisher by .01 second or by 100 seconds, but if all we know is the order of finishing, we know nothing about the closeness of their times. Likert scales produce ordinal levels of measurement. Interval variables are differentiated by a measurement that has regular intervals, such as inches, degrees Celsius, and some test scores. With interval measurements, unlike ordinal measurements, one could properly say that one individual was twice as tall as another or that one individual did half as well on a test as another individual. Ratio variables are similar to interval variables, except ratio variables have a true zero. Examples include degrees Kelvin (but not Celsius or Fahrenheit), number of children, number of times married, and number of statistics tests passed. Descriptive and Inferential Statistics Statistics are divided into two general categories—descriptive and inferential. Descriptive statistics include mean, median, mode, range, sum of squares (sum of squared deviation scores), variance (also known as mean square, which is short for mean of squared deviation scores), and standard deviation. It is assumed that graduate students have some familiarity with each of these statistics. Scores are often converted to z scores. A z score is simply a number that shows how far a score is from a mean. Therefore, a z score could range from 0 to infinity, but practically speaking, a z score of 4, which means that the raw score is 4 standard deviations from the mean, is so large that the area under the distribution curve beyond the z score is less than 0.0001. Although not a statistic, a regression line is a line through a data plot that best fits the data. The line produces the lowest possible difference between actual values and predicted values. Other descriptive statistics include the error sum of squares (SSE), regression sum of squares (SSR) and total sum of squares (SST). SSE + SSR = SST. Another descriptive statistic is proportion of variance explained (PVE). PVE is a measure of how well the regression line predicts the scores that are actually obtained. A correlation coefficient is a measure of the strength of a relationship between two variables. It is represented by the symbol r, and it can range between –1 and +1. The negative and positive signs reflect the direction of the slope of the line that shows the relationship between the two variables. The standard error of estimate is the standard deviation of the prediction errors. It tells how spread out scores are with respect to their predicted values. Inferential statistics involve tests to support conclusions about a population from which a sample is presumed to have been drawn. The tests that follow are considered to be inferential statistics. Assumptions All tests are based on the assumption that samples are randomly selected and randomly assigned and that individuals are independent from each other, i.e. that one member’s score does not influence another member’s score. Parametric tests are based on the assumption that populations from which samples are drawn have a normal distribution. Nonparametric tests do not have this assumption. Each test has other assumptions, such as regarding the type of data and the number of data points. The following test descriptions outline those assumptions. Number of Samples Different situations require different testing procedures. The following discussion is organized according to the number of samples that is being evaluated: one sample, two samples, and more than two samples. In each category, both parametric and nonparametric tests are explained. Single sample tests One-sample t test (Parametric) A t test is commonly used to compare two means to see if the are significantly different from each other. The t distribution varies according to the size of the sample, so once a t value is calculated, it has to be looked up in a table to find its significance level. The range of t values extends from 1.28 to 636.62, and higher numbers show increasing significance. (These statements are true of all t tests.) A one-sample or single-sample t test is used to see whether a group within a population is different from the population as a whole. The dependent variable must be interval or ratio. Chi-square goodness of fit test (Nonparametric) A Chi-square goodness of fit test is used to compare observed and expected frequencies within a group in a sample, i.e. whether the observed results differ from the expected results, with the expected results derived either from the whole population or from theoretical expectations. In addition to the universal assumptions, the Chi-square goodness of fit test rests on the assumption that the categories in the cross tabulation are mutually exclusive and exhaustive, the dependent variable must be nominal, and no expected frequency should be less than 1, and no more than 20% of the expected frequencies should be less than 5. The chi-square statistic is looked up in a table of critical values, and the statistic must be larger than the critical value to reject the null hypothesis. Chi square values range from 0 into the hundreds, and higher numbers show increasing independence of the variables. Two related samples tests These tests are used in which measurements on one group are taken at two different times, or two samples are drawn, and members are individually matched on some attribute. Dependent samples t test (Parametric) The dependent samples t test is also known as the correlated, paired, or matched t test. The dependent samples t test is used to determine whether the results of one measure differ significantly from another measure. The dependent variable must be interval or ratio. Results fit the t distribution, and the statistic must be larger than the critical value to reject the null hypothesis. Significant t values range from about 1.96 and run into the hundreds, with higher numbers being increasingly significant. Wilcoxon matched-pairs signed ranks test (Nonparametric) The Wilcoxon matched-pairs signed ranks test is used to determine whether the results of one measure differ significantly from another measure. The dependent variable must be ordinal (interval or ratio differences must be converted to ranks). The test statistic is called T. (This is not the same as the t test.) The statistic must be looked up in a table to find level of significance. The values of T range from 0 to 100. Unlike most tests, T must be less than or equal to the critical value to reject the null hypothesis. McNemar change test (Nonparametric) The McNemar change test is used to test whether a change in a pre- post- design is significant. The dependent measure must be nominal, (e.g. improved-not improved, increaseddecreased), and no expected frequency within a category should be less than 5. Although the calculation requires a correction factor (the Yates correction), the result produces a Chi-square statistic, which must be found in a table to find significance, and the statistic must be larger than the critical value to reject the null hypothesis. Two Independent Samples Tests Independent samples (group) t test (Parametric) The independent samples t test is used to determine whether the results of one experimental condition are independent from another experimental condition. The dependent measure must be interval or ratio, the samples must be drawn from populations whose variances are equal, and the sample must be of the same size. Results fit the t distribution, and the statistic must be larger than the critical value to reject the null hypothesis. Wilcoxon/Mann-Whitney test (Nonparametric) The Wilcoxon/Mann-Whitney test is used to determine whether the results of one experimental condition are independent from another experimental condition. The dependent measures must be ordinal (interval or ratio scores must be converted to ranks). The test statistic is U, which must be less than or equal to the critical value to reject the null hypothesis. The critical value is found in the table of critical values for the Wilcoxon rank-sum test. The U statistic can range from 0 into the hundreds, depending on how many data points are in the test. Some authors show a method for calculating a z value, which is then looked up in a table of the z distribution. Chi-square test of independence (2 x k) (Nonparametric) The Chi-square test of independence is used to determine the likelihood that a perceived relationship between categories could have come from a population in which no such relationship existed. The categories must be mutually exclusive, the dependent measure must be nominal, no expected frequency should be less than 1, and no more than 20% of the expected frequencies should be less than 5. The chi-square statistic is looked up in a table of critical values, and the statistic must be larger than the critical value to reject the null hypothesis. Three or more independent samples tests One-way analysis of variance (ANOVA) (Parametric) The one-way analysis of variance (ANOVA) is used to determine whether differences among three or more groups are significant. The dependent measure must be interval or ratio, the samples must be drawn from populations whose variances are equal, and the samples must be of the same size. ANOVA produces an F statistic, which is compared to F statistics in a table of critical values. To find the proper critical value in the table, one must know the degrees of freedom associated with the numerator and the denominator. The F statistic can range from 1 to about 34, and the statistic must be larger than the critical value to reject the null hypothesis. Kruskal-Wallis test (Nonparametric) The Kruskal-Wallis test is used to determine whether differences among three or more groups are significant in situations that do not meet the assumptions necessary for ANOVA. The dependent measure must be ordinal (interval or ratio scores must be converted to ranks). The Kruskal-Wallis test is a screening test. If it reveals a significant difference, individual pairs are evaluated with the Wilcoxon/Mann-Whitney test. The statistic for the Kruskal-Wallis test is H, which is approximately distributed like chisquare with degrees of freedom = k – 1. The H statistic must be larger than the critical value to reject the null hypothesis. Chi-square test for independence (k x k) (Nonparametric) The (k x k) Chi-square test of independence is the same as the (2 x k) Chi-square test of independence. Recommended Resources Cohen, B.C. (2001). Explaining psychological statistics (2nd ed.). New York: Wiley. Pyrczak, F. (2001). Making sense of statistics: A conceptual overview (2nd ed.). Los Angeles: Pyrczak. Stocks, J.T. (2001). Statistics for Social Workers. In B.A. Thyer (Ed.), The handbook of social work research methods (pp 81 – 129). Thousand Oaks: Sage. Urdan, T.C. (2001). Statistics in plain English. Mahwah, NJ: Erlbaum.