

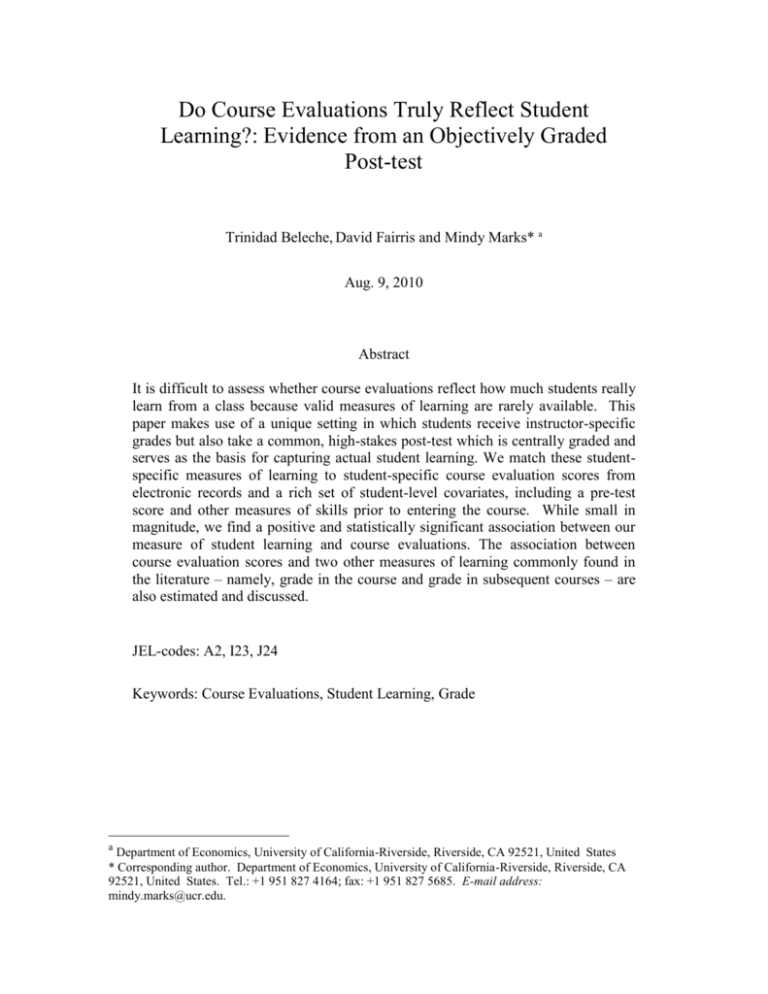

Do Course Evaluations Truly Reflect Student Learning?: Evidence from an Objectively Graded Post-test

Trinidad Beleche, David Fairris and Mindy Marks* a

Aug. 9, 2010

Abstract

It is difficult to assess whether course evaluations reflect how much students really learn from a class because valid measures of learning are rarely available. This paper makes use of a unique setting in which students receive instructor-specific grades but also take a common, high-stakes post-test which is centrally graded and serves as the basis for capturing actual student learning. We match these student-specific measures of learning to student-specific course evaluation scores from electronic records and a rich set of student-level covariates, including a pre-test score and other measures of skills prior to entering the course. We find a positive and statistically significant, though small, association between our measure of student learning and course evaluations. The associations between course evaluation scores and two other measures of learning commonly found in the literature, namely grade in the course and grade in subsequent courses, are also estimated and discussed.

JEL-codes: A2, I23, J24

Keywords: Course Evaluations, Student Learning, Grade

a Department of Economics, University of California-Riverside, Riverside, CA 92521, United States

* Corresponding author. Department of Economics, University of California-Riverside, Riverside, CA 92521, United States. Tel.: +1 951 827 4164; fax: +1 951 827 5685. E-mail address: mindy.marks@ucr.edu.

1. Introduction

Student evaluations of courses are the most common evaluation tool in higher education today. Scores on course evaluation forms are used by many university stakeholders as a measure of the transmission of knowledge in a course.
Since course evaluations came into widespread use in higher education in the 1960s and 1970s, there has been a flurry of papers investigating the relationship between evaluation scores and various measures of student learning (see Cashin (1988; 1995), Cohen (1981), Dowell and Neal (1982), and Clayson (2009) for comprehensive reviews). A recent review (Clayson, 2009) concludes that no general consensus has been reached about the validity of this relationship, largely because of the challenge associated with obtaining valid measures of student learning.

Many researchers have viewed a positive correlation between student grades and scores on student course evaluations as evidence of greater knowledge transmission, assuming that grades reflect learning. However, it is not clear that higher course grades necessarily reflect more learning. The positive association between grades and course evaluations may also reflect initial student ability and preferences, instructor grading leniency, or even a favorable meeting time, all of which may translate into higher grades and greater student satisfaction with the course, but perhaps not greater learning. What is required is a measure of learning that is independent of instructor-assigned grades.

Grades in subsequent courses have been used by some researchers as an alternative measure of student learning (see Carrell and West (2008), Ewing and Koching (2010), Johnson (2003), Weinberg, Fleisher & Hashimoto (2009), and Yunker and Yunker (2003)). While the evidence from these studies is mixed, they often find a negative association between grade in the subsequent class and the quality of the preceding course as proxied by a summary measure of the preceding instructors’ course evaluations. On the assumption that future grades are a valid measure of learning in the prior class, this line of research suggests that course evaluations are at best weakly correlated with the underlying learning experience of a class.
However, while grade in a subsequent class is arguably a better proxy for learning than grade in the current class, if subsequent classes do not build on knowledge conveyed in the initial class, the observed relationship between course evaluations and this measure of learning may be very weak. Additionally, results that use subsequent class grade as a measure of learning are likely to suffer from selection concerns since not all students enroll in a subsequent class. Hence, an objective measure of student learning in the specific course under evaluation remains an important goal for a careful analysis of this issue.

In addition to the absence of an objective measure of student learning, the course evaluation literature faces a number of difficulties regarding empirical methodology. For example, due to the anonymous nature of course evaluations, student evaluations are typically available to researchers only as course means, and only for that subset of students who choose to fill out evaluations. Measures of learning, by contrast, are available at the individual student level, and for all students in the course. This may pose a serious measurement problem: if researchers cannot identify which particular students complete evaluations, evaluation scores and learning outcomes are necessarily derived from different and potentially dissimilar segments of the class population (Dowell and Neal, 1982). This does not pose a problem if we can assume that evaluation scores for the subset of students who fill out evaluation forms reflect the average experience of the class as a whole, but there is ample research calling this assumption into question (Palmer, 1978; Clayson, 2007; Kherfi, 2009). Data which allow the researcher to link individual evaluation scores with individual measures of student learning would overcome this potential measurement problem.
This paper tests the relationship between student course evaluations and an objective measure of student learning. The objective measure of learning derives from a unique setting in which all students must take a high-stakes post-test in a remedial course that is graded by people other than the faculty teaching the sections. This measure of learning reflects concepts that relate directly to the course, as opposed to subsequent courses, but unlike course grade it is not issued by the instructor. Our data contain other attractive features which allow us to address some of the empirical methodological problems found in the previous literature. We possess electronic course evaluations that make it possible for us to match the student’s evaluation response to information contained in official student records, thereby eliminating the measurement problem cited above. Thus, for each student we know their demographic information, their score on a pre-test that is conducted for placement purposes, their scores on various elements of the course evaluation, their instructor-assigned grade, their score on the post-test, and their course grade.1 Furthermore, this learning measure is available for all students who complete the initial class as opposed to the subsample of students who continue on to a subsequent class.

1 Carrell and West (2008) is the only other paper of which we are aware that employs an independently graded, common post-test as a measure of learning. They find that classes in the US Air Force Academy with higher than average course evaluations have students who perform better on the post-test. However, their study is limited to comparing learning for the entire class to an average evaluation score that reflects the subsample of students who complete evaluations. There is evidence that students who fill out evaluations are non-representative in terms of academic ability. Since we possess individual-level data on course evaluations we are able to overcome this problem.

The results of our analysis reveal a weak but positive and statistically significant relationship between an objective measure of student learning and student course evaluation scores. This finding holds when we control for student-specific demographic characteristics, ability, and pre-test scores. Additionally, our findings are robust to the inclusion of instructor and section fixed effects. A one standard deviation increase in learning is associated with a 0.05 to 0.07 increase in course evaluation scores on a five-point scale, whereas a one standard deviation increase in course grade leads to a 0.09 to 0.12 increase in course evaluations. This suggests that the observed relationship between grades and course evaluations reflects something more than mere learning. When we use grades in the subsequent class as a measure of learning, we find a negative and always statistically insignificant relationship between course evaluations and this proxy for learning. This result calls into question the use of performance in future classes as an accurate measure of student learning.

2. Institutional Description and Data

The four-year public university from which we draw our data has in place an entry-level skills requirement in one of the core disciplines. Students can fulfill this requirement by obtaining a sufficiently high score on either one component of the SAT or an appropriate advanced placement test.2 Students who do not meet the above requirements must sit for a placement exam; we use the numerical score on this test as a pre-test measure. All of the placement exams for entering freshmen from every campus of the state university system are graded centrally in the spring of their senior year of high school.3 Students who fail to achieve a score above the predetermined threshold are required to take and pass a remedial course.
Students normally enroll in the remedial class during the fall of their freshman year. In practice, this requirement must be met by the fall of their second year or students cannot continue at the university. Students may repeat the class, and so the same student can appear multiple times in our sample.

2 Students may also fulfill this requirement by passing a designated course at an accredited community college prior to entering the university.

3 Exam scores range from 2 to 12 with a grade of 6 or less considered failing, and a grade of 8 considered passing. If the student receives a 7, then an additional grader’s score is used. The mean student in our sample has a score of 5.4.

The data in this paper come from 1106 students in 97 sections of the remedial program for students who enrolled in the remedial class in the academic years 2007, 2008 and Fall 2009. The remedial sections are taught by instructors who are supervised by a senior professor at the university. Each section is a standalone class with around 20 students. It is common for an instructor to teach multiple sections. The average instructor in our sample has taught 2.41 sections over the course of seven quarters. All sections use the same text, a regulated number of assignments and the same point system for grading. However, individual instructors assign grades and have control over the content of the sections they teach, including supplementary materials and the nature of assignments and exams. The instructors have discretion over 700 points available in each section. Although the classes are somewhat standardized and the students are more or less homogeneous across sections, we observe large variability in section grades. There is more than a two-letter grade difference between the mean grade in the sections with the highest and lowest average grades. All students take a common post-test that is worth 300 points.
The students need 730 points out of a total of 1000 to move on to the next course in a required series of classes. The overall grade (lecture points plus post-test score) is not curved (i.e., 800 points is a B- regardless of section or instructor). The post-test is identical in structure to the pre-test, a fact of which the students are aware. We note that the post-test is modeled on the system-wide placement test, which was designed by a team of experts to capture the subject’s fundamental concepts. Additionally, part of the section grade consists of exercises that replicate the post-test. The post-test appears to be a valid instrument inasmuch as further analysis reveals that students with stronger academic backgrounds perform better on the exam.

The post-test is administered to all students at the same time and place, and after the deadline for submission of course evaluations. This test is graded by a committee according to a set diagnostic standard. There is a norming session, followed by mentored grading; many of the instructors doing this grading also participated in the grading of the original pre-test. We will use the post-test score conditioned on initial student ability as our measure of learning.4

4 A student had to have taken the post-test to be included in our sample; thus we drop 185 students who did not take the post-test.

During the time of our study, the university was phasing in electronic evaluations.5 Thus, during much of the period instructors could choose between electronic evaluations and paper evaluations. The sample we analyze in this paper is restricted to students whose instructors used electronic evaluations. To the best of our knowledge this is the first study to make use of electronic course evaluations. We focus on electronic evaluations because there is an electronic record, which allows us to match a given evaluation to the corresponding institutional data for that student.
Thus, we limit our analysis to the subsample of students for whom we can observe both learning and course evaluations. Our data do not contain student identifiers, and so the anonymity of the evaluation process remains intact.

Column 1 of Table 1 shows summary statistics for those instructors who opted for traditional paper evaluations, while Column 2 shows summary statistics for those instructors who chose electronic evaluations. Column 3 contains tests for differences in the means between these two samples. The test statistics in Column 3 show that instructors with academically stronger students as measured by their SAT Verbal, ACT scores, high school GPA, and class grades were more likely to use electronic evaluations.6 However, the comparison also reveals that sections with electronic course evaluations are generally representative of the overall remedial population with respect to student demographic characteristics and pre-test scores.

5 The phase-in was completed by Fall 2009, when the only mechanism for course evaluation was electronic.

6 This could be because weaker students who do not regularly attend class have the opportunity to complete an electronic evaluation whereas such students must show up in class to complete a paper evaluation. If instructors fear that weaker students (or those with low expected grades) give poorer evaluations then paper evaluations are a safer strategy.

Since we are interested in the relationship between student-level learning and course evaluations, in addition to having an instructor who opted for electronic course evaluations (the sample in Column 2), a student had to have completed an electronic course evaluation to be included in our sample. For the instructors who opted for electronic course evaluations, about 68% of the students completed an evaluation form. Information about this sample is contained in Column 5 of Table 1.
This is the sample used in this paper, and it contains 1106 students and 33 instructors in 97 sections. Sixty-two percent of the sample is first-generation college-bound, and 58 percent of the sample is female. The average high school GPA is 3.42, and the average student earned a C+ on the section component and a C+ on the post-test.

Column 6 tests whether there are differences between the sample of students used in this study (those who complete electronic course evaluations (Column 5)) and students who do not complete evaluations (Column 4). The students who complete course evaluations are not representative of the class overall. Female and low-income students are more likely to complete course evaluations, as are students with higher high school grade point averages. Students who performed better on the SATs are less likely to complete electronic evaluations. Importantly, students who complete evaluations earn significantly higher section grades and perform slightly better on our measure of learning (Post-test 300). The difference in characteristics and outcomes between students who complete evaluations and those who do not suggests that aggregate evaluation scores (based on the subsample of students who complete evaluations) may not accurately reflect the average learning experience of the entire class. Thus, studies that match aggregate evaluation scores to aggregate measures of learning (such as average grade) for the class as a whole likely suffer from measurement error.7

Table 2 contains information about the evaluation instrument as well as the descriptive statistics for each of the questions on the instrument. Each evaluation score ranges from 1 (strongly disagree) to 5 (strongly agree). The student may also answer “N/A” to a given question. Table 2 also shows the number of completed responses for each question after we exclude the “N/A”s from our sample.
Since we are interested in capturing the amount of learning in the course we will focus on the final question, “The course overall as a learning experience was excellent” [C6].8 The mean response to this question is a 4.42, but there is high variability.

7 Additionally, it should be noted that the estimates in this paper reflect the relationship between learning and course evaluations for the non-random subsample of students who complete course evaluations. Caution should be exercised when mapping these results to the entire student population, as students who complete course evaluations differ from those who do not.

8 Results are robust to using “Instructor was effective as a teacher overall” [B8].

3. Empirical Methodology

A common model in the literature takes average grade (or expected grade) as a measure of learning and relates it to average course evaluation score for an instructor in a particular section of a course. This model often includes section level covariates and may include observable characteristics of the instructor, for example, age, gender, or foreign-born status. We can estimate a variant of this model (Equation (1)) by utilizing student-level as opposed to section-level information and a richer set of covariates than is typically found in the literature.

$$Eval_{ijs} = \alpha\, Grade_{ijs} + X'_{ijs}\beta_1 + \varepsilon_{ijs} \qquad (1)$$

In our Equation (1), $Eval_{ijs}$ denotes the evaluation score given by student i to instructor j in section s. $X'_{ijs}$ includes student background traits such as age, and indicators for gender, ethnicity, living on campus, first generation and low income. Also included in $X'_{ijs}$ are the following section-specific controls: term, enrollment, course evaluation response rate, the withdrawal rate and percent of students repeating the class. We will also include the following student ability measures: high school GPA, SAT or ACT scores, pre-test scores, and indicators for missing SAT, ACT or pre-test scores.
$Grade_{ijs}$ denotes the lecture points earned by student i enrolled under instructor j in section s. $Grade_{ijs}$ is the portion of student i’s grade that the instructor has control over, and it is normalized to z-scores. The z-score is normed so that zero reflects the mean lecture points earned by students who completed an electronic course evaluation. In this model, $\alpha$ is the parameter of interest.

Given our previous concerns that section grades may reflect more than merely learning in the course, in Equation (2) we replace $Grade_{ijs}$ with a variable which we feel better captures student learning, namely, $Posttest_{ijs}$. This variable is the z-score of the points earned by student i on the common post-test.

$$Eval_{ijs} = \delta\, Posttest_{ijs} + X'_{ijs}\beta_2 + u_{ijs} \qquad (2)$$

Recall that the instructor does not administer or grade the post-test; thus, $Posttest_{ijs}$ is an independent, objective measure of learning. $X'_{ijs}$ is the same vector of covariates as in Equation (1). Note that controlling for pre-test scores allows us to net out the learning accumulated by students; the pre-test score is a measure of skills upon entry to, and the post-test score is a measure of skills upon exit from, the class. The estimated coefficient $\delta$ captures the change in student evaluation scores for a one-standard deviation change in our measure of learning, holding all other measurable factors constant. For comparison to the literature, we will also estimate Equation (2) using grade in the required subsequent course as a proxy for learning.

We can employ instructor fixed effects in these models as well. There is ample evidence that instructor attributes can influence course evaluations (Felton, Koper, Mitchell & Stinson, 2006; Fischer, 2006; McPherson, 2006). The instructor fixed effects control for unmeasured instructor characteristics that may be correlated with both the proxies for learning and $Eval_{ijs}$, and which thereby lead to biased estimates.
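As a minimal sketch of the estimation idea in Equations (1) and (2) and of the instructor fixed-effects variant, the Python below standardizes a score to z-scores, fits a one-regressor least-squares slope, and then repeats the fit after subtracting instructor means (the usual within transformation). Everything here is an illustrative assumption rather than the authors' code: the toy data are made up, the covariate vector X is omitted for brevity, the function names `z_scores`, `ols_slope` and `demean_by_group` are hypothetical, and the population standard deviation is used for the z-score, which the text does not specify.

```python
from statistics import mean, pstdev


def z_scores(values):
    """Standardize values to mean 0 and (population) standard deviation 1."""
    m, s = mean(values), pstdev(values)
    return [(v - m) / s for v in values]


def ols_slope(x, y):
    """Slope from a least-squares fit of y on a single regressor x plus an intercept."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    return sxy / sxx


def demean_by_group(values, groups):
    """Subtract each group's mean from its members (the within transformation)."""
    by_group = {}
    for v, g in zip(values, groups):
        by_group.setdefault(g, []).append(v)
    group_mean = {g: mean(vs) for g, vs in by_group.items()}
    return [v - group_mean[g] for v, g in zip(values, groups)]


# Hypothetical toy data (not from the paper): post-test points out of 300,
# evaluation scores on the 1-5 scale, and an instructor label per student.
posttest = [180, 210, 240, 200, 230, 260]
evals = [3, 4, 5, 4, 4, 5]
instructor = ["a", "a", "a", "b", "b", "b"]

z = z_scores(posttest)

# Pooled slope: change in evaluation score per one-standard-deviation change in post-test.
pooled = ols_slope(z, evals)

# Instructor fixed effects: the same slope after removing instructor means from both variables.
within = ols_slope(demean_by_group(z, instructor), demean_by_group(evals, instructor))

print(f"pooled slope: {pooled:.3f}, within-instructor slope: {within:.3f}")
```

With covariates, the same within transformation would be applied to every regressor before fitting; the single-regressor case is shown only to keep the sketch short.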
In these specifications, the coefficient of interest captures the average within-instructor impact of student learning on student course evaluations.

Finally, since we have student-specific evaluation data, we can also control for additional omitted factors by including section fixed effects (which subsume instructor fixed effects). While numerous papers have employed instructor fixed effects, to the best of our knowledge no paper has been able to include section fixed effects. The section fixed effects control for any additional section-level characteristics that may bias the previous estimates.9 For instance, early morning sections might be associated with both lower course evaluations and reduced student learning, leading to a positive bias in the absence of explicit controls for the timing of sections. One can imagine other uncaptured section-specific features (like a class clown) leading to negative bias in the estimated effects; thus, the overall direction of the bias is unclear. In these specifications, the coefficient of interest captures the average within-section impact of student learning on student course evaluations.

4. Results

Table 3 presents regression results where the dependent variable is the numerical score on the specific question of the evaluation asking whether “the course overall as a learning experience was excellent.” Column 1 shows the estimated impact of instructor-assigned grade on the course evaluation score, with a rich set of student demographic and section-level controls. Students who receive higher section grades give significantly higher course evaluations. More specifically, a one-standard deviation increase in section grade translates into a 0.11 point increase in the course evaluation score. Column 2 adds student ability measures, including the student’s pre-test scores. This allows us to eliminate potential bias due to differences in students’ starting skills.
Including student ability controls does not alter the relationship between section grade and course evaluations. In this regression, as for most of the regression results that follow, the only control variables that are statistically significant are an indicator for Asian, which enters negatively, and the section response rate, which is positive. There is never a statistically significant relationship between gender, first generation college student, or student ability measures and course evaluation scores.

9 We have estimated Equations (1) and (2) using ordered probits as well. However, we are concerned about the reliability of the standard errors when section fixed effects are included in this analysis. The results under the ordered probit model lead to a similar conclusion in that both coefficients of interest (Grade and Posttest) are positive and statistically significant. The evaluation scores are also concentrated toward the upper end of the distribution, with only 12 percent of the students giving a score of three or less. We have also estimated all models with an indicator variable that takes a value of one if the student gave the highest evaluation score and zero otherwise. The overall pattern of results is unchanged under this specification of the dependent variable.

Column 3 adds instructor fixed effects to the specification in Column 2. The inclusion of instructor fixed effects controls for any time-invariant instructor traits (such as grading standards or language skills) that may impact both section grade and course evaluations. Adding a full set of instructor fixed effects slightly reduces the magnitude of the estimated coefficient but does not affect its statistical significance. These results are consistent with instructor grading standards biasing slightly upward the observed relationship between learning and course evaluations. Column 4 adds section fixed effects thereby subsuming the instructor fixed effects.
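One way to see why section fixed effects subsume instructor fixed effects is that each section has a single instructor, so subtracting section means also removes anything that is constant within an instructor. The sketch below illustrates this with made-up numbers; the section labels, effect sizes and the helper `demean_by_group` are hypothetical and are not taken from the paper's data.

```python
from statistics import mean


def demean_by_group(values, groups):
    """Subtract each group's mean from its members (the within transformation)."""
    by_group = {}
    for v, g in zip(values, groups):
        by_group.setdefault(g, []).append(v)
    group_mean = {g: mean(vs) for g, vs in by_group.items()}
    return [v - group_mean[g] for v, g in zip(values, groups)]


# Hypothetical layout: instructor A teaches sections s1 and s2, instructor B teaches s3.
section = ["s1", "s1", "s2", "s2", "s3", "s3"]
instructor_of = {"s1": "A", "s2": "A", "s3": "B"}
instructor = [instructor_of[s] for s in section]

# Made-up components of an outcome such as an evaluation score.
instructor_effect = {"A": 0.5, "B": -0.25}            # e.g. grading leniency
section_effect = {"s1": 0.25, "s2": -0.5, "s3": 0.0}  # e.g. meeting time
student_part = [0.5, -0.5, 1.0, -1.0, 0.25, -0.25]    # averages to zero in each section

outcome = [
    instructor_effect[i] + section_effect[s] + u
    for i, s, u in zip(instructor, section, student_part)
]

# Section demeaning strips out both the section and the instructor components.
within_section = demean_by_group(outcome, section)

# Instructor demeaning leaves the section component in the data.
within_instructor = demean_by_group(outcome, instructor)

print(within_section)
print(within_instructor)
```

In this toy example the section-demeaned outcome equals the student-level component exactly, while the instructor-demeaned outcome still carries the section-level differences.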
The section fixed effects control for any additional section-level characteristics (such as time of day the class meets or peer effects) that may affect the previous estimates. In essence these regressions ask, “Within a given section do students who receive a higher section grade give higher course evaluations?” Including the section fixed effects further reduces the relationship between section grade and course evaluations. However, even after controlling for these additional factors a robust and statistically significant relationship persists.

Overall, the results in Columns (1-4) confirm what is found in the existing literature, namely, that there exists a strong and positive relationship between measures of course grades and course evaluations. An increase of one standard deviation in the section grade (almost one full letter grade) increases the expected course evaluation score by about 1/7 of a standard deviation, and each additional full letter grade received in the course is associated with a 0.09 to 0.12 increase in the average course evaluation score.

There are obvious concerns associated with using student grades as a proxy for learning. Instructors who present more challenging material in a course may impart more knowledge to students, but students may also receive below average grades because of the difficulty of the material. Additionally, course grades arguably reflect more than just learning; they also capture elements of effort such as class attendance and the follow through to complete assignments.

In Columns (5-8) of Table 3 we replicate our earlier regression results, but with an independent measure of learning instead of course grade as the explanatory variable of interest. In these regressions, we use grade on the common, independently graded post-test (measured in z-scores) as a measure of learning. Column 5 shows the association between scores on the post-test and course evaluations with student demographics and section control variables only.
The association between performance on the post-test and instructor evaluation scores is positive but only weakly statistically significant.

The Column 6 results add controls for students’ initial abilities, including pre-test scores, which render the coefficient of interest a measure of the impact of net learning on course evaluation scores. This adjustment strengthens our findings in that the estimated coefficient becomes slightly larger in magnitude and is statistically significant at the 5 percent level. The results suggest that models focusing on levels rather than gains in learning are misspecified. A one-standard-deviation increase in net learning translates into a 0.06 point increase in course evaluation score. Note that the point estimates are about half the size of those in Column 2 where section grade is used as a proxy for learning. Thus, a sizeable portion of the relationship between grade and course evaluations reflects something other than performance on the comprehensive post-test.

In Column 7 and Column 8 we add instructor and section fixed effects, respectively, and the qualitative magnitude of the estimated effect is unchanged, which indicates that there are not important instructor-level or section-level omitted factors that bias our estimate of the relationship between learning and course evaluations. The results in Column 8 imply that if two students in the same section are otherwise identical, the student who acquires more knowledge will, on average, give a higher course evaluation score.

While these results suggest that it is possible to infer information about knowledge acquired from course evaluation results, a certain amount of caution is required because the estimated relationships are very small. A one standard deviation increase in learning (as measured by the post-test) is associated with less than one-tenth of a standard deviation increase in course evaluation.
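As a rough back-of-envelope check on these magnitudes (an illustration, not a calculation reported in the paper), the standard deviation of the evaluation score can be backed out from the earlier statement that a one-standard-deviation increase in section grade raises the evaluation score by about 1/7 of a standard deviation. Assuming that statement refers to the Column 1 estimate of 0.11 points, and writing $\sigma_{Eval}$ (a symbol introduced here only for illustration) for that standard deviation:

$$\sigma_{Eval} \approx 7 \times 0.11 = 0.77, \qquad \frac{0.06}{\sigma_{Eval}} \approx \frac{0.06}{0.77} \approx 0.08 < 0.10$$

Under that assumption, the Column 6 estimate of 0.06 points is roughly 0.08 of a standard deviation, which is consistent with an effect of less than one-tenth of a standard deviation.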
The results in Table 3 reveal a statistically significant relationship between grade in class and course evaluation scores, and between learning and evaluation scores as well. However, the estimated magnitude of the relationship between section grades and course evaluations is almost double that of the estimated relationship between learning and course evaluations. Arguably, grades capture something about a course and its value to students more than the direct learning it imparts.10 A common story in the literature is that the observed association between grades and evaluations reflects in part the fact that students reward instructors who are easy graders or who demand less work (see, for example, Aigner and Thum (1986), Babcock (forthcoming), Greenwald and Gilmore (1997), and Isely and Singh (2005)). However, the change in the quantitative impact going from the results in Column 2 to those in Column 3 of Table 3 indicates that surprisingly little of the estimated cross-instructor relationship between grades and evaluation scores is accounted for by time-invariant instructor characteristics. This finding suggests the need for a more nuanced explanation for why students translate high grades into high course evaluation scores, and one that rests on students doing so within a class far more than across classes.

10 When both current grade and our measure of learning are included in the model, the effect of current grade continues to dominate. In our preferred specification we find that both coefficients remain positive and statistically significant but decrease slightly in magnitude (see Column 9 of Table 3). The interpretation of this regression is unclear, however, owing to the multicollinearity induced by the fact that grades and learning are significantly interrelated. We note that learning remains statistically significant even after conditioning on current grade.

Clayson (2009) cites evidence that students are poor judges of their own knowledge. Perhaps students interpret a relatively high class grade, especially when awarded in comparison to others in a specific class, as a valid measure of learning and acknowledge this “learning” on the course evaluation form. This explanation is, of course, pure speculation at this point, but it does not take away from our conclusion that course evaluations do indeed capture student learning.

In Table 4, we invoke “grade in the subsequent class” as a proxy for learning, and explore the relationship between this measure of learning and course evaluation scores. Although in this particular case the subsequent course is required for graduation, subsequent course grades are available for only 919 of the students in our sample.11 The smaller sample size is due to the fact that students separate from the university before moving on to the next class in the series, or they postpone taking the subsequent class beyond the latest period for which we have data. We find that students who continue on to the required subsequent class are academically stronger in terms of standardized tests and class performance.

11 The sample includes students who failed and retook the initial class before moving on to the subsequent class. For these students their most recent course evaluation score was used. However, we find similar results if these students are excluded from the analysis.

Columns (1-4) of Table 4 replicate the results from Table 3 (Columns 5-8) with post-test as the measure of learning for the subsample of students who continue on to the subsequent class. The results mirror those of the full sample: there is a positive and statistically significant relationship between learning, as measured by performance on the post-test, and course evaluations. The regression results with grade in the subsequent class as a measure of learning can be found in Columns (5-8) of Table 4. The results show a slight negative, albeit statistically
insignificant, relationship between learning as proxied by subsequent course grade and course evaluation scores. That is, a student’s performance in the subsequent class is, if anything, negatively related to how highly he or she evaluated the preceding course. These findings are similar to those of other researchers who have used grade in subsequent class as a measure of learning.12 Given the validity of our preferred measure of learning, the results in Table 4 suggest that grade in subsequent courses is a poor proxy for learning in the current class. If the subsequent class emphasizes different material, one might expect little correlation between the learning in the preceding course and performance in the subsequent course.

5. Robustness Checks

In this section, we conduct a series of robustness checks on the specification in Table 3. We begin by limiting the sample to the 891 students who took the course in the fall of their freshman year. More advanced freshmen might select into course sections based on knowledge of instructor or peer characteristics. For instance, if groups of hard-working students enroll in a class section together, this could induce an artificial relationship between learning and instructor evaluation if conscientious students give higher evaluations. The results for this sample are shown in Panel A of Table 5. The coefficient estimates are very similar to those in Table 3, pointing to limited concerns about selection. However, statistical significance is weakened, arguably due to the reduced sample size.

12 Carrell and West (2008), in a study of Air Force Academy cadets, and Johnson (2003), focusing on Duke students, find that course evaluations are insignificant (and often negative) predictors of follow-on student achievement.
Weinberg et al. (2009) have data on economics students at the Ohio State University and show that grades in subsequent classes have a positive impact on course evaluations in the preceding class, but a statistically insignificant impact once current grades are controlled for. Yunker and Yunker (2003) focus on accounting students and find a statistically significant negative association between introductory courses with high evaluations and performance in the subsequent class.

As an additional robustness check we limit the sample to students who had an incentive to perform well on the post-test. By university regulations, students must pass this class in their first year. The 183 students with very high scores (above 600 points) on the section portion of the grade may be somewhat unconcerned about their performance on the post-test; they are almost guaranteed a passing grade in the class as they only need 130 points out of 300 on their post-test in order to pass the course. We also dropped 22 students with very low scores (less than 430 points out of a total of 700 points) in the classroom portion of the total grade. These students cannot earn enough points on the post-test to pass the class. By focusing on students who have a greater incentive to take the post-test seriously we reduce the measurement error associated with the use of the post-test as a measure of learning.

The results from this subsample are shown in Panel B of Table 5. The coefficient estimates on the variable that captures learning are slightly larger, consistent with measurement error causing attenuation bias. For instance, in the specification that includes the full set of fixed effects (Column 4), a one standard deviation increase in performance on the post-test is associated with a 0.07 higher score on the evaluation instrument. This compares to a 0.06 higher score for the full sample (in Table 3).

6.
Which Evaluation Questions Best Capture Learning

Given that institutions are often limited to course evaluations as the primary measure of course quality, it is worth investigating which elements, if any, of a typical course evaluation form provide the most information about the learning acquired in a class. Because the university is concerned about the knowledge gain for all students, not just those who fill out evaluations, for this analysis we include all students in sections with electronic evaluations, irrespective of whether or not the student completed an evaluation.13 For all students who took the post-test, we estimate the following regression to generate a measure of learning in a given section.

$$\text{Posttest}_{ij} = \sum_{j=1}^{82} \lambda_j + X_{ij}'\beta + \varepsilon_{ij} \qquad (3)$$

$\text{Posttest}_{ij}$ is the numerical score on the post-test for student $i$ in section $j$, $\lambda_j$ is a set of section fixed effects, and $X_{ij}'$ is a vector of student characteristics and ability measures. This regression generates 82 coefficients, $\lambda_j$, $j = 1, \ldots, 82$, each one representing the average gain on the post-test acquired in each section. We refer to them as learning measures.14 The estimated $\lambda$'s tell us, conditional on the initial ability and demographic characteristics of the students, in which sections students learn the most as reflected by their performance on the independently graded post-test.

13 We also conducted the analysis with only those students who filled out electronic evaluations. The overall pattern of results is similar.

14 Because we do not want the response of a few students to reflect learning for the entire class, we restrict our sample to sections with response rates greater than 45 percent in this analysis. When we include all sections, the correlations are systematically weaker. For instance, the correlation coefficient is 0.16 and 0.06 for elements C6 and B8, respectively. However, the relative rankings of the elements of the course evaluation in terms of information about estimated learning remain unchanged.

In Figure 1, we plot these estimated learning coefficients against the average course evaluation scores in each section using the question (C6) that refers to the quality of the overall class experience. The average student in the “best” section performed 25 points better on the post-test than the average student in the “worst” section. In those sections where more learning occurred, as measured by ability-adjusted performance on the post-test, students awarded the higher scores on question C6 (the correlation coefficient is 0.19). While this is true on average, one can clearly see from the figure that greater knowledge transmission does not translate into higher evaluations in every case. While there is an apparent relationship between low course evaluations (those with average scores below 4) and poor learning, the overall relationship between course learning and course evaluations is weak. Indeed, the section with the lowest course evaluation score witnessed similar amounts of estimated learning gains as the sections with the highest course evaluations. Thus, it would not be prudent to rely solely on course evaluations as a means of gauging student learning.

The correlation coefficients between the estimated post-test learning measures, $\lambda_j$, and mean section-level course evaluation scores for all the elements of the course evaluations are found in Table 6. In every case, there exists a positive relationship, suggesting that sections with high performance on the post-test do, on average, receive slightly higher course evaluations. The questions that reflect learning in the course, as opposed to characteristics of the instructor, seem to better reflect estimated post-test results. The questions that solicit information about the clarity of the instructor, the use of supplemental material and overall course experience are the strongest predictors of estimated learning gains.
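As a rough illustration of equation (3), the section effects (the lambda coefficients plotted in Figure 1) can be recovered with a within-section (demeaning) estimator, which gives the same coefficients as including one indicator per section. The sketch below is not the authors' code: it assumes a single ability control (the pre-test score), uses made-up data, and the names `section_learning_effects`, `rows`, `beta`, and `lambdas` are hypothetical.

```python
from collections import defaultdict


def section_learning_effects(rows):
    """Estimate section effects from rows of (section, post_test, pre_test).

    Model assumed for this sketch: post = lambda_section + beta * pre + error.
    Returns (beta, {section: lambda_hat}).
    """
    by_section = defaultdict(list)
    for section, post, pre in rows:
        by_section[section].append((post, pre))

    # Section means of the outcome and of the control.
    means = {}
    for section, obs in by_section.items():
        n = len(obs)
        means[section] = (sum(p for p, _ in obs) / n, sum(x for _, x in obs) / n)

    # Slope from within-section deviations (equivalent to using section dummies).
    sxy = sxx = 0.0
    for section, obs in by_section.items():
        mean_post, mean_pre = means[section]
        for post, pre in obs:
            sxy += (pre - mean_pre) * (post - mean_post)
            sxx += (pre - mean_pre) ** 2
    beta = sxy / sxx

    # Each section effect is the ability-adjusted mean post-test score.
    lambdas = {s: mp - beta * mx for s, (mp, mx) in means.items()}
    return beta, lambdas


# Made-up data: three sections, post-test constructed as lambda + 2 * pre-test.
rows = [
    ("A", 12, 1), ("A", 14, 2), ("A", 16, 3),
    ("B", 24, 2), ("B", 26, 3), ("B", 28, 4),
    ("C", 32, 1), ("C", 36, 3), ("C", 40, 5),
]
beta, lambdas = section_learning_effects(rows)
gap = max(lambdas.values()) - min(lambdas.values())  # "best" minus "worst" section
print(beta, lambdas, gap)
```

The `gap` value is the toy analogue of the 25-point difference reported between the “best” and “worst” sections. With more than one control, the same logic would apply to a multiple regression on within-section deviations.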
Elements of the course evaluation that reflect the syllabus, the relationship between the exams and the course material, and the instructor’s respect for students and helpfulness convey little information about how much learning was acquired in the course section.

7. Conclusion

This paper utilizes a measure of individual student learning not found thus far in the literature to explore the relationship between course evaluation scores and the amount of student learning. We have data from a unique setting in which students take a pre-test placement exam and a post-test exit exam which is common to all students and is graded by a team of instructors instead of the instructor of record for the course. We treat the score on this post-test as an independent measure of student learning, conditional on the pre-test score and other ability measures.

In addition to a new, more objective measure of student learning, we possess electronic course evaluations which are available at the individual student level as opposed to the section or class level. We show that students who choose to fill out course evaluations are not representative of the overall class as they tend to be academically stronger students. To overcome the potential econometric problems found in the larger literature which utilizes more aggregate level data, we investigate how an individual student’s learning maps to his or her course evaluation as opposed to mapping student-level learning to the average evaluation score for his or her class.

Our results reveal that course evaluations do indeed reflect student learning. In specifications that use the common post-test as a measure of learning, there is a consistently positive and statistically significant relationship between individual student learning and course evaluations. The relationship between learning and course evaluations is strengthened by ability controls and is robust to the inclusion of instructor and section fixed effects.
While the estimated relationship is positive and statistically significant, the quantitative association is in fact quite small, suggesting that it would be prudent for institutions wishing to capture the extent of knowledge transmission in the classroom to explore measures beyond student evaluations of the course.

In addition to an objective measure of learning, we possess, at the individual student level, grade in the course as assigned by the course instructor, which is one of the most common measures of learning found in the literature. When section grades are used as a proxy for learning, we find a quantitatively stronger relationship to course evaluation scores than is found for our more objective measure of learning. This suggests that, with regard to its impact on course evaluations, grade in the course reflects more than mere learning.

We also possess grade in a subsequent course, another measure of learning found in the literature. Our results indicate a negative, though statistically insignificant, relationship between course evaluations and subsequent course grade. This finding likely reflects the fact that grade in the subsequent class is a much weaker measure of learning than the post-test, which reflects cumulative learning in the current class.

Finally, we show that some questions on a typical course evaluation form better reflect student learning than do others. The elements that explicitly ask about learning or the clarity and effectiveness of the instructor are more strongly correlated with estimated learning gains for the class. Questions asking about the value of the class as a whole provide more information on learning than those that refer to instructor-student interactions.

Acknowledgements

We gratefully acknowledge Jorge Agüero, Philip Babcock, Marc Law, and participants of the 2010 Western International Economic Association meetings for their valuable comments.
Thanks also go to the director of the remedial program and Junelyn Peeples for assistance in collecting the data.

References

Aigner, D. J., & Thum, F. D. (1986). On Student Evaluation of Teaching Ability. Journal of Economic Education, 17(4), 243-265.

Babcock, P. (forthcoming). Real Costs of Nominal Grade Inflation? New Evidence from Student Course Evaluations. Economic Inquiry.

Bedard, K., & Kuhn, P. (2008). Where Class Size Really Matters: Class Size and Student Ratings of Instructor Effectiveness. Economics of Education Review, 27(3), 253-265.

Cashin, W. E. (1988). Student Ratings of Teaching: A Summary of the Research. IDEA Paper 20. Manhattan, KS: Kansas State University, Center for Faculty Evaluation and Development.

Cashin, W. E. (1995). Student Ratings of Teaching: The Research Revisited. IDEA Paper 32. Manhattan, KS: Kansas State University, Center for Faculty Evaluation and Development.

Carrell, S. E., & West, J. E. (2008). Does Professor Quality Matter? Evidence from Random Assignment of Students to Professors. Working Paper 14081. Cambridge, MA: National Bureau of Economic Research.

Clayson, D. E. (2007). Conceptual and Statistical Problems of Using Between-Class Data in Educational Research. Journal of Marketing Education, 29(1), 34-38.

Clayson, D. E. (2009). Student Evaluations of Teaching: Are They Related to What Students Learn?: A Meta-Analysis and Review of the Literature. Journal of Marketing Education, 31(1), 16-30.

Cohen, P. A. (1981). Student Ratings of Instruction and Student Achievement: A Meta-Analysis of Multi-Section Validity Studies. Review of Educational Research, 51(3), 281-309.

Dowell, D. A., & Neal, J. A. (1982). A Selective Review of the Validity of Student Ratings of Teaching. Journal of Higher Education, 53(1), 51-61.

Ewing, A., & Kochin, L. (2010). Learning, Grades, and Student Evaluations of Teaching in an Economics Course Sequence. Mimeo.

Felton, J., Koper, P. T., Mitchell, J. B., & Stinson, M. (2006). Attractiveness, Easiness, and Other Issues: Student Evaluations of Professors on RateMyProfessors.com. SSRN Working Paper.

Fischer, J. D. (2006). Implications of Recent Research on Student Evaluations of Teaching. The Montana Professor, 17: 11.

Greenwald, A. G., & Gillmore, G. M. (1997). Grading Leniency is a Removable Contaminant of Student Ratings. American Psychologist, 52(11), 1209-1217.

Isely, P., & Singh, H. (2005). Do Higher Grades Lead to Favorable Student Evaluations? Journal of Economic Education, 36(1), 29-42.

Johnson, V. E. (2003). Grade Inflation: A Crisis in College Education. New York: Springer.

Kherfi, S. (2009). Course Grade and Perceived Instructor Effectiveness When the Characteristics of Survey Respondents are Observable. American University, Sharjah Working Paper.

McPherson, M. A. (2006). Determinants of How Students Evaluate Teachers. Journal of Economic Education, 37(1), 3-20.

Palmer, J., Carliner, G., & Romer, T. (1978). Leniency, Learning and Evaluations. Journal of Educational Psychology, 70(5), 855-863.

Seldin, P. (1993). The Use and Abuse of Student Ratings of Professors. The Chronicle of Higher Education, 39(4), A40.

Weinberg, B. A., Fleisher, B. M., & Hashimoto, M. (2009). Evaluating Teaching in Higher Education. Journal of Economic Education, 40(3), 227-261.

Yunker, P. J., & Yunker, J. A. (2003). Are Student Evaluations of Teaching Valid? Evidence from an Analytical Business Core Course. Journal of Education for Business, 78(6), 313-317.

Figure 1. Estimated Learning and Evaluation Instrument. [C6] (Scatter plot with fitted values; horizontal axis: Estimated Learning (lambda), from -12 to 12; vertical axis from 3 to 5.)

Table 1. Descriptive Statistics.

Columns (4) to (6) refer to the sample in which faculty chose electronic evaluation (Eval). Entries are Mean (S.D.); columns (3) and (6) report t-test p-values.

| Variable | (1) Faculty Did Not Choose Eval | (2) Faculty Chose Eval | (3) t-test p-value | (4) Student Did Not Fill Out Eval | (5) Student Filled Out Eval | (6) t-test p-value |
|---|---|---|---|---|---|---|
| Lecture 700 | 540 (63.59) | 547 (56.43) | 0.000 | 535 (61.66) | 553 (52.83) | 0.000 |
| Post-test (300) | 224 (17.91) | 226 (16.54) | 0.000 | 225 (17.03) | 227 (16.27) | 0.018 |
| Total Points | 764 (69.58) | 773 (61.69) | 0.000 | 760 (66.33) | 780 (58.30) | 0.000 |
| Took Next Class | 0.85 (0.36) | 0.85 (0.36) | 0.790 | 0.82 (0.38) | 0.86 (0.35) | 0.053 |
| Subsequent Grade | 2.79 (0.76) | 2.81 (0.76) | 0.465 | 2.66 (0.86) | 2.88 (0.69) | 0.000 |
| Pre-Test | 5.44 (0.81) | 5.45 (0.85) | 0.635 | 5.47 (0.81) | 5.44 (0.86) | 0.6067 |
| SAT Verbal | 455 (71.12) | 462 (68.08) | 0.001 | 470 (66.21) | 458 (68.62) | 0.000 |
| SAT Writing | 460 (67.54) | 466 (65.88) | 0.006 | 470 (64.48) | 464 (66.48) | 0.078 |
| SAT Math | 508 (95.40) | 512 (96.96) | 0.215 | 527 (95.92) | 505 (96.74) | 0.000 |
| ACT | 19 (3.32) | 20 (3.44) | 0.001 | 20 (3.30) | 20 (3.50) | 0.585 |
| High School GPA | 3.35 (0.31) | 3.40 (0.31) | 0.000 | 3.35 (0.29) | 3.42 (0.32) | 0.000 |
| Female | 0.54 (0.50) | 0.54 (0.50) | 0.972 | 0.47 (0.50) | 0.58 (0.49) | 0.000 |
| African American | 0.07 (0.25) | 0.08 (0.27) | 0.225 | 0.08 (0.27) | 0.08 (0.27) | 0.699 |
| Hispanic | 0.36 (0.48) | 0.36 (0.48) | 0.710 | 0.33 (0.47) | 0.37 (0.48) | 0.130 |
| Asian | 0.45 (0.50) | 0.45 (0.50) | 0.934 | 0.46 (0.50) | 0.44 (0.50) | 0.538 |
| Low Income | 0.53 (0.50) | 0.51 (0.50) | 0.166 | 0.47 (0.50) | 0.52 (0.50) | 0.046 |
| First Generation | 0.61 (0.49) | 0.61 (0.49) | 0.991 | 0.58 (0.49) | 0.62 (0.48) | 0.074 |
| On Campus | 0.69 (0.46) | 0.67 (0.47) | 0.155 | 0.70 (0.46) | 0.66 (0.47) | 0.127 |
| Repeating Class | 0.15 (0.36) | 0.12 (0.32) | 0.001 | 0.16 (0.37) | 0.09 (0.29) | 0.000 |
| Section Enrollment | 20 (1.79) | 20 (1.59) | 0.005 | 20 (1.61) | 20 (1.57) | 0.001 |
| Number of Sections | 149 | 97 | | | | |
| Number of Instructors | 77 | 33 | | | | |
| Observations | 2766 | 1634 | | 528 | 1106 | |
| Unique Observations | 2682 | 1611 | | 519 | 1092 | |

Notes: Difference in means statistically significant at 5% level in bold. Standard deviations in parentheses. Eval refers to electronic evaluation. In the sample of students whose instructor did (not) choose electronic evaluations there are: 39(32) students with missing SAT Math scores, 994(1814) students with ACT scores. In the sample of students who did (not) fill out electronic evaluations there are: 27(12) students with missing SAT Math scores, and 646(348) students with ACT scores. Total Points is the sum of Lecture 700 and Post-test 300. Pre-test score ranges from 1 to 6. Low income is an indicator variable for parental income less than or equal to $30,000. First Generation implies that neither of the student’s parents completed university. On Campus refers to students who reside in campus housing. Unique observations refers to the number of students that did not retake the class.

Table 2. Descriptive Statistics about the Evaluation Instrument.

| Instrument | Evaluation Question | N | Mean | S.D. |
|---|---|---|---|---|
| B1 | Instructor was prepared and organized. | 1117 | 4.65 | (0.66) |
| B2 | Instructor used class time effectively. | 1114 | 4.64 | (0.71) |
| B3 | Instructor was clear and understandable. | 1116 | 4.65 | (0.67) |
| B4 | Instructor exhibited enthusiasm for subject and teaching. | 1118 | 4.64 | (0.73) |
| B5 | Instructor respected students; sensitive to and concerned with their progress. | 1116 | 4.65 | (0.73) |
| B6 | Instructor was available and helpful. | 1117 | 4.66 | (0.73) |
| B7 | Instructor was fair in evaluating students. | 1117 | 4.53 | (0.83) |
| B8 | Instructor was effective as a teacher overall. | 1116 | 4.61 | (0.73) |
| C1 | The syllabus clearly explained the structure of the courses. | 1114 | 4.61 | (0.71) |
| C2 | The exams reflected the materials covered during the course. | 1112 | 4.59 | (0.70) |
| C3 | The required readings contributed to my learning. | 1112 | 4.50 | (0.79) |
| C4 | The assignments contributed to my learning. | 1111 | 4.54 | (0.76) |
| C5 | Supplementary materials (e.g. films, slides, videos, guest lectures, web pages, etc.) were informative. | 1106 | 4.34 | (0.95) |
| C6 | The course overall as a learning experience was excellent. | 1106 | 4.42 | (0.84) |

Notes: Standard deviation in parentheses. Evaluation score ranges from 1 (strongly disagree) to 5 (strongly agree). “N/A” responses are excluded.

Table 3.
Determinants of “The course overall as a learning experience was excellent.” [C6]

Lecture 700a (1) (2) (3) (4) 0.113*** (0.030) 0.118*** (0.031) 0.104*** (0.037) 0.093** (0.038) Post-test 300a Student Abilityb Sectionc Characteristics Yes Fixed Effects Instructor Fixed Effects (5) 0.054* (0.027) Yes Yes Yes Yes Yes Yes (6) (7) (9) 0.064** (0.029) 0.062** (0.028) 0.065** (0.029) 0.084** (0.037) 0.055* (0.029) Yes Yes Yes Yes Yes Yes Yes Yes Yes Yes (8) Yes
Observations 1106 1106 1106 1106 1106 1106 1106 1106 1106
Adjusted R2 0.059 0.047 0.192 0.059 0.088 0.096 0.046 0.083 0.093

Notes: Robust standard errors in parentheses. * significant at 10%; ** significant at 5%; *** significant at 1%.
a. Lecture 700 and Post-test 300 represent z-scores of the lecture grade and post-test, respectively.
b. Student ability includes: cumulative high school GPA, placement score, SAT Verbal, SAT I Writing, ACT, and indicators for missing SAT, ACT or placement score.
c. Section characteristics include student’s age, and indicators for gender, ethnicity, living on campus, first generation, low income, term, enrollment, response rate, withdrawal rate, and percent of students repeating the class.

Table 4: Using Subsequent Grade as a Measure of Learning.a

Dependent variable: “The course overall as a learning experience was excellent.”

Post-test 300 (1) (2) (3) (4) 0.047* (0.030) 0.056* (0.031) 0.057* (0.031) 0.060** (0.032) Subsequent Gradeb Student Ability Section Characteristics Yes Fixed Effects Instructor Fixed Effects Observations Adjusted R2 919 0.050 Yes Yes Yes Yes (5) (6) (7) (8) -0.018 (0.037) -0.024 (0.037) -0.004 (0.037) -0.020 (0.039) Yes Yes Yes Yes Yes Yes Yes Yes Yes Yes 919 0.052 919 0.078 Yes 919 0.083 919 0.047 919 0.048 919 0.075 919 0.078

Notes: Robust standard errors in parentheses. * significant at 10%; ** significant at 5%; *** significant at 1%. See notes to Table 3 for variable descriptions and a list of control variables.
a. Sample is restricted to students who took the subsequent class.
b. Subsequent grade is the grade on a 4 point scale that the student received in the required subsequent class.

Table 5. Robustness Checks on the Determinants of “The course overall as a learning experience was excellent.” [C6]

(1) (2) (3) (4) 0.051* (0.029) 891 0.043 0.062** (0.031) 891 0.044 0.055* (0.030) 891 0.079 0.058* (0.031) 891 0.096 Panel A. Fall Onlya Post-test 300 Observations Adjusted R2 Panel B. Restricted to Students on the Margin of Passing the Classb Post-test 300 Observations Adjusted R2 Student Ability Section Characteristics Fixed Effects Instructor Fixed Effects 0.054* (0.032) 906 0.057 Yes 0.071** (0.034) 906 0.060 0.073** (0.034) 906 0.099 0.074** (0.035) 906 0.111 Yes Yes Yes Yes Yes Yes Yes

Notes: Robust standard errors in parentheses. * significant at 10%; ** significant at 5%; *** significant at 1%. The dependent variable is a numerical score on response to the question, “The course overall as a learning experience was excellent.” [C6] See notes to Table 3 for variable descriptions and a list of control variables.
a. Sample restricted to students who enrolled in the class in the fall term of their freshman year.
b. Sample restricted to students whose lecture score was between 430 and 600.

Table 6. Validity of the Evaluation Questions.
| Element | Evaluation Question | Correlation Coefficient |
|---|---|---|
| | Instructor Elements | |
| B1 | Instructor was prepared and organized | 0.14 |
| B2 | Instructor used class time effectively | 0.18 |
| B3 | Instructor was clear and understandable | 0.24 |
| B4 | Instructor exhibited enthusiasm for subject and teaching | 0.12 |
| B5 | Instructor respected students; sensitive to and concerned with their progress | 0.09 |
| B6 | Instructor was available and helpful | 0.10 |
| B7 | Instructor was fair in evaluating students | 0.15 |
| B8 | Instructor was effective as a teacher overall | 0.16 |
| | Course Elements | |
| C1 | The syllabus clearly explained the structure of the courses | 0.04 |
| C2 | The exams reflected the materials covered during the course | 0.08 |
| C3 | The required readings contributed to my learning | 0.11 |
| C4 | The assignments contributed to my learning | 0.16 |
| C5 | Supplementary materials (e.g. films, slides, videos, guest lectures, web pages, etc) were informative | 0.26 |
| C6 | The course overall as a learning experience was excellent | 0.19 |
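The correlations in Table 6 pair each section's estimated learning measure with that section's mean score on one evaluation question. A minimal sketch of that calculation follows, assuming the section-level learning estimates are already in hand. The numbers are invented for illustration and are not the paper's data, and the names `pearson`, `learning`, `section_means`, and `ranking` are hypothetical.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)


# Hypothetical learning estimates for five sections (not the paper's values).
learning = [-8.0, -2.0, 1.0, 3.0, 6.0]

# Hypothetical section mean scores on three evaluation questions.
section_means = {
    "B3": [3.6, 3.9, 4.05, 4.15, 4.3],
    "C1": [4.6, 4.5, 4.7, 4.5, 4.6],
    "C6": [4.2, 4.5, 4.3, 4.4, 4.6],
}

correlations = {q: pearson(learning, scores) for q, scores in section_means.items()}

# Rank questions from most to least informative about estimated learning.
ranking = sorted(correlations, key=correlations.get, reverse=True)
for q in ranking:
    print(q, round(correlations[q], 2))
```

Ranking the questions by this coefficient mirrors how the text compares evaluation elements; with only a handful of sections, as in this toy example, such correlations would be far too noisy to interpret.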