TEXT PROCESSING 1 - clic

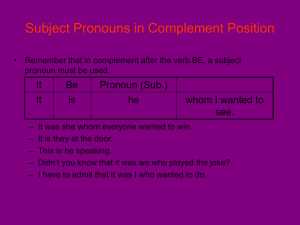

TEXT PROCESSING 1
Anaphora resolution
Introduction to Anaphora Resolution

Outline
- A reminder: anaphora resolution, factors affecting the interpretation of anaphoric expressions
- A brief history of anaphora resolution
  - First algorithms: Charniak, Winograd, Wilks
  - Pronouns: Hobbs
  - Salience: S-List, LRC
- Early ML work
  - Definite descriptions: Vieira & Poesio
- The MUC initiative – also: coreference evaluation methods
- Soon et al

Anaphora resolution: a specification of the problem

Anaphora resolution: coreference chains

Reminder: Factors that affect the interpretation of anaphoric expressions

Factors
- Morphological features (agreement)
- Syntactic information
- Salience
- Lexical and commonsense knowledge
- Distinction often made between CONSTRAINTS and PREFERENCES

Agreement
- GENDER: strong CONSTRAINT for pronouns (in other languages: for other anaphors as well)
  - [Jane] blamed [Bill] because HE spilt the coffee (Ehrlich, Garnham e.a, Arnold e.a)
- NUMBER: also a strong constraint
  - [[Union] representatives] told [the CEO] that THEY couldn’t be reached

Some complexities
- Gender:
  - [India] withdrew HER ambassador from the Commonwealth
  - “…to get a customer’s 1100 parcel-a-week load to its doorstep” [actual error from LRC algorithm]
- Number:
  - The Union said that THEY would withdraw from negotiations until further notice.
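The agreement constraints above can be pictured as a hard filter over candidate antecedents. The sketch below is only an illustration under stated assumptions: the `Mention` class, the `PRONOUN_FEATURES` table and the `collective` flag are hypothetical names introduced here, not part of any system discussed in these slides. It shows how a strict gender filter handles the [Jane]/[Bill] example, how a collective-noun exception lets THEY reach "the Union", and how the same strict filter wrongly rejects [India] for HER, which is the kind of complexity noted above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Mention:
    text: str
    gender: str               # "m", "f", "n" or "unknown"
    number: str               # "sg" or "pl"
    collective: bool = False  # assumed flag: singular noun that can denote a group


# Pronoun -> (gender, number); "any" means the pronoun does not restrict gender.
PRONOUN_FEATURES = {
    "he": ("m", "sg"), "him": ("m", "sg"),
    "she": ("f", "sg"), "her": ("f", "sg"),
    "it": ("n", "sg"),
    "they": ("any", "pl"), "them": ("any", "pl"),
}


def agrees(pronoun: str, candidate: Mention) -> bool:
    """Treat gender and number as hard constraints, with one exception for collectives."""
    gender, number = PRONOUN_FEATURES[pronoun.lower()]
    gender_ok = gender == "any" or candidate.gender in (gender, "unknown")
    # A plural pronoun may pick up a singular collective noun ("the Union ... THEY").
    number_ok = candidate.number == number or (number == "pl" and candidate.collective)
    return gender_ok and number_ok


def filter_candidates(pronoun: str, candidates: list) -> list:
    """Keep only the candidates that pass the agreement constraints."""
    return [c for c in candidates if agrees(pronoun, c)]


jane = Mention("Jane", "f", "sg")
bill = Mention("Bill", "m", "sg")
union = Mention("the Union", "n", "sg", collective=True)
india = Mention("India", "n", "sg")

print([m.text for m in filter_candidates("he", [jane, bill])])  # ['Bill']
print([m.text for m in filter_candidates("they", [union])])     # ['the Union']
print([m.text for m in filter_candidates("her", [india])])      # [] (strict filter misses India)
```

Treating agreement as a filter rather than a score matches the CONSTRAINT reading; a system that wanted to survive cases like [India] ... HER would have to relax it into a preference or enrich the lexicon.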
Syntactic information
- BINDING constraints
  - [John] likes HIM
- EMBEDDING constraints
  - [[his] friend]
- PARALLELISM preferences
  - Around 60% of pronouns occur in subject position; around 70% of those refer to antecedents in subject position
  - [John] gave [Bill] a book, and [Fred] gave HIM a pencil
- Effect of syntax on SALIENCE (Next)

Salience
- In every discourse, certain entities are more PROMINENT
- Factors that affect prominence:
  - Distance
  - Order of mention in the sentence: entities mentioned earlier in the sentence are more prominent
  - Type of NP (proper names > other types of NPs)
  - Number of mentions
  - Syntactic position (subj > other GF, matrix > embedded)
  - Semantic role (‘implicit causality’ theories)
  - Discourse structure

Focusing theories
- Hypothesis: one or more entities in the discourse are the FOCUS OF (LINGUISTIC) ATTENTION, just like some entities in the visual space are the focus of VISUAL attention
- Grosz 1977, Reichman 1985: ‘focus spaces’
- Sidner 1979, Sanford & Garrod 1981: ‘focused entities’
- Grosz et al 1981, 1983, 1995: Centering

Lexical and commonsense knowledge
- [The city council] refused [the women] a permit because they feared violence.
- [The city council] refused [the women] a permit because they advocated violence.
  Winograd (1974), Sidner (1979)
- BRISBANE – a terrific right rip from [Hector Thompson] dropped [Ross Eadie] at Sandgate on Friday night and won him the Australian welterweight boxing title.
(Hirst, 1981)

Problems to be resolved by an AR system: mention identification
- Effect: recall
- Typical problems:
  - Nested NPs (possessives): [a city] 's [computer system] vs. [[a city]’s computer system]
  - Appositions: [Madras], [India] vs. [Madras, [India]]
  - Attachments

Problems for AR: Complex attachments
- [The quality that’s coming out of [software from [India]]]
- The quality that’s coming out of software from India is now exceeding the quality of software that’s coming out from the United States
- scanning through millions of lines of computer code
- ACE/bnews/devel/ABC19981001.1830.1257

Problems for AR: agreement extraction
- The committee are meeting / is meeting
- The Union sent a representative. They ….
- The doctor came to visit my father. SHE told him …

Problems to be solved: anaphoricity determination
- Expletives:
  - IT’s not easy to find a solution
  - Is THERE any reason to be optimistic at all?
- Non-anaphoric definites

A brief history of early work on anaphora resolution
- Algorithms heavily based on (handcoded) commonsense knowledge: Charniak 72, Winograd 74, Hobbs 1974, Wilks 1975
- Syntax-based algorithms: Hobbs 1974
- Salience-based algorithms: Brennan et al, Strube & Hahn, Tetreault

First approaches to anaphora resolution: Charniak, Winograd, Wilks
- Heavy emphasis on inference
- Hand-coded
- No formal evaluation
- 1970s

Example: SHRDLU
http://hci.stanford.edu/~winograd/shrdlu/
Terry Winograd. 1971. MIT Ph.D. Thesis.
- This is one of the only dialogs it knows.
- SHRDLU is too stupid to make mistakes.
- Beautiful “Demo-ware”

Person: PICK UP A BIG RED BLOCK.
Computer: OK. (does it)
Person: GRASP THE PYRAMID.
Computer: I DON'T UNDERSTAND WHICH PYRAMID YOU MEAN.
Person: FIND A BLOCK WHICH IS TALLER THAN THE ONE YOU ARE HOLDING AND PUT IT INTO THE BOX.
Computer: BY "IT", I ASSUME YOU MEAN THE BLOCK WHICH IS TALLER THAN THE ONE I AM HOLDING.
Computer: OK. (does it)
Person: WHAT DOES THE BOX CONTAIN?
Computer: THE BLUE PYRAMID AND THE BLUE BLOCK.
Person: WHAT IS THE PYRAMID SUPPORTED BY?
Computer: THE BOX.
Person: HOW MANY BLOCKS ARE NOT IN THE BOX?
Computer: FOUR OF THEM.
Person: IS AT LEAST ONE OF THEM NARROWER THAN THE ONE WHICH I TOLD YOU TO PICK UP?
Computer: YES, THE RED CUBE.

Anaphora in SHRDLU
- First example of HISTORY LIST algorithm
- Uses a combination of agreement features and semantic constraints
- Check all possibilities and assign a global score rather than simply find the first match
- Score incorporates a syntactic component: entities in subj position get a higher score than entities in object position, in turn ranked more highly than entities in adjunct position
- Performance made more impressive by including solutions to a number of complex cases, such as reference to events (Why did you do it?) – often ad hoc

Hobbs’ ‘Naïve Algorithm’ (Hobbs, 1974)
- The reference algorithm for PRONOUN resolution (until Soon et al it was the standard baseline)
- Interesting since Hobbs himself in the 1974 paper suggests that this algorithm is very limited (and proposes one based on semantics)
- The first anaphora resolution algorithm to have an (informal) evaluation
- Purely syntax based
- Works off ‘surface parse tree’
- Starting from the position of the pronoun in the surface tree, first go up the tree looking for an antecedent in the current sentence (left-to-right, breadth-first); then go to the previous sentence, again traversing left-to-right, breadth-first.
And keep going back

Hobbs’ algorithm: Intrasentential anaphora
- Steps 2 and 3 deal with intrasentential anaphora and incorporate basic syntactic constraints
- (Parse tree figure: S over NP John, V likes, NP him, with node X and path p marked)
- Also: John’s portrait of him

Hobbs’ Algorithm: intersentential anaphora
- (Parse tree figure: S over candidate NP Bill, V is, NP a good friend; then S over NP John, V likes, NP him, with node X marked)

Evaluation
- The first anaphora resolution algorithm to be evaluated in a systematic manner, and still often used as baseline (hard to beat!)
- Hobbs, 1974:
  - 300 pronouns from texts in three different styles (a fiction book, a nonfiction book, a magazine)
  - Results: 88.3% correct without selectional constraints, 91.7% with SR
  - 132 ambiguous pronouns; 98 correctly resolved.
- Tetreault 2001 (no selectional restrictions; all pronouns):
  - 1298 out of 1500 pronouns from 195 NYT articles (76.8% correct)
  - 74.2% correct intra, 82% inter

Main limitations
- Reference to propositions excluded
- Plurals
- Reference to events

Salience-based algorithms
- Common hypotheses:
  - Entities in discourse model are RANKED by salience
  - Salience gets continuously updated
  - Most highly ranked entities are preferred antecedents
- Variants:
  - DISCRETE theories (Sidner, Brennan et al, Strube & Hahn): 1-2 entities singled out
  - CONTINUOUS theories (Alshawi, Lappin & Leass, Strube 1998, LRC): only ranking

Salience-based algorithms
- Sidner 1979:
  - Most extensive theory of the influence of salience on several types of anaphors
  - Two FOCI: discourse focus, agent focus
  - never properly evaluated
- Brennan et al 1987 (see Walker 1989)
  - Ranking based on grammatical function
  - One focus (CB)
- Strube & Hahn 1999
  - Ranking based on information status (NP type)
- S-List (Strube 1998): drop CB
- LRC (Tetreault): incremental

LRC
- An update on Strube’s S-LIST algorithm (= Centering without centers)
- Initial version augmented with various syntactic and discourse constraints

LRC Algorithm (LRC)
- Maintain a stack of entities ranked by grammatical function and sentence order (Subj > DO > IO)
- Each sentence is represented by a Cf-list: list of
salient entities ordered by grammatical function
- While processing utterance’s entities (left to right) do:
  - Push entity onto temporary list (Cf-list-new); if pronoun, attempt to resolve first:
    - Search through Cf-list-new (l-to-r) taking the first candidate that meets gender, agreement constraints, etc.
    - If none found, search past utterance’s Cf-lists starting from previous utterance to beginning of discourse

Results

| Algorithm | PTB-News (1694) | PTB-Fic (511) |
|---|---|---|
| LRC | 74.9% | 72.1% |
| S-List | 71.7% | 66.1% |
| BFP | 59.4% | 46.4% |

Comparison with ML techniques of the time

| Algorithm | All 3rd |
|---|---|
| LRC | 76.7% |
| Ge et al. (1998) | 87.5% (*) |
| Morton (2000) | 79.1% |

Carter’s Algorithm (1985)
- The most systematic attempt to produce a system using both salience (Sidner’s theory) & commonsense knowledge (Wilks’s preference semantics)
- Small-scale evaluation (around 100 hand-constructed examples)
- Many ideas found their way into SRI’s Core Language Engine (Alshawi, 1992)

MODERN WORK IN ANAPHORA RESOLUTION
- Availability of the first anaphorically annotated corpora circa 1993 (MUC6) made statistical methods possible

STATISTICAL APPROACHES TO ANAPHORA RESOLUTION
- UNSUPERVISED approaches
  - Eg Cardie & Wagstaff 1999, Ng 2008
- SUPERVISED approaches
  - Early (NP type specific)
  - Soon et al: general classifier + modern architecture

ANAPHORA RESOLUTION AS A CLASSIFICATION PROBLEM
1. Classify NP1 and NP2 as coreferential or not
2. Build a complete coreferential chain

SOME KEY DECISIONS
- ENCODING: i.e., what positive and negative instances to generate from the annotated corpus
  - Eg treat all elements of the coref chain as positive instances, everything else as negative
- DECODING: how to use the classifier to choose an antecedent
  - Some options: ‘sequential’ (stop at the first positive), ‘parallel’ (compare several options)

Early machine-learning approaches
- Main distinguishing feature: concentrate on a single NP type
- Both hand-coded and ML:
  - Aone & Bennett (pronouns)
  - Vieira & Poesio (definite descriptions)
  - Ge and Charniak (pronouns)

Definite descriptions: Vieira & Poesio
- A first attempt at going beyond pronouns while still doing a large-scale evaluation
- Definite descriptions chosen because they require lexical and commonsense knowledge
- Developing both a hand-coded and a ML decision tree (as in Aone and Bennett)
- Vieira & Poesio 1996, Vieira 1998, Vieira & Poesio 2000

Preliminary corpus study (Poesio and Vieira, 1998)
- Annotators asked to classify about 1,000 definite descriptions from the ACL/DCI corpus (Wall Street Journal texts) into three classes:
  - DIRECT ANAPHORA: a house … the house
  - DISCOURSE-NEW: the belief that ginseng tastes like spinach is more widespread than one would expect
  - BRIDGING DESCRIPTIONS: the flat … the living room; the car … the vehicle

Poesio and Vieira, 1998
- Results:
  - More than half of the def descriptions are first-mention
  - Subjects didn’t always agree on the classification of an antecedent (bridging descriptions: ~8%)

The Vieira / Poesio system for robust definite description resolution
- Follows a
SHALLOW PROCESSING approach (Carter, 1987; Mitkov, 1998): it only uses
  - Structural information (extracted from Penn Treebank)
  - Existing lexical sources (WordNet)
  - (Very little) hand-coded information

Methods for resolving direct anaphors
- DIRECT ANAPHORA: the red car, the car, the blue car
  - premodification heuristics
  - segmentation: approximated with ‘loose’ windows

Methods for resolving discourse-new definite descriptions
- DISCOURSE-NEW DEFINITES: the first man on the Moon, the fact that Ginseng tastes of spinach
  - a list of the most common functional predicates (fact, result, belief) and modifiers (first, last, only…)
  - heuristics based on structural information (e.g., establishing relative clauses)

The (hand-coded) decision tree
1. Apply ‘safe’ discourse-new recognition heuristics
2. Attempt to resolve as same-head anaphora
3. Attempt to classify as discourse new
4. Attempt to resolve as bridging description. Search backward 1 sentence at a time and apply heuristics in the following order:
   1. Named entity recognition heuristics – R=.66, P=.95
   2. Heuristics for identifying compound nouns acting as anchors – R=.36
   3. Access WordNet – R, P about .28

The decision tree obtained via ML
- Same features as for the hand-coded decision tree
- Using ID3 classifier (non probabilistic decision tree)
- Training instances:
  - Positive: closest annotated antecedent
  - Negative: all mentions in previous four sentences
- Decoding: consider all possible antecedents in previous four sentences

Automatically learned decision tree

Overall Results
- Evaluated on a test corpus of 464 definite descriptions
- Overall results:

| | R | P | F |
|---|---|---|---|
| Version 1 | 53% | 76% | 62% |
| Version 2 | 57% | 70% | 62% |
| D-N def | 77% | 77% | 77% |
| ID3 | 75% | 75% | 75% |

- Results for each type of definite description:

| | R | P | F |
|---|---|---|---|
| Direct anaphora | 62% | 83% | 71% |
| Disc new | 69% | 72% | 70% |
| Bridging | 29% | 38% | 32.9% |

MUC
- First big initiative in Information Extraction
- Produced first sizeable annotated data for coreference
- Developed first methods for evaluating systems

MUC terminology:
- MENTION: any markable
- COREFERENCE CHAIN: a set of mentions referring to an entity
- KEY: the (annotated) solution (a partition of the mentions into coreference chains)
- RESPONSE: the coreference chains produced by a system

Evaluation of coreference resolution systems
- Lots of different measures proposed
- ACCURACY: consider a mention correctly resolved if
  - Correctly classified as anaphoric or not anaphoric
  - ‘Right’ antecedent picked up
- Measures developed for the competitions:
  - Automatic way of doing the evaluation
- More realistic measures (Byron, Mitkov)
  - Accuracy on ‘hard’ cases (e.g., ambiguous pronouns)

Vilain et al 1995
- The official MUC scorer
- Based on precision
and recall of links

Vilain et al: the goal
- The problem: given that A, B, C and D are part of a coreference chain in the KEY, treat as equivalent the two responses: [figure]
- And as superior to: [figure]

Vilain et al: RECALL
- To measure RECALL, look at how each coreference chain Si in the KEY is partitioned in the RESPONSE, and count how many links would be required to recreate the original, then average across all coreference chains.

Vilain et al: Example recall
- In the example above, we have one coreference chain of size 4 (|S| = 4)
- The incorrect response partitions it in two sets (|p(S)| = 2)
- $R = \frac{|S| - |p(S)|}{|S| - 1} = \frac{4 - 2}{4 - 1} = \frac{2}{3}$

Vilain et al: precision
- Count links that would have to be (incorrectly) added to the key to produce the response
- I.e., ‘switch around’ key and response in the equation before

Beyond Vilain et al
- Problems:
  - Only gain points for links. No points gained for correctly recognizing that a particular mention is not anaphoric
  - All errors are equal
- Proposals:
  - Bagga & Baldwin’s B-CUBED algorithm
  - Luo’s recent proposal

Soon et al 2001
- First ‘modern’ ML approach to anaphora resolution
- Resolves ALL anaphors
- Fully automatic mention identification
- Developed instance generation & decoding methods used in a lot of work since

Soon et al: preprocessing
- POS tagger: HMM-based
  - 96% accuracy
- Noun phrase identification module
  - HMM-based
  - Can identify correctly around 85% of mentions (?? 90% ??)
- NER: reimplementation of Bikel, Schwartz and Weischedel 1999
  - HMM based
  - 88.9% accuracy

Soon et al: training instances
- <ANAPHOR (j), ANTECEDENT (i)>

Soon et al 2001: Features
- NP type
- Distance
- Agreement
- Semantic class

Soon et al: NP type and distance
- NP type of anaphor j (3): j-pronoun, def-np, dem-np (bool)
- NP type of antecedent i: i-pronoun (bool)
- Types of both: both-proper-name (bool)
- DIST: 0, 1, ….

Soon et al features: string match, agreement, syntactic position
- STR_MATCH
- ALIAS
  - dates (1/8 – January 8)
  - person (Bent Simpson / Mr. Simpson)
  - organizations: acronym match (Hewlett Packard / HP)
- AGREEMENT FEATURES
  - number agreement
  - gender agreement
- SYNTACTIC PROPERTIES OF ANAPHOR
  - occurs in appositive construction

Soon et al: semantic class agreement
- Semantic classes: PERSON, FEMALE, MALE, OBJECT, ORGANIZATION, LOCATION, DATE, TIME, MONEY, PERCENT
- SEMCLASS = true iff semclass(i) <= semclass(j) or vice versa

Soon et al: generating training instances
- Marked antecedent used to create positive instance
- All mentions between anaphor and marked antecedent used to create negative instances

Generating training instances
- ((Eastern Airlines) executives) notified ((union) leaders) that (the carrier) wishes to discuss (selective (wage) reductions) on (Feb 3)
- Instance labels shown on the slide: POSITIVE, NEGATIVE, NEGATIVE, NEGATIVE

Soon et al: decoding
- Right to left, consider each antecedent until classifier returns true

Soon et al: evaluation
- MUC-6: P=67.3, R=58.6, F=62.6
- MUC-7: P=65.5, R=56.1, F=60.4

Subsequent developments
- Different models of the task
- Different preprocessing techniques
- Using lexical / commonsense knowledge (particularly semantic role labelling)
- Next lecture
- Salience
- Anaphoricity detection
- Development of AR toolkits (GATE, LingPipe, GUITAR)

Other models
- Cardie & Wagstaff: coreference as (unsupervised) clustering
  - Much lower performance
- Ng and Cardie
- Yang ‘twin-candidate’ model

Ng and Cardie 2002:
- Changes to the model:
  - Positive: first NON PRONOMINAL
  - Decoding: choose HIGHEST PROBABILITY
- Many more features:
  - Many more
string features
  - Linguistic features (binding, etc)
- Subsequently:
  - Discourse new detection (see below)

Readings
- Kehler’s chapter in Jurafsky & Martin
  - Alternatively: Elango’s survey http://pages.cs.wisc.edu/~apirak/cs/cs838/pradheepsurvey.pdf
- Hobbs J.R. 1978, “Resolving Pronoun References,” Lingua, Vol. 44, pp. 311-338.
  - Also in Readings in Natural Language Processing
- Renata Vieira, Massimo Poesio, 2000. An Empirically-based System for Processing Definite Descriptions. Computational Linguistics 26(4): 539-593.
- W. M. Soon, H. T. Ng, and D. C. Y. Lim, 2001. A machine learning approach to coreference resolution of noun phrases. Computational Linguistics, 27(4): 521-544.
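
As a worked illustration of the link-based MUC measure from the Vilain et al. slides, the sketch below computes recall and precision for one key chain {A, B, C, D}. Several things here are assumptions: the function name `muc_score` is hypothetical, the response `{A, B}, {C, D}` is a guess at the "incorrect response" whose figure did not survive extraction, and numerators and denominators are pooled over chains as in the Vilain et al. (1995) formulation rather than averaged per chain. Chains are assumed to be disjoint sets of mention identifiers.

```python
def muc_score(reference, system):
    """Link-based MUC score of `reference` chains against `system` chains.

    Recall is muc_score(key, response); precision swaps the arguments,
    i.e. muc_score(response, key).
    """
    numerator = 0
    denominator = 0
    for chain in reference:
        # p(S): the pieces this chain is split into by the other partition.
        pieces = set()
        unaligned = 0  # mentions missing from the other side count as singleton pieces
        for mention in chain:
            index = next(
                (i for i, other in enumerate(system) if mention in other), None
            )
            if index is None:
                unaligned += 1
            else:
                pieces.add(index)
        numerator += len(chain) - (len(pieces) + unaligned)  # |S| - |p(S)|
        denominator += len(chain) - 1                        # |S| - 1
    return numerator / denominator if denominator else 0.0


key = [{"A", "B", "C", "D"}]
response = [{"A", "B"}, {"C", "D"}]  # assumed example response

recall = muc_score(key, response)     # (4 - 2) / (4 - 1)
precision = muc_score(response, key)  # every response link is also licensed by the key
print(recall, precision)
```

Under these assumptions recall comes out at 2/3, matching the slide's example, while precision is 1.0 because no response link joins mentions that the key keeps apart.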