slides III - George C. Tseng

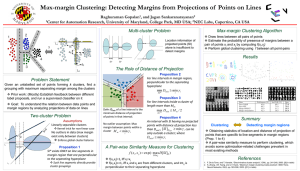


Cluster Analysis III

10/5/2012

Outline

Estimate the number of clusters.

Evaluation of clustering results.

Estimate the number of clusters

Milligan & Cooper (1985) compared over 30 published rules.

None is uniformly better than all the others. The best method is data dependent.

1. Calinski & Harabasz (1974)

$$\max_k \; CH(k) = \frac{B(k)/(k-1)}{W(k)/(n-k)}$$

where B(k) and W(k) are the between- and within-cluster sums of squares with k clusters, and n is the number of observations.
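As a rough illustration of the CH(k) ratio, the sketch below computes W, B and CH for a given labeling. The function names and the toy data are hypothetical; any clustering routine could supply the labels.

```python
def within_between_ss(points, labels):
    """Return (W, B): within- and between-cluster sums of squares."""
    n, dim = len(points), len(points[0])
    grand = [sum(p[d] for p in points) / n for d in range(dim)]
    clusters = {}
    for p, lab in zip(points, labels):
        clusters.setdefault(lab, []).append(p)
    w = b = 0.0
    for members in clusters.values():
        size = len(members)
        center = [sum(p[d] for p in members) / size for d in range(dim)]
        # Squared distances of members to their own cluster center.
        w += sum((p[d] - center[d]) ** 2 for p in members for d in range(dim))
        # Size-weighted squared distance of the center to the grand mean.
        b += size * sum((center[d] - grand[d]) ** 2 for d in range(dim))
    return w, b


def calinski_harabasz(points, labels):
    """CH(k) = [B(k)/(k-1)] / [W(k)/(n-k)]; needs 2 <= k < n and W > 0."""
    n, k = len(points), len(set(labels))
    w, b = within_between_ss(points, labels)
    return (b / (k - 1)) / (w / (n - k))


# Toy 1-D data with two obvious groups (made up for illustration).
points = [(0.0,), (1.0,), (10.0,), (11.0,)]
good_labels = [0, 0, 1, 1]   # matches the two groups
bad_labels = [0, 1, 0, 1]    # mixes the two groups
ch_good = calinski_harabasz(points, good_labels)
ch_bad = calinski_harabasz(points, bad_labels)
```

In practice one would evaluate CH(k) over a range of k and keep the k with the largest value.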

2. Hartigan (1975)

$$H(k) = \left( \frac{W(k)}{W(k+1)} - 1 \right) (n - k - 1)$$

Stop when H(k) < 10.
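A minimal sketch of Hartigan's stopping rule, assuming the commonly quoted form H(k) = (W(k)/W(k+1) - 1)(n - k - 1). The function names and the W values are hypothetical.

```python
def hartigan_index(w_k, w_k_plus_1, n, k):
    """Assumed form: H(k) = (W(k) / W(k+1) - 1) * (n - k - 1)."""
    return (w_k / w_k_plus_1 - 1.0) * (n - k - 1)


def choose_k_hartigan(w, n, threshold=10.0):
    """w[k-1] holds W(k) for k = 1..K. Return the first k with H(k) < threshold."""
    for k in range(1, len(w)):
        if hartigan_index(w[k - 1], w[k], n, k) < threshold:
            return k
    return None  # the rule never triggered within the values supplied


# Made-up within-cluster sums of squares for k = 1..5 and n = 100 observations.
w_values = [1000.0, 200.0, 50.0, 48.0, 47.0]
k_hat = choose_k_hartigan(w_values, n=100)
```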


3. Gap statistic: Tibshirani, Walther & Hastie (2000)

• The within-cluster sum of squares W(k) is a decreasing function of k.

• Normally one looks for an elbow-shaped turning point to identify the number of clusters, k.


• Instead of the above arbitrary criterion, the paper proposes to maximize the following Gap statistic:

$$\max_k \; \mathrm{Gap}_n(k) = E_n^*[\log W(k)] - \log W(k)$$

• The background expectation $E_n^*$ is calculated by random sampling from a uniform distribution.

[Figure: observations, their bounding box (aligned with the feature axes, or aligned with the principal axes), and the corresponding Monte Carlo simulations.]

Computation of the Gap Statistic

for b = 1 to B
  Compute Monte Carlo sample $X_{1b}, X_{2b}, \ldots, X_{nb}$ (n is # obs.)
for k = 1 to K
  Cluster the observations into k groups and compute $\log W_k$
  for b = 1 to B
    Cluster the M.C. sample into k groups and compute $\log W_{kb}$
  Compute $\mathrm{Gap}(k) = \frac{1}{B} \sum_{b=1}^{B} \log W_{kb} - \log W_k$
  Compute sd(k), the s.d. of $\{\log W_{kb}\}_{b=1,\ldots,B}$
  Set the total s.e. $s_k = \sqrt{1 + 1/B} \; sd(k)$
Find the smallest k such that $\mathrm{Gap}(k) \ge \mathrm{Gap}(k+1) - s_{k+1}$
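The last steps of the procedure above can be sketched as follows. This is only an illustration: it assumes log W_k and log W_kb have already been computed by some clustering routine, it uses the population standard deviation (dividing by B) for sd(k), and all names and numbers are hypothetical.

```python
import math
from statistics import mean, pstdev


def gap_statistic(log_w, log_w_ref):
    """log_w[k-1] = log W_k; log_w_ref[k-1] = [log W_kb for b = 1..B].

    Returns (gaps, ses) with gaps[k-1] = Gap(k) and ses[k-1] = s_k.
    """
    gaps, ses = [], []
    for observed, reference in zip(log_w, log_w_ref):
        n_ref = len(reference)
        gaps.append(mean(reference) - observed)
        # s_k = sqrt(1 + 1/B) * sd(k); pstdev divides by B (an assumption).
        ses.append(math.sqrt(1.0 + 1.0 / n_ref) * pstdev(reference))
    return gaps, ses


def choose_k_gap(gaps, ses):
    """Smallest k such that Gap(k) >= Gap(k+1) - s_{k+1}."""
    for k in range(1, len(gaps)):
        if gaps[k - 1] >= gaps[k] - ses[k]:
            return k
    return None  # no k in the examined range satisfied the rule


# Made-up values for K = 3 and B = 2 Monte Carlo samples.
log_w = [5.0, 3.0, 2.8]
log_w_ref = [[5.1, 5.3], [4.4, 4.6], [4.1, 4.3]]
gaps, ses = gap_statistic(log_w, log_w_ref)
k_hat = choose_k_gap(gaps, ses)
```

Separating the selection rule from the clustering step keeps the sketch independent of how W is obtained (K-means, hierarchical clustering, and so on).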


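4. Resampling methods: Tibshirani et al. (2001); Dudoit and Fridlyand (2002)

Treat the clustering of the test data as the underlying truth and compare it with the clustering predicted from the training data.

• $X_{tr}$: training data

• $X_{te}$: testing data

• $C(X_{tr}, k)$: K-means clustering centroids from training data

• $D[C(X_{tr}, k), X_{te}]$: comembership matrix; entry $(i, i')$ is 1 if test observations $i$ and $i'$ are assigned to the same training centroid, and 0 otherwise

Prediction strength:

$$ps(k) = \min_{1 \le j \le k} \frac{1}{n_{kj}(n_{kj} - 1)} \sum_{i \ne i' \in A_{kj}} D[C(X_{tr}, k), X_{te}]_{ii'}$$

where $A_{k1}, A_{k2}, \ldots, A_{kk}$ are the K-means clusters from the test data $X_{te}$ and $n_{kj} = \#(A_{kj})$.

Find k that maximizes ps(k).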
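A small sketch of the prediction strength computation, assuming the definition in Tibshirani et al. (2001): for each test cluster, take the proportion of ordered pairs of its members that the training centroids also place together, then take the minimum over clusters. The inputs are assumed to be precomputed label vectors; the names and data are hypothetical.

```python
from itertools import permutations


def prediction_strength(test_labels, predicted_labels):
    """test_labels[i]: cluster of test point i from clustering the test data.
    predicted_labels[i]: cluster of test point i under the training centroids.
    """
    clusters = {}
    for idx, lab in enumerate(test_labels):
        clusters.setdefault(lab, []).append(idx)
    strengths = []
    for members in clusters.values():
        n_kj = len(members)
        if n_kj < 2:
            continue  # a singleton cluster has no pairs to check
        # Ordered pairs i != i' that the training centroids keep together.
        together = sum(
            predicted_labels[i] == predicted_labels[j]
            for i, j in permutations(members, 2)
        )
        strengths.append(together / (n_kj * (n_kj - 1)))
    return min(strengths) if strengths else float("nan")


# Made-up example: the training centroids split the first test cluster.
ps_split = prediction_strength([0, 0, 0, 1, 1, 1], [0, 0, 1, 1, 1, 1])
# Label names need not match; only comembership matters.
ps_perfect = prediction_strength([0, 0, 1, 1], [5, 5, 7, 7])
```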


Conclusions:

1. There’s no dominating “good” method for estimating the number of clusters. Some are good only in some specific simulations or examples.

2. In a high-dimensional, complex data set, there might not be a clear “true” number of clusters.

3. The problem is also about the “resolution”. At a “coarser” resolution a few loose clusters may be identified, while at a “refined” resolution many small tight clusters will stand out.

Cluster Evaluation

• Evaluation and comparison of clustering methods is always difficult.

• In supervised learning (classification), the class labels (underlying truth) are known and performance can be evaluated through cross validation.

• In unsupervised learning (clustering), external validation is usually not available.

• Ideal data for cluster evaluation:

  • Data with class/tumor labels (for clustering samples)

  • Cell cycle data (for clustering genes)

  • Simulated data

Rand Index

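Y = {(a,b,c), (d,e,f)}

Y' = {(a,b), (c,d,e), (f)}

| Pair | together in both | separate in both | discordant |
|---|---|---|---|
| ab | * | | |
| ac | | | * |
| ad | | * | |
| ae | | * | |
| af | | * | |
| bc | | | * |
| bd | | * | |
| be | | * | |
| bf | | * | |
| cd | | | * |
| ce | | | * |
| cf | | * | |
| de | * | | |
| df | | | * |
| ef | | | * |
| Total | 2 | 7 | 6 |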

Rand index: c(Y, Y') =(2+7)/15=0.6 (percentage of concordance)

1. $0 \le c(Y, Y') \le 1$

2. Clustering methods can be evaluated by $c(Y, Y_{truth})$ if $Y_{truth}$ is available.
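The pair counting behind the Rand index can be checked with a short sketch; it reproduces the example Y and Y' from this slide. The function names are hypothetical.

```python
from itertools import combinations


def labels_from_partition(partition):
    """Map each item to the index of the cluster that contains it."""
    return {item: idx for idx, cluster in enumerate(partition) for item in cluster}


def rand_index(partition_a, partition_b):
    """Fraction of item pairs on which the two partitions agree."""
    la = labels_from_partition(partition_a)
    lb = labels_from_partition(partition_b)
    agree = total = 0
    for x, y in combinations(sorted(la), 2):
        same_a = la[x] == la[y]
        same_b = lb[x] == lb[y]
        # Concordant: together in both, or separate in both.
        agree += same_a == same_b
        total += 1
    return agree / total


Y = [("a", "b", "c"), ("d", "e", "f")]
Y_prime = [("a", "b"), ("c", "d", "e"), ("f",)]
c = rand_index(Y, Y_prime)  # (2 + 7) / 15
```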

Adjusted Rand index (Hubert and Arabie 1985):

$$\text{Adjusted Rand index} = \frac{\text{index} - \text{expected index}}{\text{maximum index} - \text{expected index}}$$

The adjusted Rand index takes its maximum value at 1 and has a constant expected value of 0 (when the two clusterings are totally independent).
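A minimal sketch of the adjusted Rand index, assuming the usual Hubert and Arabie contingency-table form in which "index" is the number of pairs placed together by both clusterings. The function name is hypothetical, and the sketch does not guard against the degenerate case where the maximum and expected index coincide.

```python
from math import comb


def adjusted_rand_index(labels_a, labels_b):
    """(index - expected index) / (maximum index - expected index)."""
    n = len(labels_a)
    cells, rows, cols = {}, {}, {}
    for a, b in zip(labels_a, labels_b):
        cells[(a, b)] = cells.get((a, b), 0) + 1
        rows[a] = rows.get(a, 0) + 1
        cols[b] = cols.get(b, 0) + 1
    # Pairs placed together by both clusterings.
    index = sum(comb(c, 2) for c in cells.values())
    row_pairs = sum(comb(c, 2) for c in rows.values())
    col_pairs = sum(comb(c, 2) for c in cols.values())
    expected = row_pairs * col_pairs / comb(n, 2)
    maximum = (row_pairs + col_pairs) / 2
    return (index - expected) / (maximum - expected)


# Y = {(a,b,c), (d,e,f)} and Y' = {(a,b), (c,d,e), (f)} as label vectors
# over the items a, b, c, d, e, f.
ari = adjusted_rand_index([0, 0, 0, 1, 1, 1], [0, 0, 1, 1, 1, 2])
```

For this pair of clusterings the unadjusted Rand index is 0.6, while the adjusted value is much closer to 0, which illustrates how much of the raw agreement is expected by chance.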

Comparison

| Method | Advantage | Disadvantage |
|---|---|---|
| Hierarchical clustering | • Intuitive algorithm • Good interpretability • Do not need to estimate # of clusters | • Very vulnerable to outliers • Tree not unique; genes that are closer are not necessarily more similar • Hard to read when tree is big |
| K-means | • Simplified Gaussian mixture model • Normally get nice clusters | • Local minimum • Estimating # of clusters |
| SOM | • Clusters have an interpretation on 2D geometry (more interpretable) | • The algorithm is very heuristic • Solution sub-optimal due to 2D geometry restriction |
| Model-based clustering | • Flexibility on cluster structure • Rigorous statistical inference | • Model selection usually difficult • Local minimum problem |
| Tight clustering | • Allow genes not being clustered; only produce tight clusters • Ease the problem of accurate estimation of # of clusters • Biologically more meaningful | • Slower computation when data large |

Simulation (Thalamuthu et al. 2006):

• 20 time-course samples for each gene.

• In each cluster, four groups of samples with similar intensity.

• Individual sample and gene variation are added.

• # of genes in each cluster ~ Poisson(10)

• Scattered (noise) genes are added.

• The simulated data resemble real data well by visualization.

[Figure: heatmap of the simulated data, 15 clusters by 20 samples.]

Different types of perturbations

Type I: a number of randomly simulated scattered genes (0, 5, 10, 20, 60, 100 and 200% of the original total number of clustered genes) are added. For sample j in a scattered gene, the expression level is randomly sampled from the empirical distribution of expressions of all clustered genes in sample j.

Type II: for each element of the log-transformed expression matrix, a small random error from a normal distribution (SD = 0.05, 0.1, 0.2, 0.4, 0.8, 1.2) is added, to evaluate the robustness of the clustering against potential random errors.

Type III: combination of types I and II.
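The three perturbation types can be sketched roughly as below. This is an illustrative reading of the description, not the code used in the paper: the matrix is assumed to be genes by samples and already log-transformed, and the function names, seed and toy values are hypothetical.

```python
import random


def add_scattered_genes(matrix, fraction, rng):
    """Type I: append round(fraction * n_genes) scattered genes.

    Entry j of a scattered gene is drawn from the empirical distribution of
    sample (column) j over the original clustered genes.
    """
    n_samples = len(matrix[0])
    n_new = round(fraction * len(matrix))
    scattered = [
        [rng.choice(matrix)[j] for j in range(n_samples)] for _ in range(n_new)
    ]
    return matrix + scattered


def add_gaussian_noise(matrix, sd, rng):
    """Type II: add N(0, sd^2) noise to every element."""
    return [[x + rng.gauss(0.0, sd) for x in row] for row in matrix]


def perturb(matrix, fraction, sd, rng):
    """Type III: scattered genes followed by element-wise noise."""
    return add_gaussian_noise(add_scattered_genes(matrix, fraction, rng), sd, rng)


rng = random.Random(0)  # fixed seed so the sketch is repeatable
clustered = [
    [1.0, 2.0, 3.0],
    [1.1, 2.1, 3.1],
    [5.0, 6.0, 7.0],
    [5.1, 6.1, 7.1],
]
type1 = add_scattered_genes(clustered, 0.5, rng)  # 50% scattered genes
type3 = perturb(clustered, 0.5, 0.1, rng)
```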

Different degrees of perturbation in the simulated microarray data

Simulation schemes performed in the paper.

In total, 25 (simulation settings) × 100 (data sets) = 2500 data sets are evaluated.

• Adjusted Rand index: a measure of similarity between two clusterings.

• Compare each clustering result to the underlying true clusters and obtain the adjusted Rand index (the higher the better).

T: tight clustering

M: model-based

P: K-medoids

K: K-means

H: hierarchical

S: SOM

Consensus Clustering (Monti et al., 2003)

Simpson et al. BMC Bioinformatics 2010 11:590 doi:10.1186/1471-2105-11-590


The consensus matrix M can be used as a similarity measure (with 1 − M as the distance matrix) for clustering.

Alternatively, one can cluster the original data and attach a measurement of robustness to each cluster.

Cluster and membership robustness

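Cluster robustness: average connectivity in a cluster.

$$m(k) = \frac{1}{N_k (N_k - 1)/2} \sum_{\substack{i, j \in I_k \\ i < j}} M(i, j)$$

where $M(i, j)$ is the connectivity measurement of the ith and jth elements of cluster k, $I_k$ is the set of elements in cluster k, and $N_k$ is its size.

Membership robustness: average connectivity between one element and all of the other elements of the cluster.

$$m_i(k) = \frac{1}{N_k - 1} \sum_{\substack{j \in I_k \\ j \ne i}} M(i, j)$$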
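The two robustness measures can be sketched as follows, assuming a symmetric consensus matrix M stored as a list of lists and a cluster given by the indices of its members. The function names and the toy matrix are hypothetical.

```python
from itertools import combinations


def cluster_robustness(M, members):
    """Average connectivity over all pairs i < j inside the cluster."""
    n_k = len(members)
    total = sum(M[i][j] for i, j in combinations(members, 2))
    return total / (n_k * (n_k - 1) / 2)


def membership_robustness(M, members, i):
    """Average connectivity between element i and the other cluster members."""
    others = [j for j in members if j != i]
    return sum(M[i][j] for j in others) / len(others)


# Made-up consensus matrix for four elements; elements 0, 1, 2 form a cluster.
M = [
    [1.0, 0.9, 0.8, 0.1],
    [0.9, 1.0, 0.7, 0.2],
    [0.8, 0.7, 1.0, 0.0],
    [0.1, 0.2, 0.0, 1.0],
]
cluster = [0, 1, 2]
m_k = cluster_robustness(M, cluster)             # (0.9 + 0.8 + 0.7) / 3
m_0 = membership_robustness(M, cluster, 0)       # (0.9 + 0.8) / 2
```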

• Consensus clustering with PAM (blue)

• Consensus clustering with hierarchical clustering (red)

• HOPACH (black)

• Fuzzy c-means (green)

Evaluate the clustering results


Comparison in real data sets:

(see paper for detailed comparison criteria)

• Despite many sophisticated methods for detecting regulatory interactions (e.g. Shortest-path and Liquid Association), cluster analysis remains a useful routine in high-dimensional data analysis.

• We should use these methods for visualization, investigation and hypothesis generation.

• We should not use these methods inferentially.

• In general, methods that use resampling evaluation, allow scattered genes and relate to a model-based approach are better.

• Hierarchical clustering specifically: we are provided with a picture from which we can make many/any conclusions.

Common mistakes or warnings:

1. Run K-means with large k and get excited to see patterns without further investigation.

K-means can let you see patterns even in randomly generated data; besides, human eyes tend to see “patterns”.

2. Identify genes that are predictive of survival (e.g. apply t-statistics to long and short survivors). Cluster samples based on the selected genes and find that the samples are clustered according to survival status.

The gene selection procedure is already biased towards the result you desire.

Common mistakes (cont’d):

3. Cluster samples into k groups. Perform an F-test to identify genes differentially expressed among subgroups.

The data have been re-used for both clustering and identifying differentially expressed genes. You always obtain a set of differentially expressed genes, but cannot be sure whether they are real or arise by chance.