measurement and instrumentation bmcc 4743

advertisement

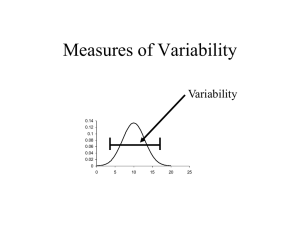

MEASUREMENT AND INSTRUMENTATION BMCC 3743 LECTURE 3: ANALYSIS OF EXPERIMENTAL DATA Mochamad Safarudin Faculty of Mechanical Engineering, UTeM 2010 Introduction Measures of dispersion Parameter estimation Criterion for rejection questionable data points Correlation of experimental data 2 Needed in all measurements with random inputs, e.g. random broadband sound/noise ◦ Tyre/road noise, rain drops, waterfall Some important terms are: ◦ Random variable (continuous or discrete), histogram, bins, population, sample, distribution function, parameter, event, statistic, probability. 3 Population : the entire collection of objects, measurements, observations and so on whose properties are under consideration Sample: a representative subset of a population on which an experiment is performed and numerical data are obtained 4 Introduction Measures of dispersion Parameter estimation Criterion for rejection questionable data points Correlation of experimental data 5 =>Measures of data spreading or variability Deviation (error) is defined as di xi x Mean deviation is defined as n d di n Population standard deviation is defined as i 1 N xi 2 i 1 N 6 Sample standard deviation is defined as 2 n xi x S i 1 n 1 ◦ is used when data of a sample are used to estimate population std dev. Variance is defined as 2 for the population or S 2 for a sam ple 7 Find the mean, median, standard deviation and variance of this measurement: 1089, 1092, 1094, 1095, 1098, 1100, 1104, 1105, 1107, 1108, 1110, 1112, 1115 8 Mean = 1103 (1102.2) Median = 1104 Std deviation = 5.79 (7.89) Variance = 33.49 (62.18) 9 Introduction Measures of dispersion Parameter estimation Criterion for rejection questionable data points Correlation of experimental data 10 Generally, Estimation of population mean, is sample mean, x . Estimation of population standard deviation, is sample standard deviation, S. 11 Confidence interval is the interval between , where is an uncertainty. x to x Confidence level is the probability for the population mean to fall within specified interval: Px x 12 Normally referred in terms of , also called level of significance, where confidence level 1 If n is sufficiently large (> 30), we can apply the central limit theorem to find the estimation of the population mean. 13 1. 2. 3. If original population is normal, then distribution for the sample means’ is normal (Gaussian) If original population is not normal and n is large, then distribution for sample means’ is normal If original population is not normal and n is small, then sample means’ follow a normal distribution only approximately. 14 When n is large, P z / 2 x z / 2 1 / n where z x / n Rearranged to get P x z / 2 x z / 2 1 n n Or with confidence level x z / 2 1 n 15 Table z Confidence Interval Confidence Level (%) Level of Significance (%) 3.30 99.9 0.1 3.0 99.7 0.3 2.57 99.0 1.0 2.0 95.4 4.6 1.96 95.0 5.0 1.65 90.0 10.0 1.0 68.3 31.7 Area under 0 to z 16 When n is small, P t / 2 x t / 2 1 S/ n where t x S/ n Rearranged to get S S P x t / 2 x t / 2 1 n n S with confidence level 1 Or x t /2 n t table 17 Similarly as before, but now using chi2 squared distribution, , (always positive) where 2 S2 2 P v ,1 / 2 n 1 2 v , / 2 1 2 n 1 S2 2 18 Hence, the confidence interval on the population variance is n 1S 2 2 n 1S 2 v2, / 2 v2,1 / 2 Chi squared table 19 Introduction Measures of dispersion Parameter estimation Criterion for rejection questionable data points Correlation of experimental data 20 To eliminate data which has low probability of occurrence => use Thompson test. Example: Data consists of nine values, Dn = 12.02, 12.05, 11.96, 11.99, 12.10, 12.03, 12.00, 11.95 and 12.16. D = 12.03, S = 0.07 So, calculate deviation: 1 Dl argest D 12.16 12.03 0.13 2 Dsmallest D 11.95 12.03 0.08 21 From Thompson’s table, when n = 9, then 1.777 Comparing S 0.07 1.77 0.12 with 1 0.13, where 1 S , then D9 = 12.16 should be discarded. Recalculate S and D to obtain 0.05 and 12.01 respectively. Hence for n = 8, 1.749 so remaining data stay. and S 0.09, Thompson’s table 22 Introduction Measures of dispersion Parameter estimation Criterion for rejection questionable data points Correlation data of experimental 23 A) B) C) Correlation coefficient Least-square linear fit Linear regression using data transformation 24 Case I: Strong, linear relationship between x and y Case II: Weak/no relationship Case III: Pure chance => Use correlation coefficient, rxy to determine Case III 25 Given as n rxy x x y y i 1 i i 2 2 x x y y i i i 1 i 1 n n 1/ 2 where 1 rxy 1 +1 means positive slope (perfectly linear relationship) -1 means negative slope (perfectly linear relationship) 0 means no linear correlation 26 In practice, we use special Table (using critical values of rt) to determine Case III. If from experimental value of |rxy| is equal or more than rt as given in the Table, then linear relationship exists. If from experimental value of |rxy| is less than rt as given in the Table, then only pure chance => no linear relationship exists. 27 To get best straight line on the plot: Simple approach: ruler & eyes More systematic approach: least squares ◦ Variation in the data is assumed to be normally distributed and due to random causes ◦ To get Y = ax + b, it is assumed that Y values are randomly vary and x values have no error. 28 For each value of xi, error for Y values are Then, the sum of squared errors is ei Yi yi n n 2 E Yi yi axi b yi i 1 2 i 1 29 Minimising this equation and solving it for a & b, we get a b n xi yi xi yi n xi2 xi 2 2 x i yi xi xi yi n x xi 2 i 2 30 Substitute a & b values into Y = ax + b, which is then called the least-squares best fit. To measure how well the best-fit line represents the data, we calculate the standard error of estimate, given by 2 S y,x y i b y1 a x1 y1 n2 where Sy,x is the standard deviation of the differences between data points and the best-fit line. Its unit is the same as y. 31 …Is another good measure to determine how well the best-fit line represents the data, using 2 r 2 ax b y 1 y y i i 2 i For a good fit,r 2 must be close to unity. 32 For some special cases, such as y aebx Applying natural logarithm at both sides, gives ln y bx lna where ln(a) is a constant, so ln(y) is linearly related to x. 33 Thermocouples are usually approximately linear devices in a limited range of temperature. A manufacturer of a brand of thermocouple has obtained the following data for a pair of thermocouple wires: T(0C) 20 30 40 50 60 75 100 V(mV) 1.02 1.53 2.05 2.55 3.07 3.56 4.05 Determine the linear correlation between T and V Solution: Tabulate the data using this table: x (0C) No 1 2 3 4 5 6 7 x y x y(mV) 20 30 40 50 60 75 100 53.57 1.02 1.53 2.05 2.55 3.07 3.56 4.05 i x ( x i -33.57 -23.57 -13.57 -3.57 6.43 21.43 46.43 1127.04 555.61 184.18 12.76 41.33 459.18 2155.61 y y i y 2 -1.53 2.33 -1.02 1.03 -0.50 0.25 0.00 0.00 0.52 0.27 1.01 1.03 1.50 2.26 4535.71 7.17 x ) 2 y i ( x i x ) ( y y ) 51.27 23.98 6.75 -0.01 3.36 21.70 69.78 2.55 176.82 n rxy i x x y y i 1 i i n n 2 2 x x y y i i i 1 i 1 1/ 2 rxy= 0.980392 Another example The following measurements were obtained in the calibration of a pressure transducer: a. Determine the best fit straight line b. Find the coefficient of determination for the best fit Voltage DP H2O 0.31 1.96 0.65 4.20 0.75 4.90 0.85 5.48 0.91 5.91 1.12 7.30 1.19 7.73 1.38 9.00 1.52 9.90 xi2 0.0961 0.4225 0.5625 0.7225 0.8281 1.2544 1.4161 1.9044 2.3104 9.517 xi 0.31 0.65 0.75 0.85 0.91 1.12 1.19 1.38 1.52 8.68 sum () a n xi yi xi yi n xi2 xi 2 x y x x y b n x x 2 i i i 2 i i 2 i i yi 1.96 4.2 4.9 5.48 5.91 7.3 7.73 9 9.9 56.38 xiyi 0.6076 2.73 3.675 4.658 5.3781 8.176 9.1987 12.42 15.048 61.8914 yi2 3.8416 17.64 24.01 30.0304 34.9281 53.29 59.7529 81 98.01 402.503 a= 6.560646 b= -0.062934 Y=6.56x-0.06 From the result before we can find coeff of determination r2 by tabulating the following values xi yi 0.31 0.65 0.75 0.85 0.91 1.12 1.19 1.38 1.52 sum () 1.96 4.2 4.9 5.48 5.91 7.3 7.73 9 9.9 (Yi-yi)2 0.000118 0.000002 0.001802 0.001130 0.000008 0.000225 0.000203 0.000085 0.000086 0.003659 (yi-y)2 18.53 4.26 1.86 0.62 0.13 1.07 2.15 7.48 13.22 49.31 ax b y 1 y y 2 r 2 i i 2 i r2= 0.999926 Experimental Uncertainty Analysis End of Lecture 3 39