Pershing, Validity and Reliability in Questionnaire Development


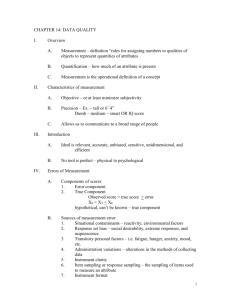

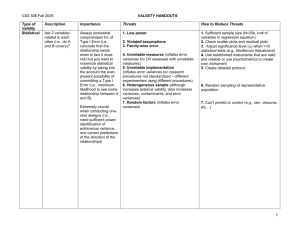

Questionnaire Development: Measuring Validity & Reliability
James A. Pershing, Ph.D., Indiana University

Definition of Validity
- The instrument measures what it is intended to measure, in a way that is:
  - Appropriate
  - Meaningful
  - Useful
- Validity enables a performance analyst or evaluator to draw correct conclusions.

Types of Validity
- Face
- Content
- Criterion
  - Concurrent
  - Predictive
- Construct

Face Validity
- "It looks OK" ("Looks good to me")
- The instrument looks as if it measures what it is supposed to measure.
- Items are reviewed for appropriateness by:
  - The client
  - Sample respondents
- This is the least scientific validity measure.

Content-Related Validity
- An organized review of the format and content of the instrument, carried out by subject matter experts
- Balance: definition, sample, content, format
- Comprehensiveness:
  - An adequate number of questions per objective
  - No voids in content

Criterion-Related Validity
- How one measure stacks up against another
- Independent sources that measure the same phenomena
- Concurrent = at the same time
- Predictive = now and in the future
- The goal is a high correlation, usually expressed as a correlation coefficient (0.70 or higher is generally accepted as representing good validity).

Example comparison of two instruments:

| Subject | Instrument A: Task Inventory | Instrument B: Observation Checklist |
|---|---|---|
| John | yes | no |
| Mary | no | no |
| Lee | yes | no |
| Pat | no | no |
| Jim | yes | yes |
| Scott | yes | yes |
| Jill | no | yes |

Construct-Related Validity
- A theory exists explaining how the concept being measured relates to other concepts.
- The theory generates predictions (Prediction 1, Prediction 2, Prediction 3, ... Prediction n), and each one is tested and confirmed.
- Look for positive or negative correlations.
- Testing often takes place over time and in multiple settings.
- Results are usually expressed as a correlation coefficient (0.70 or higher is generally accepted as representing good validity).

Definition of Reliability
- The degree to which measures obtained with an instrument are consistent measures of what the instrument is intended to measure
- Sources of error:
  - Random error: unpredictable error, primarily affected by sampling techniques. To reduce it:
    - Select more representative samples
    - Select larger samples
  - Measurement error: error due to the performance of the instrument itself

Types of Reliability
- Test-retest
- Equivalent forms
- Internal consistency
  - Split-half approach
  - Kuder-Richardson approach
  - Cronbach alpha approach

Test-Retest Reliability
- Administer the same instrument twice to exactly the same group after a time interval has elapsed.
- Calculate a reliability coefficient (r) to indicate the relationship between the two sets of scores:
  - r of +.51 to +.75 = moderate to good
  - r over +.75 = very good to excellent

Equivalent Forms Reliability
- Also called alternate or parallel forms
- The instruments are administered to the same group at the same time.
- The forms vary in:
  - Stem: order, wording
  - Response set: order, wording
- Calculate a reliability coefficient (r) to indicate the relationship between the two sets of scores:
  - r of +.51 to +.75 = moderate to good
  - r over +.75 = very good to excellent

Internal Consistency Reliability
- Split-half
  - Break the instrument or its subparts in half, treating the halves like two instruments
  - Correlate the scores on the two halves
- Kuder-Richardson (KR)
  - Treats the instrument as a whole
  - Compares the variance of total scores with the sum of the item variances
- Cronbach alpha
  - Like the KR approach
  - Used when data are scaled or ranked
- For these tests, it is best to consult a statistics book and a statistical consultant, and to use computer software for the calculations.

Reliability and Validity
Five targets illustrate how the two relate:
- So unreliable as to be invalid
- Fair reliability and fair validity
- Fair reliability but invalid
- Good reliability but invalid
- Good reliability and good validity

The bull's-eye in each target represents the information that is desired. Each dot represents a separate score obtained with the instrument. A dot in the bull's-eye indicates that the information obtained (the score) is the information the analyst or evaluator desires.

Comments and Questions
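The correlation coefficients these slides rely on (criterion-related validity, test-retest and equivalent-forms reliability, and the split-half approach) can be illustrated with a minimal Python sketch. It assumes yes/no answers are coded 1/0 (for 0/1 data, Pearson's r is the phi coefficient) and that the split-half divides odd-numbered from even-numbered items. The function names and the `responses` matrix are hypothetical, made up for illustration. The Spearman-Brown step-up, which adjusts the half-length correlation to the full instrument length, is a standard addition not mentioned on the slides. As the slides advise, statistical software is the better choice for real analyses.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson correlation between two equal-length lists of scores."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length score lists of length >= 2")
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("r is undefined when one set of scores has no variation")
    return sxy / sqrt(sxx * syy)


def describe_reliability(r):
    """Label r using the bands on the test-retest / equivalent-forms slides."""
    if r > 0.75:
        return "very good to excellent"
    if r >= 0.51:
        return "moderate to good"
    return "below the ranges listed"


def split_half_reliability(rows):
    """Odd/even split-half r, stepped up with the Spearman-Brown formula.

    rows: one list of item scores per respondent (hypothetical layout).
    """
    odd = [sum(row[0::2]) for row in rows]   # items 1, 3, 5, ...
    even = [sum(row[1::2]) for row in rows]  # items 2, 4, 6, ...
    r_half = pearson_r(odd, even)
    return 2 * r_half / (1 + r_half)          # Spearman-Brown correction


# Criterion-related validity with the slide's data, yes = 1, no = 0.
# Order: John, Mary, Lee, Pat, Jim, Scott, Jill
task_inventory = [1, 0, 1, 0, 1, 1, 0]  # Instrument A
observation = [0, 0, 0, 0, 1, 1, 1]     # Instrument B
r = pearson_r(task_inventory, observation)
print(f"r = {r:.2f}, meets 0.70 guideline: {r >= 0.70}")  # r = 0.17, meets 0.70 guideline: False

# Hypothetical 4-item yes/no responses (rows = respondents).
responses = [
    [1, 1, 1, 1],
    [1, 1, 1, 0],
    [1, 1, 0, 0],
    [1, 0, 0, 0],
    [0, 0, 0, 0],
]
sh = split_half_reliability(responses)
print(f"split-half = {sh:.2f} ({describe_reliability(sh)})")  # split-half = 0.88 (very good to excellent)
```

With the slide's data, the two instruments agree only weakly (r of about 0.17), well short of the 0.70 guideline for good validity.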
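The Kuder-Richardson and Cronbach alpha approaches both compare the sum of the item variances with the variance of respondents' total scores. The Python sketch below uses the KR-20 form (one of several Kuder-Richardson formulas) and the standard alpha formula, alpha = k / (k - 1) * (1 - sum of item variances / variance of total scores), where k is the number of items. The function names and sample data are hypothetical. Population variances are used throughout; sample variances would give the same result, because the n / (n - 1) factor cancels in the ratio.

```python
def population_variance(values):
    """Variance dividing by n (not n - 1)."""
    m = sum(values) / len(values)
    return sum((v - m) ** 2 for v in values) / len(values)


def kr20(rows):
    """Kuder-Richardson formula 20 for items scored 0/1 (rows = respondents)."""
    n, k = len(rows), len(rows[0])
    totals = [sum(row) for row in rows]        # each respondent's total score
    sum_pq = 0.0
    for item in zip(*rows):                    # one tuple of scores per item
        p = sum(item) / n                      # proportion scoring 1 on this item
        sum_pq += p * (1 - p)                  # variance of a 0/1 item
    return (k / (k - 1)) * (1 - sum_pq / population_variance(totals))


def cronbach_alpha(rows):
    """Cronbach's alpha for scaled or ranked items (rows = respondents)."""
    k = len(rows[0])
    totals = [sum(row) for row in rows]
    sum_item_var = sum(population_variance(item) for item in zip(*rows))
    return (k / (k - 1)) * (1 - sum_item_var / population_variance(totals))


# Hypothetical yes/no data (1 = yes), 5 respondents x 4 items.
yes_no = [[1, 1, 1, 1], [1, 1, 1, 0], [1, 1, 0, 0], [1, 0, 0, 0], [0, 0, 0, 0]]
print(f"KR-20 = {kr20(yes_no):.2f}")            # KR-20 = 0.80
print(f"alpha = {cronbach_alpha(yes_no):.2f}")  # alpha = 0.80

# Hypothetical 5-point rating-scale data, 5 respondents x 3 items.
ratings = [[5, 4, 5], [4, 4, 4], [3, 2, 3], [2, 2, 1], [1, 2, 1]]
print(f"alpha = {cronbach_alpha(ratings):.2f}")  # alpha = 0.95
```

For 0/1 items, alpha and KR-20 give the same value, because p(1 - p) is exactly the population variance of a 0/1 item; alpha is what extends the idea to scaled or ranked data.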