Lecture 2: T-tests Part 1

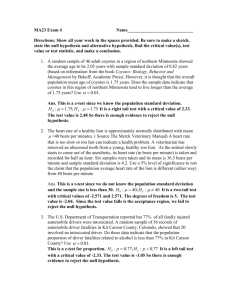

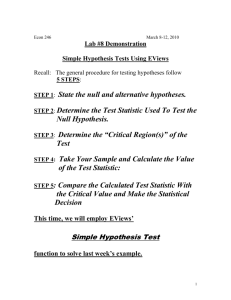

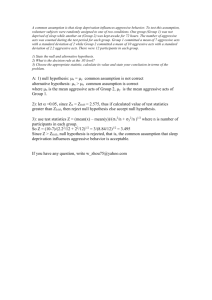

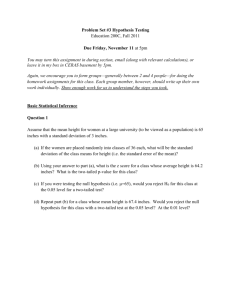

T-tests Part 1
PS1006 Lecture 2
Sam Cromie

What has this got to do with statistics?

Overview
• Review: hypothesis testing
• The need for the t-test
• The logic of the t-test
• Application to:
  – Single sample designs
  – Two group designs
    • Within group designs (Related samples)
    • Between group designs (Independent samples)
• Assumptions
• Advantages and disadvantages

Generic Hypothesis testing steps
1. State hypotheses
   – Null and alternative
2. State alpha value
3. Calculate the statistic (z score, t score, etc.)
4. Look the statistic up on the appropriate probability table
5. Reject or fail to reject the null hypothesis

Generic form of a statistic

$$\text{statistic} = \frac{\text{Data} - \text{Hypothesis}}{\text{Error}} = \frac{\text{What you got} - \text{what you expected (null)}}{\text{The unreliability of your data}}$$

$$z = \frac{\text{Individual score} - \text{Population mean}}{\text{Population standard deviation}}$$

Hypothesis testing with an individual data point…
1. State null hypothesis
2. State α value
3. Convert the score to a z score
4. Look z score up on z score tables

$$z = \frac{x - \mu}{\sigma}$$

In this case we have…

| | Sample | Population |
|---|---|---|
| Data of interest | Individual score, n = 1 | Mean known |
| Error | | Standard deviation known |

Hypothesis testing with one sample (n>1) …
• 100 participants saw a video containing violence
• Then they free associated to 26 homonyms with aggressive & non-aggressive forms, e.g., pound, mug
• Mean number of aggressive free associates = 7.10
• Suppose we know that without an aggressive video the mean (μ) = 5.65 and the standard deviation (σ) = 4.5
• Is 7.10 significantly larger than 5.65?

In this case we have…

| | Sample | Population |
|---|---|---|
| Data of interest | Mean, n = 100 | Mean known |
| Error | | Standard deviation known |

Hypothesis testing with one sample (n>1) …
• Use the sample mean instead of x in the z score formula
• Use the standard error of the sample mean instead of the population standard deviation

$$z = \frac{x - \mu}{\sigma} \quad \text{becomes} \quad z = \frac{\bar{X} - \mu}{\sigma_{\bar{X}}}, \quad \text{where } \sigma_{\bar{X}} = \frac{\sigma}{\sqrt{n}}$$

n = the number of scores in the sample

Standard error
• $\sigma_{\bar{X}}$ is the standard deviation of a sampling distribution, but is known as a standard error for the purposes of differentiation
• If we know σ then $\sigma_{\bar{X}}$ can be calculated using the formula

$$\sigma_{\bar{X}} = \frac{\sigma}{\sqrt{n}}$$

Sampling distribution
• http://www.ruf.rice.edu/~lane/stat_sim/sampling_dist/index.html
• Will always be narrower than the parent population
• The more samples that are taken the more normal the distribution
• As sample size increases standard error decreases

Back to video violence
• H0: μ = 5.65
• H1: μ ≠ 5.65 (two-tailed)
• Calculate p for a sample mean of 7.10 assuming μ = 5.65
• Use z from the normal distribution, as the sampling distribution can be assumed to be normal
• Calculate z

$$z = \frac{\bar{X} - \mu}{\sigma_{\bar{X}}} = \frac{7.1 - 5.65}{4.5 / \sqrt{100}} = \frac{1.45}{.45} = 3.22$$

• If $|z| > 1.96$, reject H0
• 3.22 > 1.96, so the difference is significant

But mostly we do not know σ
• E.g. do penalty-takers show a preference for right or left?
• 16 penalty takers; 60 penalties each; null hypothesis = 50% or 30 each way
• Result: mean of 39 penalties to the left; is this significantly different?
• µ = 30, but how do we calculate the standard error without σ?

In this case we have…

| | Sample | Population |
|---|---|---|
| Data of interest | Mean, n = 16 | Hypothesis: μ = 30 |
| Error | | Variance ? |
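
The z test for the video-violence example earlier in this section can be checked with a few lines of Python. This is a minimal sketch using only the standard library; the function name `one_sample_z` is an illustrative choice, not something from the lecture, and the two-tailed p value is computed from the standard normal distribution instead of being looked up in a table.

```python
import math
from statistics import NormalDist


def one_sample_z(sample_mean, mu, sigma, n):
    """Return (standard error, z, two-tailed p) for a one-sample z test.

    Assumes the population standard deviation sigma is known.
    """
    se = sigma / math.sqrt(n)            # standard error of the mean
    z = (sample_mean - mu) / se          # (data - hypothesis) / error
    p = 2 * (1 - NormalDist().cdf(abs(z)))  # two-tailed probability
    return se, z, p


# Values from the video-violence example: mean 7.10, mu 5.65, sigma 4.5, n 100
se, z, p = one_sample_z(7.10, 5.65, 4.5, 100)
print(f"standard error = {se:.2f}, z = {z:.2f}, p = {p:.4f}")
print("reject H0" if abs(z) > 1.96 else "do not reject H0")
```

The same function cannot be applied to the penalty-taker data, because σ is not known there; that gap is what motivates the t-score.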

Using s to estimate σ
• Can’t substitute s for σ in a z score because s is likely to be too small
• So we need:
  – a different type of score: a t-score
  – a different type of distribution: Student’s t distribution

T distribution
• First published in 1908 by William Sealy Gosset
• Worked at a Guinness Brewery in Dublin on the best yielding varieties of barley
• Prohibited from publishing under his own name
• So the paper was written under the pseudonym Student

T-test in a nut-shell…
• Allows us to calculate precisely what small samples tell us
• Uses three critical bits of information: mean, standard deviation and sample size

t test for one mean
• Calculated the same way as z except σ is replaced by s
• For the video example we gave before, s = 4.40

$$z = \frac{\bar{X} - \mu}{\sigma_{\bar{X}}} = \frac{\bar{X} - \mu}{\sigma / \sqrt{n}} = \frac{7.1 - 5.65}{4.5 / \sqrt{100}} = \frac{1.45}{.45} = 3.22$$

$$t = \frac{\bar{X} - \mu}{s_{\bar{X}}} = \frac{\bar{X} - \mu}{s / \sqrt{n}} = \frac{7.1 - 5.65}{4.40 / \sqrt{100}} = \frac{1.45}{.44} = 3.30$$

Degrees of freedom
• t distribution is dependent on the sample size and this must be taken into account when calculating p
• Skewness of sampling distribution decreases as n increases
• t will differ from z less as sample size increases
• t based on df where df = n - 1

t table

Two-Tailed Significance Level

| df | .10 | .05 | .02 | .01 |
|---|---|---|---|---|
| 10 | 1.812 | 2.228 | 2.764 | 3.169 |
| 15 | 1.753 | 2.131 | 2.602 | 2.947 |
| 20 | 1.725 | 2.086 | 2.528 | 2.845 |
| 25 | 1.708 | 2.060 | 2.485 | 2.787 |
| 30 | 1.697 | 2.042 | 2.457 | 2.750 |
| 100 | 1.660 | 1.984 | 2.364 | 2.626 |

Statistical inference made
• With n = 100, $t_{.025}(99) = 1.98$
• Because t = 3.30 > 1.98, reject H0
• Conclude that viewing violent video leads to more aggressive free associates than normal

Factors affecting t
• Difference between sample & population means
  – As value increases so t increases
• Magnitude of sample variance
  – As sample variance decreases t increases
• Sample size: as it increases
  – The value of t required to be significant decreases
  – The distribution becomes more like a normal distribution

Application of t-test to Within group designs

t for repeated measures scores
• Same participants give data on two measures
• Someone high on one measure is probably high on the other
• Calculate the difference between first and second score
• Base subsequent analysis on these difference scores. Before and after data are ignored

Example - Therapy for PTSD
• Therapy for victims of psychological trauma: Foa et al. (1991)
  – 9 individuals received Supportive Counselling
  – Measured post-traumatic stress disorder symptoms before and after therapy

| | Before | After | Diff. |
|---|---|---|---|
| | 21 | 15 | 6 |
| | 24 | 15 | 9 |
| | 21 | 17 | 4 |
| | 26 | 20 | 6 |
| | 32 | 17 | 15 |
| | 27 | 20 | 7 |
| | 21 | 8 | 13 |
| | 25 | 19 | 6 |
| | 18 | 10 | 8 |
| Mean | 23.89 | 15.67 | 8.22 |
| St. Dev. | 4.20 | 4.24 | 3.60 |

In this case we have…

| | Sample | Population |
|---|---|---|
| Data of interest | Mean Difference (n = 9) | Hypothesis: μ = 0 |
| Error | S of the Difference | ? |

Results
• The Supportive Counselling group decreased number of symptoms. Was the difference significant?
• If no change, mean of differences should be zero
• So, test the obtained mean of difference scores against μ = 0
• We don’t know σ, so use s and solve for t

Repeated measures t test
• $\bar{D}$ and $s_D$ = mean and standard deviation of differences respectively

$$t = \frac{\bar{X} - \mu}{s_{\bar{X}}} = \frac{\bar{X} - \mu}{s / \sqrt{n}} = \frac{7.1 - 5.65}{4.40 / \sqrt{100}} = \frac{1.45}{.44} = 3.30$$

$$t = \frac{\bar{D} - 0}{s_{\bar{D}}} = \frac{\bar{D}}{s_D / \sqrt{n}} = \frac{8.22}{3.6 / \sqrt{9}} = \frac{8.22}{1.2} = 6.85$$

df = n - 1 = 9 - 1 = 8

Inference made
• With 8 df, $t_{.025} = \pm 2.306$
• We calculated t = 6.85
• Since 6.85 > 2.306, reject H0
• Conclude that the mean number of symptoms after therapy was less than the mean number before therapy
• Infer that supportive counselling seems to work

+ & - of Repeated measures design
• Advantages
  – Eliminate subject-to-subject variability
  – Control for extraneous variables
  – Need fewer subjects
• Disadvantages
  – Order effects
  – Carry-over effects
  – Subjects no longer naïve
  – Change may just be a function of time

t test is robust
• Test assumes that variances are the same
  – Even if the variances are not the same, the test still works pretty well
• Test assumes data are drawn from a normally distributed population
  – Even if the population is not normally distributed, the test still works pretty well
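
Both t calculations in this lecture (the one-sample video example and the repeated-measures PTSD example) can be reproduced with the Python standard library. This is a minimal sketch: the function names `t_from_summary` and `paired_t` are illustrative, and the critical values 1.98 (df = 99) and 2.306 (df = 8) are the two-tailed .05 values quoted in the lecture, hard-coded here and not computed.

```python
import math
from statistics import mean, stdev


def t_from_summary(sample_mean, mu, s, n):
    """One-sample t from summary statistics; returns (t, df)."""
    return (sample_mean - mu) / (s / math.sqrt(n)), n - 1


def paired_t(first, second):
    """Repeated-measures t on difference scores.

    Returns (mean difference, sd of differences, t, df).
    """
    diffs = [a - b for a, b in zip(first, second)]
    n = len(diffs)
    d_bar = mean(diffs)
    s_d = stdev(diffs)                   # sample sd, n - 1 in the denominator
    t = d_bar / (s_d / math.sqrt(n))     # tested against a mean difference of 0
    return d_bar, s_d, t, n - 1


# Video example: mean 7.10, mu 5.65, s 4.40, n 100
t_video, df_video = t_from_summary(7.10, 5.65, 4.40, 100)

# PTSD symptom counts before and after supportive counselling
before = [21, 24, 21, 26, 32, 27, 21, 25, 18]
after = [15, 15, 17, 20, 17, 20, 8, 19, 10]
d_bar, s_d, t_ptsd, df_ptsd = paired_t(before, after)

print(f"video: t({df_video}) = {t_video:.2f}, reject H0: {abs(t_video) > 1.98}")
print(f"PTSD: mean diff = {d_bar:.2f}, sd = {s_d:.2f}, "
      f"t({df_ptsd}) = {t_ptsd:.2f}, reject H0: {abs(t_ptsd) > 2.306}")
```

Working from the raw scores gives t slightly above 6.85, because the lecture rounds the standard error to 1.2 before dividing.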