NUMERICAL ANALYSIS OF

BIOLOGICAL AND

ENVIRONMENTAL DATA

Lecture 9.

Discriminant Analysis

DISCRIMINANT ANALYSIS

Discriminant analysis of two groups

Assumptions of discriminant analysis -

Multivariate normality

Homogeneity

Comparison of properties of two groups

Identification of unknowns -

Picea pollen

Canonical variates analysis (= multiple discriminant analysis) of three or more

groups

Discriminant analysis in the framework of regression

Discriminant analysis and artificial neural networks

Niche analysis of species

Relation of canonical correspondence analysis (CCA) to canonical variates

analysis (CVA)

Generalised distance-based canonical variates analysis

Discriminant analysis and classification trees

Software

IMPORTANCE OF CONSIDERING GROUP STRUCTURE

Visual comparison of

the method used to

reduce dimensions in

(a) an unconstrained

and (b) a constrained

ordination procedure.

Data were simulated

from a multivariate

normal distribution

with the two groups

having different

centroids (6, 9) and (9,

7), but both variables

had a standard

deviation of 2, and the

correlation between

the two variables was

0.9. Note the

difference in scale

between the first

canonical axis (CV1)

and the first principal

component (PC1).

DISCRIMINANT ANALYSIS

1.

Taxonomy – species discrimination

e.g. Iris setosa, I. virginica

2.

Pollen analysis – pollen grain separation

3.

Morphometrics – sexual dimorphism

4.

Geology – distinguishing rock samples

Klovan & Billings (1967) Bull. Canad. Petrol. Geol. 15, 313-330

Discriminant function – linear combination of variables x1 and x2.

z = b1x1 + b2x2

where b1 and b2 are weights attached to each variable that determine the

relative contributions of the variable.

Geometrically – line that passes through where group ellipsoids cut each

other L, then draw a line perpendicular to it, M, that passes through the

origin, O. Project ellipses onto the perpendicular to give two univariate

distributions S1 and S2 on discriminant function M.

X2

Plot of two bivariate distributions, showing

overlap between groups A and B along both

variables X1 and X2. Groups can be distinguished

by projecting members of the two groups onto

the discriminant function line.

z = b1 x 1 + b2 x 2

Schematic diagram indicating

part of the concept underlying

discriminant functions.

Can generalise for three or more variables

m discriminant function

coefficients for m variables

Sw = D = (x1 – x2)

m x m matrix of

pooled variances

and covariances

vector of mean differences

Solve from:

inverse of Sw

= Sw-1 (x1 – x2) = Sw-1D

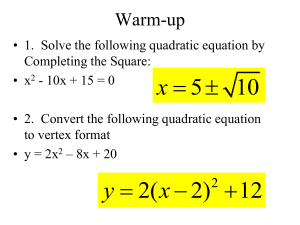

SIMPLE EXAMPLE OF LINEAR DISCRIMINANT

ANALYSIS

Group A

Mean of variable x1

= 0.330

With na individuals

Mean of variable x2

= 1.167

Mean vector

= [0.330 1.167]

Group B

Mean of variable x1

= 0.340

With nb individuals

Mean of variable x2

= 1.210

Mean vector

= [0.340 1.210]

Vector of mean differences (D)

= [-0.010

Variance-covariance matrix

n

Sij =

(x

ik

x i )(x jk x j )

k 1

where xik is the value of variable i for individual k.

-0.043]

Covariance matrix for group A (SA) = 0.00092

and for group B (SB) =

Pooled matrix SW =

-0.00489

0.07566

0.00138

-0.00844

-0.00844

0.10700

SA + SB

na + nb - 2

=

-0.00489

0.00003

-0.00017

-0.00017

0.00231

To solve [SW] [] = [D]

we need the inverse of SW = SW-1 = 59112.280

4312.646

4312.646

747.132

Now SW-1 . D =

59112.280

4312.646 -0.010

=

4312.646

747.132

-0.043

-783.63 x1

-75.62

x2

i.e. discriminant function coefficients are -783.63 for variable x1 and -75.62

for x2.

[z = -783.63 x1 - 75.62 x2 ]

MATRIX INVERSION

Division of one matrix by another, in the sense of ordinary algebraic division,

cannot be performed.

To solve the equation for matrix [X]

[A] . [X] = [B]

we first find the inverse matrix [A], generally represented as [A]-1.

The inverse or reciprocal matrix of [A] satisfies the relationship

[A] . [A]-1 = [I]

where [I] is an identity matrix with zeros in all the elements except the

diagonal where the elements are all 1.

To solve for [X] we multiply both sides by [A]-1 to get

[A]-1 . [A]. [X] = [A]-1 . B

As [A-] . [A] = [I] and [I].[X] = [X]

the above equation reduces to

[X] = [A]-1 . B

If matrix A is

4

10

10

30

to find its inverse we first place an identity matrix [I] next to it.

4

10

10

30

.

1

0

0

1

We now want to convert the diagonal elements of A to ones and the offdiagonal elements to zeros. We do this by dividing the matrix rows by

constants and subtracting the rows of the matrix from other rows, i.e.

1

2.5

0.25

0

Row one is divided by 4 to

0

5

0

1

produce an element a11 = 1

To reduce a21 to zero we now subtract ten times row one from row 2 to give

1

2.5

0.25

0

0

5

-2.5

1

To make a22 = 1 we now divide row two by 5,

1

2.5

0.25

0

0

1

-0.5

0.2

To reduce element a12 to zero, we now subtract 2.5 times row one to give

1

0

1.5

-0.5

0

1

-0.5

0.2

The inverse of A is thus

1.5

-0.5

-0.5

0.2

This can be checked by multiplying [A] by [A]-1 which should yield the identity

matrix I i.e.

1.5

-0.5

-0.5

0.2

.

4

10

10

30

=

1

0

0

1

R.A. Fisher

Can position the means of group A and of group B on the discriminant function

RA = 1x1 + 2x2

Rb = -783.63 x 0.340 + -75.62 x 1.210

= -783.63 x 0.330 + -75.62 x 1.167

= -357.81

= -346.64

D2 = (x1 – x2) Sw-1 (x1 – x2)

We can position individual samples along discriminant axis.

The distance between the means = D2 = 11.17

To test the significance of this we use Hotelling's T2 test for differences

between means =

n a n b D2

with an F ratio of

na + nb – m – 1 T2

R

n a + nb

(na + nb – 2) m

CANOCO

and m and (na + nb – m – 1) degrees of freedom.

ASSUMPTIONS

1. Objects in each group are randomly chosen.

2. Variables are normally distributed within each group.

3. Variance-covariance matrices of groups are statistically

homogeneous (similar size, shape, orientation).

4. None of the objects used to calculate discriminant function is

misclassified.

Also in identification:

5. Probability of unknown object belonging to either group is

equal and cannot be from any other group.

MULTIVARIATE NORMALITY

Mardia

(1970)

Biometrika 57, 519–530

SKEWNESS

b1,m

1

2

n

x

n

n

i 1 j 1

i

x 1 S 1 x j x

3

Significance A = n.b1,m/6 x2 distribution with m(m + 1)(m + 2)/6 degrees of

freedom.

n

KURTOSIS

b2,m

Test significance

2

1

1 1

x

x

S

x

x

i

i

n i 1

B b2,m mm 2/8mm 2 n 2

1

Asymptotically distributed as N(0,1).

Reyment

(1971)

J. Math. Geol. 3, 357-368

Malmgren

(1974)

Stock. Contrib. Geol. 29, 126 pp

Malmgren

(1979)

J. Math. Geol. 11, 285-297

Birks & Peglar

(1980)

Can. J. Bot. 58, 2043-2058

Probability plotting D2 plots.

MULTNORM

Multidimensional

probability plotting. The

top three diagrams show

probability plots (on arithmetic paper) of generalized

distances between two

variables: left, plot of D2

against probability; middle,

plot of D2 against probability; right, a similar plot

after removal of four outlying values and recalculations of D2 values. If

the distributions are

normal, such plots should

approximate to S-shaped.

The third curve is much

closer to being S-shaped

than is the second, so that

we surmise that removal of

the outlying values has

converted the distribution

to normal. Again, however, a judgement as to degree of fit to a curved line is difficult to make

visually. Replotting the second and third figures above on probability paper gives the probability

plots shown in the bottom two diagrams. It is now quite clear that the full data set does not

approximate to a straight line; the data set after removal of four outliers is, on visual inspection

alone, remarkably close to a straight line.

STATISTICAL HOMOGENEITY OF COVARIANCE

MATRICES

Primary causes

Secondary causes

B 2 Nalog e det S det S Nblog e det S det S

a

b

Approximate x2 distribution ½ m(m + 1)d.f. and B distribution with ½ m(m + 1)d.f.

2m3 3m2 m

Na Nb 2

12

2

Campbell (1981) Austr. J. Stat. 23, 21-37

ORNTDIST

COMPARISON OF PROPERTIES OF TWO

MULTIVARIATE GROUPS

ORNTDIST

Reyment

(1969)

J. Math. Geol. 1, 185-197

Reyment

(1969)

Biometrics 25, 1-8

Reyment

(1969)

Bull. Geol. Inst. Uppsala 1, 121-159

Reyment

(1973)

In Discriminant Analysis & Applications (ed. T. Cacoulos)

Birks & Peglar

(1980)

Can. J. Bot. 58, 2043-2058

Gilbert

(1985)

Proc. Roy. Soc. B 224, 107-114

Outliers -

probability plots of D2

gamma plot (m/2 shape parameter)

PCA of both groups separately and test for homogeneity of group matrices

Chi-square probability

plot of generalized

distances. (In this and

subsequent figures of

probability the

theoretical quantities

are plotted along the

x-axis and the ordered

observations along the

y-axis.)

Ordered

observa

-tions

D2

Chi-square probability

plot of generalized

distances

D2

1 = 2

1 2

Lengths

1 2

1 2

TESTS FOR ORIENTATION DIFFERENCES

Anderson (1963) Ann. Math. Stat. 34, 122-148

ORNTDIST

Calculate

n 1 di biS11bi 1 d

bS b 2

i 1 1

i

where n is sample size of dispersion matrix S1, di is eigenvalue i, bi is

eigenvector i of dispersion matrix S2 (larger of the two). This is x2 distributed

with (m – 1) d.f.

If heterogeneous, can test whether due to differences in orientation. If no

differences in orientation, heterogeneity due to differences in size and shape

of ellipsoids.

SQUARED GENERALISED DISTANCE VALUES

Species

Inflation

heterogeneity

Orientation

heterogeneity

Approx

D2a

Heterogenous

D2h

Standar

d D2s

D2h – D2s

Reyment's

D2r

Carcinus maenas

+

+

3.11

3.16

2.60

0.56 (17.7)*

2.60

Artemia salina

+

+

0.77

0.85

0.77

0.08 (9.4)

0.88

Rana esculenta

+

+

0.180

0.182

0.190

0.08 (44.0)

0.30

Rana temporaria

+

-

0.902

0.928

0.887

0.041 (4.4)

1.12

Omocestus

haemorrhoidalis

Kinnekulle

+

+

54.83

54.96

54.63

0.33 (0.6)

59.67

Kinnekulle-Gotland

+

+

0.49

0.49

0.48

0.01 (2.0)

0.55

Öland-Kinnekulle

+

+

1.70

1.70

1.69

0.01 (0.6)

1.72

Chrysemys picta

marginata

(raw data)

+

+

5.56

6.66

5.56

0.10 (1.5)

4.88

* Percentages within parentheses (Reyment (1969) Bull. Geol. Inst. Uppsala 1, 97-119)

GENERALISED STATISTICAL DISTANCES

BETWEEN TWO GROUPS

Mahalanobis

D2

ORNTDIST

= d' S d

where S-1 is the inverse of the pooled variance-covariance matrix, and d is

the vector of differences between the vectors of means of the two samples.

1

Anderson and Bahadur D2 =

2b ' d

1

2

(b' S1 b) (b' S2 b)

1

2

where b = (t S1 (1 t) S2)1 d , S1 and S2 are the respective group

covariance matrices, and t is a scalar term between zero and 1 that is

improved iteratively

Reyment D2 = 2d ' Sr 1 d where Sr is the sample covariance matrix of

differences obtained from the random pairing of the two groups. As N1Dr2/2 =

T2, can test significance.

Average D2 = d ' Sa

1

d where Sa = ½ (S1 + S2 )

Dempster’s directed distances

D(1)2 =

d ' S11 d

and

D(2)2 =

d ' S21 d

Dempster’s generalised distance

D12 =

(d Sw 1) S1 (d Sw 1)t

and

D22 =

(d Sw 1) S2 (d Sw 1)t

Dempster’s delta distance

D2 =

d ' S1 d

S2

na

nb

1 1

na

nb

S1

where S =

IDENTIFICATION OF UNKNOWN OBJECTS

DISKFN, R

Assumption that probability of unknown object belonging to either group only

is equal. Presupposes no other possible groups it could come from.

Closeness rather than either/or identification.

If unknown, u, has position on discriminant function:

Ru 1u1 2u2

then:

2

xau

a u 1 S 1 a u

2

x bu

b u 1 S 1 b u

m degrees of freedom

Birks & Peglar (1980) Can. J. Bot. 58, 2043-2058

Picea glauca (white spruce) pollen

Picea mariana (black spruce) pollen

Quantitative characters of Picea pollen (variables x1 – x7). The means (vertical line), 1

standard deviation (open box), and range (horizontal line) are shown for the reference

populations of the three species.

Results of testing for multivariate skewness

and kurtosis in the seven size variables for

Picea glauca and P. mariana pollen

P. glauca

P. mariana

Skewness

b1,7

4.31

7.67

A

81.21

127.79

º of freedom

84

84

b2,7

59.16

60.55

B

-1.82

-1.09

Kurtosis

The homogeneity of the covariance matrices

based on all seven size variables (x1 – x7, Fig 3)

was tested by means of the FORTRAN IV

program ORNTDIST written by Reyment et al

(1969) and modified by H.J.B Birks. The value

of B2 obtained is 52.85, which for 2 = 0.98 and

28 degrees of freedom is significant (p =

0.003). This indicates that the hypothesis of

homogenous covariance matrices cannot be

accepted. Thus the assumption implicit in

linear discriminant analysis of homogenous

matrices is not justified for these data.

Delete x7 (redundant, invariant variable)

Kullback's test suggests that there is now no

reason to reject the hypothesis that the

covariance matrices are homogenous (B2 =

31.3 which, for 2 = 0.64 and 21 degrees of

freedom is not significant (p = 0.07)). These

results show that when only variables x1 – x6

are considered the assumptions of linear

discriminant analysis are justified. All the

subsequent numerical analyses discussed here

are thus based on variables x1 – x6 only.

Results of testing for multivariate skewness

and kurtosis in the size variables x1 – x6 for

Picea glauca and P. mariana pollen.

P. glauca

P. mariana

Skewness

b1,6

2.99

5.05

A

56.31

74.15

º of freedom

56

56

Kurtosis

b2,6

44.48

45.94

B

-1.91

-1.05

NOTE: None of the values for A or B is significant at the 0.05

probability level.

Representation of the discriminant function for two

populations and two variables. The population means I

and II and associated 95% probability contours are

shown. The vector c~ is the discriminant vector. The

points yI and yII represent the discriminant means for

the two populations. The points (e), (f) and (h)

represent three new individuals to be allocated. The

points (q) and (r) are the discriminant scores for the

individuals (e) and (f). The point (0I) is the

discriminant mean yI.

Alternative representation of the discriminant

function. The axes PCI and PCII represent orthonormal linear combinations of the original variables.

The 95% probability ellipses become 95% probability

circles in the space of the orthonormal variables.

The population means I and II for the discriminant

function for the orthonormal variables are equal to

the discriminant means yI and yII. Pythagorean

distance can be used to determine the distances

from the new individuals to the population means.

CANONICAL VARIATES ANALYSIS

MULTIPLE DISCRIMINANT ANALYSIS

Bivariate plot of

three populations. A

diagrammatic

representation of the

positions of three

populations, A, B and

C, when viewed as

the bivariate plot of

measurements x and

y (transformed as in

fig 20 to equalize

variations) and taken

from the specimens

(a's, b's and c's) in

each population. The

positions of the

populations in

relation to the

transformed

measurements are

shown.

A diagrammatic

representation of

the process of

generalized

distance analysis

performed upon

the data of left

figure; d1, d2, and

d3 represent the

appropriate

distances. D2

A diagrammatic representation of the process of canonical analysis

when applied to the data of top left figure. The new axes ' and "

represent the appropriate canonical axes. The positions of the

populations A, B, and C in relation to the canonical axes are shown.

g groups

g – 1 axes

(comparison between

two means

- 1 degree of freedom

three means

- 2 degrees of freedom)

m variables

If

m < g – 1, only need m axes

i.e. min (m, g – 1)

Dimension reduction technique

An analysis of 'canonical variates' was also made for all six variables

Artemia

measured by GILCHRIST.* The variables are: body length (x1), abdomen

salina

length (x2), length of prosoma (x3), width of abdomen (x4), length of

(brine

furca (x5), and number of setae per furca (x6). (Prosoma = head plus

shrimp) thorax.) The eigenvalues are, in order of magnitude, 33.213, 1.600,

0.746, 0.157, 0.030, -0.734. The first two eigenvalues account for

about 99 percent of the total variation. The equations deriving from

the eigenvalues of the first two eigenvectors are:

E1 = –0.13x1 + 0.70x2 + 0-07x3 – 0.36x4 – 0.35x5 – 0.14x6

E2 = –0.56x1 + 0.48x2 + 0-08x3 – 0.18x4 – 0.20x5 + 0.31x6

By substituting the means for each sample, the sets of mean canonical

variates shown in Table App II.10 (below) were obtained.

14 groups

Six variables

Five localities

35 ‰, 140 ‰

♂, ♀

2669

individuals

CANVAR

R.A. Reyment

Example of the

relationship

between the shape

of the body of the

brine shrimp

Artemia salina and

salinity. Redrawn

from Reyment

(1996). The

salinities are

marked in the

confidence circles

(35‰, respectively,

140‰). The first

canonical variate

reflects geographical variation in

morphology, the

second canonical

variate indicates

shape variation.

The numbers in

brackets after

localities identify

the samples.

♂

♀

Sexual

dimorphism

♂ (green) to

left of ♀

(pink)

Salinity

changes

35‰ 140‰

SOME REVISION

Matrix X(nxm) Y(mxm)

PCA

Y1Y (sum of squares and cross-products matrix)

Canonical variates analysis

X(nxm) g submatrices

X1

X2

X3

Xg

Y1

Y2

Y3

Yg

Y11Y1 Y21Y2 Y31Y3

centring by variable

means for each

submatrix

within group SSP matrix

Yg

= Wi

W W

within-groups SSP matrix

Y 1Y T

total SSP matrix

B T W

between-groups SSP matrix

i

T I u 0

PCA

CVA

B W u 0

T I 0

or

BW

B W 0

1

I u 0

BW 1 I 0

i.e. obvious difference is that BW-1 has replaced T. Number of CVA eigenvalues

= m or g – 1, which ever is smaller. Maximise ratio of B to W.

Canonical variate is linear combination of variables that maximises the ratio

of between-group sum of squares B to within-group sum of squares W.

i.e.

1 u1Bu u1Wu

(cf. PCA

1 = u1Tu )

B T W

Normalised eigenvectors (sum of squares = 1, divide by x) give normalised

canonical variates.

Adjusted canonical variates – within-group degrees of freedom.

1

1 W

u

u u

ng

2

Standardised vectors – multiply eigenvectors by pooled within-group

standard deviation.

Scores

yij uj xi

Dimension reduction technique.

Other statistics relevant

CANVAR, R

1)

Multivariate analysis of variance

Wilks W T

2)

Homogeneity of dispersion matrices

g

i 1

ni

2

W

log e

Wi

x2 distribution

where ni is sample size –1,

W pooled matrix,

Wi is group i matrix

g 1mm 1 2 d.f.

Geometrical interpretation – Campbell & Atchley (1981)

Ellipses for pooled

within-group matrix W

P1, P2 principal

components of W

7 groups, 2

variables

Scatter ellipses and

means

Scale each principal

component to unit

variance (divide by )

Project 7 groups

onto principal

component areas

p1, and P2

PCA of groups means, I

and II

Euclidian space

I and II are canonical

roots.

Reverse from

orthonormal to

orthogonal

Reverse from

orthogonal to original

variables

(as in (c))

(as in (a)

and (b))

Illustration of the rotation and scaling implicit in the calculation of

the canonical vectors.

AIDS TO INTERPRETATION

CANVAR

Plot group means and individuals

Goodness of fit i/i

Plot axes 1 & 2, 2 & 3, 1 & 3

Individual scores and 95% group confidence contours

2 standard deviations of group scores or z /n where z is standardised

normal deviate at required probability level, n is number of individuals in

group. 95% confidence circle, 5% tabulated value of F based on 2 and (n – 2)

degrees of freedom.

Minimum spanning tree of D2

Scale axes to ’s

Total D2 = D2 on axes + D2 on other axes (the latter should be small if the

model is a good fit)

Residual D2 of group means

INTERPRETATION OF RELATIVE IMPORTANCE OF VARIABLE LOADINGS

Weighted loadings

- multiply loading by pooled W standard deviation

Structure coefficients

- correlation between observed variables and

canonical variables

S = Rc

1

c

D 2u

u1Wu

where D = diagonal element of W

W = within-group matrix

u = normalised eigenvectors

Very important for interpretation because canonical coefficients are ‘partial’ –

reflect an association after influence of other variables has been removed.

Common to get highly correlated variables with different signed canonical

correlations or non-significant coefficients when jointly included but highly

significant when included separately.

Canonical variate ‘biplots’.

CANVAR

Canonical variates analysis of the Manitoba set

1

2

Canonical variate loadings

Picea

-0.026

-0.029

Pinus

-0.034

-0.03

Betula

-0.026

-0.034

Alnus

-0.032

-0.036

Salix

-0.018

-0.002

Gramineae

-0.047

-0.006

Cyperaceae

-0.026

-0.047

Chenopodiaceae

-0.072

-0.052

Ambrosia

-0.018

-0.029

Artemisia

-0.063

-0.048

Rumex/Oxyria

-0.034

-0.045

Lycopodium

-0.027

-0.126

Ericaceae

-0.033

-0.092

Tubuliflorae

-0.111

-0.062

Larix

-0.047

-0.02

Corylus

-0.066

0.018

Populus

-0.047

0.009

Quercus

-0.056

-0.025

Fraxinus

-0.076

-0.093

Canonical variate group means

Tundra

3.361

-1.336

Forest-tundra

3.11

-2.401

Open coniferous forest

2.643

-0.982

Closed conif. Forest A

-0.805

3.134

Closed conif. Forest B

2.126

1.089

Closed conif. Forest C

1.393

0.873

Mixed forest (uplands)

-0.676

0.711

Mixed forest (lowlands)

0.538

2.836

Deciduous forest

-12.415

0.312

Aspen parkland

-12.468

-0.161

Grassland

-18.039

-5.932

Eignevalue

% total variance accounted for

Cummulative % variance

accounted for

3012.9

62.4

62.4

588.7

12.1

74.5

3

4

5

6

0.047

0.028

0.021

0.012

0.063

0.022

0.036

0.036

0.058

0.033

0.017

-0.055

0.071

0.093

0.027

0.081

0.052

0.037

0.088

0.016

0.019

0.008

0.027

-0.012

0.016

0.015

0.041

0.014

0.023

0.022

-0.011

0.029

-0.003

0.021

-0.096

0.007

0.009

-0.014

0.035

0.041

0.035

0.039

0.021

0.015

0.033

0.01

0.009

0.047

0.046

0.019

0.033

0.104

0.057

0.106

-0.006

0.034

0.169

-0.009

-0.014

-0.003

-0.033

0.019

-0.014

-0.012

0.009

-0.065

-0.013

-0.021

-0.025

-0.05

-0.034

0.049

-0.026

-0.073

-0.018

0.054

-2.303

2.702

1.249

-3.553

2.128

-1.595

0.689

-0.787

1.72

-0.045

0.506

-1.338

0.332

0.812

1.266

0.372

0.733

-1.395

-0.985

-7.372

1.192

4.475

-0.837

-0.619

0.185

2.169

0.44

1.114

0.846

-2.738

3.655

-2.451

2.827

0.247

-0.984

-0.781

-1.355

0.305

0.118

0.388

0.788

-0.841

-1.069

3.848

432.2

8.9

83.4

518.1

6.5

89.9

302.1

6.2

96.1

92.5

1.9

98

Birks et al. (1975) Rev. Palaeobot. Palynol. 20, 133-169

Modern pollen

Modern vegetation

OTHER INTERESTING EXAMPLES

Nathanson

(1971)

Appl. Stat. 20, 239-249 Astronomy

Green

(1971)

Ecology 52, 542-556 Niche

Margetts et al.

(1981)

J Human Nutrition 35, 281-286 Dietary Patterns

Carmelli & Cavalli-Sforza

(1979)

Human Biology 51, 41-61 Genetic Origin of Jews

Oxnard

(1973)

Form and Pattern in Human Evolution - Chicago

ASSUMPTIONS – Williams (1983) Ecology 64, 1283-1291

Homogeneous matrices and multivariate normality.

Heterogeneous matrices common.

1.

Compare results using different estimates of within-group matrices –

pool over all but one group, pool subsets of matrices. Robust estimation

of means and covariances. Influence functions. Campbell (1980) Appl.

Stat. 29, 231–237.

2.

Calculate D2 for each pair of groups using pooled W or pooled for each

pair. Do two PCOORDs of D2 using pooled and paired matrices. Compare

results. Procrustes rotation.

3. Determine D2 twice for each pair of groups using each matrix in

turn. Degree of symmetry and asymmetry examined. Several

ordinations possible based on different D2 estimates.

Procrustes rotation.

4. Campbell (1984) J. Math. Geol. 16, 109–124 extension with

heterogeneous matrices – weighted between group and

likelihood ratio – non-centrality matrix generalisations.

Outliers – Campbell (1982) Appl. Stat. 31, 1–8. Robust CVA –

incidence functions, linearly bounded for means, quadratically

bounded or exponentially weighted for covariances.

Campbell & Reyment (1980) Cret. Res. 1, 207–221.

DISCRIMINANT ANALYSIS A DIFFERENT FORMULATION

Response

variables

Predictor

variables

Class 1

Class 2

1

0

1

0

1

0

0

1

0

1

0

1

x1 x2 x3 ... xm

Regression with 0/1 response variable and predictor variables.

DISCRIMINANT FUNCTION FOR SEXING FULMARINE

PETRELS FROM EXTERNAL MEASUREMENTS

(Van Franketer & ter Braak (1993) The Auk, 110: 492-502)

Lack plumage characters by which sexes can be recognised.

Problems of geographic variation in size and shape.

Approach:

1.

A generalised discriminant function from data from sexed birds of a

number of different populations

2.

Population – specific cut points without reference to sexed birds

Measurements

Five species of fulmarine petrels

HL – head

length

Antarctic petrel Northern fulmar

Cape petrel

Southern fulmar

Snow petrel

CL – bill length

BD – bill depth

TL – tarsus

length

STEPWISE MULTIPLE REGRESSION

Ranks characters according to their discriminative power, provides

estimates for constant and regression coefficient b1 (character

weight) for each character.

For convenience, omit constant and divide the coefficient by the

first-ranked character.

Discriminant score = m1 + w2m2 + ..... + wnmn

where mi = bi/b1

Cut point – mid-point between ♂ and ♀ mean scores.

Reliability tests

1. Self-test

- how well are the two sexes discriminated? Ignores

bias, over-optimistic

2. Cross-test

- divide randomly into training set and test set

3. Jack-knife (or leave-one-out – LOO)

- use all but one bird, predict it, repeat for all birds.

Use n-1 samples. Best reliability test.

Small data-sets - self-test

OVERESTIMATE

- cross-test

UNDERESTIMATE

- jack-knife

RELIABLE

MULTISAMPLE DISCRIMINANT ANALYSIS

If samples of sexed birds in different populations are small but different

populations have similar morphology (i.e. shape) useful to estimate

GENERALISED DISCRIMINANT from combined samples.

1.

2.

Cut-point established with reference to sex

(determined by dissection)

WITH SEX

Cut-point without reference to sex

NO SEX

Decompose mixtures of distributions into their underlying

components. Maximum likelihood solution based on assumption of

two univariate normal distributions with unequal variances.

Expectation – maximization (EM) algorithm to estimate means 1 and

2 and variances 1 and 2 of the normals.

Cut point is where the two normal densities intersect.

xs = (22 - 12)-1 {122 - 212 + 12 [(1 - 2)2

+ (12 - 22) log n 12/22]0.5}

DISCRIMINANT ANALYSIS AND ARTIFICIAL

NEURAL NETWORKS

Artificial neural networks

Input vectors

Output vectors

>1 Predictor

1 or more Responses

Regression

>1 Variable

2 or more Classes

(or 1/0 Responses)

Discriminant

analysis

DISCRIMINANT ANALYSIS BY NEURAL

NETWORKS

Malmgren & Nordlund (1996) Paleoceanography 11, 503–512

Four distinct volcanic ash zones in late Quaternary sediments of Norwegian Sea.

Zone A B C D

Basaltic and Rhyolithic types

8 classes x 9 variables (Na2O, MgO, Al2O3, SiO, K2O, CaO, TiO2, MnO, FeO)

183 samples

(A). Diagram showing the general

architecture of a 3-layer back

propagation network with five

elements in the input layer, three

neurons in the hidden layer, and two

neurons in the output layer. Each

neuron in the hidden and output

layers receives weighted signals

from the neurons in the previous

layer. (B) Diagram showing the

elements of a single neuron in a

back propagation network. In

forward propagation, the incoming

signals from the neurons of the

previous layer (p) are multiplied

with the weights of the connections

(w) and summed. The bias (b) is

then added, and the resulting sum is

filtered through the transfer

function to produce the activity (a)

of the neuron. This is sent on to the

next layer or, in the case of the last

layer, represents the output. (C) A

linear transfer function (left) and a

sigmoidal transfer function (right).

4 zones

ABCD

2 types

Rhyolite

Basalt

Configuration of grains referable to the 4 late Quaternary volcanic ash zones, A through D, in the Norwegian

sea described by Sjøholm et al [1991] along first and second canonical variate axes. The canonical variate

analysis is based on the geochemical composition of the individual ash particles (nine chemical elements were

analyzed: Na2O, MgO, Al2O3, SiO2, K2O, CaO, TiO2, MnO, and FeO). Two types of grains, basaltic and rhyolithic,

were distinguished within each zone. This plane, accounting for 98% of the variability among group mean

vectors in nine-dimensional space (the first axis represents 95%), distinguishes basaltic and rhyolithic grains.

Apart from basaltic grains from zone C, which may be differentiated from such grains from other zones, grains

of the same type are clearly overlapping with regard to the geochemical composition among the zones.

Malmgren & Nordlund (1996)

Changes in error rate (percentages of misclassifications in the test set) for a three-layer

back propagation network with increasing number of neurons when applied to training-test

set 1 (80:20% training test partition). Error rates were determined for an incremental series

of 3, 6, 9, …., 33 neurons in the hidden layer. Error rates were computed as average rates

based on ten independent trials with different initial random weights and biases. The error

rates represent the minimum error obtained for runs of 300, 600, 900, and up to 9000

epochs. The minimum error rate (9.2%) was obtained for a configuration with 24 neurons in

the hidden layer, although there is a major reduction already at nine neurons.

Malmgren & Nordlund (1996)

Changes in error rate (percentages of misclassifications) in the training set with increasing

number of epochs in the first out of ten trials in training set 1. This network had 24 neurons

in the hidden layer, and the network error was monitored over 30 subsequent intervals of

300 training epochs each. During training, the error rate in the training set decreased from

18.5% after 300 epochs to a minimum of 2.1% after 7500 epochs. The minimum error rate in

the test set (10.8%) was reached after 3300 epochs.

Malmgren & Nordlund (1996)

CRITERION OF NEURAL NETWORK SUCCESS

OTHER TECHNIQUES USED

ERROR RATE of predictions in independent test set

that is not part of the training set.

Linear discriminant analysis (LDA)

Cross-validation 5 random test sets

Training set

37 particles

146 particles

Error rate of misclassification (%) for each test set

Average rate of misclassification (%) for five test

sets

k-nearest neighbour

technique) (=KNN)

(= modern analog

Soft independent modelling of close

analogy (SIMCA)

(close to PLS with classes)

NETWORK CONFIGURATION & NUMBER OF

TRAINING CYCLES

CONCLUSIONS

24 neurons

Average error rate NN network 9.2%

Training set

– minimum in error rate

7500 cycles

Test set

– minimum in error rate

(10.8%) 3300 cycles

i.e. 33.6 out of 37 particles correctly

classified

LDA 38.4%

K-NN 30.8%

SIMCA 28.7%

Error rates (percentages of misclassifications in the test sets) for each of the five independent training-test

set partitions (80% training set and 20% test set members) and average error rates over the five partitions

for a three-layer back propagation (BP) neural network, linear network, linear discriminant analysis, the knearest neighbours technique (k-NN) and SIMCA. Neural network results are based on ten independent trials

with different initial conditions. Error rates for each test set are represented by the average of the

minimum error rates obtained during each of the ten trials, and the fivefold average error rates are the

averages of the minimum error rates for the various partitions.

Error rates in each of five training-test set partitions, fivefold average error rates

in the test sets, and 95% confidence intervals for the fivefold average error rates

for the techniques discussed in this paper.

Neural N

The fivefold average error rates were determined as the average error rates over five

independent training and test sets using 80% training and 20% test partitions. Error rates for

the neural networks are averages of ten trials for each training-test set partition using

different initial conditions ((random initial weights and biases). The minimum fivefold error

rate for the back propagation (BP) network was obtained using 24 neurons in the hidden

layer. Apart from regular error rates for soft independent modelling of class analogy (SIMCA

1), the total error rates for misclassified observations that could be referable to one or

several other groups are reported under SIMCA 2. LDA represents linear discriminant analysis

and k-NN, k-nearest neighbour.

Average error rates (percentages) for basaltic and rhyolithic

particles in ash zones A through D

As before, error average error rates over five experiments based on 80% training set

members and 20% test set members. N is the range of sample sizes in these experiments.

As in the use of ANN in regression, problems of over-fitting and overtraining and reliable model testing occur. n-fold cross-validation

needed with an independent test set (10% of observations), an

optimisation data set (10%), and a training or learning set (80%).

repeated n-times (usually 10).

ANN a computationally slow way of implementing two- or manygroup discriminant analysis. No obvious advantages.

Allows use of 'mixed' data about groups (e.g. continuous, ordinal,

qualitative, presence/absence). But can use mixed data in canonical

analysis of principal co-ordinates if use the Gower coefficient for

mixed data (see Lecture 12 for details).

NICHE ANALYSIS OF SPECIES

Niche region in m-dimensional space where each axis is environmental

variable and where particular species occurs.

Green

(1971)

(1974)

Ecology 52, 543–556

Ecology 55, 73–83

CVA

345 samples, 32 lakes, 10 species (= groups), 9 environmental

variables

[CCA

Y

10 species

x

345 samples

X

9 environmental variables

x

345 samples]

Multivariate niche analysis with temporally varying environmental factors

partialled out time-varying variables – Green 1974.

[CCA

Y

i.e. partial CCA]

X

Z covariables

Lake Winnipeg

Lake Manitoba

All DF I and DF II discriminant scores for all Lake

Winnipeg samples fall within the white area on

the left. All scores for all Lake Manitoba samples

fall within the white area on the right. The

scales of the two ordinates and the two abscissas

are identical. The 50% contour ellipses for each

of the four species most frequently collected

from Lake Winnipeg are shown for the two lakes.

Two of the species were not collected from Lake

Manitoba.

Each shaded area represents a lake for

which all points, defined by DF I and DF IV

discriminant scores for all samples from

that lake, fall within the area. Lake

numbers refer to Table 1. The two

concentric ellipses contain 50 and 90% of

all samples of Anodonta grandis and are

calculated from the means, variances and

covariance in DF I – DF II space for the

samples containing A. grandis.

A. Normal distributions along the discriminant axis for

the three species in the unmodified discriminant

analysis of artificial data.

B. The species 0.5 probability ellipses in the space

defined by DF (Space) I and DF (Time) I in the

discriminant-covariance analysis of artificial data.

SPACE

TIME

The distribution of Rat River

benthic species means in the space

defined by DF (Space) I and DF

(Time) I. Genera represented are

listed in Table 4 and abbreviations

of trophic categories are defined.

Table 4. Summary by genus of Rat River benthos

analysis. AH-D = active herbivore-detritivore,

PH-D = passive herbivore-detritivore, C =

carnivore. Calculation of niche breadth and size is

based on standardized and normalized data with

standardized and normalized discriminant

function vectors.

CANONICAL VARIATES ANALYSIS – via CANOCO

Only makes sense if number of samples n >> m or g.

i.e. more samples than either number of variables or number of groups.

(Axes are min (m, g – 1))

In practice:

1.

No problem with environmental data (m usually small).

2.

Problems with biological data (m usually large and > n).

3.

Use CCA or RDA to display differences in species composition between

groups without having to delete species. Code groups as +/– nominal

environmental variables and do CCA or RDA = MANOVA.

4.

Can use CVA to see which linear combinations of environmental variables

discriminate best between groups of samples – CANOCO.

Use groups 1/0 as ‘species data’

Use env variables as ‘env data’

Responses

Explanatory vars

Species scores – CVA group means

Sample scores LC – individual samples

Biplot of environmental variables

Permutation tests

Partial CVA one-way multivariate analysis of covariance.

RELATION OF CANONICAL CORRESPONDENCE

ANALYSIS TO CANONICAL VARIATES ANALYSIS

(= Multiple Discriminant Analysis)

Green (1971, 1974) - multiple discriminant analysis to quantify multivariate

Hutchinsonian niche of species

Ca

1

CVA II

2

3

4

depth

CVA I

organic content

particle size

Carnes & Slade (1982) Niche analysis metrics

Niche breadth = variance or standard deviation of canonical scores

Niche overlap

Chessel et al (1987), Lebreton et al (1988) CCA & CVA

CVA

- measurements of features of objects belonging to different groups

- CVA linear combinations of those features that show maximum

discrimination between groups, i.e. maximally separate groups

Replace 'groups' by 'niches of species'.

CCA

- linear combinations of external features to give maximum

separation of species niches.

But

in CVA features of the individuals are measured

in CCA features of the sites are measured

If

SPECIES DATA IN CCA ARE COUNTS OF INDIVIDUALS AT SITES, LINK

BETWEEN CVA and CCA is complete by TREATING EACH

INDIVIDUAL COUNTED AS A SEPARATE UNIT, i.e. as a separate row

in data matrix.

DATA FOR EACH INDIVIDUAL COUNTED ARE THEN THE SPECIES TO WHICH IT

BELONGS AND THE MEASUREMENTS OF THE FEATURES OF THE SITE AT

WHICH IT OCCURS.

CVA CCA except for scaling.

CVA CCA – main difference is that unit of analysis is the individual in CVA

whereas it is the site in CCA. Can be coded to be the individual and hence

do CVA via CCA for niche analysis.

i.e.

Site

Species

Site

pH Ca Mg Cl

A

B

C

D

1

1

0

0

0

1

2

1

0

0

0

2

3

1

0

0

1

3

4

0

1

0

0

4

5

0

1

0

1

5

6

0

0

1

0

6

7

0

0

1

1

7

Y

Environment

X

Species scores = CVA group means Sample scores LC = Individual observations

Biplot of environmental variables

Hill's scaling -2 (Inter-species distances)

DISTANCES BETWEEN GROUP MEANS (SPECIES)

= MAHALONOBIS DISTANCES

Biplot scores for the environmental variables form a biplot with the

group means for each of the environmental variables, and with the

individual sample points for each of the environmental variables.

Sample scores that are LC environmental variables are scaled so that

the within-group variance equals 1.

Permutation tests can be used to see if the differences between

groups are statistically significant.

With covariables present, can do partial CVA = multivariate analysis

of covariance. Tests for discrimination between groups in addition to

the discrimination obtainable with the covariables.

Technical points about using CANOCO to implement CVA

1. The eigenvalues reported by CANOCO are atypical for CVA

Recalculate as = /(1-)

e.g.

1 = 0.9699

2 = 0.2220

0.9699/(1 – 0-9699)

0.2220/(1 – 0.2220)

= 32.2

= 0.28

CANOCO

2. Hill's scaling and a focus on inter-sample distances gives

distance between group means to be Mahalonobis distances.

Triplot based on a CVA of the Fisher's Iris data

DEFINITION OF CCA BY MAXIMUM NICHE

SEPARATION

For a standardized gradient x, i.e. a gradient for which

n

yi

yi 2

.

xi 0

x i 1

i 1 y

i 1 y

n

(1)

the weighted variance of species centroids {uk} (k = 1 ... m) of equation (1)

is defined by

y k 2

uk

k 1 y

m

(2)

Now let x be a synthetic gradient, i.e. a linear combination of environmental

variables

p

(3)

xi c j zij

j 1

with zij the value of environmental variable j (j=1....p) in site i and cj its

coefficient or weight {cj}, i.e. the weights that result in a gradient x for

which the weighted variance of the species scores (5) is maximum.

Mathematically, the synthetic gradient x can be obtained by solving an

eigenvalue problem; x is the first eigenvector x1 with eigenvalue the

maximum . The optimized weights are termed canonical coefficients.

Each subsequent eigenvector xs = (xls, ..., xns)’ (s>1) maximises (2) subject to

constraint (3) and the extra constraint that it is uncorrelated with previous

eigenvectors, i.e.

y

i

i

xit xis 0(t s)

CANOCO calculates

1.

Species standard deviations (= tolerances) of scores per axis.

2.

Root mean square standard deviation across the four axes as summary

niche breadth.

3.

N2 for each species ‘effective number of occurrences of a species’.

Species 1000, 1, 1, .1 – WA determined by 1000, so effective number of

occurrences N2 close to 1.

y ik

N2

y

i 1 k

n

n

where

2

y ik

i 1

1

GENERALISED DISTANCE-BASED CANONICAL

VARIATES ANALYSIS

(= CANONICAL ANALYSIS OF PRINCIPAL CO-ORDINATES)

See lecture 7 and Anderson, M.J. & Willis, T.J. (2003) Ecology 84, 511-525

CAP - www.stat.auckland.ac.nz/~mja

Canonical variates analysis of principal co-ordinates based on any symmetric

distance matrix including permutation tests.

Y

response variable data (n x m)

X

predictor variables ('design matrix') to represent group membership as

1/0 variables

Result is a generalised discriminant analysis (2 groups) or generalised canonical

variates analysis (3 or more groups). Finds the axis or axes in principal coordinate space that best discriminate between the a priori groups.

Input raw data, number of groups, and number of objects in each group.

Output includes results of 'leave-one-out' classification of individual objects to

groups, the misclassification error for t principal co-ordinates axes, and

permutation results to test the null hypothesis that there are no significant

differences in composition between the a priori groups (trace statistic and axis

one eigenvalue).

Plots of the proportion

of correct allocations of

observations to groups

(= 1 minus the

misclassification error)

with increases in the

number of principal

coordinate axes (m)

used for the CAP

procedure on data from

the Poor Knights Islands

at three different times

on the basis of (a) the

Bray-Curtis dissimilarity

measure on data

transformed to y' = ln(y

+ 1), and (b) the chisquare distance

measure.

SUMMARY OF CONSTRAINED ORDINATION METHODS

Methods of constrained ordination relating response variables, Y (species abundance

variables) with predictor variables, X (such as quantitative environmental variables or

qualitative variables that identify factors or groups as in ANOVA).

Name of methods

(acronyms, synonyms)

Distance

measure

preserved

Relationship of ordination

axes with original variables

Takes into account

correlation structure

Redundancy Analysis (RDA)

Euclidean

distance

Linear with X, linear with

fitted values, Ŷ = X(X'X)-1 X'Y

... among variables in X,

but not among variables

in Y

Canonical Correspondence

Analysis (CCA)

Chi-square

distance

Linear with X, approx

unimodal with Y, linear with

^

fitted

values, Y*

... among variables in X,

but not among variables

in Y

Canonical Correlation

Analysis (CCorA, COR)

Mahalanobis

distance

Linear with X, linear with Y

... among variables in X,

and among variables in Y

Canonical Discriminant

Analysis (CDA; Canonical

Variate Analysis CVA;

Discriminant Function

Analysis, DFA)

Mahalanobis

distance

Linear with X, linear with Y

... among variables in X,

and among variables in Y

Canonical Analysis of

Principal Coordinates (CAP;

Generalized Discriminant

Analysis)

Any chosen

distance or

dissimilarity

Linear with X, linear with

Qm; unknown with Y

(depends on distance

measure)

... among variables in X,

and among principal

coordinates Qm

CRITERION FOR DRAWING ORDINATION AXES

RDA •

Finds axis of maximum correlation between Y and some linear

combination of variables in X (i.e., multivariate regression of Y on

X, followed by PCA on fitted values, Ŷ).

CCA •

Same as RDA, but Y are transformed to Y* and weights (square roots

of row sums) are used in multiple regression.

CCorA •

Finds linear combination of variables in Y and X that are maximally

correlated with one another.

CVA •

Finds axis that maximises differences among group locations. Same

as CCorA when X contains group identifiers. Equivalent analysis is

regression of X on Y, provided X contains orthogonal contrast

vectors.

CAP •

Finds linear combination of axes in Qm and in X that are maximally

correlated, or (if X contains group identifiers) finds axis in PCO

space that maximises differences among group locations.

DISCRIMINANT ANALYSIS AND

CLASSIFICATION TREES

Recursive partition of data on the basis of set of predictor variables

(in discriminant analysis a priori groups or classes, 1/0 variables).

Find the best combination of variable and split threshold value that

separates the entire sample into two groups that are internally

homogeneous as possible with respect to species composition.

Lindbladh et al. 2002. American Journal of Botany 89: 1459-1476

Picea pollen in eastern North America.

Three species

P. rubens

P. mariana

P. glauca

R

Lindbladh et al. (2002)

Lindbladh et al. (2002)

Cross-validation of classification tree

(419 grains in training set, 103 grains in test set)

Binary trees - Picea glauca

vs rest

Picea mariana

vs rest

Picea rubens

vs rest

In identification can have several outcomes

e.g. not identifiable at all

unequivocally P. rubens

P. rubens or P. mariana

Can now see which grains can be equivocally identified in test set, how

many are unidentifiable, etc. Assessment of inability to be identified

correctly.

Test set (%)

Correct (100, 010, 001)

Equivocal (101, 110, 011, 111)

Unidentifiable (000)

P. glauca

P. mariana

P. rubens

79.3

70.0

75.9

0.0

2.7

2.5

20.7

27.3

21.6

Unidentifiable about the same for each species, worst in P. mariana.

Applications

to fossil data

Cutting classification trees down to size

With 'noisy' data, when classes overlap, can easily have a tree

that fits the data well but is adapted too well to features of that

data set. Trees can easily be too elaborate and over-fit the

training set.

Pruning trees by cross-validation (10-fold cross-validation) using

some measure of tree complexity as a penalty.

Plot relative error against the size of tree and select the largest

value within one standard error of the minimum. Useful cut-off

where increased complexity of data does not give concomitant

pay-off in terms of predictive power.

Pruned tree often as good as full unpruned tree

R

SOFTWARE

DISKFN

MULTNORM

ORNTDIST

CANVAR

CANOCO & CANODRAW

CAP

R