Linear Regression - The Joy of Stats


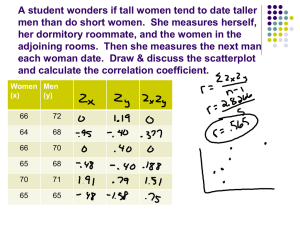

Linear Regression (Bivariate)

Creating a Model to Predict an Outcome [1]

If we wanted to predict the win/loss record for each team in a league, what variables would we consider? This would require a very complex model, because many variables are involved. For starters, we will look at a two-variable model with one predictor variable and one outcome variable: for example, using the past performance of the quarterbacks to predict the teams’ performances.

Creating a Model [2]

How would you operationalize quarterback performance as a single interval-ratio predictor variable? How would you operationalize a team’s win/loss record as an interval-ratio variable?

What is the Question?

Are these two variables related? Does knowing about the distribution of the predictor variable (or IV) allow us to estimate values of the outcome variable (or DV)? Can we write the equation of a line to represent the relationship? Are these estimates any better than just guessing the mean of the DV distribution?

What Type of Variables?

Both variables should be at the interval-ratio level of measurement. Examples?

Examples: Two-Variable Questions

Can we estimate individuals’ weights if we know their heights? Can we estimate how long it takes a person to get to class (time) if we know how far away that person lives (distance)? If we know students’ high school GPAs, can we estimate (or predict) their college GPAs?

Examples: Places or Organizations as the UNITS OF ANALYSIS (cases)

Can we estimate countries’ infant mortality rates if we know the number of physicians per 1,000 people? Are female literacy rates related to male life expectancies, for countries? Are cities’ unemployment rates related to their homicide rates? Is the number of books in the libraries of various colleges a good predictor of the incomes of the alumni from each of those colleges?

First Step: Univariate Analysis

Look at the distribution of each variable separately.
Use histograms, boxplots, descriptives, and other SPSS/PASW functions (e.g., Analyze–Statistics–Explore). A very skewed or otherwise “non-normal” variable may not be suitable for linear regression.

Second Step: The Scatterplot

Put the IV (predictor variable) on the x-axis and the DV (outcome variable) on the y-axis. Each point is a case located by its X score and its Y score (an ordered pair). Does the pattern of points look “sort of” linear? Add the fit line to the chart. What does it mean for a point (case) to be above or below the line?

Direction of the Relationship: Positive and Negative Slopes

A positive relationship will show up as a line with positive slope, from lower left to upper right. A negative or inverse relationship will show up as a line with negative slope, from upper left to lower right. Whether a relationship is positive or negative will be apparent from the sign of R, the correlation coefficient.

Pearson’s R: The Correlation Coefficient [1]

If the plot looks “sort of linear,” find R (Pearson’s correlation coefficient), which ranges from -1 to +1. A value of 0 means no linear relationship, -1 means a perfect negative or inverse relationship, and +1 means a perfect positive relationship.

Correlation Coefficient [2]

The correlation coefficient is NOT a percentage or proportion.

R = ∑ (Zx · Zy) / N

R expresses the strength and the direction of the relationship.

The Direction of the Relationship: Positive

The maximum, +1, is reached if Zx = Zy for every case. In a positive relationship, positive Z-scores tend to be multiplied together for a positive product, and negative Z-scores tend to be multiplied together, which also gives a positive product. An example is heights and weights.

The Direction of the Relationship: Negative

The minimum, -1, is obtained when Zx = -Zy for every case. In a negative relationship, the products tend to involve two Z-scores with opposite signs (one negative and one positive), so each such product is negative. An example from the country data set is literacy rate and infant mortality rate.
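The Z-score formula for R can be checked by hand with a few lines of Python. This is only a minimal sketch: the height and weight values are invented for illustration, the function names `z_scores` and `pearson_r` are hypothetical, and the standard deviation divides by N so that it matches the formula R = ∑ (Zx · Zy) / N given above. SPSS/PASW computes the same coefficient for you.

```python
from math import sqrt

def z_scores(values):
    """Convert raw scores to Z-scores using the population SD (divide by N)."""
    n = len(values)
    mean = sum(values) / n
    sd = sqrt(sum((v - mean) ** 2 for v in values) / n)
    return [(v - mean) / sd for v in values]

def pearson_r(x, y):
    """Pearson's R as the mean of the products of paired Z-scores."""
    zx, zy = z_scores(x), z_scores(y)
    return sum(a * b for a, b in zip(zx, zy)) / len(x)

# Hypothetical data, invented for illustration only.
heights_cm = [150, 160, 165, 170, 180, 185]
weights_kg = [52, 58, 63, 70, 78, 85]

r = pearson_r(heights_cm, weights_kg)
print(f"R for heights and weights = {r:.3f}")  # positive relationship

# When Zx = Zy for every case, R reaches its maximum of +1;
# when Zx = -Zy for every case, R reaches its minimum of -1.
r_max = pearson_r([1, 2, 3, 4], [10, 20, 30, 40])
r_min = pearson_r([1, 2, 3, 4], [40, 30, 20, 10])
print(round(r_max, 6), round(r_min, 6))
```

Swapping in two columns from your own data set should reproduce the R that appears in the SPSS/PASW correlation output.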
Interpreting R

Not all texts agree on how to interpret the strength of R. See Garner (2010, p. 173) for a commonly used interpretation.

R²: The Coefficient of Determination

Square R to obtain R², which is called the coefficient of determination. R² ranges from 0 to 1, and it can be read as a proportion. (Or move the decimal point two places to the right, and read it as a percent.) It reveals what proportion of the variation in the outcome variable was predicted by the predictor variable.

R² is a Proportion between Variances

There are three variances:
Total variance (difference between the observed Y and the mean of Y).
Explained or regression variance (difference between the estimated Y and the mean of Y).
Unexplained, residual, or error variance (difference between the estimated and the observed Y).

R² is the ratio of explained (regression) variance to total variance. See Figure 19 in Garner (2010), p. 177.

Why is R² So Important?

If R² is 0, it means that the predictor variable is worthless as a predictor. Our best estimate of the outcome variable (DV) remains the mean of the DV. In the scatterplot, this situation would look like a flat fit line at the mean of the Y distribution. A large R² means that the linear model provides good estimates of the dependent variable, better than guessing the mean.

Why is There an ANOVA in My Regression Analysis?

The ANOVA “box” we see in the middle of the regression output is a test (F-test) for the significance of R². It is testing the null hypothesis that the predictor variable does NOT predict any of the variation we found in the outcome variable’s distribution.

R² is a Proportion

R² = regression variance / total variance

It answers these questions: How good is my model (the regression line) for predicting the distribution of the dependent (outcome) variable? How close are the observed Ys to the Y′s estimated in the linear model?

The Regression Coefficients

Our linear model of the relationship of the two variables is written as the equation of a line:
Y = a + bX

The y-intercept (constant) is a. The slope of the line is b. We are mostly interested in b.

The Table of Coefficients

In SPSS/PASW output, the table of coefficients shows the constant term and the slope coefficient. (They are under the heading “B”.) It shows both the unstandardized and the standardized slope coefficients. The standardized coefficients are called the betas or beta-weights, and are based on the Z-scores for each of the two distributions. Each coefficient is tested for significance with a t-test. The null hypothesis is that beta = 0.

The Regression Model [1]

In the real world, the constant term is often meaningless, and we are interested in it only for writing the equation. We want to know if b is significant. If it is not, the linear model does not help us predict the outcome (and R and R² will also NOT be significant). If it is, we can write the equation with either a standardized or an unstandardized coefficient.

The Regression Model [2]

The unstandardized coefficients express the relationship in terms of “real world” units of measurement, e.g., feet, kilos, metres, inches, minutes, literacy percentage points, and books in libraries. The standardized coefficient expresses the relationship in terms of the Z-scores of the two variables.

Positive and Negative (Inverse) Relationships [1]

If the slope coefficient is a positive number, it expresses a positive relationship between the variables: more of one is associated with more of the other. (For example, more study time, higher GPA.) If the slope coefficient is a negative number, it expresses a negative or inverse relationship between the variables: more of one is associated with less of the other. (For example, more binge drinking, lower GPA.)

Positive and Negative [2]

Positive relationships will have a positive slope for the line in the graph, a positive slope coefficient, and a positive R.
Negative relationships will have a negative slope for the line in the graph, a negative slope coefficient, and a negative R. (Warning: This negative R is missing its minus sign in some parts of SPSS/PASW output.) R² is always 0 or positive.

The End

Now we have our linear model BASED ON THE AVAILABLE DATA. If R, R², and the slope coefficients are significant, we have improved our ability to predict the outcome. Our estimates, Y′, are better than just guessing the mean of the Y distribution. We can return to our first question and start the analysis: Is the past performance of the quarterbacks a good predictor of their teams’ records in the coming season?
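To see how the pieces of the regression output fit together, the quantities discussed above can be computed by hand. The sketch below is illustrative only: the distance and travel-time values are invented, all variable names are hypothetical, and it uses ordinary least squares for a single predictor. It computes the constant a, the slope b, R, the three sums of squares behind R², the F statistic from the ANOVA box, and the t statistic for the slope. It does not compute significance levels, which SPSS/PASW reports for you.

```python
from math import sqrt

# Hypothetical data, invented for illustration only:
# distance to class (km) and travel time (minutes).
distance_km = [1, 2, 3, 5, 8, 10]
time_min = [6, 9, 14, 20, 31, 42]

n = len(distance_km)
mean_x = sum(distance_km) / n
mean_y = sum(time_min) / n

# Sums of squares and cross-products around the means.
sxx = sum((x - mean_x) ** 2 for x in distance_km)
syy = sum((y - mean_y) ** 2 for y in time_min)  # total variation in Y
sxy = sum((x - mean_x) * (y - mean_y)
          for x, y in zip(distance_km, time_min))

b = sxy / sxx               # unstandardized slope
a = mean_y - b * mean_x     # y-intercept (constant)
r = sxy / sqrt(sxx * syy)   # Pearson's R; with one predictor it equals beta

# Estimated values Y' and the explained / unexplained variation.
predicted = [a + b * x for x in distance_km]
ss_regression = sum((yp - mean_y) ** 2 for yp in predicted)
ss_residual = sum((y - yp) ** 2 for y, yp in zip(time_min, predicted))

r_squared = ss_regression / syy           # explained / total
mean_sq_error = ss_residual / (n - 2)     # residual variance estimate
f_stat = ss_regression / mean_sq_error    # F with 1 and n - 2 df
t_slope = b / sqrt(mean_sq_error / sxx)   # t-test of H0: slope = 0

print(f"Y' = {a:.2f} + {b:.2f}X")
print(f"R = {r:.3f}, R squared = {r_squared:.3f}")
print(f"F = {f_stat:.1f}, t for slope = {t_slope:.2f}")
```

With a single predictor, squaring the t statistic for the slope gives the F statistic, which is why the ANOVA test and the slope's t-test lead to the same conclusion in a bivariate model.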