Lecture 1: Overview

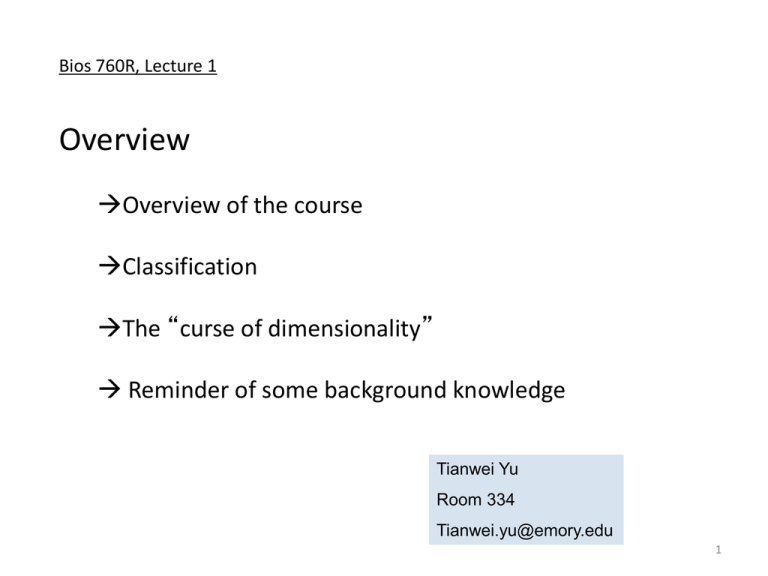

Bios 760R, Lecture 1

Overview

Overview of the course

Classification

The “curse of dimensionality”

Reminder of some background knowledge

Tianwei Yu

Room 334

Tianwei.yu@emory.edu

1

Overview

Lecture 1: Overview

Lecture 2: Bayesian decision theory

Lecture 3: Density estimation

Lecture 4: Linear machine

Lecture 5: Neural network

Lecture 6: Support vector machine

Lecture 7: Tree and forest

Lecture 8: Bump hunting

Lecture 9: Boosting

Lecture 10: Model generalization

Lecture 11: Similarity measures, hierarchical clustering, subspace clustering

Lecture 12: Center-based & model-based clustering

Lecture 13: PCA, SIR

Lecture 14: ICA, PLSDA

Focus of the course: classification, clustering, dimension reduction.

2

Overview

References:

Textbook:

The Elements of Statistical Learning. Hastie, Tibshirani & Friedman.

Other references:

Pattern Classification. Duda, Hart & Stork.

Data Clustering: Theory, Algorithms, and Applications. Gan, Ma & Wu.

Applied Multivariate Statistical Analysis. Johnson & Wichern.

Evaluation:

Three projects (30% each)

Class participation (10%)

3

Overview

Machine learning / data mining:

Supervised learning (“direct data mining”): classification, estimation, prediction

Unsupervised learning (“indirect data mining”): clustering, association rules, description, dimension reduction and visualization

Semi-supervised learning

Modified from Figure 1.1 of Data Clustering by Gan, Ma and Wu.

4

Overview

In supervised learning, the problem is well-defined:

Given a set of observations $\{x_i, y_i\}$, estimate the density $\Pr(Y, X)$.

Usually the goal is to find the model/parameters that minimize a loss.

A common loss is the expected prediction error (written here for squared-error loss):

$$\mathrm{EPE}(f) = E[(Y - f(X))^2]$$

It is minimized at

$$f(x) = E(Y \mid X = x)$$

Objective criteria exist to measure the success of a supervised learning mechanism.

5

Overview

In unsupervised learning, there is no output variable; all we observe is a set $\{x_i\}$.

The goal is to infer Pr(X) and/or some of its properties.

When the dimension is low, nonparametric density estimation is possible.

When the dimension is high, we may need to find simple properties without density estimation, or apply strong assumptions to estimate the density.

There are no objective criteria to evaluate the outcome.

Heuristic arguments are used to motivate the methods.

A reasonable explanation of the outcome is expected from the subject field of study.

6

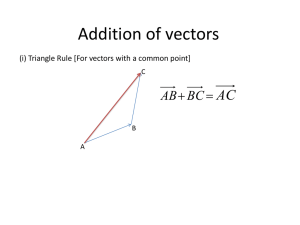

Classification

The general scheme.

An example.

7

Classification

In most cases, a single feature is not enough to generate a good classifier.

8

Classification

Two extremes: overly rigid and overly flexible classifiers.

9

Classification

Goal: an optimal trade-off between model simplicity and training set performance.

This is similar to AIC / BIC / …… model selection in regression.

10

Classification

An example of the overall scheme involving classification:

11

Classification

A classification project: a systematic view.

12

Curse of Dimensionality

Bellman R.E., 1961.

In $p$ dimensions, to get a hypercube with volume $r$, the edge length needed is $r^{1/p}$.

In 10 dimensions, to capture 1% of the data to get a local average, we need 63% of the range of each input variable ($0.01^{1/10} \approx 0.63$).
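The 63% figure follows directly from the edge-length formula. A minimal sketch, assuming data spread uniformly over a unit hypercube (the function name `edge_length` is illustrative and not from the lecture):

```python
def edge_length(fraction: float, p: int) -> float:
    """Edge length of a sub-cube of the unit hypercube in p dimensions
    whose volume equals `fraction` (assumes uniformly distributed data)."""
    return fraction ** (1.0 / p)


# Fraction of each variable's range needed to capture 1% of the data.
for p in (1, 2, 10, 100):
    print(f"p = {p:3d}: edge length = {edge_length(0.01, p):.3f}")
```

As p grows the required edge length approaches 1, so a "local" neighborhood ends up spanning almost the whole range of every variable.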

13

Curse of Dimensionality

In other words,

To get a “dense” sample, if we need N = 100 samples in 1 dimension, then we need $N = 100^{10}$ samples in 10 dimensions.

In high dimensions, the data are always sparse and do not support density estimation.

More data points are closer to the boundary than to any other data point, and prediction is much harder near the edge of the training sample.
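Both points can be put into numbers. The sketch below assumes N points uniformly distributed in a p-dimensional unit ball centered at the origin, for which the median distance from the origin to the closest data point has the closed form $(1 - (1/2)^{1/N})^{1/p}$ (this setup follows the textbook by Hastie, Tibshirani & Friedman; the function names are illustrative):

```python
def required_samples(n_1d: int, p: int) -> int:
    """Samples needed in p dimensions to match the sampling density
    that n_1d samples give in one dimension."""
    return n_1d ** p


def median_nearest_distance(p: int, n: int) -> float:
    """Median distance from the origin to the closest of n points drawn
    uniformly from the p-dimensional unit ball (assumed setup)."""
    return (1.0 - 0.5 ** (1.0 / n)) ** (1.0 / p)


print(required_samples(100, 10))
for p in (1, 2, 10):
    print(p, round(median_nearest_distance(p, 500), 3))
```

With N = 500 and p = 10 the median nearest point is more than halfway to the boundary of the ball, so most points sit nearer the edge than to any neighbor.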

14

Curse of Dimensionality

Estimating a 1D density with 40 data points. Standard normal distribution.

15

Curse of Dimensionality

Estimating a 2D density with 40 data points. 2D normal distribution; zero mean; the covariance matrix is the identity matrix.

16

Curse of Dimensionality

Another example: the EPE of the nearest-neighbor predictor.

To find E(Y|X=x), take the average of the data points close to a given x, i.e. the top k nearest neighbors of x.

This assumes f(x) is well approximated by a locally constant function.

When N is large, the neighborhood is small and the prediction is accurate.
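The k-nearest-neighbor average described above can be sketched in a few lines. This is an illustrative implementation using Euclidean distance; the function name `knn_predict`, the toy function sin(x), and the noise level are assumptions made for the example:

```python
import math
import random


def knn_predict(x0, xs, ys, k):
    """Estimate E(Y | X = x0) by averaging the responses of the k
    training points closest to x0 in Euclidean distance."""
    order = sorted(range(len(xs)), key=lambda i: math.dist(xs[i], x0))
    return sum(ys[i] for i in order[:k]) / k


# Toy 1-D example: noisy observations of f(x) = sin(x).
rng = random.Random(0)
xs = [(rng.uniform(0.0, 3.0),) for _ in range(500)]
ys = [math.sin(x[0]) + rng.gauss(0.0, 0.1) for x in xs]
print(knn_predict((1.0,), xs, ys, k=25), math.sin(1.0))
```

In one dimension 500 points make the 25-point neighborhood narrow, so the local-constant assumption holds well. In high dimensions the same k neighbors lie much farther from x0.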

17

Curse of Dimensionality

Just a reminder: the expected prediction error contains variance and bias components.

Under the model $Y = f(X) + \varepsilon$:

$$
\begin{aligned}
\mathrm{EPE}(x_0) &= E[(Y - \hat f(x_0))^2] \\
&= E[\varepsilon^2 + 2\varepsilon (f(x_0) - \hat f(x_0)) + (f(x_0) - \hat f(x_0))^2] \\
&= \sigma^2 + E[(f(x_0) - \hat f(x_0))^2] \\
&= \sigma^2 + E[\hat f(x_0) - E(\hat f(x_0))]^2 + [E(\hat f(x_0)) - f(x_0)]^2 \\
&= \sigma^2 + \mathrm{Var}(\hat f(x_0)) + \mathrm{Bias}^2(\hat f(x_0))
\end{aligned}
$$
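The decomposition can be checked by simulation. The sketch below is only an illustration under assumed settings: the true value f(x0) = 2, Gaussian noise with σ = 0.5, and a deliberately biased estimator (the mean of 20 training responses at x0, shrunk by a factor of 0.8). None of these choices come from the lecture.

```python
import random


def simulate_epe(truth=2.0, sigma=0.5, n_train=20, shrink=0.8,
                 n_rep=5000, seed=0):
    """Monte Carlo estimate of EPE(x0) and of its three components."""
    rng = random.Random(seed)
    estimates, sq_errors = [], []
    for _ in range(n_rep):
        # Fresh training set, then a shrunken-mean estimate of f(x0).
        train = [truth + rng.gauss(0.0, sigma) for _ in range(n_train)]
        f_hat = shrink * sum(train) / n_train
        # Independent new response at x0.
        y_new = truth + rng.gauss(0.0, sigma)
        estimates.append(f_hat)
        sq_errors.append((y_new - f_hat) ** 2)
    mean_est = sum(estimates) / n_rep
    epe = sum(sq_errors) / n_rep
    variance = sum((e - mean_est) ** 2 for e in estimates) / n_rep
    bias_sq = (mean_est - truth) ** 2
    return epe, sigma ** 2, variance, bias_sq


epe, noise, variance, bias_sq = simulate_epe()
print("simulated EPE:           ", epe)
print("sigma^2 + Var + Bias^2:  ", noise + variance + bias_sq)
```

The two printed numbers agree up to Monte Carlo error, and the shrinkage shows the trade-off: it lowers the variance of the estimator at the cost of a nonzero bias.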

18

Curse of Dimensionality

We have talked about the curse of dimensionality in the sense of density estimation.

In a classification problem, we do not necessarily need density estimation.

Generative model: cares about the class density functions.

Discriminative model: cares about the boundary.

Example: classifying belt fish and carp. Looking at the length/width ratio is enough. Why should we care how many teeth each kind of fish has, or what shape its fins are?

19

High-throughput biological data

We talk about the “curse of dimensionality” when N is not >> p.

In bioinformatics, usually N < 100 and p > 1000.

How to deal with this N << p issue? It is assumed that most predictors do not contain information to predict Y.

Dramatically reduce p before model-building.

Filter genes based on:

variation.

normal/disease test statistic.

projection.

functional groups/network modules.

……

For most of this course, we will pretend the N << p issue doesn’t exist. Some methods are robust against large numbers of nuisance variables.
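As one concrete instance of the filtering step, the sketch below keeps the features (genes) with the largest sample variance. It illustrates only the "variation" criterion from the list above; the function names and the toy data are assumptions, not the course's procedure.

```python
def sample_variance(values):
    """Unbiased sample variance of a list of numbers."""
    m = sum(values) / len(values)
    return sum((v - m) ** 2 for v in values) / (len(values) - 1)


def top_variance_features(rows, keep):
    """rows: list of N samples, each a list of p feature values.
    Returns the sorted column indices of the `keep` features with the
    largest sample variance."""
    p = len(rows[0])
    variances = [sample_variance([row[j] for row in rows]) for j in range(p)]
    ranked = sorted(range(p), key=lambda j: variances[j], reverse=True)
    return sorted(ranked[:keep])


# Toy data: 3 samples, 3 features; feature 0 is constant.
rows = [[1.0, 0.0, 5.0],
        [1.0, 10.0, 6.0],
        [1.0, -10.0, 4.0]]
print(top_variance_features(rows, keep=2))
```

A variance filter does not look at Y, so it can be applied before any train/test split. A filter based on a normal/disease test statistic uses the labels and would need to be repeated inside each cross-validation fold.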

Reminder of some results for random vectors

The multivariate Gaussian distribution:

The covariance matrix (not limited to the Gaussian):

* A Gaussian is fully defined by its mean vector and covariance matrix (first and second moments).
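For reference, the standard forms of the two quantities named above, written for a $p$-dimensional random vector $X$ with mean vector $\mu$ and covariance matrix $\Sigma$ (the symbols $p$, $\mu$ and $\Sigma$ are assumed notation):

```latex
% Multivariate Gaussian density
f(x) = (2\pi)^{-p/2} \, |\Sigma|^{-1/2}
       \exp\!\left\{ -\tfrac{1}{2} (x - \mu)' \Sigma^{-1} (x - \mu) \right\}

% Covariance matrix (defined for any distribution with finite second moments)
\Sigma = \mathrm{Cov}(X) = E\left[ (X - \mu)(X - \mu)' \right]
```

The density depends on the data only through $\mu$ and $\Sigma$, which is why the first two moments fully determine a Gaussian.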

21

Reminder of some results for random vectors

The correlation matrix:

Relationship with covariance matrix:

$$V^{1/2} \rho V^{1/2} = \Sigma, \qquad V^{1/2} = \mathrm{diag}\left[\sqrt{\sigma_{ii}}\right]$$
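The relationship between the correlation matrix ρ and the covariance matrix Σ can be sketched as a pair of conversions. This is a plain-Python illustration on nested lists; the function names are assumptions made for the example.

```python
import math


def cov_to_corr(sigma):
    """rho = V^{-1/2} Sigma V^{-1/2}, where V^{1/2} = diag(sqrt(sigma_ii))."""
    k = len(sigma)
    sd = [math.sqrt(sigma[i][i]) for i in range(k)]
    return [[sigma[i][j] / (sd[i] * sd[j]) for j in range(k)]
            for i in range(k)]


def corr_to_cov(rho, sd):
    """Sigma = V^{1/2} rho V^{1/2}, given the standard deviations sd."""
    k = len(rho)
    return [[sd[i] * rho[i][j] * sd[j] for j in range(k)] for i in range(k)]


sigma = [[4.0, 2.0],
         [2.0, 9.0]]
rho = cov_to_corr(sigma)
print(rho)
print(corr_to_cov(rho, [2.0, 3.0]))  # recovers sigma up to rounding
```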

22

Reminder of some results for random vectors

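Quadratic form:

$$x'Ax = \sum_{i=1}^{k} \sum_{j=1}^{k} a_{ij} x_i x_j$$

A linear combination of the elements of a random vector:

2-D example:

$$
\begin{aligned}
\mathrm{Var}(aX_1 + bX_2) &= E[(aX_1 + bX_2) - (a\mu_1 + b\mu_2)]^2 \\
&= E[a(X_1 - \mu_1) + b(X_2 - \mu_2)]^2 \\
&= a^2 \sigma_{11} + b^2 \sigma_{22} + 2ab\,\sigma_{12} \\
&= \begin{bmatrix} a & b \end{bmatrix}
   \begin{bmatrix} \sigma_{11} & \sigma_{12} \\ \sigma_{12} & \sigma_{22} \end{bmatrix}
   \begin{bmatrix} a \\ b \end{bmatrix}
 = c'\Sigma c
\end{aligned}
$$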
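The 2-D identity also holds exactly for sample quantities, which gives a quick numerical check. In the sketch below the data values and the coefficients a = 2, b = -1 are arbitrary illustrations:

```python
def sample_cov(u, v):
    """Unbiased sample covariance of two equal-length lists."""
    n = len(u)
    mu, mv = sum(u) / n, sum(v) / n
    return sum((a - mu) * (b - mv) for a, b in zip(u, v)) / (n - 1)


def check_linear_combination(a, b, x1, x2):
    """Return (variance of a*x1 + b*x2 computed directly,
    a^2 s11 + b^2 s22 + 2ab s12 computed from the covariance entries)."""
    combo = [a * u + b * v for u, v in zip(x1, x2)]
    direct = sample_cov(combo, combo)
    s11, s22, s12 = sample_cov(x1, x1), sample_cov(x2, x2), sample_cov(x1, x2)
    quadratic = a * a * s11 + b * b * s22 + 2 * a * b * s12
    return direct, quadratic


x1 = [1.0, 2.0, 3.0, 4.0]
x2 = [2.0, 1.0, 4.0, 3.0]
print(check_linear_combination(2.0, -1.0, x1, x2))  # the two values agree
```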

23

Reminder of some results for random vectors

A “new” random vector generated from linear combinations of a random vector:
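The standard result for such a transformation generalizes the 2-D case $\mathrm{Var}(c'X) = c'\Sigma c$. In the sketch below, the names $Z$ for the new vector and $C$ for the matrix of coefficients are assumed for illustration:

```latex
Z = CX, \qquad
\mu_Z = E(Z) = C\mu_X, \qquad
\Sigma_Z = \mathrm{Cov}(Z) = C \Sigma_X C'
```

Each row of $C$ defines one linear combination, so the diagonal entries of $C\Sigma_X C'$ are the variances of those combinations and the off-diagonal entries are their covariances.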

24

Reminder of some results for random vectors

Let A be a $k \times k$ symmetric matrix. Then it has k pairs of eigenvalues and eigenvectors, and A can be decomposed as:

$$A = \lambda_1 e_1 e_1' + \lambda_2 e_2 e_2' + \cdots + \lambda_k e_k e_k' = P \Lambda P'$$

Positive-definite matrix:

$$x'Ax > 0, \quad \forall x \neq 0$$

$$\lambda_1 \geq \lambda_2 \geq \cdots \geq \lambda_k > 0$$

Note: $x'Ax = \lambda_1 (x'e_1)^2 + \cdots + \lambda_k (x'e_k)^2$

Reminder of some results for random vectors

Inverse of a positive-definite matrix:

$$A^{-1} = P \Lambda^{-1} P' = \sum_{i=1}^{k} \frac{1}{\lambda_i} e_i e_i'$$

Square root matrix of a positive-definite matrix:

$$A^{1/2} = P \Lambda^{1/2} P' = \sum_{i=1}^{k} \sqrt{\lambda_i}\, e_i e_i'$$
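The spectral decomposition, the inverse, and the square root all have the same shape: a sum of $g(\lambda_i)\, e_i e_i'$ for a suitable function $g$. The sketch below shows this for the 2×2 symmetric case only, using the closed-form eigenvalues. The function names are illustrative, and a general k×k matrix would need a numerical eigen-solver instead.

```python
import math


def eigen_sym_2x2(A):
    """Eigenpairs (lambda, unit eigenvector) of a symmetric 2x2 matrix,
    sorted so that lambda_1 >= lambda_2."""
    (a, b), (_, d) = A
    if b == 0.0:
        pairs = [(a, (1.0, 0.0)), (d, (0.0, 1.0))]
    else:
        mid = (a + d) / 2.0
        rad = math.hypot((a - d) / 2.0, b)
        pairs = []
        for lam in (mid + rad, mid - rad):
            vx, vy = lam - d, b  # solves (A - lam I) v = 0 when b != 0
            norm = math.hypot(vx, vy)
            pairs.append((lam, (vx / norm, vy / norm)))
    return sorted(pairs, key=lambda t: t[0], reverse=True)


def spectral_sum(pairs, g):
    """Return sum_i g(lambda_i) e_i e_i' as a 2x2 nested list."""
    out = [[0.0, 0.0], [0.0, 0.0]]
    for lam, e in pairs:
        for i in range(2):
            for j in range(2):
                out[i][j] += g(lam) * e[i] * e[j]
    return out


A = [[2.0, 1.0], [1.0, 2.0]]
pairs = eigen_sym_2x2(A)
print(spectral_sum(pairs, lambda lam: lam))        # rebuilds A
print(spectral_sum(pairs, lambda lam: 1.0 / lam))  # A^{-1}
print(spectral_sum(pairs, math.sqrt))              # A^{1/2}
```

The inverse and square root are only defined this way when every eigenvalue is positive, which is exactly the positive-definite condition.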

Reminder of some results for random vectors

27

Reminder of some results for random vectors

Proof of the first (and second) point of the previous slide.

28

Reminder of some results for random vectors

With a sample of the random vector:

29

Reminder of some results for random vectors

To estimate the mean vector and covariance matrix:

$$\hat\mu = \bar X$$

$$\hat\Sigma = S = \frac{1}{n-1} \sum_{i=1}^{n} (X_i - \bar X)(X_i - \bar X)'$$
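These two estimators translate directly into code. A minimal plain-Python sketch, with each observation stored as a list of p values (the function names and the toy data are illustrative):

```python
def mean_vector(rows):
    """Sample mean vector X-bar of n observations with p values each."""
    n, p = len(rows), len(rows[0])
    return [sum(r[j] for r in rows) / n for j in range(p)]


def sample_covariance(rows):
    """S = 1/(n-1) * sum_i (X_i - X-bar)(X_i - X-bar)'."""
    n, p = len(rows), len(rows[0])
    xbar = mean_vector(rows)
    S = [[0.0] * p for _ in range(p)]
    for r in rows:
        dev = [r[j] - xbar[j] for j in range(p)]
        # Accumulate the outer product of the deviation vector with itself.
        for i in range(p):
            for j in range(p):
                S[i][j] += dev[i] * dev[j]
    return [[S[i][j] / (n - 1) for j in range(p)] for i in range(p)]


rows = [[1.0, 2.0], [2.0, 1.0], [3.0, 4.0], [4.0, 3.0]]
print(mean_vector(rows))
print(sample_covariance(rows))
```

The divisor n - 1 makes S unbiased for Σ. With n < p, as in the N << p setting discussed earlier, S is singular and cannot be inverted directly.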

30
