U3.1-TwoPopulations


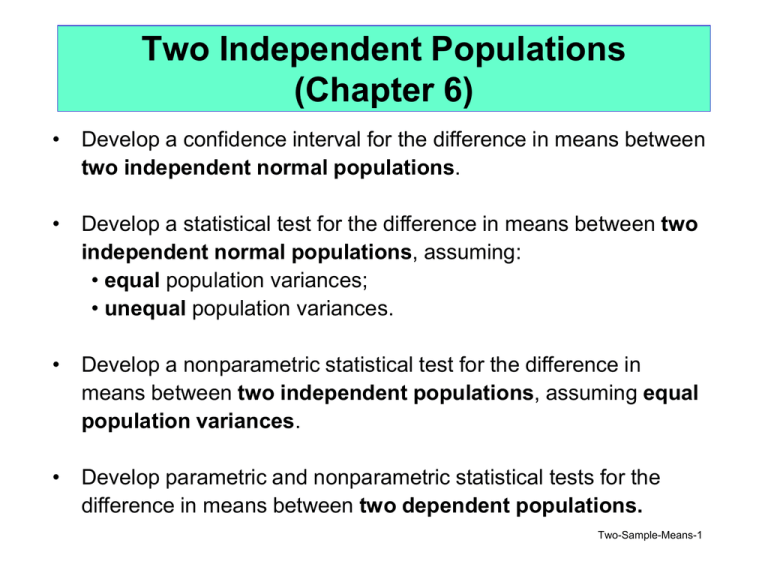

Two Independent Populations (Chapter 6)

• Develop a confidence interval for the difference in means between two independent normal populations.
• Develop a statistical test for the difference in means between two independent normal populations, assuming:
  • equal population variances;
  • unequal population variances.
• Develop a nonparametric statistical test for the difference in means between two independent populations, assuming equal population variances.
• Develop parametric and nonparametric statistical tests for the difference in means between two dependent populations.

Situation

We need to determine whether the concentration of a contaminant at an old industrial site is greater than background levels in the areas surrounding the site. Soil samples are taken at random locations within the site and at random locations in areas outside the site. Values from areas outside the site are assumed to be unaffected by the site activities that led to contamination.

Hypotheses of Interest

1. Background level of contaminant is greater than the no-detect level ($-5.0$) [on a natural log scale].
2. Site level of contaminant is greater than the no-detect level ($-5.0$).
3. Site level is different from Background level.

Is the situation this or this? [Figure: two possible arrangements of $\mu_1$ and $\mu_2$ relative to $-5.0$.]

One-Sample Hypothesis Tests

Background level of contaminant is greater than the no-detect level ($-5.0$).
Site level of contaminant is greater than the no-detect level ($-5.0$).

$H_0: \mu_i \le -5.0$
$H_A: \mu_i > -5.0$

One-sided t-tests, with $\mu_0 = -5.0$:

T.S.: $t_i = \dfrac{\bar{y}_i - \mu_0}{s_i / \sqrt{n_i}}$

R.R.: Reject $H_0$ if $t_i > t_{\alpha, n_i - 1}$

$i = 1$ => Background areas (B)
$i = 2$ => Contamination site (S)

Critical Values

| $n$ | $t_{.05,n-1}$ |
|---|---|
| 7 | 1.943 |
| 8 | 1.895 |
| 9 | 1.860 |
| 10 | 1.833 |

DATA and One-Sided T-tests

Background data $Y$: $-2.96$, $-1.09$, $-3.13$, $-2.12$, $-2.59$, $-4.31$, $-1.20$

$n_1 = 7$, $\bar{y}_1 = -2.48$, $s_1 = 1.13$

$H_0: \mu_B \le \mu_0 = -5.0$
$H_A: \mu_B > \mu_0 = -5.0$

Pr(Type I error) $= \alpha = 0.05$

T.S.: $t = \dfrac{\bar{y}_1 - \mu_0}{s_1/\sqrt{n_1}} = \dfrac{-2.48 - (-5.0)}{1.13/\sqrt{7}} = 5.90$

R.R.: Reject $H_0$ if $t > t_{\alpha,n-1}$, where $t_{0.05,6} = 1.943$.

Conclusion: Since $5.90 > 1.943$ we reject $H_0$ and conclude that the true average background level is above $-5.0$.

The same test could be performed for the contaminated site data.

Comparison Hypothesis

$H_0$: Site level is not different from Background level. ($\mu_B = \mu_S$)
$H_A$: Site level is different from Background level. ($\mu_B \ne \mu_S$)

This requires comparing sample means from two "independent" samples, one from each population.

T.S.: $t = \dfrac{\bar{y}_1 - \bar{y}_2}{s_{\bar{y}_1 - \bar{y}_2}}$

This is the obvious test statistic; $s_{\bar{y}_1 - \bar{y}_2}$ is the standard error of the difference of the two means.

Standard Error of the Difference of Two Means

If the true variances of the two populations are known, we use the property of independent random variables that says: $\mathrm{Var}(X - Y) = \mathrm{Var}(X) + \mathrm{Var}(Y)$.

$$\sigma^2_{\bar{y}_1 - \bar{y}_2} = \mathrm{Var}(\bar{y}_1) + \mathrm{Var}(\bar{y}_2) = \frac{\sigma_1^2}{n_1} + \frac{\sigma_2^2}{n_2}$$

The first term comes from the sampling distribution of $\bar{Y}_1$ and the second from the sampling distribution of $\bar{Y}_2$.

T.S.: $z = \dfrac{\bar{y}_1 - \bar{y}_2}{\sigma_{\bar{y}_1 - \bar{y}_2}}$, with $\sigma_{\bar{y}_1 - \bar{y}_2} = \sqrt{\dfrac{\sigma_1^2}{n_1} + \dfrac{\sigma_2^2}{n_2}}$

Assume the two populations have the same, or nearly the same, true variance $\sigma_p^2$.

True standard error of the difference of two means: $\sigma_{\bar{y}_1 - \bar{y}_2} = \sigma_p \sqrt{\dfrac{1}{n_1} + \dfrac{1}{n_2}}$

Estimate of the standard error of the mean difference: $s_{\bar{y}_1 - \bar{y}_2} = s_p \sqrt{\dfrac{1}{n_1} + \dfrac{1}{n_2}}$

Estimate of the common (pooled) standard deviation: $s_p = \sqrt{\dfrac{(n_1 - 1)s_1^2 + (n_2 - 1)s_2^2}{n_1 + n_2 - 2}}$

Confidence Interval for difference

Assume a confidence level of $(1-\alpha)100\%$:

$$\bar{y}_1 - \bar{y}_2 \pm t_{\alpha/2,\, n_1 + n_2 - 2}\; s_p \sqrt{\frac{1}{n_1} + \frac{1}{n_2}}, \qquad s_p = \sqrt{\frac{(n_1 - 1)s_1^2 + (n_2 - 1)s_2^2}{n_1 + n_2 - 2}}$$

Statistical test for difference of means: Pooled Variances T-test

Pr(Type I Error) $= \alpha$

$H_0: \mu_1 - \mu_2 = D_0$
$H_A: \mu_1 - \mu_2 > D_0$, reject $H_0$ if $t \ge t_{\alpha,df}$
$H_A: \mu_1 - \mu_2 < D_0$, reject $H_0$ if $t \le -t_{\alpha,df}$
$H_A: \mu_1 - \mu_2 \ne D_0$, reject $H_0$ if $|t| \ge t_{\alpha/2,df}$

Test Statistic: $t = \dfrac{\bar{y}_1 - \bar{y}_2 - D_0}{s_p \sqrt{\dfrac{1}{n_1} + \dfrac{1}{n_2}}}$, with $df = n_1 + n_2 - 2$ and $s_p$ as defined above.

Example (continued)

| | Background ($Y_B$) | Site ($Y_S$) |
|---|---|---|
| | $-2.96$ | $-3.81$ |
| | $-1.09$ | $-5.83$ |
| | $-3.13$ | $-5.70$ |
| | $-2.12$ | $-4.11$ |
| | $-2.59$ | $-3.83$ |
| | $-4.31$ | $-5.01$ |
| | $-1.20$ | $-5.49$ |
| $n$ | 7 | 7 |
| $\bar{y}$ | $-2.48$ | $-4.83$ |
| $s$ | 1.13 | 0.89 |

$H_0: \mu_B - \mu_S = 0$
$H_A: \mu_B - \mu_S \ne 0$ (average site level significantly different from background)
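As a cross-check on the pooled-variance test, here is a minimal Python sketch (standard library only) applied to the background and site readings listed above. The function name `pooled_t` and the variable names are illustrative choices, not part of the slides; the critical value 2.179 is the tabled $t_{.025,12}$ and is hard-coded rather than computed.

```python
import math
from statistics import mean, stdev

# Log-scale contaminant readings copied from the slides.
background = [-2.96, -1.09, -3.13, -2.12, -2.59, -4.31, -1.20]
site = [-3.81, -5.83, -5.70, -4.11, -3.83, -5.01, -5.49]


def pooled_t(y1, y2, d0=0.0):
    """Return (t, df, s_p) for the pooled-variance two-sample t-test."""
    n1, n2 = len(y1), len(y2)
    s1, s2 = stdev(y1), stdev(y2)  # sample SDs (n - 1 divisor)
    # Pooled standard deviation: weighted average of the two variances.
    sp = math.sqrt(((n1 - 1) * s1**2 + (n2 - 1) * s2**2) / (n1 + n2 - 2))
    # Estimated standard error of the difference of the two means.
    se = sp * math.sqrt(1 / n1 + 1 / n2)
    t = (mean(y1) - mean(y2) - d0) / se
    return t, n1 + n2 - 2, sp


t_stat, df, sp = pooled_t(background, site)
T_CRIT = 2.179  # tabled t_{0.025,12}; hard-coded assumption, not computed
print(f"s_p = {sp:.3f}, t = {t_stat:.3f}, df = {df}")
print("reject H0" if abs(t_stat) >= T_CRIT else "do not reject H0")
```

Because the sketch works from the unrounded data, its results can differ in the second decimal place from hand calculations that start from rounded summary statistics.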
$$s_p = \sqrt{\frac{(n_1 - 1)s_1^2 + (n_2 - 1)s_2^2}{n_1 + n_2 - 2}} = \sqrt{\frac{(6)1.13^2 + (6)0.89^2}{7 + 7 - 2}} = 1.017$$

Pr(Type I Error) $= \alpha = 0.05$

$s_p = 1.017$

$s_{\bar{y}_1 - \bar{y}_2} = s_p \sqrt{\dfrac{1}{n_1} + \dfrac{1}{n_2}} = 1.017 \sqrt{\dfrac{1}{7} + \dfrac{1}{7}} = 0.5436$

T.S.: $t = \dfrac{\bar{y}_1 - \bar{y}_2 - D_0}{s_p \sqrt{\dfrac{1}{n_1} + \dfrac{1}{n_2}}} = \dfrac{2.34 - 0}{0.5436} = 4.305$, where 2.34 is the difference of the two sample means before rounding.

R.R.: Reject if $|t| \ge t_{\alpha/2,df} = t_{.025,12} = 2.179$

Conclusion: Since $4.305 > 2.179$ we reject $H_0$ and conclude that site concentration levels are significantly different from background.

What if the two populations do not have the same variance?

Separate Variances CI and T-test

• New estimate of the standard error of the difference.
• The test and CI are no longer exact; they use Satterthwaite's approximate df value ($df'$).

C.I.: $(\bar{y}_1 - \bar{y}_2) \pm t_{\alpha/2,df'} \sqrt{\dfrac{s_1^2}{n_1} + \dfrac{s_2^2}{n_2}}$

T.S.: $t' = \dfrac{\bar{y}_1 - \bar{y}_2 - D_0}{\sqrt{\dfrac{s_1^2}{n_1} + \dfrac{s_2^2}{n_2}}}$

$$df' = \frac{(n_1 - 1)(n_2 - 1)}{(n_2 - 1)c^2 + (n_1 - 1)(1 - c)^2}, \qquad c = \frac{s_1^2/n_1}{s_1^2/n_1 + s_2^2/n_2}$$

Round $df'$ down to the nearest integer.

For the site contamination example, assume $\sigma_1 \ne \sigma_2$ and redo the test.

$$c = \frac{s_1^2/n_1}{s_1^2/n_1 + s_2^2/n_2} = \frac{1.276/7}{1.276/7 + 0.7921/7} = 0.6171$$

$$df' = \frac{(n_1 - 1)(n_2 - 1)}{(n_2 - 1)c^2 + (n_1 - 1)(1 - c)^2} = \frac{(6)(6)}{(6)0.6171^2 + (6)(1 - 0.6171)^2} = 11.4$$

T.S.: $t' = \dfrac{\bar{y}_1 - \bar{y}_2 - D_0}{\sqrt{\dfrac{s_1^2}{n_1} + \dfrac{s_2^2}{n_2}}} = \dfrac{2.34}{\sqrt{\dfrac{1.13^2}{7} + \dfrac{0.89^2}{7}}} = \dfrac{2.34}{0.5437} = 4.304$

R.R.: Reject if $|t'| \ge t_{\alpha/2,df'} = t_{.025,11} = 2.201$

Conclusion: Since $4.304 > 2.201$ we reject $H_0$ and conclude that site concentration levels are significantly different from background.

Sample Size Determination (equal variances)

$(1-\alpha)100\%$ CI for $\mu_1 - \mu_2$:

$$n = \frac{2 z_{\alpha/2}^2 \sigma^2}{E^2}, \qquad E = \text{half the interval width}, \quad n_1 = n_2 = n$$

$\alpha$ = Pr(Type I Error), $\beta$ = Pr(Type II Error)

One-sided test: $n = \dfrac{2\sigma^2 (z_\alpha + z_\beta)^2}{\Delta^2}$, with $\Delta = |\mu_1 - \mu_2|$ and $n_1 = n_2 = n$

Two-sided test: $n = \dfrac{2\sigma^2 (z_{\alpha/2} + z_\beta)^2}{\Delta^2}$, with $\Delta = |\mu_1 - \mu_2|$ and $n_1 = n_2 = n$

Example of Sample Size Determination

In our sample, a $\Delta$ of 2.34 was observed. What if we had wanted to be sure that a $\Delta$ of, say, 1 unit would be declared significant with:

$\alpha = 0.05$ = Pr(Type I Error)
$\beta = 0.10$ = Pr(Type II Error)

Assume a common population variance of $\sigma^2 = 1$.

One-sided test: $n = \dfrac{2\sigma^2 (z_\alpha + z_\beta)^2}{\Delta^2} = \dfrac{2(1)^2 (z_{0.05} + z_{0.1})^2}{(1)^2} = 2(1.645 + 1.280)^2 = 17.11$, so round up to $n = 18$.

Two-sided test: $n = \dfrac{2\sigma^2 (z_{\alpha/2} + z_\beta)^2}{\Delta^2} = \dfrac{2(1)^2 (z_{0.025} + z_{0.1})^2}{(1)^2} = 2(1.96 + 1.280)^2 \approx 21$

In both cases $n = n_1 = n_2$.

Summary

• These two-sample inferences require the assumption of independent, normal population distributions.
• If we have reason to believe the two population variances are equal, then we should use the pooled variances method. This results in more powerful inferences.
• We need not worry about the normality assumption when both sample sizes are large; by the Central Limit Theorem, all results are still approximately correct.
• What to do when independence does not hold? This is an advanced topic. A partial solution (paired samples) comes in the next lecture.
• What to do when we have small samples and we don't believe the data are normal? Next slide…

The Wilcoxon Rank Sum Test

One of a class of nonparametric tests. These:

• Do not require data to have normal distributions.
• Seek to make inferences about the median, a more appropriate representation of the center of the population for highly skewed and/or very heavy-tailed distributions.

In the Wilcoxon Rank Sum Test:

• Population variances are assumed to be equal.
• Measurements (observations) are assumed to be independent and from continuous distributions.
• Interest is in whether the centers of the two population distributions are the same or not.
• Also known as the Mann-Whitney U Test.
• 1-sample equivalent: Wilcoxon Signed Rank Test.

Idea behind the Wilcoxon Test

$H_0$: Populations are identical.

Put the data from the two samples together and sort them from lowest to highest, i.e. rank them (lowest obs gets rank = 1, 2nd gets rank = 2, etc.; tied obs get the average of their ranks). If $H_0$ is true, the ranks of the observations from the two samples should be fairly well intermingled. Thus, the sum of the ranks from population 1 observations should be approximately equal to the sum of the ranks of population 2 observations.

$H_A$: Population 1 is shifted to the right of population 2.
If $H_A$ is true and we put the data from the two samples together and sort them from lowest to highest, the sum of the ranks of population 1 observations should be greater than the sum of the ranks of observations from population 2.

Definition of Population 1

NOTE: Population 1 should be taken to be the one corresponding to the smaller sample size ($n_1 \le n_2$). If the sample sizes are equal ($n_1 = n_2$), either one can be Population 1. This is so that the Tables in Ott will give correct critical values. (Ott inadvertently does not mention this!)

Wilcoxon Test Statistic

$H_0$: Populations are identical.
$H_A$:
1. Population 1 is shifted to the right of population 2.
2. Population 1 is shifted to the left of population 2.
3. Populations 1 and 2 have different location parameters.

Situation #1

T.S.: Let $T$ denote the sum of the ranks of population 1 observations. If $n_1 \le 10$ and $n_2 \le 10$, use $T$ as the test statistic and Table 5 in Ott & Longnecker for critical values.

R.R.:
1. Reject $H_0$ if $T > T_U$
2. Reject $H_0$ if $T < T_L$
3. Reject $H_0$ if $T > T_U$ or $T < T_L$

Situation #2

If $n_1 > 10$ and $n_2 > 10$ we use a normal approximation to the distribution of the sum of the ranks. Let $T$ denote the sum of the ranks of population 1 observations.

T.S.: $z = \dfrac{T - \mu_T}{\sigma_T}$, where

$$\mu_T = \frac{n_1(n_1 + n_2 + 1)}{2}, \qquad \sigma_T^2 = \frac{n_1 n_2}{12}\left[(n_1 + n_2 + 1) - \frac{\sum_{j=1}^{k} t_j(t_j^2 - 1)}{(n_1 + n_2)(n_1 + n_2 - 1)}\right]$$

and $t_j$ denotes the number of tied ranks in the $j$th group of ties, $j = 1, \ldots, k$.

R.R. ($\alpha$ = Pr(Type I Error)):
1. Reject $H_0$ if $z > z_\alpha$
2. Reject $H_0$ if $z < -z_\alpha$
3. Reject $H_0$ if $|z| > z_{\alpha/2}$

Example, Situation #1: $n_1 \le 10$ and $n_2 \le 10$

| Group | Value | Rank |
|---|---|---|
| 1 | $-1.09$ | 14 |
| 1 | $-1.20$ | 13 |
| 1 | $-2.12$ | 12 |
| 1 | $-2.59$ | 11 |
| 1 | $-2.96$ | 10 |
| 1 | $-3.13$ | 9 |
| 2 | $-3.81$ | 8 |
| 2 | $-3.83$ | 7 |
| 2 | $-4.10$ | 6 |
| 1 | $-4.31$ | 5 |
| 2 | $-5.01$ | 4 |
| 2 | $-5.41$ | 2.5 |
| 2 | $-5.41$ | 2.5 |
| 2 | $-5.83$ | 1 |

Sum of Pop 1 ranks: $14 + 13 + 12 + 11 + 10 + 9 + 5 = 74 = T$

Two-sided alternative hypothesis.

R.R.: Reject $H_0$ if $T > T_U$ or $T < T_L$. From Table 5 with $n_1 = n_2 = 7$: $T_L = 37$, $T_U = 68$.

Conclusion: Since $T = 74 > T_U = 68$, reject $H_0$.

Table 5: Wilcoxon Critical Values [table not reproduced; see Ott & Longnecker].

Confidence Interval for $\Delta = \mu_1 - \mu_2$

Can be constructed in a way analogous to the nonparametric CI for $\mu$ based on the Sign Test. (See §6.3 in Ott.)

Paired Data Situation (§6.4-6.5)

In this set of slides we will:

• Develop confidence intervals and tests for the difference between two means that have been measured on the same or highly related experimental units.
• Assume that the underlying population of differences is normally distributed.
• Discuss a nonparametric alternative that does not rely on normality (§6.5).

Situation

Two analysts, supposedly of identical abilities, each measure the parts per million of a certain type of chemical impurity in drinking water. It is claimed that analyst 1 tends to give higher readings than analyst 2. To test this theory, each of six water samples is divided and then analyzed by both analysts separately. The data are as follows:

| Water Sample | Analyst 1 | Analyst 2 | Row mean | Difference |
|---|---|---|---|---|
| 1 | 31.4 | 28.1 | 29.8 | 3.3 |
| 2 | 37.0 | 37.1 | 37.1 | $-0.1$ |
| 3 | 44.0 | 40.6 | 42.3 | 3.4 |
| 4 | 28.8 | 27.3 | 28.1 | 1.5 |
| 5 | 59.9 | 58.4 | 59.2 | 1.5 |
| 6 | 37.6 | 38.9 | 38.3 | $-1.3$ |
| Column mean | 39.8 | 38.4 | | 1.4 |
| Standard deviation | 11.19 | 11.28 | | 1.85 |

The data are paired, hence the observations are not independent. Observations in the same row are more likely to be close to each other than are observations in different rows.

Paired Data Considerations

Because of the dependence within rows we don't use the difference of two means but instead use the mean of the individual differences.

$H_0: \mu_1 - \mu_2 = D_0$ is written as $H_0: \mu_d = D_0$.

Let $y_{ij}$ represent the $i$th observation for the $j$th sample, $i = 1, \ldots, n$, $j = 1, 2$. Compute $d_i = y_{i1} - y_{i2}$, the difference in responses for the $i$th observation, and then proceed as in the one-sample t-test situation.

$\bar{d} = \dfrac{1}{n} \sum_{i=1}^{n} d_i$, the sample mean of the differences.

$s_d = \sqrt{\dfrac{1}{n-1} \sum_{i=1}^{n} (d_i - \bar{d})^2}$, the sample standard deviation of the differences.

Importance of Independence/Dependence on Variance of Difference

If the two populations were independent, the variance of the difference would be computed using the probability rule:

$\mathrm{Var}(X - Y) = \mathrm{Var}(X) + \mathrm{Var}(Y)$

But here the two populations are dependent and the above rule does not hold, i.e. we don't use a pooled variance estimator. Instead of $\dfrac{s_1^2}{n} + \dfrac{s_2^2}{n}$ we use $\dfrac{s_d^2}{n}$, the estimated variance of the mean of the differences.

In the above example, with $n = 6$:

$$\frac{s_d}{\sqrt{n}} = \frac{1.85}{\sqrt{6}} = 0.76 \qquad \text{versus} \qquad \sqrt{\frac{1}{n}(s_1^2 + s_2^2)} = \sqrt{\frac{1}{6}(11.19^2 + 11.28^2)} = 6.49$$

Very different!

The paired t-test

$H_0: \mu_d = D_0$
$H_a$:
1. $\mu_d > D_0$
2. $\mu_d < D_0$
3. $\mu_d \ne D_0$

Test Statistic: $t = \dfrac{\bar{d} - D_0}{s_d / \sqrt{n}}$

Rejection Region:
1. Reject $H_0$ if $t > t_{\alpha,n-1}$
2. Reject $H_0$ if $t < -t_{\alpha,n-1}$
3. Reject $H_0$ if $|t| > t_{\alpha/2,n-1}$

$(1-\alpha)100\%$ confidence interval: $\bar{d} \pm t_{\alpha/2,n-1} \dfrac{s_d}{\sqrt{n}}$

For the example, $t = 1.853$ and $t_{0.05,5} = 2.015$, hence we do not reject $H_0: \mu_d = 0$ in testing situation 1, and conclude that there are no significant differences.

95% CI: $1.4 \pm 1.94$, or $(-0.54, 3.34)$

Wilcoxon Signed-Rank Test for Paired Data (§6.5)

A nonparametric alternative to the paired t-test when the population distribution of the differences is not normal. (Requires symmetry about the population median of the differences, $M$.)

Test Construction:
1. Compute the differences in the pairs of observations.
2. Let $D_0$ be the hypothesized value of $M$, and subtract $D_0$ from all the differences.
3. Delete all zero values, and let $n$ be the resulting number of nonzero values.
4. List the absolute values in increasing order, and assign them ranks $1, \ldots, n$ (or the average of ranks for ties).

$T_+$ = sum of the positive ranks ($T_+ = 0$ if no positive ranks).
$T_-$ = sum of the negative ranks ($T_- = 0$ if no negative ranks).

Situation 1: Small Sample ($n \le 50$)

$H_0$: $M = D_0$ (usually 0).
$H_A$:
1. $M > D_0$.
2. $M < D_0$.
3. $M \ne D_0$.

T.S.:
1. $T = T_-$
2. $T = T_+$
3. $T = \text{smaller}(T_-, T_+)$

R.R. (critical values from Table 6):
1. Reject if $T \le T_{\alpha,n}$
2. Reject if $T \le T_{\alpha,n}$
3. Reject if $T \le T_{\alpha/2,n}$

Situation 2: Large Sample ($n > 50$)

$H_0$: $M = D_0$ (usually 0).
$H_A$:
1. $M > D_0$.
2. $M < D_0$.
3. $M \ne D_0$.

T.S.: $z = \dfrac{T - \mu_T}{\sigma_T}$, where

$$\mu_T = \frac{n(n+1)}{4}, \qquad \sigma_T = \sqrt{\frac{1}{24}\left[n(n+1)(2n+1) - \frac{1}{2}\sum_{j=1}^{g} t_j(t_j - 1)(t_j + 1)\right]}$$

$g$ = number of distinct ranks assigned to the differences. (If no ties, $g = n$.)
$t_j$ = number of tied ranks in the $j$th group. (If no ties, $t_j = 1$ for $j = 1, \ldots, g$, and the summation term is zero.)

R.R. (critical values from Table 1):
1. Reject $H_0$ if $z > z_\alpha$
2. Reject $H_0$ if $z < -z_\alpha$
3. Reject $H_0$ if $|z| > z_{\alpha/2}$

Back to the Problem

| Difference | Rank (sign) |
|---|---|
| $-0.1$ | 1 (−) |
| $-1.3$ | 2 (−) |
| 1.5 | 3.5 (+) |
| 1.5 | 3.5 (+) |
| 3.3 | 5 (+) |
| 3.4 | 6 (+) |

$n = 6$, $T_+ = 18$, $T_- = 3$, $T = \min(T_+, T_-) = 3$

For $H_A$: $M > 0$ (1st case):
• the test statistic is $T_- = 3$;
• the critical value is $T_{0.05,6} = 2$.

Since $3 > 2$, we do not reject $H_0$: there is insufficient evidence that analyst 1 tends to give higher readings than analyst 2.

Illustration of the calculations supposing the problem were of a large-sample nature (not the case here), using the same ranked differences:

$$\mu_T = \frac{n(n+1)}{4} = \frac{6(7)}{4} = 10.5$$

$$\sigma_T^2 = \frac{1}{24}\left[6(7)(2 \cdot 6 + 1) - \frac{1}{2}\, 2(2 - 1)(2 + 1)\right] = \frac{543}{24} = 22.6, \qquad \sigma_T = 4.8$$

For $H_A$: $M > 0$, the test statistic is $z = (T_+ - \mu_T)/\sigma_T = (18 - 10.5)/4.8 = 1.6$.

Since $z = 1.6 < z_{0.05} = 1.645$, we do not reject $H_0$.

Concluding Comments

The paired measurements (samples) case can be extended to more than two measurements; this is called repeated measurements. When repeated measurements are taken on an individual, we are in the same situation as with two paired samples: the repeated measurements on an individual are expected to be more correlated than measurements among individuals. Solutions to the repeated measurements case cannot follow the simple solution for two dependent samples.
The final solution involves specifying not only what we expect to happen in the means of the sampling "times" but also the structure of the correlations between sampling times.

It is advantageous to design a paired data experiment rather than an independent samples one. This helps to eliminate the confounding effect (masking of treatment differences) that sources of variation other than the treatments have on the experimental units.
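To make the paired-data procedures concrete, the following is a minimal Python sketch (standard library only) that recomputes the paired t statistic and the signed-rank sums $T_+$ and $T_-$ for the two-analyst data. The helper name `signed_rank_sums` is hypothetical, and the critical values still have to be looked up in the tables; nothing here is taken from a statistics package.

```python
import math
from statistics import mean, stdev

# Impurity readings (ppm) for the six split water samples, from the slides.
analyst1 = [31.4, 37.0, 44.0, 28.8, 59.9, 37.6]
analyst2 = [28.1, 37.1, 40.6, 27.3, 58.4, 38.9]

# Differences d_i = y_i1 - y_i2, rounded to one decimal so that tied
# differences (the two 1.5 values) compare equal despite float error.
d = [round(a - b, 1) for a, b in zip(analyst1, analyst2)]

# Paired t statistic for H0: mu_d = 0.
n = len(d)
t_paired = mean(d) / (stdev(d) / math.sqrt(n))


def signed_rank_sums(diffs, d0=0.0):
    """Return (T_plus, T_minus) for the Wilcoxon signed-rank test."""
    shifted = [x - d0 for x in diffs]
    # Drop zeros, then order the rest by absolute value.
    nonzero = sorted((v for v in shifted if v != 0), key=abs)
    ranks = [0.0] * len(nonzero)
    i = 0
    while i < len(nonzero):
        j = i
        # Extend j over the run of tied absolute values.
        while j + 1 < len(nonzero) and abs(nonzero[j + 1]) == abs(nonzero[i]):
            j += 1
        avg = (i + 1 + j + 1) / 2  # average of ranks i+1 .. j+1
        for k in range(i, j + 1):
            ranks[k] = avg
        i = j + 1
    t_plus = sum(r for v, r in zip(nonzero, ranks) if v > 0)
    t_minus = sum(r for v, r in zip(nonzero, ranks) if v < 0)
    return t_plus, t_minus


t_plus, t_minus = signed_rank_sums(d)
print(f"paired t = {t_paired:.3f} on {n - 1} df")
print(f"T+ = {t_plus}, T- = {t_minus}")
```

The sketch uses the unrounded mean and standard deviation of the differences, so the t statistic can differ slightly from a hand calculation based on the rounded values 1.4 and 1.85.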